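Abstract

With the rapid development of artificial intelligence, AI applications have become an important part of modern software development. Building and deploying a complex AI system, however, brings many challenges: complicated environment setup, hard-to-manage service dependencies, and difficult resource scheduling. Docker Compose, a lightweight container orchestration tool, offers an effective way to address these problems. This article walks through building a complete AI application system with Docker Compose, covering environment setup, service configuration, troubleshooting, and performance tuning. Through hands-on examples and code, it aims to help developers in China, especially AI application developers, quickly learn these skills and build stable, efficient AI systems.

Main Text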

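1. Introduction

AI applications are becoming increasingly common in software development. From intelligent customer service to content recommendation, and from image recognition to natural language processing, AI is profoundly changing how we live and work. Building and deploying an AI system is not easy, however: it usually involves several service components, complex dependencies, and strict resource requirements.

Docker Compose is a popular container orchestration tool. It defines and runs multi-container Docker applications from a single YAML file, which makes deploying AI applications much easier. Developers can split a complex AI system into independent service components and then deploy, scale, and manage them with a few simple commands.

This article uses a complete hands-on example to show how to build, with Docker Compose, an AI application consisting of an API service, a Worker service, a Playwright service, and a Redis cache. It starts with environment setup and then covers service configuration, troubleshooting, and performance tuning, with code examples and best-practice recommendations along the way.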

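2. Environment Setup

2.1 System Requirements

Before building the AI application, make sure the system meets these minimum requirements:

- Operating system: Linux (Ubuntu 20.04+ recommended), macOS, or Windows (WSL2)

- Memory: at least 8 GB RAM (16 GB or more recommended)

- Storage: at least 20 GB of free disk space

- Docker: version 19.03 or later

- Docker Compose: version 1.27 or later (Compose v2 is recommended; the installation steps below use v2.20.2)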
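A quick way to confirm these minimum versions is to compare the numbers numerically rather than as strings. As text, "19.10" and "19.03" happen to sort correctly, but "1.9" sorts after "1.27". The sketch below is a hypothetical helper, not part of the article's tooling. It pulls the first dotted version out of `docker --version` / `docker-compose --version` output.

```python
import re
import subprocess
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the first dotted version number, e.g. from
    'Docker version 24.0.5, build ced0996' or 'Docker Compose version v2.20.2'."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if not match:
        raise ValueError(f"no version number found in: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version_output: str, minimum: str) -> bool:
    """Numeric comparison, so 19.10 > 19.03 and 1.29 > 1.27."""
    current = parse_version(version_output)
    required = parse_version(minimum)
    # Pad the shorter tuple with zeros so (2, 20) compares like (2, 20, 0)
    width = max(len(current), len(required))
    current += (0,) * (width - len(current))
    required += (0,) * (width - len(required))
    return current >= required


if __name__ == "__main__":
    for cmd, minimum in ((["docker", "--version"], "19.03"),
                         (["docker-compose", "--version"], "1.27")):
        try:
            out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
        except (OSError, subprocess.CalledProcessError) as exc:
            print(f"{cmd[0]}: not available ({exc})")
            continue
        status = "OK" if meets_minimum(out, minimum) else f"older than {minimum}"
        print(f"{cmd[0]}: {out.strip()} -> {status}")
```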

2.2 Installing Docker and Docker Compose

The installation steps on Ubuntu are as follows:

```bash
# Update the package index
sudo apt-get update

# Install prerequisite packages
sudo apt-get install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

# Add Docker's official GPG key
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Set up the stable repository (uses the host architecture instead of hard-coding amd64)
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine
sudo apt-get update
sudo apt-get install -y docker-ce docker-ce-cli containerd.io

# Verify the Docker installation
sudo docker run hello-world

# Install Docker Compose (Compose v2 release assets use a lowercase "linux" in their names)
sudo curl -L "https://github.com/docker/compose/releases/download/v2.20.2/docker-compose-linux-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

# Verify the Docker Compose installation
docker-compose --version
```

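2.3 Creating the Project Directory Structure

Create a project directory, then create the files and subdirectories it needs:

```bash
# Create the project directory
mkdir ai-application
cd ai-application

# Create the project structure
mkdir -p src config data logs scripts
touch docker-compose.yml .env README.md

# Create the Python source directories
mkdir -p src/api src/worker src/utils
```

Compose derives the default project name from the directory name. The project is therefore called `ai-application`, which is the name the scripts in sections 5 and 6 expect.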
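For a scriptable or cross-platform setup (for example on Windows without WSL), the same skeleton can be created with `pathlib`. This is a minimal sketch that mirrors the shell commands above. `create_project` is a hypothetical helper name.

```python
from pathlib import Path

# Directory and file layout from the shell commands in section 2.3
DIRECTORIES = ["src/api", "src/worker", "src/utils", "config", "data", "logs", "scripts"]
FILES = ["docker-compose.yml", ".env", "README.md"]


def create_project(root: str) -> Path:
    """Create the project skeleton; safe to re-run because existing paths are kept."""
    base = Path(root)
    for directory in DIRECTORIES:
        # parents=True mirrors `mkdir -p`; exist_ok=True makes the call idempotent
        (base / directory).mkdir(parents=True, exist_ok=True)
    for name in FILES:
        # touch() creates the file if missing and leaves existing content untouched
        (base / name).touch()
    return base
```

Calling `create_project("ai-application")` from the parent directory produces the same tree as the `mkdir`/`touch` commands.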

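3. System Architecture Design

3.1 Overall Architecture

The AI application uses a microservice architecture with the following core components:

- API service: exposes RESTful endpoints and handles client requests

- Worker service: runs background jobs such as data processing and model inference

- Playwright service: automates a browser to handle complex web-page interactions

- Redis service: acts as the cache and task queue that coordinates work between the services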
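The API service does not scrape pages itself. It puts a job on a queue and returns, and a Worker picks the job up, calls Playwright, and stores the result. The sketch below illustrates that division of labor with the standard library only. `queue.Queue` and a dict stand in for Redis, and `fake_scrape` stands in for the Playwright call. All names are hypothetical, and this is not how Firecrawl implements its queue.

```python
import queue
import threading
import uuid
from typing import Dict

# In-process stand-ins for Redis: a job queue and a result store (hypothetical)
job_queue: queue.Queue = queue.Queue()
results: Dict[str, str] = {}
results_lock = threading.Lock()


def api_submit(url: str) -> str:
    """API role: enqueue a job and return its id immediately."""
    job_id = str(uuid.uuid4())
    job_queue.put({"id": job_id, "url": url})
    return job_id


def fake_scrape(url: str) -> str:
    # Placeholder for the HTTP call the worker would make to the Playwright service
    return f"content of {url}"


def worker_loop() -> None:
    """Worker role: block on the queue and store each result."""
    while True:
        job = job_queue.get()
        if job is None:  # sentinel value that stops the worker
            job_queue.task_done()
            break
        content = fake_scrape(job["url"])
        with results_lock:
            results[job["id"]] = content
        job_queue.task_done()


if __name__ == "__main__":
    threading.Thread(target=worker_loop, daemon=True).start()
    ids = [api_submit(u) for u in ("https://example.com", "https://example.org")]
    job_queue.put(None)
    job_queue.join()  # wait until every item, including the sentinel, is processed
    for job_id in ids:
        print(job_id[:8], results[job_id])
```

Because the queue decouples the two roles, the number of Worker replicas can change without touching the API service.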

3.2 System Architecture Diagram

3.3 Service Interaction Flow

4. Service Configuration

4.1 Configuring the Docker Compose File

Create a complete `docker-compose.yml` file that defines all of the service components:

```yaml
# docker-compose.yml
version: '3.8'

# Shared service settings (YAML anchor)
x-common-service: &common-service
  image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/ghcr.io/mendableai/firecrawl:latest
  ulimits:
    nofile:
      soft: 65535
      hard: 65535
  networks:
    - backend
  extra_hosts:
    - "host.docker.internal:host-gateway"
  deploy:
    resources:
      limits:
        memory: 2G
        cpus: '1.0'

# Shared environment variables
x-common-env: &common-env
  REDIS_URL: ${REDIS_URL:-redis://redis:6381}
  REDIS_RATE_LIMIT_URL: ${REDIS_RATE_LIMIT_URL:-redis://redis:6381}
  PLAYWRIGHT_MICROSERVICE_URL: ${PLAYWRIGHT_MICROSERVICE_URL:-http://playwright-service:3000/scrape}
  USE_DB_AUTHENTICATION: ${USE_DB_AUTHENTICATION:-false}
  OPENAI_API_KEY: ${OPENAI_API_KEY}
  LOGGING_LEVEL: ${LOGGING_LEVEL:-INFO}
  PROXY_SERVER: ${PROXY_SERVER}
  PROXY_USERNAME: ${PROXY_USERNAME}
  PROXY_PASSWORD: ${PROXY_PASSWORD}

# Services
services:
  # Playwright service: browser automation
  playwright-service:
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/ghcr.io/mendableai/playwright-service:latest
    environment:
      PORT: 3000
      PROXY_SERVER: ${PROXY_SERVER}
      PROXY_USERNAME: ${PROXY_USERNAME}
      PROXY_PASSWORD: ${PROXY_PASSWORD}
      BLOCK_MEDIA: ${BLOCK_MEDIA:-true}
    networks:
      - backend
    ports:
      - "3000:3000"
    deploy:
      resources:
        limits:
          memory: 2G
          cpus: '1.0'
    shm_size: 2gb
    healthcheck:
      test: ["CMD", "wget", "--quiet", "--tries=1", "--spider", "http://localhost:3000"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s

  # API service: public-facing endpoints
  api:
    <<: *common-service
    environment:
      <<: *common-env
      HOST: "0.0.0.0"
      PORT: ${INTERNAL_PORT:-8083}
      FLY_PROCESS_GROUP: app
      ENV: local
    depends_on:
      redis:
        condition: service_started
      playwright-service:
        condition: service_healthy
    ports:
      - "${PORT:-8083}:${INTERNAL_PORT:-8083}"
    command: ["pnpm", "run", "start:production"]
    # Replaces the entire deploy block inherited from x-common-service
    deploy:
      resources:
        limits:
          memory: 1G
          cpus: '0.5'

  # Worker service: background jobs
  worker:
    <<: *common-service
    environment:
      <<: *common-env
      FLY_PROCESS_GROUP: worker
      ENV: local
      NUM_WORKERS_PER_QUEUE: ${NUM_WORKERS_PER_QUEUE:-2}
    depends_on:
      redis:
        condition: service_started
      playwright-service:
        condition: service_healthy
    command: ["pnpm", "run", "workers"]
    deploy:
      replicas: ${WORKER_REPLICAS:-1}
      resources:
        limits:
          memory: 2G
          cpus: '1.0'

  # Redis service: cache and task queue
  redis:
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/library/redis:7.0.12
    networks:
      - backend
    ports:
      - "6381:6381"
    command: redis-server --bind 0.0.0.0 --port 6381
    volumes:
      - redis-data:/data
    deploy:
      resources:
        limits:
          memory: 512M
          cpus: '0.5'

# Networks
networks:
  backend:
    driver: bridge

# Volumes
volumes:
  redis-data:
    driver: local
```
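`<<: *common-service` is a YAML merge key, and it merges shallowly. Keys written in the service override keys from the anchor as whole values, so an overriding `deploy:` replaces the entire inherited `deploy` mapping. That is why both `api` and `worker` restate `resources` under their own `deploy`. The sketch below mimics that rule with plain dicts; it does not parse YAML, and the values are abbreviated.

```python
from typing import Any, Dict


def yaml_merge(anchor: Dict[str, Any], local: Dict[str, Any]) -> Dict[str, Any]:
    """Mimic the YAML `<<:` merge key: local keys win, and nothing is merged recursively."""
    return {**anchor, **local}


common_service = {
    "image": "firecrawl:latest",
    "networks": ["backend"],
    "deploy": {"resources": {"limits": {"memory": "2G", "cpus": "1.0"}}},
}
common_env = {"REDIS_URL": "redis://redis:6381", "LOGGING_LEVEL": "INFO"}

api = yaml_merge(common_service, {
    # `environment` repeats `<<: *common-env`, so the shared variables survive
    "environment": yaml_merge(common_env, {"FLY_PROCESS_GROUP": "app"}),
    "deploy": {"resources": {"limits": {"memory": "1G", "cpus": "0.5"}}},
})

# Pitfall: overriding deploy with only `replicas` silently drops the inherited limits
worker_like = yaml_merge(common_service, {"deploy": {"replicas": 2}})

if __name__ == "__main__":
    print(api["deploy"])
    print(worker_like["deploy"])
```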

4.2 Configuring Environment Variables

Set the required environment variables in the `.env` file:

```bash
# .env: environment variable configuration

# ===== Required variables =====
NUM_WORKERS_PER_QUEUE=2
WORKER_REPLICAS=1
PORT=8083
INTERNAL_PORT=8083
HOST=0.0.0.0
REDIS_URL=redis://redis:6381
REDIS_RATE_LIMIT_URL=redis://redis:6381
PLAYWRIGHT_MICROSERVICE_URL=http://playwright-service:3000/scrape

# ===== Optional variables =====
USE_DB_AUTHENTICATION=false
LOGGING_LEVEL=INFO
BLOCK_MEDIA=true

# ===== Proxy settings =====
PROXY_SERVER=
PROXY_USERNAME=
PROXY_PASSWORD=

# ===== AI model settings =====
# MODEL_NAME and MODEL_EMBEDDING_NAME reach the containers only if added to x-common-env
OPENAI_API_KEY=
MODEL_NAME=gpt-3.5-turbo
MODEL_EMBEDDING_NAME=text-embedding-ada-002

# ===== Resource limits =====
# These take effect only if docker-compose.yml references them,
# e.g. `memory: ${API_MEMORY_LIMIT:-1G}`; the file above hard-codes its limits
API_MEMORY_LIMIT=1G
API_CPU_LIMIT=0.5
WORKER_MEMORY_LIMIT=2G
WORKER_CPU_LIMIT=1.0
REDIS_MEMORY_LIMIT=512M
REDIS_CPU_LIMIT=0.5
PLAYWRIGHT_MEMORY_LIMIT=2G
PLAYWRIGHT_CPU_LIMIT=1.0
```
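Compose reads `.env` from the project directory and substitutes variables into `docker-compose.yml`. With `${VAR:-default}`, the default is used when `VAR` is unset or empty. With `${VAR-default}`, the default is used only when `VAR` is unset. This is why an empty `PROXY_SERVER=` behaves differently depending on the operator. The sketch below reproduces just that subset of the rules. It deliberately ignores `$$` escaping, `:?` error checks, and quoting, so treat it as an illustration rather than Compose's actual parser.

```python
import re
from typing import Dict

# Matches ${NAME}, ${NAME:-default} and ${NAME-default} (a simplified subset of Compose syntax)
PATTERN = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(?:(:?-)([^}]*))?\}")


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments (no quoting rules)."""
    env: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def interpolate(template: str, env: Dict[str, str]) -> str:
    """Substitute ${...} references in a compose-file string."""
    def replace(match: "re.Match[str]") -> str:
        name, operator, default = match.group(1), match.group(2), match.group(3)
        value = env.get(name)
        if operator == ":-" and not value:     # unset OR empty -> default
            return default
        if operator == "-" and value is None:  # only unset -> default
            return default
        return value or ""
    return PATTERN.sub(replace, template)
```

For example, `interpolate("memory: ${API_MEMORY_LIMIT:-1G}", env)` shows how a resource limit from `.env` would be picked up, if the compose file referenced it.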

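5. Python Management Scripts

To make the AI application easier to manage and monitor, we write a few Python helper scripts. They need the `docker`, `psutil`, and `requests` packages (`pip install docker psutil requests`):

5.1 System Status Monitoring Script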

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
AI application monitoring script.

Monitors the state of an AI application deployed with Docker Compose.
"""

import json
import subprocess
import time
from typing import Dict, List

import docker
import psutil
import requests


class AISystemMonitor:
    """Monitor for the AI application."""

    def __init__(self, project_name: str = "ai-application"):
        """
        Initialize the monitor.

        Args:
            project_name (str): Docker Compose project name
        """
        self.project_name = project_name
        try:
            self.client = docker.from_env()
            print("✅ Docker client initialized")
        except Exception as e:
            print(f"❌ Failed to initialize the Docker client: {e}")
            raise

    def get_system_resources(self) -> Dict:
        """
        Get host resource usage.

        Returns:
            Dict: host CPU, memory and disk usage
        """
        # CPU usage, sampled over one second
        cpu_percent = psutil.cpu_percent(interval=1)
        # Memory usage
        memory = psutil.virtual_memory()
        # Disk usage of the root filesystem
        disk = psutil.disk_usage('/')

        return {
            'cpu_percent': cpu_percent,
            'memory_total_gb': round(memory.total / (1024**3), 2),
            'memory_used_gb': round(memory.used / (1024**3), 2),
            'memory_percent': memory.percent,
            'disk_total_gb': round(disk.total / (1024**3), 2),
            'disk_used_gb': round(disk.used / (1024**3), 2),
            'disk_percent': round((disk.used / disk.total) * 100, 2)
        }

    def get_container_stats(self, container_name: str) -> Dict:
        """
        Get resource statistics for a container.

        Args:
            container_name (str): container name

        Returns:
            Dict: container resource statistics (empty on failure)
        """
        try:
            container = self.client.containers.get(container_name)
            stats = container.stats(stream=False)

            # CPU usage: compare the current sample with the previous one
            cpu_stats = stats['cpu_stats']
            precpu_stats = stats.get('precpu_stats', {})
            cpu_delta = (cpu_stats['cpu_usage']['total_usage']
                         - precpu_stats.get('cpu_usage', {}).get('total_usage', 0))
            system_delta = (cpu_stats.get('system_cpu_usage', 0)
                            - precpu_stats.get('system_cpu_usage', 0))
            # 'percpu_usage' is missing on cgroup v2 hosts, so prefer 'online_cpus'
            percpu = cpu_stats['cpu_usage'].get('percpu_usage') or []
            num_cpus = cpu_stats.get('online_cpus') or len(percpu) or 1
            if system_delta > 0 and cpu_delta > 0:
                cpu_percent = (cpu_delta / system_delta) * num_cpus * 100
            else:
                cpu_percent = 0.0

            # Memory usage
            memory_stats = stats['memory_stats']
            memory_usage = memory_stats.get('usage', 0) / (1024 * 1024)  # MB
            memory_limit = memory_stats.get('limit', 0) / (1024 * 1024)  # MB
            memory_percent = (memory_usage / memory_limit) * 100 if memory_limit > 0 else 0

            return {
                'container_name': container_name,
                'cpu_percent': round(cpu_percent, 2),
                'memory_usage_mb': round(memory_usage, 2),
                'memory_limit_mb': round(memory_limit, 2),
                'memory_percent': round(memory_percent, 2)
            }
        except Exception as e:
            print(f"Failed to get statistics for container {container_name}: {e}")
            return {}

    def get_service_status(self) -> List[Dict]:
        """
        Get the status of the Compose services.

        Returns:
            List[Dict]: one entry per container
        """
        try:
            # Ask docker-compose for the service status as JSON
            result = subprocess.run([
                "docker-compose",
                "-p", self.project_name,
                "ps", "--format", "json"
            ], capture_output=True, text=True)

            if result.returncode != 0:
                print(f"Failed to get service status: {result.stderr}")
                return []

            output = result.stdout.strip()
            if not output:
                return []
            # Older Compose v2 releases print one JSON array; newer ones print one object per line
            if output.startswith('['):
                return json.loads(output)
            return [json.loads(line) for line in output.splitlines() if line.strip()]
        except Exception as e:
            print(f"Error while getting service status: {e}")
            return []

    def check_service_health(self, service_url: str) -> Dict:
        """
        Check whether a service responds over HTTP.

        Args:
            service_url (str): service URL

        Returns:
            Dict: health check result
        """
        try:
            response = requests.get(service_url, timeout=5)
            if response.status_code == 200:
                return {'status': 'healthy', 'message': 'Service is running normally'}
            return {'status': 'unhealthy', 'message': f'Unexpected HTTP status code: {response.status_code}'}
        except Exception as e:
            return {'status': 'unhealthy', 'message': f'Health check failed: {e}'}

    def print_system_status(self):
        """Print a system status report."""
        print(f"\n{'='*60}")
        print(f"🤖 AI application status report - {time.strftime('%Y-%m-%d %H:%M:%S')}")
        print(f"{'='*60}")

        # Host resource usage
        print("\n💻 Host resource usage:")
        resources = self.get_system_resources()
        print(f"  CPU usage: {resources['cpu_percent']}%")
        print(f"  Memory: {resources['memory_used_gb']}GB / {resources['memory_total_gb']}GB ({resources['memory_percent']}%)")
        print(f"  Disk: {resources['disk_used_gb']}GB / {resources['disk_total_gb']}GB ({resources['disk_percent']}%)")

        # Service status
        print("\n📦 Service status:")
        services = self.get_service_status()
        if services:
            for service in services:
                name = service.get('Service', 'N/A')
                state = service.get('State', 'N/A')
                status_icon = "✅" if state == 'running' else "❌" if state in ['exited', 'dead'] else "⚠️"
                print(f"  {status_icon} {name}: {state}")
        else:
            print("  No service information available")

        # Container resource usage
        print("\n📊 Container resource usage:")
        if services:
            print("-" * 80)
            print(f"{'Container':<25} {'CPU %':<15} {'Mem used (MB)':<15} {'Mem limit (MB)':<15} {'Mem %':<15}")
            print("-" * 80)
            # `ps` lists every replica, so de-duplicate service names before querying
            for service_name in sorted({s.get('Service', '') for s in services}):
                try:
                    # Only this project's containers for this service
                    containers = self.client.containers.list(filters={
                        "label": [
                            f"com.docker.compose.project={self.project_name}",
                            f"com.docker.compose.service={service_name}",
                        ]
                    })
                    for container in containers:
                        stats = self.get_container_stats(container.name)
                        if stats:
                            print(f"{stats['container_name'][:24]:<25} "
                                  f"{stats['cpu_percent']:<15} "
                                  f"{stats['memory_usage_mb']:<15} "
                                  f"{stats['memory_limit_mb']:<15} "
                                  f"{stats['memory_percent']}%")
                except Exception as e:
                    print(f"Error while monitoring service {service_name}: {e}")
            print("-" * 80)

        # Health checks
        print("\n🏥 Service health checks:")
        health_checks = {
            'API service': 'http://localhost:8083/health',
            'Playwright service': 'http://localhost:3000'
        }
        for service_name, url in health_checks.items():
            health = self.check_service_health(url)
            status_icon = "✅" if health['status'] == 'healthy' else "❌"
            print(f"  {status_icon} {service_name}: {health['message']}")


def main():
    """Entry point."""
    monitor = AISystemMonitor("ai-application")
    try:
        while True:
            monitor.print_system_status()
            print("\n⏱️ Refreshing in 10 seconds, press Ctrl+C to exit...")
            time.sleep(10)
    except KeyboardInterrupt:
        print("\n👋 Monitoring stopped")
    except Exception as e:
        print(f"❌ Error during monitoring: {e}")


if __name__ == "__main__":
    main()
```
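The container CPU percentage follows the same formula the `docker stats` command uses. It takes the container's CPU-time delta between two samples, divides by the host's total CPU-time delta, and scales the result by the number of CPUs, so 100% means one fully busy core:

$$
\text{CPU\%} = \frac{\Delta u_{\text{container}}}{\Delta u_{\text{system}}} \times N_{\text{cpu}} \times 100
$$

Here $\Delta u_{\text{container}}$ is `cpu_stats.cpu_usage.total_usage` minus the same field in `precpu_stats`. $\Delta u_{\text{system}}$ is the corresponding difference of `system_cpu_usage`, which counts CPU time across all host cores. $N_{\text{cpu}}$ is `online_cpus`, or the length of `percpu_usage` on cgroup v1 hosts. Worked example: on a 4-core host, a container that used $2\times10^{8}$ ns while the host accumulated $4\times10^{9}$ ns gives $\frac{2\times10^{8}}{4\times10^{9}} \times 4 \times 100 = 0.05 \times 4 \times 100 = 20\%$, i.e. one fifth of a core.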

5.2 Service Management Script

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
AI application service management script.

Manages the AI application services deployed with Docker Compose.
"""

import subprocess
import sys
from typing import List, Optional


class AIServiceManager:
    """Service manager for the AI application."""

    def __init__(self, project_name: str = "ai-application"):
        """
        Initialize the service manager.

        Args:
            project_name (str): Docker Compose project name
        """
        self.project_name = project_name

    def run_command(self, command: List[str], capture: bool = True) -> subprocess.CompletedProcess:
        """
        Run a command.

        Args:
            command (List[str]): command and its arguments
            capture (bool): capture the output and print it afterwards; pass False for
                long-running commands (`logs -f`, foreground `up`) so output streams live

        Returns:
            subprocess.CompletedProcess: result of the command
        """
        try:
            print(f"Running: {' '.join(command)}")
            result = subprocess.run(command, capture_output=capture, text=True)
            # Show captured output; otherwise `status` and `logs` would print nothing
            if capture and result.stdout:
                print(result.stdout)
            if result.returncode == 0:
                print("✅ Command succeeded")
            else:
                print(f"❌ Command failed: {result.stderr or ''}")
            return result
        except Exception as e:
            print(f"❌ Error while running the command: {e}")
            return subprocess.CompletedProcess(args=command, returncode=1, stdout="", stderr=str(e))

    def start_services(self, detach: bool = True):
        """
        Start the services.

        Args:
            detach (bool): run in the background
        """
        print("🚀 Starting the AI application services...")
        command = ["docker-compose", "-p", self.project_name, "up"]
        if detach:
            command.append("-d")
        # In the foreground, stream the output instead of capturing it
        self.run_command(command, capture=detach)

    def stop_services(self):
        """Stop the services."""
        print("🛑 Stopping the AI application services...")
        self.run_command(["docker-compose", "-p", self.project_name, "down"])

    def restart_services(self):
        """Restart the services."""
        print("🔄 Restarting the AI application services...")
        self.run_command(["docker-compose", "-p", self.project_name, "restart"])

    def view_logs(self, service_name: Optional[str] = None, follow: bool = False):
        """
        Show service logs.

        Args:
            service_name (str): service name (all services if omitted)
            follow (bool): keep following the log output
        """
        print("📋 Showing service logs...")
        command = ["docker-compose", "-p", self.project_name, "logs"]
        if follow:
            command.append("-f")
        if service_name:
            command.append(service_name)
        # `logs -f` never exits on its own, so stream it instead of capturing
        self.run_command(command, capture=not follow)

    def scale_service(self, service_name: str, replicas: int):
        """
        Set the number of replicas of a service.

        Args:
            service_name (str): service name
            replicas (int): number of replicas
        """
        print(f"📈 Scaling service {service_name} to {replicas} replicas...")
        command = ["docker-compose", "-p", self.project_name, "up", "-d", "--scale", f"{service_name}={replicas}"]
        self.run_command(command)

    def get_service_status(self):
        """Show the service status."""
        print("📊 Getting service status...")
        self.run_command(["docker-compose", "-p", self.project_name, "ps"])

    def build_services(self, no_cache: bool = False):
        """
        Build the service images.

        Args:
            no_cache (bool): build without using the cache
        """
        print("🏗️ Building service images...")
        command = ["docker-compose", "-p", self.project_name, "build"]
        if no_cache:
            command.append("--no-cache")
        self.run_command(command)


def print_help():
    """Print usage information."""
    help_text = """
🤖 AI application service manager

Usage: python service_manager.py [command] [options]

Commands:
  start      Start the services
  stop       Stop the services
  restart    Restart the services
  status     Show service status
  logs       Show service logs
  scale      Set the number of service replicas
  build      Build the service images

Options:
  start:
    -f, --foreground    Run in the foreground (default: detached)
  logs:
    -f, --follow        Follow the log output
    <service>           Service name
  scale:
    <service>           Service name
    <replicas>          Number of replicas
  build:
    --no-cache          Build without the cache

Examples:
  python service_manager.py start
  python service_manager.py logs api
  python service_manager.py logs -f worker
  python service_manager.py scale worker 3
  python service_manager.py build --no-cache
"""
    print(help_text)


def main():
    """Entry point."""
    if len(sys.argv) < 2:
        print_help()
        return

    manager = AIServiceManager("ai-application")
    command = sys.argv[1]

    try:
        if command == "start":
            # Detached by default; -f/--foreground keeps the stack attached to the terminal
            foreground = "-f" in sys.argv or "--foreground" in sys.argv
            manager.start_services(detach=not foreground)
        elif command == "stop":
            manager.stop_services()
        elif command == "restart":
            manager.restart_services()
        elif command == "status":
            manager.get_service_status()
        elif command == "logs":
            follow = "-f" in sys.argv or "--follow" in sys.argv
            # The first non-option argument is the service name
            service_name = next((arg for arg in sys.argv[2:] if not arg.startswith("-")), None)
            manager.view_logs(service_name, follow)
        elif command == "scale":
            if len(sys.argv) >= 4:
                service_name = sys.argv[2]
                try:
                    replicas = int(sys.argv[3])
                    manager.scale_service(service_name, replicas)
                except ValueError:
                    print("❌ The number of replicas must be an integer")
            else:
                print("❌ Please provide a service name and a number of replicas")
                print("Example: python service_manager.py scale worker 3")
        elif command == "build":
            manager.build_services("--no-cache" in sys.argv)
        else:
            print_help()
    except KeyboardInterrupt:
        print("\n👋 Interrupted")
    except Exception as e:
        print(f"❌ Error while executing the command: {e}")


if __name__ == "__main__":
    main()
```
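The manual `sys.argv` checks above work, but they are easy to get wrong. For example, `-f` means "foreground" for `start` and "follow" for `logs`, and they do not reject unknown options. One alternative is `argparse` with one subparser per command. The sketch below is hypothetical and only builds the parser. Its result would be dispatched to the `AIServiceManager` methods above.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical argparse front end for the service manager commands."""
    parser = argparse.ArgumentParser(prog="service_manager.py",
                                     description="AI application service manager")
    sub = parser.add_subparsers(dest="command", required=True)

    start = sub.add_parser("start", help="start the services")
    start.add_argument("-f", "--foreground", action="store_true",
                       help="run attached instead of detached")
    sub.add_parser("stop", help="stop the services")
    sub.add_parser("restart", help="restart the services")
    sub.add_parser("status", help="show service status")

    logs = sub.add_parser("logs", help="show service logs")
    logs.add_argument("service", nargs="?", help="service name (default: all)")
    logs.add_argument("-f", "--follow", action="store_true", help="follow log output")

    scale = sub.add_parser("scale", help="set the replica count of a service")
    scale.add_argument("service")
    scale.add_argument("replicas", type=int)  # argparse rejects non-integers itself

    build = sub.add_parser("build", help="build the service images")
    build.add_argument("--no-cache", action="store_true")
    return parser
```

Dispatch then becomes, for example, `args = build_parser().parse_args()` followed by `manager.scale_service(args.service, args.replicas)` when `args.command == "scale"`. Because each subcommand has its own parser, `-f` can safely mean different things for `start` and `logs`.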

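6. Troubleshooting

6.1 Common Problem Types

Common problems when deploying AI applications include:

- Task timeouts: the Worker service does not start correctly or does not process tasks

- Connection problems: network connections between services fail, with errors such as `Cant accept connection`

- Insufficient memory: the memory allocated to a container is not enough for the application

- Architecture mismatch: the container image's architecture does not match the host

- Dependencies not ready: services start in the wrong order, so a dependency is not ready yet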

6.2 Diagnostic Script

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
AI application diagnostic script.

Diagnoses and analyzes common problems in a Docker Compose deployment.
"""

import time
from typing import Dict

import docker
import psutil


class AIDiagnostic:
    """Diagnostic tool for the AI application."""

    def __init__(self, project_name: str = "ai-application"):
        """
        Initialize the diagnostic tool.

        Args:
            project_name (str): Docker Compose project name
        """
        self.project_name = project_name
        try:
            self.client = docker.from_env()
            print("✅ Docker client initialized")
        except Exception as e:
            print(f"❌ Failed to initialize the Docker client: {e}")
            raise

    def check_system_resources(self) -> Dict:
        """
        Check host resource usage.

        Returns:
            Dict: host resource usage
        """
        cpu_percent = psutil.cpu_percent(interval=1)  # sampled over one second
        memory = psutil.virtual_memory()
        disk = psutil.disk_usage('/')

        return {
            'cpu_percent': cpu_percent,
            'memory_total_gb': round(memory.total / (1024**3), 2),
            'memory_available_gb': round(memory.available / (1024**3), 2),
            'memory_percent': memory.percent,
            'disk_total_gb': round(disk.total / (1024**3), 2),
            'disk_free_gb': round(disk.free / (1024**3), 2),
            'disk_percent': round(((disk.total - disk.free) / disk.total) * 100, 2)
        }

    def analyze_container_logs(self, container_name: str, lines: int = 100) -> Dict:
        """
        Analyze a container's state and recent logs for common errors.

        Args:
            container_name (str): container name
            lines (int): number of log lines to read

        Returns:
            Dict: analysis result
        """
        try:
            container = self.client.containers.get(container_name)
            logs = container.logs(tail=lines).decode('utf-8', errors='replace')
            lower_logs = logs.lower()

            issues = []
            suggestions = []

            # Out of memory: an OOM kill is recorded in the container state, not in its logs
            oom_killed = container.attrs.get('State', {}).get('OOMKilled', False)
            if oom_killed or "out of memory" in lower_logs:
                issues.append("Container was killed because it ran out of memory")
                suggestions.append("Raise the container memory limit or reduce the application's memory usage")

            # Stalled worker
            if "WORKER STALLED" in logs:
                issues.append("Worker process stalled")
                suggestions.append("Check the task-processing logic or raise the resource limits")

            # Connection problems
            if "Cant accept connection" in logs or "connection refused" in lower_logs:
                issues.append("Connection failure")
                suggestions.append("Check the network configuration and service dependencies")

            # Architecture mismatch ("exec format error" is the usual symptom)
            if ("exec format error" in lower_logs or "segmentation fault" in lower_logs
                    or "architecture mismatch" in lower_logs):
                issues.append("Possible architecture mismatch")
                suggestions.append("Use an image that matches the host architecture")

            # Timeouts
            if "timeout" in lower_logs or "timed out" in lower_logs:
                issues.append("Task timeout")
                suggestions.append("Increase the timeout or optimize the task-processing logic")

            return {
                'issues': issues,
                'suggestions': suggestions,
                'log_sample': logs[-500:]
            }
        except Exception as e:
            return {
                'issues': [f"Log analysis failed: {e}"],
                'suggestions': ["Check whether the container is running normally"],
                'log_sample': ""
            }

    def check_docker_daemon(self) -> Dict:
        """
        Check whether the Docker daemon is reachable.

        Returns:
            Dict: daemon status
        """
        try:
            # ping() queries the daemon API directly, so it also works on macOS and WSL2,
            # where `systemctl` is unavailable
            if self.client.ping():
                return {'status': 'running', 'message': 'Docker daemon is running'}
            return {'status': 'stopped', 'message': 'Docker daemon did not respond'}
        except Exception as e:
            return {'status': 'unknown', 'message': f'Error while checking the Docker daemon: {e}'}

    def check_docker_resources(self) -> Dict:
        """
        Check the resources available to Docker.

        Returns:
            Dict: Docker resource information
        """
        try:
            info = self.client.info()
            total_memory = info.get('MemTotal', 0)
            return {
                'total_memory_gb': round(total_memory / (1024**3), 2) if total_memory > 0 else 0,
                'total_cpus': info.get('NCPU', 0),
                'docker_version': info.get('ServerVersion', 'unknown')
            }
        except Exception as e:
            return {'error': f"Failed to get Docker resource information: {e}"}

    def print_diagnostic_report(self):
        """Print the diagnostic report."""
        print(f"\n{'='*70}")
        print(f"🔍 AI application diagnostic report - {time.strftime('%Y-%m-%d %H:%M:%S')}")
        print(f"{'='*70}")

        # Docker daemon
        print("\n🐳 Docker daemon:")
        docker_status = self.check_docker_daemon()
        status_icon = "✅" if docker_status['status'] == 'running' else "❌"
        print(f"  {status_icon} Status: {docker_status['message']}")

        # Docker resources
        print("\n⚙️ Docker resources:")
        docker_resources = self.check_docker_resources()
        if 'error' not in docker_resources:
            print(f"  Docker version: {docker_resources['docker_version']}")
            print(f"  Total memory: {docker_resources['total_memory_gb']}GB")
            print(f"  CPU cores: {docker_resources['total_cpus']}")
        else:
            print(f"  {docker_resources['error']}")

        # Host resources
        print("\n💻 Host resource usage:")
        system_resources = self.check_system_resources()
        print(f"  CPU usage: {system_resources['cpu_percent']}%")
        print(f"  Total memory: {system_resources['memory_total_gb']}GB")
        print(f"  Available memory: {system_resources['memory_available_gb']}GB (used: {system_resources['memory_percent']}%)")
        print(f"  Total disk: {system_resources['disk_total_gb']}GB")
        print(f"  Free disk: {system_resources['disk_free_gb']}GB (used: {system_resources['disk_percent']}%)")

        # Container state and log analysis
        print("\n📦 Container state and problem analysis:")
        try:
            # all=True also includes stopped/exited containers, which are the ones to diagnose
            containers = self.client.containers.list(all=True, filters={
                "label": f"com.docker.compose.project={self.project_name}"
            })
            if not containers:
                print("  No project containers found; check whether the project has been started")
                return

            for container in containers:
                print(f"\n  📦 Container: {container.name}")
                print(f"     Status: {container.status}")
                log_analysis = self.analyze_container_logs(container.name)
                issues = log_analysis['issues']
                suggestions = log_analysis['suggestions']
                if issues:
                    print("     🔍 Issues found:")
                    for issue in issues:
                        print(f"       - {issue}")
                    if suggestions:
                        print("     💡 Suggestions:")
                        for suggestion in suggestions:
                            print(f"       - {suggestion}")
                else:
                    print("     ✅ No obvious problems found")
        except Exception as e:
            print(f"  ❌ Container status check failed: {e}")


def main():
    """Entry point."""
    diagnostic = AIDiagnostic("ai-application")
    diagnostic.print_diagnostic_report()


if __name__ == "__main__":
    main()
```

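7. Case Study

7.1 Background

Suppose we need an AI web-content analysis application that scrapes a given web page, extracts the key information, and analyzes it with an AI model. We will use Docker Compose to build a system with the following components:

- API service: provides RESTful endpoints that receive user requests

- Worker service: runs the background scraping and analysis jobs

- Playwright service: handles complex web-page interactions

- Redis service: serves as the task queue and cache

7.2 Implementation Steps

- Initialize the project

```bash
# Create the project directory
mkdir ai-web-analyzer
cd ai-web-analyzer

# Create the project structure
mkdir -p src/api src/worker src/utils config data logs scripts
touch docker-compose.yml .env README.md

# Create the Python scripts
touch scripts/monitor.py scripts/manager.py scripts/diagnostic.py
chmod +x scripts/*.py
```

- Configure the Docker Compose file

Use the configuration file from section 4.1.

- Configure the environment variables

Use the environment variables from section 4.2.

- Start the system

The directory here is named `ai-web-analyzer`, so pass `-p ai-application`. This keeps the project name, and therefore the container names, consistent with the scripts in sections 5 and 6.

```bash
# Start all services
docker-compose -p ai-application up -d

# Check service status
docker-compose -p ai-application ps

# Follow the service logs
docker-compose -p ai-application logs -f
```

- Verify that the system works

```bash
# Test the API service
curl -X POST http://localhost:8083/v1/scrape \
  -H "Content-Type: application/json" \
  -d '{"url":"https://example.com"}'

# Test the Redis connection
docker exec -it ai-application-redis-1 redis-cli -p 6381 ping
```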
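The same smoke test can be scripted with the standard library, for example to run it from CI after `up -d`. This is a minimal sketch. It reuses the `/v1/scrape` endpoint and payload from the curl command above and assumes the API answers with JSON. The response fields are not specified here, so the helper simply returns the decoded object. `build_scrape_request` and `submit` are hypothetical names.

```python
import json
import urllib.request


def build_scrape_request(target_url: str,
                         base_url: str = "http://localhost:8083") -> urllib.request.Request:
    """Build the same POST /v1/scrape call as the curl example."""
    body = json.dumps({"url": target_url}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/scrape",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit(target_url: str, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response (requires the stack to be running)."""
    with urllib.request.urlopen(build_scrape_request(target_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

`urlopen` raises `urllib.error.HTTPError` for non-2xx responses, so a failing API surfaces as an exception rather than a silently wrong result.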

7.3 Implementation Plan (Gantt Chart)

8. Performance Optimization

8.1 Resource Optimization Strategy

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
AI application performance optimization script.

Analyzes the host load and prints optimization suggestions.
"""

import time
from typing import Dict

import docker
import psutil


class PerformanceOptimizer:
    """Performance optimizer."""

    def __init__(self, project_name: str = "ai-application"):
        """
        Initialize the optimizer.

        Args:
            project_name (str): Docker Compose project name
        """
        self.project_name = project_name
        try:
            self.client = docker.from_env()
        except Exception as e:
            print(f"Failed to initialize the Docker client: {e}")
            raise

    def analyze_system_load(self) -> Dict:
        """
        Analyze the host load.

        Returns:
            Dict: load information
        """
        cpu_percent = psutil.cpu_percent(interval=1)  # sampled over one second
        memory = psutil.virtual_memory()
        disk = psutil.disk_usage('/')

        return {
            'cpu_percent': cpu_percent,
            'memory_percent': memory.percent,
            'disk_percent': round(((disk.total - disk.free) / disk.total) * 100, 2),
            # 1-, 5- and 15-minute load averages (emulated by psutil on Windows)
            'load_average': psutil.getloadavg()
        }

    def recommend_scaling(self, service_name: str) -> Dict:
        """
        Recommend a scaling action based on the host load.

        Args:
            service_name (str): service name

        Returns:
            Dict: scaling recommendation
        """
        load = self.analyze_system_load()

        # Simple threshold-based policy
        if load['cpu_percent'] > 80 or load['memory_percent'] > 80:
            action, reason = "scale_up", "System load is high"
        elif load['cpu_percent'] < 30 and load['memory_percent'] < 30:
            action, reason = "scale_down", "System load is low"
        else:
            action, reason = "keep", "System load is normal"

        return {
            'service': service_name,
            'action': action,
            'reason': reason,
            'system_load': load
        }

    def print_optimization_report(self):
        """Print the optimization report."""
        print(f"\n{'='*60}")
        print(f"📊 AI application performance report - {time.strftime('%Y-%m-%d %H:%M:%S')}")
        print(f"{'='*60}")

        load = self.analyze_system_load()
        print("\n💻 System load:")
        print(f"  CPU usage: {load['cpu_percent']}%")
        print(f"  Memory usage: {load['memory_percent']}%")
        print(f"  Disk usage: {load['disk_percent']}%")
        print(f"  Load average: {load['load_average']}")

        print("\n💡 Suggestions:")
        warnings = 0
        if load['cpu_percent'] > 80:
            warnings += 1
            print("  ⚠️ CPU usage is high. Suggestions:")
            print("    1. Increase the number of Worker replicas")
            print("    2. Optimize the AI model inference code")
            print("    3. Consider more powerful hardware")
        if load['memory_percent'] > 80:
            warnings += 1
            print("  ⚠️ Memory usage is high. Suggestions:")
            print("    1. Adjust the container memory limits")
            print("    2. Optimize data processing and release memory promptly")
            print("    3. Consider using an in-memory database")
        if load['disk_percent'] > 80:
            warnings += 1
            print("  ⚠️ Disk usage is high. Suggestions:")
            print("    1. Remove unnecessary log files")
            print("    2. Configure log rotation")
            print("    3. Remove unused Docker images")
        # Report "all clear" whenever no threshold was exceeded
        if warnings == 0:
            print("  ✅ Resource usage is within thresholds; no specific optimization needed")


def main():
    """Entry point."""
    optimizer = PerformanceOptimizer("ai-application")
    optimizer.print_optimization_report()


if __name__ == "__main__":
    main()
```

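8.2 Scalability

The following approaches improve the system's scalability:

- Horizontal scaling: use the `--scale` option to adjust the number of replicas of a service dynamically. This works for services without a fixed host port, such as `worker`. Scaling `api` as configured would fail, because every replica would try to bind host port 8083.

- Load balancing: put a load balancer in front of multiple replicas

- Microservice architecture: split large services into smaller, independent services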
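The script in section 8.1 only says "scale up" or "scale down". One way to turn that into a concrete replica count is to borrow the proportional rule used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × observed / target), with a tolerance band to avoid flapping and clamped to a min/max. Compose has no such autoscaler built in. The sketch below is an assumption-laden helper whose result you would apply with the `--scale` command shown above.

```python
import math
from typing import List


def desired_replicas(current: int, observed_percent: float, target_percent: float,
                     min_replicas: int = 1, max_replicas: int = 5,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula."""
    if current < 1 or target_percent <= 0:
        raise ValueError("current must be >= 1 and target_percent > 0")
    ratio = observed_percent / target_percent
    # Ignore small deviations so the replica count does not oscillate
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


def scale_command(service: str, replicas: int, project: str = "ai-application") -> List[str]:
    """Build the docker-compose command that applies the new replica count."""
    return ["docker-compose", "-p", project, "up", "-d", "--scale", f"{service}={replicas}"]
```

For example, two workers at 90% CPU against a 60% target give `desired_replicas(2, 90, 60) == 3`, and `scale_command("worker", 3)` produces the command to run.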

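9. Security Considerations

9.1 Example Security Configuration

```yaml
# Security-hardened docker-compose.yml
version: '3.8'

services:
  redis:
    image: redis:7-alpine
    command: redis-server --port 6381 --requirepass ${REDIS_PASSWORD}
    networks:
      - backend
    ports:
      - "127.0.0.1:6381:6381"  # reachable from the local host only
    volumes:
      - redis-data:/data
    deploy:
      resources:
        limits:
          memory: 512M
    user: "1001:1001"  # run as a non-root user
    security_opt:
      - no-new-privileges:true

  # Other services...

networks:
  backend:
    driver: bridge
    ipam:
      config:
        - subnet: 172.20.0.0/16

volumes:
  redis-data:
    driver: local
```

The non-root UID must be able to write to `/data`. If Redis reports permission errors when saving, adjust the volume's ownership or run as the image's own `redis` user instead.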

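9.2 Managing Sensitive Information

```bash
# .env: sensitive settings (keep this file out of version control)
REDIS_PASSWORD=your_secure_password
# With requirepass enabled, the Redis URLs must include the password, e.g.
# REDIS_URL=redis://:your_secure_password@redis:6381
OPENAI_API_KEY=sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
DATABASE_URL=postgresql://user:password@localhost:5432/dbname
```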
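Instead of a hand-typed placeholder such as `your_secure_password`, the password can be generated with Python's `secrets` module. If the password is embedded in a `redis://` URL, it should be percent-encoded, because characters like `/` or `@` would otherwise break URL parsing. A minimal sketch with hypothetical helper names:

```python
import secrets
from urllib.parse import quote


def generate_password(num_bytes: int = 32) -> str:
    """Random URL-safe password suitable for REDIS_PASSWORD."""
    return secrets.token_urlsafe(num_bytes)


def redis_url(password: str, host: str = "redis", port: int = 6381) -> str:
    """Build a redis:// URL, percent-encoding the password in case it has reserved characters."""
    return f"redis://:{quote(password, safe='')}@{host}:{port}"


if __name__ == "__main__":
    pwd = generate_password()
    print(f"REDIS_PASSWORD={pwd}")
    print(f"REDIS_URL={redis_url(pwd)}")
```

`token_urlsafe` output only contains `A-Z a-z 0-9 - _`, so encoding is a no-op for generated passwords. It matters only when a password is supplied by hand.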

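10. FAQ

10.1 Task Timeouts

Symptom: submitted tasks stay unfinished for a long time or time out.

Solutions:

- Check the Worker service status:

```bash
docker-compose logs worker
```

- Increase the timeout. This assumes the application reads a `WORKER_TIMEOUT` variable, which must also be passed to the worker under `environment`:

```bash
# Add a timeout setting to .env
WORKER_TIMEOUT=600
```

- Scale out the Worker replicas:

```bash
docker-compose up -d --scale worker=3
```

10.2 Connection Problems

Symptom: connection errors such as `Cant accept connection`.

Solutions:

- Check the service dependencies. `condition: service_healthy` only works if the dependency defines a `healthcheck`, so add one to redis:

```yaml
services:
  redis:
    healthcheck:
      test: ["CMD", "redis-cli", "-p", "6381", "ping"]
      interval: 10s
      timeout: 5s
      retries: 5
  api:
    depends_on:
      redis:
        condition: service_healthy
```

- Check the network configuration:

```bash
# Test connectivity between services (requires ping to be installed in the image)
docker exec ai-application-api-1 ping redis
```

- Check the port configuration:

```bash
# Check that the port is mapped correctly
docker-compose port redis 6381
```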
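`ping` only shows that a host answers ICMP. It says nothing about whether Redis or Playwright is actually listening, and many slim images do not include `ping`. A TCP connect test answers the real question. The sketch below checks the ports published in `docker-compose.yml` from the host. Inside the `backend` network you would use the service names (`redis`, `playwright-service`) as hosts instead.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False


if __name__ == "__main__":
    # Host ports published in docker-compose.yml
    for name, port in (("redis", 6381), ("playwright-service", 3000), ("api", 8083)):
        print(f"{name:<20} localhost:{port:<6} {'open' if port_open('localhost', port) else 'closed'}")
```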

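10.3 Insufficient Memory

Symptom: containers are killed by the system, or application performance degrades.

Solutions:

- Increase the memory limit:

```yaml
deploy:
  resources:
    limits:
      memory: 4G
```

- Optimize the application's memory usage:

```python
import gc

# Release objects you no longer need promptly
# After processing large data, force a garbage collection
del large_data_object
gc.collect()
```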
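Before raising limits, it helps to measure which step actually allocates the memory. The standard library's `tracemalloc` reports the peak Python-level allocation of a call. It does not see memory held by native libraries or by the browser processes inside the Playwright container, which still count toward the container limit. A minimal sketch, where `build_then_discard` is a hypothetical stand-in for a data-processing step:

```python
import gc
import tracemalloc


def peak_memory_mb(func, *args, **kwargs) -> float:
    """Run func and return the peak traced Python allocation (MB) during the call."""
    gc.collect()
    tracemalloc.start()
    try:
        func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak / (1024 * 1024)


def build_then_discard(n: int) -> int:
    """Hypothetical processing step that builds a large list and releases it."""
    large_data_object = [str(i) * 10 for i in range(n)]
    total = len(large_data_object)
    del large_data_object  # drop the reference as soon as it is no longer needed
    gc.collect()           # frees reference cycles; plain lists are already freed by del
    return total


if __name__ == "__main__":
    print(f"peak: {peak_memory_mb(build_then_discard, 100_000):.1f} MB")
```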

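10.4 Architecture Mismatch

Symptom: the container fails to start with architecture-related errors (typically `exec format error`).

Solutions:

- Use an image built for the correct architecture:

```bash
docker build --platform linux/amd64 -t my-app .
```

- Specify the platform in Docker Compose:

```yaml
services:
  api:
    platform: linux/amd64
```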
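To choose the right `--platform` value, or to confirm a suspected mismatch, compare the host's machine name with the image's architecture. The image side can be read with `docker image inspect --format '{{.Architecture}}' <image>`. The sketch below maps common `platform.machine()` values to Docker platform strings. The mapping covers only the usual Linux cases and is an assumption, not an exhaustive table.

```python
import platform
from typing import Optional

# Common platform.machine() values -> Docker platform strings (not exhaustive)
_ARCH_MAP = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",    # Windows reports "AMD64"
    "aarch64": "linux/arm64",  # Linux on ARM64
    "arm64": "linux/arm64",    # macOS on Apple silicon
    "armv7l": "linux/arm/v7",
}


def docker_platform(machine: Optional[str] = None) -> str:
    """Translate a machine name (default: this host) into a --platform value."""
    name = (machine or platform.machine()).lower()
    try:
        return _ARCH_MAP[name]
    except KeyError:
        raise ValueError(f"unmapped architecture: {name!r}") from None


def is_mismatch(image_arch: str, machine: Optional[str] = None) -> bool:
    """Compare an image's Architecture field (e.g. 'amd64') with the host architecture."""
    return docker_platform(machine).split("/")[1] != image_arch.lower()
```

For example, on an Apple-silicon Mac `is_mismatch("amd64")` returns True. That is the case where either `platform: linux/amd64` (running under emulation) or an arm64 image is needed.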

11. Further Reading

- Docker Compose documentation: https://docs.docker.com/compose/

- Docker best practices: https://docs.docker.com/develop/develop-images/dockerfile_best-practices/

- Redis documentation: https://redis.io/documentation/

- Playwright documentation: https://playwright.dev/

- Docker SDK for Python documentation: https://docker-py.readthedocs.io/

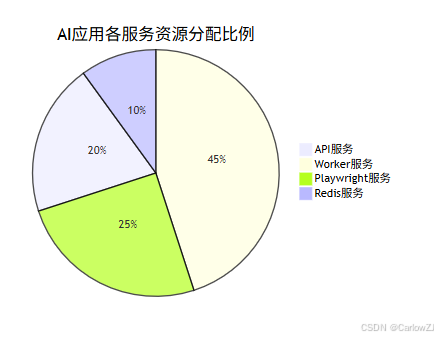

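12. Resource Distribution

Summary

This article used a complete hands-on example to show how to build and deploy an AI application system with Docker Compose. It covered every stage of AI application development and deployment, from environment setup and service configuration to troubleshooting and performance tuning.

Key takeaways:

- Sound architecture: a microservice design splits a complex system into independent service components

- Thorough configuration management: application settings are managed through environment variables and configuration files

- Effective monitoring: Python scripts track system state and resource usage in real time

- Systematic troubleshooting: a diagnostic tool helps locate and fix common problems quickly

- Continuous performance tuning: resource allocation is adjusted according to system load

- Strict security measures: the system is protected along several dimensions, including network and data security

By following these practices, AI developers can quickly build stable, efficient, and scalable containerized applications. As a lightweight container orchestration tool, Docker Compose is especially well suited to developing and deploying small and medium-sized AI projects, and it can noticeably improve development efficiency and system reliability.

In practice, adapt the optimization strategy to your specific workload and performance requirements, and keep improving the system over time. We also recommend setting up thorough monitoring and alerting to keep the system running reliably.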
