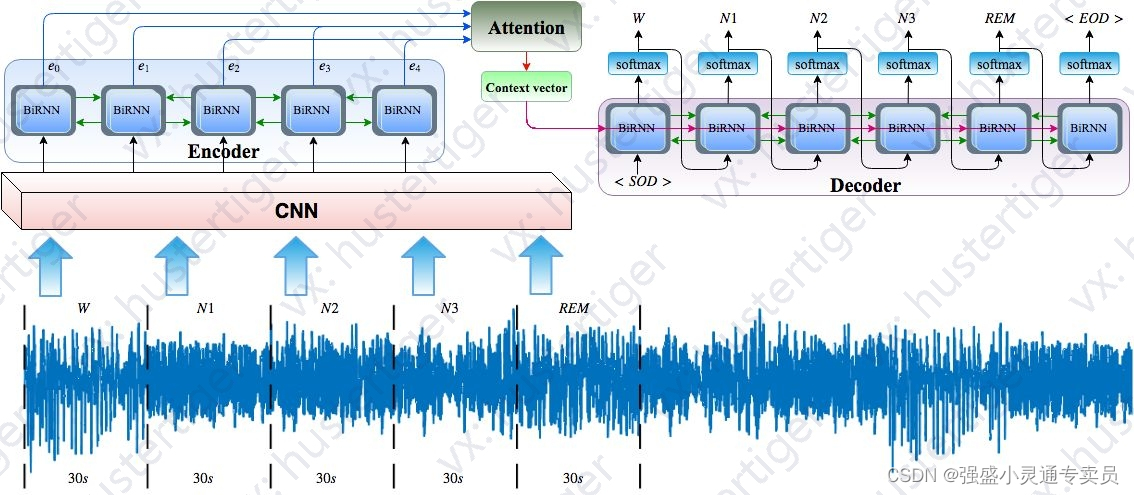

A deep learning approach to automatic sleep stage scoring from single-channel EEG.

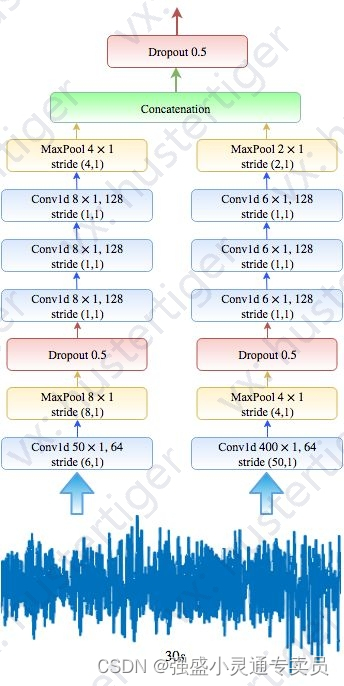

    import tensorflow as tf  # TensorFlow 1.x API (tf.layers, keep_prob-style dropout)


    def build_firstPart_model(input_var, keep_prob_=0.5):
        """Two-branch 1-D CNN feature extractor for an EEG epoch.

        input_var: float tensor of shape (batch, n_samples, channels).
        keep_prob_: dropout keep probability; pass 1.0 to disable dropout.
        """
        # Flattened output of each CNN branch
        output_conns = []

        ######### CNN branch with small filter size at the first layer #########
        # Convolution
        network = tf.layers.conv1d(inputs=input_var, filters=64, kernel_size=50, strides=6,
                                   padding='same', activation=tf.nn.relu)
        # Max pooling
        network = tf.layers.max_pooling1d(inputs=network, pool_size=8, strides=8, padding='same')
        # Dropout
        network = tf.nn.dropout(network, keep_prob_)
        # Three stacked convolutions
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=8, strides=1,
                                   padding='same', activation=tf.nn.relu)
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=8, strides=1,
                                   padding='same', activation=tf.nn.relu)
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=8, strides=1,
                                   padding='same', activation=tf.nn.relu)
        # Max pooling
        network = tf.layers.max_pooling1d(inputs=network, pool_size=4, strides=4, padding='same')
        # Flatten to (batch, features); `flatten` is a helper that is not defined in this post
        network = flatten(name="flat1", input_var=network)
        output_conns.append(network)

        ######### CNN branch with large filter size at the first layer #########
        # Convolution
        network = tf.layers.conv1d(inputs=input_var, filters=64, kernel_size=400, strides=50,
                                   padding='same', activation=tf.nn.relu)
        # Max pooling
        network = tf.layers.max_pooling1d(inputs=network, pool_size=4, strides=4, padding='same')
        # Dropout
        network = tf.nn.dropout(network, keep_prob_)
        # Three stacked convolutions
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=6, strides=1,
                                   padding='same', activation=tf.nn.relu)
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=6, strides=1,
                                   padding='same', activation=tf.nn.relu)
        network = tf.layers.conv1d(inputs=network, filters=128, kernel_size=6, strides=1,
                                   padding='same', activation=tf.nn.relu)
        # Max pooling
        network = tf.layers.max_pooling1d(inputs=network, pool_size=2, strides=2, padding='same')
        # Flatten to (batch, features)
        network = flatten(name="flat2", input_var=network)
        output_conns.append(network)

        # Concatenate the two branches along the feature axis
        network = tf.concat(output_conns, 1, name="concat1")
        # Dropout
        network = tf.nn.dropout(network, keep_prob_)
        return network
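
The layer sizes in `build_firstPart_model` can be traced by hand, since every convolution and pooling layer uses `padding='same'`, which gives an output length of `ceil(input_length / stride)`. The sketch below does that arithmetic in plain Python. The helper names and the epoch length of 3000 samples (for example 30 s at 100 Hz) are assumptions made for illustration; the post itself does not state the input length.

```python
def same_out_len(length, stride):
    """Output length of a 1-D conv or pooling layer with padding='same'."""
    return (length + stride - 1) // stride  # integer ceil(length / stride)


def branch_features(n_samples, conv_stride, pool1_stride, pool2_stride, filters=128):
    """Flattened feature count of one CNN branch."""
    length = same_out_len(n_samples, conv_stride)  # first convolution
    length = same_out_len(length, pool1_stride)    # first max pooling
    # The three stride-1 'same' convolutions leave the length unchanged.
    length = same_out_len(length, pool2_stride)    # second max pooling
    return length * filters                        # flatten: length * channels


N_SAMPLES = 3000  # assumption: not given in the post

small = branch_features(N_SAMPLES, conv_stride=6, pool1_stride=8, pool2_stride=4)
large = branch_features(N_SAMPLES, conv_stride=50, pool1_stride=4, pool2_stride=2)
total = small + large  # width of the concatenated feature vector

print(small, large, total)
```

Under that assumed input length, the small-filter branch contributes twice as many features as the large-filter branch, because its first layer downsamples the signal less aggressively.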

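This post presents a deep learning approach to automatic sleep stage scoring from single-channel EEG data, based on convolutional neural networks (CNNs). It covers a CNN structure with two branches that use different filter sizes, along with the use of dropout layers.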
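
To make the role of the dropout layers concrete, here is a minimal pure-Python sketch of what a keep-probability style dropout does: each activation is kept with probability `keep_prob` and scaled by `1 / keep_prob`, otherwise it is set to zero. The function name `dropout` and the sample values are illustrative assumptions, not part of the original code.

```python
import random


def dropout(values, keep_prob, rng=None):
    """Inverted dropout on a flat list of activations (illustrative only)."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    rng = rng or random.Random()
    scale = 1.0 / keep_prob  # keeps the expected activation unchanged
    return [v * scale if rng.random() < keep_prob else 0.0 for v in values]


activations = [0.5, 1.0, 1.5, 2.0]

# Training: roughly half of the units are zeroed, the rest are doubled.
train_out = dropout(activations, keep_prob=0.5, rng=random.Random(0))

# Inference: keep_prob=1.0 turns dropout into the identity.
eval_out = dropout(activations, keep_prob=1.0)
```

Because the keep probability defaults to 0.5 in the model-building function, a caller would most likely need to pass 1.0 explicitly when evaluating the network.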
