References

https://nyu-cds.github.io/python-gpu/02-cuda/

https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html

https://docs.nvidia.com/cuda/cuda-c-best-practices-guide/

Thread execution: https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#simt-architecture

https://blog.youkuaiyun.com/java_zero2one/article/details/51477791

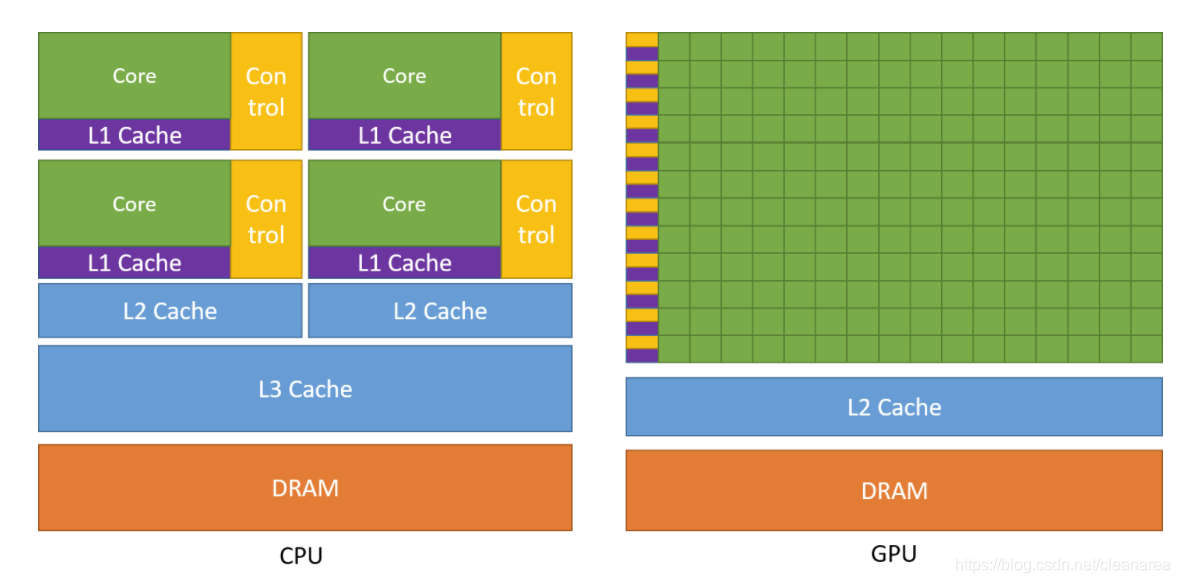

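GPU architecture

A GPU is designed to improve system throughput rather than to provide complex control logic, so it integrates many more compute units while supporting fewer instruction types and a smaller number of instructions.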

The core computational unit, which includes control, arithmetic, registers and typically some cache, is replicated some number of times and connected to memory via a network.

CUDA's top-level abstractions:

- a hierarchy of thread groups

- shared memories

- barrier synchronization

Differences between CPU and GPU

Threading resources

Execution pipelines on host systems can support a limited number of concurrent threads. For example, servers that have two 32 core processors can run only 64 threads concurrently (or a small multiple of that if the CPUs support simultaneous multithreading). By comparison, the smallest executable unit of parallelism on a CUDA device comprises 32 threads (termed a warp of threads). Modern NVIDIA GPUs can support up to 2048 active threads concurrently per multiprocessor (see Features and Specifications of the CUDA C++ Programming Guide). On GPUs with 80 multiprocessors, this leads to more than 160,000 concurrently active threads.
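To make the numbers in this paragraph concrete, here is a quick back-of-the-envelope calculation. It only uses the figures quoted above; `SMT_WAYS` is my own placeholder for the "small multiple" that simultaneous multithreading would add.

```python
# Figures taken from the paragraph above.
CPU_SOCKETS = 2
CORES_PER_SOCKET = 32
SMT_WAYS = 1              # assumption: 1 means no simultaneous multithreading

WARP_SIZE = 32            # threads per warp
MAX_THREADS_PER_SM = 2048 # active threads per multiprocessor
NUM_SMS = 80              # multiprocessors on the example GPU

cpu_threads = CPU_SOCKETS * CORES_PER_SOCKET * SMT_WAYS
gpu_threads = MAX_THREADS_PER_SM * NUM_SMS
warps_per_sm = MAX_THREADS_PER_SM // WARP_SIZE

print(cpu_threads)   # 64
print(gpu_threads)   # 163840, i.e. "more than 160,000"
print(warps_per_sm)  # 64 warps resident on each multiprocessor
```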

Threads

Threads on a CPU are generally heavyweight entities. The operating system must swap threads on and off CPU execution channels to provide multithreading capability. Context switches (when two threads are swapped) are therefore slow and expensive. By comparison, threads on GPUs are extremely lightweight. In a typical system, thousands of threads are queued up for work (in warps of 32 threads each). If the GPU must wait on one warp of threads (how should this be understood, and why would there be any waiting at all? => probably because the warp is waiting for data, i.e. for input/output), it simply begins executing work on another. Because separate registers are allocated to all active threads, no swapping of registers or other state need occur when switching among GPU threads. Resources stay allocated to each thread until it completes its execution (once a thread is on the GPU, it holds on to its assigned resources). In short, CPU cores are designed to minimize latency for a small number of threads at a time each, whereas GPUs are designed to handle a large number of concurrent, lightweight threads in order to maximize throughput.
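To convince myself why "waiting on one warp" is cheap, I wrote a toy model of one multiprocessor that issues at most one instruction per cycle. This is only a sketch under made-up assumptions: the function `simulate`, the 20-cycle memory latency, and the "one load followed by four arithmetic instructions" program are all hypothetical and do not describe real hardware timing.

```python
def simulate(num_warps, iters=4, compute_cycles=4, mem_latency=20):
    """Total cycles for a toy SM to run all warps (one issue slot per cycle)."""
    per_iter = 1 + compute_cycles            # 1 load + N arithmetic instructions
    remaining = [iters * per_iter] * num_warps
    ready_at = [0] * num_warps               # cycle when each warp may issue again
    pc = [0] * num_warps                     # instructions issued so far per warp
    cycle = 0
    while any(r > 0 for r in remaining):
        for w in range(num_warps):
            if remaining[w] > 0 and ready_at[w] <= cycle:
                is_load = pc[w] % per_iter == 0
                # A load makes this warp wait for its data; other warps are unaffected.
                ready_at[w] = cycle + 1 + (mem_latency if is_load else 0)
                pc[w] += 1
                remaining[w] -= 1
                break                        # only one instruction issues per cycle
        cycle += 1                           # the cycle is idle if no warp was ready
    return cycle

for n in (1, 2, 4, 8):
    cycles = simulate(n)
    instructions = n * 4 * 5
    print(n, cycles, round(instructions / cycles, 2))  # warps, cycles, issue utilization
```

With a single warp the issue slot sits idle for most of every memory wait (100 cycles for 20 instructions in this model). With more resident warps, the scheduler fills those idle cycles with instructions from other warps, and switching costs nothing here because each warp keeps its own state.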

CUDA thread mapping

CUDA thread execution

Part 1: thread block assignment

The NVIDIA GPU architecture is built around a scalable array of multithreaded Streaming Multiprocessors (SMs). When a CUDA program on the host CPU invokes a kernel grid, the blocks of the grid are enumerated and distributed to multiprocessors with available execution capacity. The threads of a thread block execute concurrently on one multiprocessor, and multiple thread blocks can execute concurrently on one multiprocessor. As thread blocks terminate, new blocks are launched on the vacated multiprocessors.

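- Each kernel corresponds to one thread grid, which is made up of multiple thread blocks; when the kernel is launched, its blocks are distributed to the SMs that have available execution capacity.

- Threads of the same block execute concurrently on one SM (parallel ==> different tasks are processed on different processing units; concurrent ==> multiple tasks run on the same processing unit and take turns using it).

- Multiple blocks can run on the same SM.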
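The block assignment described above can be sketched with a small toy model. Everything in it is an assumption for illustration: `schedule_blocks` and `slots_per_sm` are hypothetical names, every block is assumed to run equally long (so blocks finish in "waves"), and the round-robin placement is my own choice, since the guide does not specify the order in which blocks are distributed.

```python
def schedule_blocks(num_blocks, num_sms, slots_per_sm):
    """Toy model: return a list of waves, each mapping SM index -> block IDs."""
    capacity = num_sms * slots_per_sm            # blocks resident at the same time
    waves = []
    for start in range(0, num_blocks, capacity):
        end = min(start + capacity, num_blocks)
        wave = {sm: [] for sm in range(num_sms)}
        for i, block in enumerate(range(start, end)):
            wave[i % num_sms].append(block)      # round-robin placement (assumption)
        waves.append(wave)                       # the next wave fills the vacated SMs
    return waves

waves = schedule_blocks(num_blocks=10, num_sms=4, slots_per_sm=2)
for i, wave in enumerate(waves):
    print(i, wave)
# 0 {0: [0, 4], 1: [1, 5], 2: [2, 6], 3: [3, 7]}
# 1 {0: [8], 1: [9], 2: [], 3: []}
```

Note that in the last wave two SMs have nothing to do, which is why a grid with far more blocks than the GPU can hold at once tends to keep the device busier.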

Part 2: thread block execution

The multiprocessor creates, manages, schedules, and executes threads in groups of 32 parallel threads called warps. Individual threads composing a warp start together at the same program address, but they have their own instruction address counter and register state and are therefore free to branch and execute independently. The term warp originates from weaving, the first parallel thread technology. A half-warp is either the first or second half of a warp. A quarter-warp is either the first, second, third, or fourth quarter of a warp.

When a multiprocessor is given one or more thread blocks to execute, it partitions them into warps and each warp gets scheduled by a warp scheduler for execution. The way a block is partitioned into warps is always the same; each warp contains threads of consecutive, increasing thread IDs with the first warp containing thread 0. Thread Hierarchy describes how thread IDs relate to thread indices in the block.
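The partitioning rule is easy to check by hand. The sketch below uses the thread ID formula from the Thread Hierarchy section of the Programming Guide (x + y·Dx + z·Dx·Dy); the helper names `linear_thread_id`, `warp_and_lane`, and `warps_per_block` are my own, not CUDA APIs.

```python
WARP_SIZE = 32

def linear_thread_id(x, y, z, block_dim):
    """Thread ID of thread index (x, y, z) in a block of size (Dx, Dy, Dz)."""
    dx, dy, _dz = block_dim
    return x + y * dx + z * dx * dy

def warp_and_lane(tid):
    """Warp index within the block, and position (lane) inside that warp."""
    return tid // WARP_SIZE, tid % WARP_SIZE

def warps_per_block(block_dim):
    """Number of warps a block is split into (the last one may be partly empty)."""
    dx, dy, dz = block_dim
    return (dx * dy * dz + WARP_SIZE - 1) // WARP_SIZE

block_dim = (16, 4, 1)                                        # 64 threads
print(warps_per_block(block_dim))                             # 2
print(warp_and_lane(linear_thread_id(15, 1, 0, block_dim)))   # (0, 31)
print(warp_and_lane(linear_thread_id(0, 2, 0, block_dim)))    # (1, 0)
print(warps_per_block((10, 10, 1)))                           # 4
```

In the last case a 100-thread block still occupies 4 warps, with only 4 threads active in the last one, which is one reason block sizes are usually chosen as multiples of 32.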

A warp executes one common instruction at a time, so full efficiency is realized when all 32 threads of a warp agree on their execution path. If threads of a warp diverge via a data-dependent conditional branch, the warp executes each branch path taken, disabling threads that are not on that path. Branch divergence occurs only within a warp; different warps execute independently regardless of whether they are executing common or disjoint code paths.
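Branch divergence can be imitated in a few lines. This is a toy model of the behaviour described above, not how the hardware is implemented: `execute_warp` is a hypothetical function, the even/odd condition and the two operations are arbitrary, and "passes" simply counts how many branch paths the warp had to walk through.

```python
WARP_SIZE = 32

def execute_warp(values):
    """Run an if/else over one warp, one taken path at a time with a lane mask."""
    assert len(values) == WARP_SIZE
    taken = [v % 2 == 0 for v in values]           # data-dependent condition
    results = [None] * WARP_SIZE
    passes = 0
    paths = ((True, lambda v: v // 2),             # "if" path
             (False, lambda v: 3 * v + 1))         # "else" path
    for path, op in paths:
        mask = [t == path for t in taken]          # lanes active on this path
        if not any(mask):
            continue                               # nobody took this path: skip it
        passes += 1
        for lane in range(WARP_SIZE):
            if mask[lane]:                         # masked-off lanes do nothing
                results[lane] = op(values[lane])
    return results, passes

_, uniform_passes = execute_warp([2 * i for i in range(WARP_SIZE)])
divergent_results, divergent_passes = execute_warp(list(range(WARP_SIZE)))
print(uniform_passes)         # 1
print(divergent_passes)       # 2
print(divergent_results[:4])  # [0, 4, 1, 10]
```

When all 32 values agree on the condition, the warp makes a single pass; when they disagree, both paths are executed back to back and each lane is idle during the path it did not take.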

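1. The warp is the basic unit in which an SM creates, schedules, and executes threads, and each warp consists of 32 threads. Threads in the same warp start at the same program address but each has its own instruction counter and register state; so although every thread runs the same program, each one runs independently and may follow a different execution path.

2. The SM splits each thread block into warps (32 threads each) and uses the warp schedulers to schedule those warps.

3. At the start of execution, all threads in a warp execute the same instruction. During execution, however, the program may hit data-dependent branches that send some threads down a different path; the warp then executes every branch path that was taken, and threads that are not on the current path are temporarily disabled.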

Open question: in CNN-style applications, how are threads actually allocated and executed at the GPU's lower levels?
