hive join的一个小问题

最近在看公司代码发现一个和join相关的奇怪的写法

抽象一下表结构如下

为了简化问题,这里就用这张示例表演示

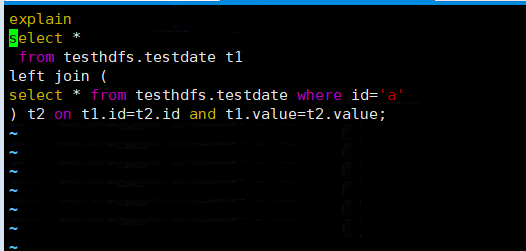

写法一

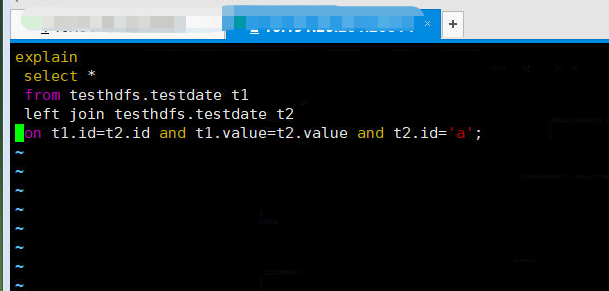

写法二

写法一 明细是想要缩小join表的范围,减少关联的数据,但是其实写法一和写法二的效果是一样的,问题的本质是用第一种方式的人对join on条件的理解不够深入,虽然说两种写法效果一样但是如果sql很复杂关联的表很多 第一种写法会导致sql的可读性非常差

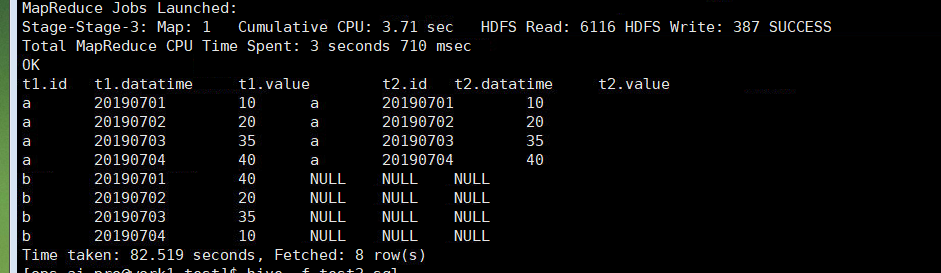

下面看下两种写法的执行结果

写法一执行结果

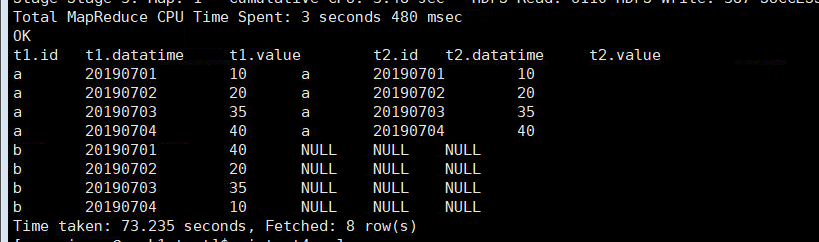

写法二执行结果

执行结果是一样 下面看下执行计划

写法一的执行计划

Explain

STAGE DEPENDENCIES:

Stage-4 is a root stage

Stage-3 depends on stages: Stage-4

Stage-0 depends on stages: Stage-3

STAGE PLANS:

Stage: Stage-4

Map Reduce Local Work

Alias -> Map Local Tables:

$hdt$_1:testdate

Fetch Operator

limit: -1

Alias -> Map Local Operator Tree:

$hdt$_1:testdate

TableScan

alias: testdate

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Filter Operator

predicate: (id = 'a') (type: boolean)

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: 'a' (type: string), datatime (type: int), value (type: int)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

HashTable Sink Operator

filter predicates:

0 {(_col0 = 'a')}

1

keys:

0 _col2 (type: int)

1 _col2 (type: int)

Stage: Stage-3

Map Reduce

Map Operator Tree:

TableScan

alias: t1

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: id (type: string), datatime (type: int), value (type: int)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Map Join Operator

condition map:

Left Outer Join0 to 1

filter predicates:

0 {(_col0 = 'a')}

1

keys:

0 _col2 (type: int)

1 _col2 (type: int)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5

Statistics: Num rows: 1 Data size: 123 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 123 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Local Work:

Map Reduce Local Work

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

Time taken: 11.505 seconds, Fetched: 69 row(s)

写法二执行计划

Explain

STAGE DEPENDENCIES:

Stage-4 is a root stage

Stage-3 depends on stages: Stage-4

Stage-0 depends on stages: Stage-3

STAGE PLANS:

Stage: Stage-4

Map Reduce Local Work

Alias -> Map Local Tables:

$hdt$_1:t2

Fetch Operator

limit: -1

Alias -> Map Local Operator Tree:

$hdt$_1:t2

TableScan

alias: t2

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Filter Operator

predicate: (id = 'a') (type: boolean)

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: 'a' (type: string), datatime (type: int), value (type: int)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

HashTable Sink Operator

filter predicates:

0 {(_col0 = 'a')}

1

keys:

0 _col2 (type: int)

1 _col2 (type: int)

Stage: Stage-3

Map Reduce

Map Operator Tree:

TableScan

alias: t1

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: id (type: string), datatime (type: int), value (type: int)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 112 Basic stats: COMPLETE Column stats: NONE

Map Join Operator

condition map:

Left Outer Join0 to 1

filter predicates:

0 {(_col0 = 'a')}

1

keys:

0 _col2 (type: int)

1 _col2 (type: int)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5

Statistics: Num rows: 1 Data size: 123 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 123 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Local Work:

Map Reduce Local Work

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

Time taken: 11.352 seconds, Fetched: 69 row(s)

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?