Spark Tutorial

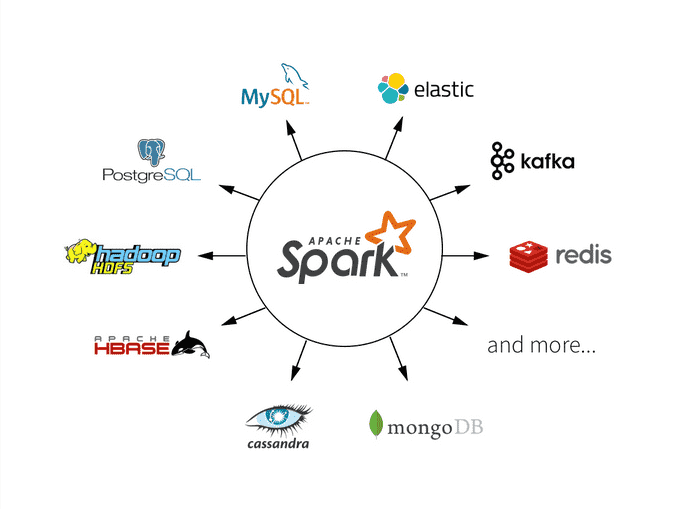

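Apache Spark is an open-source cluster computing framework for large-scale data processing. It handles both batch workloads and data generated in real time.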

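Spark was developed as an alternative to Hadoop MapReduce. It can run on a Hadoop cluster (for example, reading data from HDFS and scheduling on YARN), but it does not depend on MapReduce itself. Spark is optimized to keep data in memory, whereas MapReduce writes intermediate results to disk and reads them back between stages. As a result, Spark often processes data considerably faster, especially for iterative jobs that reuse the same dataset.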
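The in-memory behaviour described above can be sketched with `cache()`. This is a minimal illustration rather than a benchmark: the object name `CacheSketch`, the app name, and the `local[*]` master are assumptions chosen for the example. In `spark-shell` a `SparkContext` is already available as `sc`, so the session setup would not be needed there.

```scala
import org.apache.spark.sql.SparkSession

object CacheSketch {
  // Returns (sum of squares, element count) for the numbers 1..1000.
  def run(): (Long, Long) = {
    // Local-mode session for illustration; app name and master URL are assumptions.
    val spark = SparkSession.builder()
      .appName("cache-sketch")
      .master("local[*]")
      .getOrCreate()
    try {
      val sc = spark.sparkContext

      // A derived RDD that two separate actions will reuse.
      val squares = sc.parallelize(1 to 1000).map(x => x.toLong * x)

      // Mark the RDD to be kept in memory once it has been computed.
      squares.cache()

      val total = squares.reduce(_ + _) // first action: computes the partitions and caches them
      val count = squares.count()       // second action: can reuse the cached partitions
      (total, count)
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (total, count) = run()
    println(s"sum of squares = $total, count = $count")
  }
}
```

Without the `cache()` call, the second action would recompute `squares` from the source collection. With it, Spark can serve the second action from memory if the partitions fit.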

The Spark architecture relies on two abstractions:

- Resilient Distributed Dataset (RDD)

- Directed Acyclic Graph (DAG)
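To make the two abstractions concrete, the sketch below builds a small RDD pipeline and prints its lineage, which is the recorded chain of transformations that Spark turns into a DAG of stages. The object name `LineageSketch`, the sample words, and the local-mode session settings are assumptions for illustration only.

```scala
import org.apache.spark.sql.SparkSession

object LineageSketch {
  // Returns the lineage description and the final word counts.
  def run(): (String, Map[String, Int]) = {
    // Local-mode session for illustration; app name and master URL are assumptions.
    val spark = SparkSession.builder()
      .appName("lineage-sketch")
      .master("local[*]")
      .getOrCreate()
    try {
      val sc = spark.sparkContext

      // Transformations are lazy: these lines only record steps, nothing runs yet.
      val words  = sc.parallelize(Seq("spark", "rdd", "dag", "spark"))
      val pairs  = words.map(w => (w, 1))
      val counts = pairs.reduceByKey(_ + _) // requires a shuffle between partitions

      // Textual description of the RDD and the RDDs it depends on.
      val lineage = counts.toDebugString

      // collect() is an action: it triggers execution of the recorded graph.
      val result = counts.collect().toMap
      (lineage, result)
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (lineage, result) = run()
    println(lineage)
    println(result)
  }
}
```

The RDD is the data abstraction (a partitioned, immutable collection), while the DAG is the execution plan Spark derives from the lineage when an action such as `collect()` is called.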

scala> val data = sc.parallelize(List(10,20,30))

data: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[2] at parallelize at <console>:24

scala> data.collect

res3: Array[Int] = Array(10, 20, 30)

scala> val abc = data.filter(x => x!=30)
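The shell session above stops right after defining `abc`. Because `filter` is a lazy transformation, nothing is computed until an action is called on the result. The sketch below repeats the same steps as a standalone program and collects the filtered values. The object name `FilterSketch` and the local-mode session settings are assumptions; in `spark-shell`, calling `abc.collect` directly would be enough.

```scala
import org.apache.spark.sql.SparkSession

object FilterSketch {
  // Builds the same RDD as the shell session and returns the filtered elements.
  def run(): Array[Int] = {
    // Local-mode session for illustration; app name and master URL are assumptions.
    val spark = SparkSession.builder()
      .appName("filter-sketch")
      .master("local[*]")
      .getOrCreate()
    try {
      val sc = spark.sparkContext

      val data = sc.parallelize(List(10, 20, 30))

      // filter returns a new RDD; the original `data` RDD is left unchanged.
      val abc = data.filter(x => x != 30)

      // collect() is the action that runs the job and brings results to the driver.
      abc.collect()
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    println(run().mkString(", ")) // prints: 10, 20
  }
}
```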

Spark Programming Guide

Spark SQL Tutorial

Spark Overview

Programming Guides:

- Quick Start: a quick introduction to the Spark API; start here!

- RDD Programming Guide: overview of Spark basics - RDDs (core but old API), accumulators, and broadcast variables

- Spark SQL, Datasets, and DataFrames: processing structured data with relational queries (newer API than RDDs)

- Structured Streaming: processing structured data streams with relational queries (using Datasets and DataFrames, newer API than DStreams)

- Spark Streaming: processing data streams using DStreams (old API)

- MLlib: applying machine learning algorithms

- GraphX: processing graphs

API Docs:

Quick Start

RDD Programming Guide

Spark 3.0.1 ScalaDoc

Apache Spark Examples

Additional Examples

Many additional examples are distributed with Spark:

- Basic Spark: Scala examples, Java examples, Python examples

- Spark Streaming: Scala examples, Java examples

The Simplest Spark Tutorial Series Ever | Complete

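This article is a quick-start guide to Apache Spark. It covers Spark's strengths as an open-source cluster computing framework, such as processing data generated in real time and running in memory, in contrast to Hadoop MapReduce. It introduces Spark's core abstractions, the resilient distributed dataset (RDD) and the directed acyclic graph (DAG), and uses an example to show how to filter data with Spark. It also lists resources covering Spark SQL, stream processing, and machine learning, aimed at beginners who want to pick up the basics of Spark quickly.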
