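I. Download the iFlytek SDK

II. Integration steps

Step 1: Get your appid (shown in the iFlytek console)

Step 2: Project configuration

1. Drag the lib folder from the template project into your project and add the dependent libraries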

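Note: When adding iflyMSC.framework, check the Framework Search Paths setting under Build Settings. If Xcode cannot find the framework, clear the path, delete the framework in Xcode, and add it again. If you use offline recognition, also add libc++.tbd. If you use AIUI, add libc++.tbd and libicucore.tbd.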

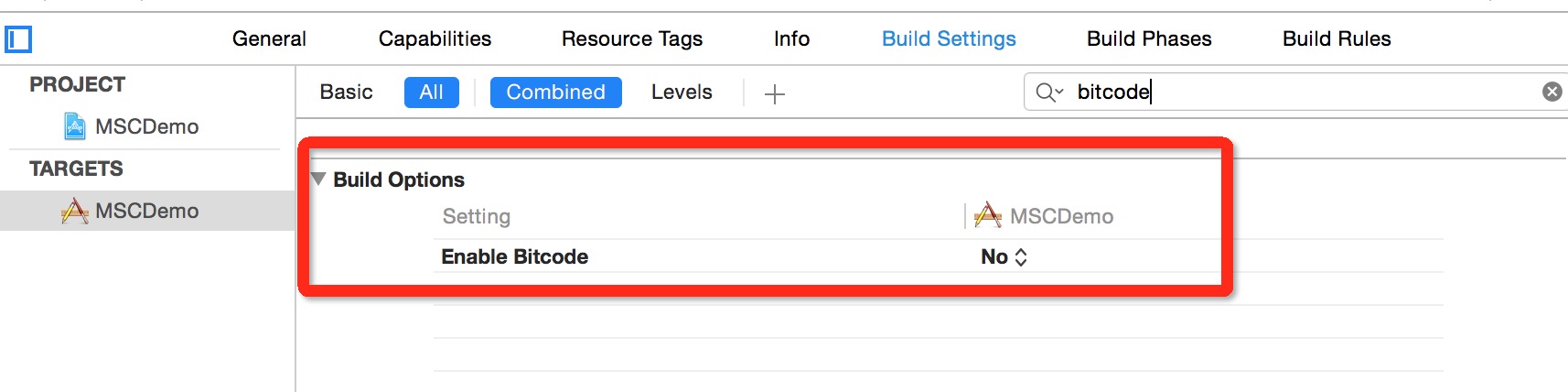

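2. Configure Bitcode

Xcode 7 and 8 enable Bitcode by default, and Bitcode requires every library the project depends on to support it. The MSC SDK does not support Bitcode yet, so turn it off for now. When the MSC SDK adds Bitcode support, an updated SDK will be published on the iFlytek Open Platform and announced in the QQ support group. To turn it off, search for Bitcode under Targets - Build Settings, find the matching option, and set it to NO.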

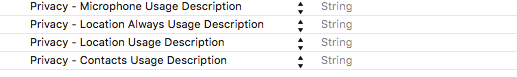
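
If the target is configured through an .xcconfig file instead of the Build Settings UI, the same switch can be written as a single line. This is only a sketch: it assumes an xcconfig file is already attached to the target, and ENABLE_BITCODE is the build setting behind the Bitcode option in the UI.

```xcconfig
// Turn Bitcode off for this target until the MSC SDK supports it
ENABLE_BITCODE = NO
```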

3. Configure user privacy permissions

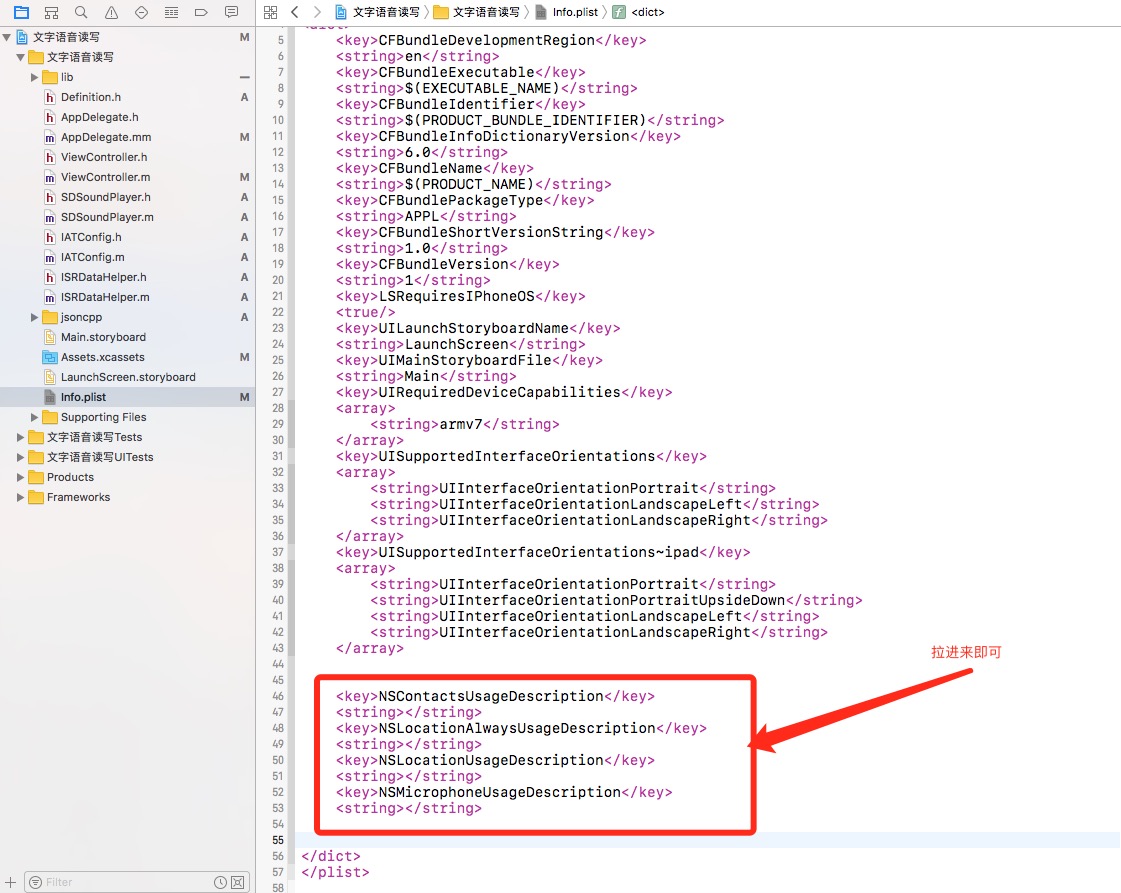

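Since iOS 10, Apple has required apps to declare privacy permissions so that the user decides whether to allow access. Add the relevant privacy keys to Info.plist. The permissions the MSC SDK needs are mainly microphone, contacts, and location: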

NSMicrophoneUsageDescription

NSLocationUsageDescription

NSLocationAlwaysUsageDescription

NSContactsUsageDescription

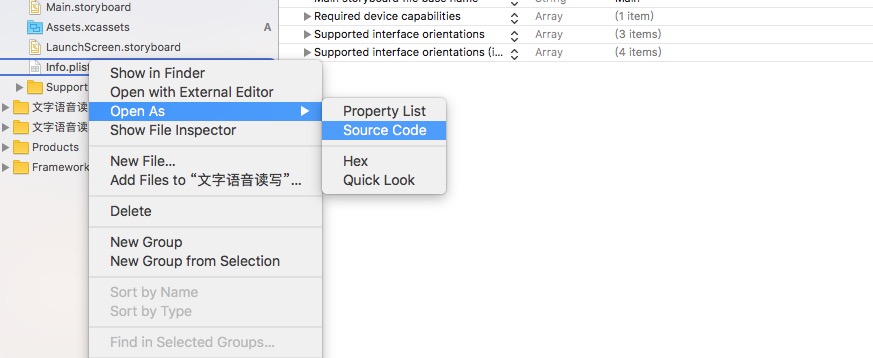

That is, add the settings shown in the figures below to Info.plist:

Method 1:

Method 2:
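
For reference, here is a minimal sketch of how the four keys listed above might look when Info.plist is opened as source code (right-click the file, Open As > Source Code). The description strings are placeholder assumptions; replace them with text that explains why your app needs each permission. The entries go inside the top-level dict element of the file.

```xml
<!-- Placeholder descriptions; iOS shows these strings in the permission prompt -->
<key>NSMicrophoneUsageDescription</key>
<string>Used to record your voice for speech recognition</string>
<key>NSLocationUsageDescription</key>
<string>Used to improve recognition results based on your location</string>
<key>NSLocationAlwaysUsageDescription</key>
<string>Used to improve recognition results based on your location</string>
<key>NSContactsUsageDescription</key>
<string>Used to recognize contact names in speech</string>
```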

Step 3: Initialization

Initialization example:

// Can be added at launch. The appid identifies the app and is unique; it must be passed in at initialization.

NSString *initString = [[NSString alloc] initWithFormat:@"appid=%@", @"YourAppid"];

[IFlySpeechUtility createUtility:initString];

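Note: Initialization is asynchronous and can be run at app launch. For the actual code, see MSCAppDelegate.m in the Demo.

You also need to add the result-parsing files to the project, otherwise the build fails.

Location in the Demo: MSCDemo -> tools -> jsoncpp

You also need to drag the IATConfig and ISRDataHelper classes from the template project into your own project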


III. The code

1. Online speech synthesis

This example corresponds to the TTSUIController file in the Demo and shows online synthesis.

// Import the header

#import "iflyMSC/IFlyMSC.h"

// Implement IFlySpeechSynthesizerDelegate, the delegate protocol for the synthesis session

@interface TTSUIController : UIViewController<IFlySpeechSynthesizerDelegate>

@property (nonatomic, strong) IFlySpeechSynthesizer *iFlySpeechSynthesizer;

@end

// Get the speech synthesizer singleton

_iFlySpeechSynthesizer = [IFlySpeechSynthesizer sharedInstance];

// Set the delegate

_iFlySpeechSynthesizer.delegate = self;

// Set synthesis parameters

// Use the online (cloud) engine

[_iFlySpeechSynthesizer setParameter:[IFlySpeechConstant TYPE_CLOUD] forKey:[IFlySpeechConstant ENGINE_TYPE]];

// Set the volume, range 0-100

[_iFlySpeechSynthesizer setParameter:@"50" forKey: [IFlySpeechConstant VOLUME]];

// Voice name, "xiaoyan" by default; see the "synthesis voice list" for the available values

[_iFlySpeechSynthesizer setParameter:@"xiaoyan" forKey: [IFlySpeechConstant VOICE_NAME]];

// File name for the saved synthesized audio; set it to nil or an empty string to disable saving. The default directory is library/cache

[_iFlySpeechSynthesizer setParameter:@"tts.pcm" forKey: [IFlySpeechConstant TTS_AUDIO_PATH]];

// Start the synthesis session

[_iFlySpeechSynthesizer startSpeaking: @"你好,我是科大讯飞的小燕"];

// IFlySpeechSynthesizerDelegate implementation

// Synthesis finished

- (void) onCompleted:(IFlySpeechError *) error {}

// Synthesis started

- (void) onSpeakBegin {}

// Synthesis buffering progress

- (void) onBufferProgress:(int) progress message:(NSString *)msg {}

// Synthesis playback progress

- (void) onSpeakProgress:(int) progress beginPos:(int)beginPos endPos:(int)endPos {}

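2. Speech dictation (example with UI)

IFlySpeechRecognizer is the dictation control without a UI, and IFlyRecognizerView is the control with a built-in UI. The example below uses the control with a UI, IFlyRecognizerView. It is written in ViewController.m and can be tested directly.

Example usage: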

//

// ViewController.m

// 文字语音读写

//

// Created by User on 2017/7/7.

// Copyright © 2017年 User. All rights reserved.

//

#import "ViewController.h"

#import "IATConfig.h"

#import "ISRDataHelper.h"

#import "iflyMSC/IFlyMSC.h"

@interface ViewController ()<IFlyRecognizerViewDelegate>

@property (nonatomic, strong) IFlySpeechRecognizer *iFlySpeechRecognizer; // speech-to-text without UI

// iFlytek's built-in recognition view

@property (nonatomic, strong) IFlyRecognizerView *iflyRecognizerView; // recognition control with view

@property (nonatomic, strong) NSMutableArray *resultARR;

@end

@implementation ViewController

#pragma mark - Initialization

/**

initialize recognition control and set recognition params

**/

-(void)initRecognizer

{

NSLog(@"%s",__func__);

//recognition singleton with view

if (_iflyRecognizerView == nil) {

_iflyRecognizerView= [[IFlyRecognizerView alloc] initWithCenter:self.view.center];

}

[_iflyRecognizerView setParameter:@"" forKey:[IFlySpeechConstant PARAMS]];

//set recognition domain

[_iflyRecognizerView setParameter:@"iat" forKey:[IFlySpeechConstant IFLY_DOMAIN]];

_iflyRecognizerView.delegate = self;

if (_iflyRecognizerView != nil) {

IATConfig *instance = [IATConfig sharedInstance];

//set timeout of recording

[_iflyRecognizerView setParameter:instance.speechTimeout forKey:[IFlySpeechConstant SPEECH_TIMEOUT]];

//set VAD timeout of end of speech(EOS)

[_iflyRecognizerView setParameter:instance.vadEos forKey:[IFlySpeechConstant VAD_EOS]];

//set VAD timeout of beginning of speech(BOS)

[_iflyRecognizerView setParameter:instance.vadBos forKey:[IFlySpeechConstant VAD_BOS]];

//set network timeout

[_iflyRecognizerView setParameter:@"20000" forKey:[IFlySpeechConstant NET_TIMEOUT]];

//set sample rate, 16K as a recommended option

[_iflyRecognizerView setParameter:instance.sampleRate forKey:[IFlySpeechConstant SAMPLE_RATE]];

//set language on the recognizer view

[_iflyRecognizerView setParameter:instance.language forKey:[IFlySpeechConstant LANGUAGE]];

//set accent on the recognizer view

[_iflyRecognizerView setParameter:instance.accent forKey:[IFlySpeechConstant ACCENT]];

//set whether or not to show punctuation in recognition results

[_iflyRecognizerView setParameter:instance.dot forKey:[IFlySpeechConstant ASR_PTT]];

if([[IATConfig sharedInstance].language isEqualToString:@"en_us"]){

if([IATConfig sharedInstance].isTranslate){

[self translation:NO];

}

}

else{

if([IATConfig sharedInstance].isTranslate){

[self translation:YES];

}

}

}

}

-(void)translation:(BOOL) langIsZh

{

if ([IATConfig sharedInstance].haveView == NO) {

[_iFlySpeechRecognizer setParameter:@"1" forKey:[IFlySpeechConstant ASR_SCH]];

if(langIsZh){

[_iFlySpeechRecognizer setParameter:@"cn" forKey:@"orilang"];

[_iFlySpeechRecognizer setParameter:@"en" forKey:@"translang"];

}

else{

[_iFlySpeechRecognizer setParameter:@"en" forKey:@"orilang"];

[_iFlySpeechRecognizer setParameter:@"cn" forKey:@"translang"];

}

[_iFlySpeechRecognizer setParameter:@"translate" forKey:@"addcap"];

[_iFlySpeechRecognizer setParameter:@"its" forKey:@"trssrc"];

}

else{

[_iflyRecognizerView setParameter:@"1" forKey:[IFlySpeechConstant ASR_SCH]];

if(langIsZh){

[_iflyRecognizerView setParameter:@"cn" forKey:@"orilang"];

[_iflyRecognizerView setParameter:@"en" forKey:@"translang"];

}

else{

[_iflyRecognizerView setParameter:@"en" forKey:@"orilang"];

[_iflyRecognizerView setParameter:@"cn" forKey:@"translang"];

}

[_iflyRecognizerView setParameter:@"translate" forKey:@"addcap"];

[_iflyRecognizerView setParameter:@"its" forKey:@"trssrc"];

}

}

- (void)viewDidLoad {

[super viewDidLoad];

_resultARR = [[NSMutableArray alloc]init];

if(_iflyRecognizerView == nil)

{

[self initRecognizer ];

}

//Set microphone as audio source

[_iflyRecognizerView setParameter:IFLY_AUDIO_SOURCE_MIC forKey:@"audio_source"];

//Set result type

[_iflyRecognizerView setParameter:@"plain" forKey:[IFlySpeechConstant RESULT_TYPE]];

//Set the file name of the saved recording, which is generated in the SDK's local storage path (library/cache by default).

[_iflyRecognizerView setParameter:@"asr.pcm" forKey:[IFlySpeechConstant ASR_AUDIO_PATH]];

UIButton *btn = [UIButton buttonWithType:UIButtonTypeCustom];

btn.backgroundColor = [UIColor redColor];

btn.frame = CGRectMake(200, 300, 100, 60);

[btn addTarget:self action:@selector(btnClick1:) forControlEvents:UIControlEventTouchUpInside];

btn.selected = NO;

[self.view addSubview:btn];

[self setExclusiveTouchForButtons:self.view];

}

-(void)setExclusiveTouchForButtons:(UIView *)myView

{

for (UIView * button in [myView subviews]) {

if([button isKindOfClass:[UIButton class]])

{

[((UIButton *)button) setExclusiveTouch:YES];

}

else if ([button isKindOfClass:[UIView class]])

{

[self setExclusiveTouchForButtons:button];

}

}

}

- (void)btnClick1:(UIButton *)btn{

[_resultARR removeAllObjects];

//start the recognition service

[_iflyRecognizerView start];

}

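/*!

* Callback that returns recognition results

*

* @param resultArray recognition results; the first element of the NSArray is an NSDictionary whose keys are the recognized text and whose values (sc) are the confidence scores

* @param isLast -[out] whether this is the last result

*/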

- (void)onResult:(NSArray *)resultArray isLast:(BOOL) isLast{

NSMutableString *resultString = [[NSMutableString alloc] init];

NSDictionary *dic = [resultArray objectAtIndex:0];

NSLog(@"%@",dic);

for (NSString *key in dic) {

[resultString appendFormat:@"%@",key];

}

NSString * resultFromJson = nil;

if([IATConfig sharedInstance].isTranslate){

NSDictionary *resultDic = [NSJSONSerialization JSONObjectWithData: //The result type must be utf8, otherwise an unknown error will happen.

[resultString dataUsingEncoding:NSUTF8StringEncoding] options:kNilOptions error:nil];

if(resultDic != nil){

NSDictionary *trans_result = [resultDic objectForKey:@"trans_result"];

NSString *src = [trans_result objectForKey:@"src"];

NSLog(@"src=%@",src);

resultFromJson = [NSString stringWithFormat:@"%@\nsrc:%@",resultString,src];

}

}

else{

resultFromJson = [NSString stringWithFormat:@"%@",resultString];//;[ISRDataHelper stringFromJson:resultString];

}

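//resultFromJson stays nil if the translation JSON fails to parse, and adding nil to an NSMutableArray throws

if (resultFromJson != nil) {

[_resultARR addObject:resultFromJson];

}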

}

/*!

* Callback invoked when recognition ends

*

* @param error error code reported when recognition ends

*/

- (void)onError: (IFlySpeechError *) error{

NSLog(@"errorCode:%d",[error errorCode]);

NSString *resultStr = [_resultARR componentsJoinedByString:@" "];

UIAlertView *alertV = [[UIAlertView alloc]initWithTitle:@"" message:resultStr delegate:self cancelButtonTitle:@"Cancel" otherButtonTitles:nil];

[alertV show];

}

- (void)didReceiveMemoryWarning {

[super didReceiveMemoryWarning];

// Dispose of any resources that can be recreated.

}

@end
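
The translation branch of onResult:isLast: above passes nil for the parse error and assumes trans_result is a dictionary. As a sketch, that parsing could be moved into a small defensive helper like the one below. SourceTextFromTranslationJSON is a hypothetical name, and the trans_result / src layout is taken from the code above, not from the SDK documentation.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the SDK): returns the string stored at
// trans_result.src, or nil if the JSON is malformed or the keys are missing.
static NSString *SourceTextFromTranslationJSON(NSString *json) {
    NSData *data = [json dataUsingEncoding:NSUTF8StringEncoding];
    if (data == nil) {
        // JSONObjectWithData: raises on nil data, so bail out early
        return nil;
    }
    NSError *error = nil;
    id root = [NSJSONSerialization JSONObjectWithData:data options:0 error:&error];
    if (![root isKindOfClass:[NSDictionary class]]) {
        return nil; // parse failure or unexpected top-level type
    }
    id transResult = ((NSDictionary *)root)[@"trans_result"];
    if (![transResult isKindOfClass:[NSDictionary class]]) {
        return nil;
    }
    id src = ((NSDictionary *)transResult)[@"src"];
    return [src isKindOfClass:[NSString class]] ? (NSString *)src : nil;
}
```

Inside onResult:isLast:, the helper would be called with resultString, and the result appended only when it is non-nil.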

Result screenshot:


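Finally, to improve recognition accuracy, add the following code at app startup

#pragma mark---------- Words that speech-to-text should recognize precisely ----------

- (void)setWordsIUsed{

//user word list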

#define USERWORDS @"{\"userword\":[{\"name\":\"iflytek\",\"words\":[\"指动生活\",\"指动\",\"生活\",\"温馨提示\",\"温馨\",\"提示\",\"家园活动\",\"家园\",\"活动\",\"智能门禁\",\"智能\",\"门禁\",\"投诉报修\",\"投诉\",\"报修\",\"物业缴费\",\"交费\",\"缴费\"]}]}"

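//create the uploader (assumes an IFlyDataUploader instance variable named _uploader is declared in this class)

_uploader = [[IFlyDataUploader alloc] init];

IFlyUserWords *iFlyUserWords = [[IFlyUserWords alloc] initWithJson:USERWORDS ];

//set upload parameters

[_uploader setParameter:@"uup" forKey:@"sub"];

[_uploader setParameter:@"userword" forKey:@"dtt"];

//start the upload (note the different name argument)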

[_uploader uploadDataWithCompletionHandler:^(NSString * grammerID, IFlySpeechError *error){

//

}name: @"userwords" data:[iFlyUserWords toString]];

}
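
The USERWORDS macro above escapes every quote by hand, which is easy to get wrong when the word list changes. As a sketch, the same JSON can be built from an NSArray with NSJSONSerialization. UserWordsJSON is a hypothetical helper name; the userword / name / words layout is copied from the macro above.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds {"userword":[{"name":<name>,"words":[...]}]}
// as a JSON string. Returns nil on nil input or if serialization fails.
static NSString *UserWordsJSON(NSString *name, NSArray<NSString *> *words) {
    if (name == nil || words == nil) {
        return nil; // collection literals raise on nil values
    }
    NSDictionary *payload = @{ @"userword": @[ @{ @"name": name, @"words": words } ] };
    NSError *error = nil;
    NSData *data = [NSJSONSerialization dataWithJSONObject:payload options:0 error:&error];
    if (data == nil) {
        return nil;
    }
    return [[NSString alloc] initWithData:data encoding:NSUTF8StringEncoding];
}
```

The returned string could then be passed to initWithJson: in place of the USERWORDS macro, for example UserWordsJSON(@"iflytek", @[@"智能门禁", @"投诉报修"]).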
