Installing Hadoop with Docker

1 Installing Hadoop from a prebuilt Docker image

## 1. Search for Hadoop images

[root@hadoop docker]# docker search hadoop

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

sequenceiq/hadoop-docker An easy way to try Hadoop 661 [OK]

uhopper/hadoop Base Hadoop image with dynamic configuration… 103 [OK]

harisekhon/hadoop Apache Hadoop (HDFS + Yarn, tags 2.2 - 2.8) 67 [OK]

bde2020/hadoop-namenode Hadoop namenode of a hadoop cluster 52 [OK]

bde2020/hadoop-datanode Hadoop datanode of a hadoop cluster 39 [OK]

bde2020/hadoop-base Base image to create hadoop cluster. 20 [OK]

uhopper/hadoop-namenode Hadoop namenode 11 [OK]

bde2020/hadoop-nodemanager Hadoop node manager docker image. 10 [OK]

bde2020/hadoop-resourcemanager Hadoop resource manager docker image. 9 [OK]

uhopper/hadoop-datanode Hadoop datanode 9 [OK]

## 2. Pull the Hadoop image

[root@hadoop docker]# docker pull sequenceiq/hadoop-docker

Using default tag: latest

latest: Pulling from sequenceiq/hadoop-docker

Image docker.io/sequenceiq/hadoop-docker:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

b253335dcf03: Pulling fs layer

a3ed95caeb02: Pulling fs layer

69623ef05416: Pulling fs layer

8d2023764774: Pulling fs layer

0c3c0ff61963: Pulling fs layer

ff0696749bf6: Pulling fs layer

72accdc282f3: Pull complete

5298ddb3b339: Pull complete

f252bbba6bda: Pull complete

3984257f0553: Pull complete

26343a20fa29: Pull complete

## 3. Verify that the image was pulled

[root@hadoop docker]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

sequenceiq/hadoop-docker latest 5c3cc170c6bc 6 years ago 1.77GB

## 4. Create the master node

[root@hadoop docker]# docker run -it -p 2201:22 -p 8188:8088 -p 9000:9000 -p 50020:50020 -p 50010:50010 -p 50070:50070 -p 50075:50075 -p 50090:50090 -p 49707:49707 --name hadoop -d -h master sequenceiq/hadoop-docker

43c17d7c556a1e7ca9e6cfd7d91ebbde22db2f5fb223956bf670710d733d0979
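Long docker run lines like the one for the master node are easy to mistype, for instance by dropping the space before --name. The following is a minimal sketch, not part of the original walkthrough, that builds the -p flags from a list of mappings and prints the full command for review. The helper name build_port_flags and the MASTER_PORTS variable are hypothetical.

```shell
# Build "-p host:container" flags from a list of port mappings.
# build_port_flags is a hypothetical helper, not a Docker feature.
build_port_flags() {
    flags=""
    for mapping in "$@"; do
        # Separate flags with a single space, without a leading space
        flags="${flags:+$flags }-p $mapping"
    done
    printf '%s\n' "$flags"
}

# Port mappings used for the master container in this walkthrough
MASTER_PORTS="2201:22 8188:8088 9000:9000 50020:50020 50010:50010 50070:50070 50075:50075 50090:50090 49707:49707"

# Print the command instead of running it, so it can be checked first.
# $MASTER_PORTS is intentionally unquoted so it splits into one argument per mapping.
echo "docker run -it $(build_port_flags $MASTER_PORTS) --name hadoop -d -h master sequenceiq/hadoop-docker"
```

Once the printed command looks right, it can be pasted into the shell and run as usual.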

## 5. Create the slave1 node

[root@hadoop docker]# docker run -p 2202:22 --name hadoop1 -d -h slave1 sequenceiq/hadoop-docker

697e4f687e0502f2b7727bdee6f9861cd515c55c1b38d5e43f09c29acc7989f3

## 6. Create the slave2 node

[root@hadoop docker]# docker run -p 2203:22 --name hadoop2 -d -h slave2 sequenceiq/hadoop-docker

938f3a29ea7b9e99e02b0df4a448a75a9264d7d74c348dfc577fdbd01380e3e3

## Enter the container and check the Java JDK version

[root@hadoop docker]# docker exec -it hadoop bash

bash-4.1# java -version

java version "1.7.0_71"

Java(TM) SE Runtime Environment (build 1.7.0_71-b14)

Java HotSpot(TM) 64-Bit Server VM (build 24.71-b01, mixed mode)

2 Passwordless SSH

2.1 master node

## 1. Generate an SSH key pair on the master node

[root@hadoop docker]# docker exec -it hadoop bash

bash-4.1# /etc/init.d/sshd start

bash-4.1# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

fc:39:e8:f3:57:ee:40:c9:99:8c:95:16:a1:af:5f:69 root@master

The key's randomart image is:

+--[ RSA 2048]----+

| o. |

| . o |

| . + |

| . B + |

| S . O |

| o + .. |

| . = .oE |

| .. o.+. |

| .o..... |

+-----------------+

## 2. Write id_rsa.pub from /root/.ssh into authorized_keys (the > redirect overwrites any existing entries)

bash-4.1# cd /root/.ssh

bash-4.1# ls

authorized_keys config id_rsa id_rsa.pub

bash-4.1# cat id_rsa.pub > authorized_keys

bash-4.1# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAwOpTpijrGlJTnlFEBjIRq9m24KIQ9oc50javrRpWBqQxhUrjIuVQTaOqiW6Dpgo8zDVNRratkB+HnlNQ8q3L0kE+IvlZINrCijZAGksZJpgbyuhqHKdf8Tmdco90FhAENQc54pcCvpDCD4dukSuICN3533rXIBxJU7omHnTQMORo+AMyGDTWW7pNgNDQoC7iZsjE+GcpF9Aq2+joQqYwOOrTDmQ2HI6TFomnM02PERlwZkbM/5ELZsb616JPu9QMNuv8BDHgRF87PtzZEI1vBEDeNfBAc3/J5vuirlqqxgS+zk5DFiWD0jcstJG1hTX5qRCXmvEyHMfE2kEtgkAXYQ== root@master

2.2 slave1 node

bash-4.1# /etc/init.d/sshd start

bash-4.1# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

a3:6c:f7:44:82:e8:a3:3f:49:49:01:ca:6e:81:5c:c6 root@slave1

The key's randomart image is:

+--[ RSA 2048]----+

| o+ |

|+.oE. |

|o+ . |

|. . .. . |

| o .... S . |

|. .o. . + |

| .o.+ . . |

| .oo . o |

| .... . |

+-----------------+

bash-4.1# cd /root/.ssh

bash-4.1# cat id_rsa.pub > authorized_keys

bash-4.1# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuT0X48XmXIzJ3toiSd0tmWI1ABBgbBiILUgfTqZVdDlBGMq/bywoyJcp80cUI/k2DL4aMWHTypc776/1bMZ3/dmYnFdVWRJ2PzxJ6H2h32QXXXqAuCzVks9BU7Q58guEGoiMeWHHyIffyrcIoiS7rD0tuqgoQnFvQPQBWLVrbxyi1BxBOLeF0jsPdNmc5e4Y8ZaOjJDBvBUmSaJ2zRE6/fxQk58Xe01e4JnnwovDdL+rZB5oue6rvUwXoEiZlGkKkmr2b7xw6UCi/mnYS+wYa2LolhuMTilalkRYEtRYXZWz3VrjdlDGmuCeccUoP+r44f7yk2BTv1OIyYPpDS0HSQ== root@slave1

2.3 slave2 node

bash-4.1# /etc/init.d/sshd start

bash-4.1# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

86:c1:25:7f:2e:64:43:cd:36:57:a2:07:6a:cc:17:6d root@slave2

The key's randomart image is:

+--[ RSA 2048]----+

| . ooo.... |

| . B .=+E. |

| o X.++. |

| * = . |

| . S . |

| . . |

| |

| |

| |

+-----------------+

bash-4.1# cd /root/.ssh

bash-4.1# cat id_rsa.pub > authorized_keys

bash-4.1# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAowCUkXu8rkzpSIi/E53Zv3qYeIItuIO72MS+m/fMsHO7edKC8XjzB4DB406iPF6q2SobaAr8x++txLSq0bnTS4MHhY0GqUICuv7R0F5js1G5jV4cSMpdHfsIb9skz3LMjTXplxXR8md7TCmeS7Kmuf9FNmCXQIuWAdnPuUVxk12b4hFeaNyCi/yktuBy5Tqyi+b2Xp9cGXxz/dl32aHxBf9pkoEUJcej1o/g+hgAZ7c+9mv9aiLhbSI6ZPUGT1F3WBEq9tCx1rkbc5nQfjTCC3/K5btLvnkaaSUShEB4tUleh06LeuuTmCxNZ7vNBoVAb1cjyGvqdJZ6hHqHHddnQQ== root@slave2

2.4 Copy authorized_keys from the three containers to the host and merge them

## 1. Copy to the host

[root@localhost hadoop]# docker cp hadoop:/root/.ssh/authorized_keys ./authorized_keys_master

[root@localhost hadoop]# docker cp hadoop1:/root/.ssh/authorized_keys ./authorized_keys_slave1

[root@localhost hadoop]# docker cp hadoop2:/root/.ssh/authorized_keys ./authorized_keys_slave2

[root@localhost hadoop]# ls

authorized_keys_master authorized_keys_slave1 authorized_keys_slave2

## 2. Merge

[root@localhost hadoop]# touch authorized_keys

[root@localhost hadoop]# ls

authorized_keys authorized_keys_master authorized_keys_slave1 authorized_keys_slave2

[root@localhost hadoop]# cat authorized_keys_master authorized_keys_slave1 authorized_keys_slave2 > authorized_keys

[root@localhost hadoop]# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA7fQwylN9mv8IpKnUtHUF05DPQ8HxLwzUzS/spTIXmYZzvb8Z3SMtEUlNOymwkCRtglwyHJmy2MuhD57Kq56rRN4yvtPvkJ6KKGkpIgI24DDIsORuI9b9OHu2rYFEBTV9Drn0KrJlFfdYaWfhvCBpabvWPWKD77K1qc3FYsoh6jiLthVeM4a3qZTfObjvPsF8rONobxDltiqDAVKIyClzUQAKfgYY7aYO2YaHWHhxNP0C9+8dqCoKLPIXEUnre0SVGNe10/8hvmCEm8gk8vsDSetwbiEeDalu7WuCtcAn2L8xW+o0+Ko28bocuMy8GT/iz7/O+8aTjcGkuc8jxwMT/w== root@master

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuT0X48XmXIzJ3toiSd0tmWI1ABBgbBiILUgfTqZVdDlBGMq/bywoyJcp80cUI/k2DL4aMWHTypc776/1bMZ3/dmYnFdVWRJ2PzxJ6H2h32QXXXqAuCzVks9BU7Q58guEGoiMeWHHyIffyrcIoiS7rD0tuqgoQnFvQPQBWLVrbxyi1BxBOLeF0jsPdNmc5e4Y8ZaOjJDBvBUmSaJ2zRE6/fxQk58Xe01e4JnnwovDdL+rZB5oue6rvUwXoEiZlGkKkmr2b7xw6UCi/mnYS+wYa2LolhuMTilalkRYEtRYXZWz3VrjdlDGmuCeccUoP+r44f7yk2BTv1OIyYPpDS0HSQ== root@slave1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAowCUkXu8rkzpSIi/E53Zv3qYeIItuIO72MS+m/fMsHO7edKC8XjzB4DB406iPF6q2SobaAr8x++txLSq0bnTS4MHhY0GqUICuv7R0F5js1G5jV4cSMpdHfsIb9skz3LMjTXplxXR8md7TCmeS7Kmuf9FNmCXQIuWAdnPuUVxk12b4hFeaNyCi/yktuBy5Tqyi+b2Xp9cGXxz/dl32aHxBf9pkoEUJcej1o/g+hgAZ7c+9mv9aiLhbSI6ZPUGT1F3WBEq9tCx1rkbc5nQfjTCC3/K5btLvnkaaSUShEB4tUleh06LeuuTmCxNZ7vNBoVAb1cjyGvqdJZ6hHqHHddnQQ== root@slave2
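Concatenating with cat works, but re-running the merge or copying a file twice leaves duplicate entries. A minimal sketch of a merge that skips blank lines and exact duplicates follows. The function name merge_keys is hypothetical, and the chmod assumes sshd runs with its usual strict permission checks.

```shell
# Merge public-key files into one authorized_keys file.
# Usage: merge_keys OUTPUT INPUT...   (OUTPUT must not be one of the inputs)
merge_keys() {
    out=$1
    shift
    # NF skips blank lines; !seen[$0]++ keeps only the first copy of each line
    awk 'NF && !seen[$0]++' "$@" > "$out"
    # Keep the file private to its owner (assumption: sshd enforces strict modes)
    chmod 600 "$out"
}
```

With the files copied above, the call would be `merge_keys authorized_keys authorized_keys_master authorized_keys_slave1 authorized_keys_slave2`.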

2.5 Copy the merged authorized_keys file from the host into each of the three containers

[root@localhost hadoop]# docker cp ./authorized_keys hadoop:/root/.ssh/authorized_keys

[root@localhost hadoop]# docker cp ./authorized_keys hadoop1:/root/.ssh/authorized_keys

[root@localhost hadoop]# docker cp ./authorized_keys hadoop2:/root/.ssh/authorized_keys

## Verify inside a container

bash-4.1# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAwOpTpijrGlJTnlFEBjIRq9m24KIQ9oc50javrRpWBqQxhUrjIuVQTaOqiW6Dpgo8zDVNRratkB+HnlNQ8q3L0kE+IvlZINrCijZAGksZJpgbyuhqHKdf8Tmdco90FhAENQc54pcCvpDCD4dukSuICN3533rXIBxJU7omHnTQMORo+AMyGDTWW7pNgNDQoC7iZsjE+GcpF9Aq2+joQqYwOOrTDmQ2HI6TFomnM02PERlwZkbM/5ELZsb616JPu9QMNuv8BDHgRF87PtzZEI1vBEDeNfBAc3/J5vuirlqqxgS+zk5DFiWD0jcstJG1hTX5qRCXmvEyHMfE2kEtgkAXYQ== root@master

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAxdKMpxzEHotmob4Oi12G6pGoF+z39b0zZF3mWwDMtwX6dNpKTqeoaFDmkKUnov7PEGYKPehT1rPSv0pDxrNcMylsNGYsNt2jhcR0k5YyIAcJKjjYzM3ifaHj4Pce7PtLyr9drqH39cES336xHiawfzKppMKa6tsbEUI5d4YhYRMAGhbDdDw6vVte9NmbPkos4yK272dlAQjwep5TiE63D3OwVP4TAdum99PhVim63ZO0RJYf8es5plAay33OmEUw1WeeXB/0EL/symE/eWlIXtDUbz2c1KHi8yviNB8qDb2YOsRTV8vO1OiRF/nXv6EKorDaeIYvwtDKyxT5ieENEQ== root@slave1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAr4EKb4wjGwb1sKUX3iZ92T38ZqentL83Yok4tJ8k0UBdCCbOO7qgTGkZwg7gIhO0LBZ9YQDk6uR5hBJ8f3UN38l7MyJM9yYZl65dh04ZXqs0wRUMELYMBzTBKbIFdU9uwy07c8YMJbBvFBnBlzA764rFzGivFgoP4Kk9iIEFO/YQ/EwHyDpFhfRUVPEhjiJK4x7zS0sZAlhSSgk05fKQSw6qlXVr4JOGn5TWU7qylaj9lKABR9tI1WH4WoGx4zE8KDjgdUhtvygSmS4LO8JBOn1JPnqQq8PNWRI0xjWAohw3GnmUu54nKN5cjJjHvZPeXt0Y7B8YtI0+/DCQglo1zw== root@slave2

3 Look up the container IPs and map them to hostnames in /etc/hosts

bash-4.1# vi /etc/hosts

...

172.17.0.5 master

172.17.0.6 slave1

172.17.0.7 slave2
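Editing /etc/hosts by hand in three containers invites typos and duplicate lines. Below is a minimal sketch of an idempotent alternative: it appends a mapping only if the hostname is not already present. The function name add_host_entry is hypothetical, and the IP addresses are the ones shown above, which will differ on other machines.

```shell
# Append "IP HOSTNAME" to a hosts file unless HOSTNAME is already mapped.
# Usage: add_host_entry HOSTS_FILE IP HOSTNAME
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # Look for the hostname in any column after the IP, ignoring comment lines
    if ! awk -v n="$name" '
            $1 ~ /^#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Example calls inside a container (addresses taken from this walkthrough):
#   add_host_entry /etc/hosts 172.17.0.5 master
#   add_host_entry /etc/hosts 172.17.0.6 slave1
#   add_host_entry /etc/hosts 172.17.0.7 slave2
#
# A container's IP can be read on the host with:
#   docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' hadoop
```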

Confirm that the hostname mappings and passwordless SSH both work:

bash-4.1# ssh master

-bash-4.1# exit

logout

bash-4.1# ssh slave1

-bash-4.1# exit

logout

bash-4.1# ssh slave2

-bash-4.1# exit

logout

bash-4.1#

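4 Edit the Hadoop configuration files

Note: all three containers in the cluster need these changes. Edit the files on master first, then copy them to the slaves.

Use find / -name hadoop-env.sh to locate the directory that contains hadoop-env.sh: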

bash-4.1# find / -name hadoop-env.sh

/usr/local/hadoop/etc/hadoop/hadoop-env.sh

bash-4.1# cd /usr/local/hadoop/etc/hadoop/

bash-4.1# ls

capacity-scheduler.xml hadoop-env.sh httpfs-log4j.properties kms-site.xml mapred-site.xml.template yarn-site.xml

configuration.xsl hadoop-metrics.properties httpfs-signature.secret log4j.properties slaves

container-executor.cfg hadoop-metrics2.properties httpfs-site.xml mapred-env.cmd ssl-client.xml.example

core-site.xml hadoop-policy.xml kms-acls.xml mapred-env.sh ssl-server.xml.example

core-site.xml.template hdfs-site.xml kms-env.sh mapred-queues.xml.template yarn-env.cmd

hadoop-env.cmd httpfs-env.sh kms-log4j.properties mapred-site.xml yarn-env.sh

bash-4.1#

4.1 hadoop-env.sh

## Java is already installed in the container, so keep using the image's default JDK

bash-4.1# vi hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/java/default

export HADOOP_PREFIX=/usr/local/hadoop

export HADOOP_HOME=/usr/local/hadoop

4.2 core-site.xml

bash-4.1# vi core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.17.0.5:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/hdpdata</value>

</property>

</configuration>

4.3 hdfs-site.xml

# Set the replication factor; it must be less than or equal to the number of slaves

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/hdfs/namenode</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/hdfs/datanode</value>

<final>true</final>

</property>

</configuration>
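The rule stated above (replication no greater than the number of slaves) can be checked before starting the cluster. This is a minimal sketch, assuming the worker hostnames are listed one per line in the slaves file that appears in the configuration directory listing. The function name check_replication is hypothetical.

```shell
# Compare a replication factor with the number of hosts in a slaves file.
# Usage: check_replication REPLICATION SLAVES_FILE
check_replication() {
    replication=$1
    slaves_file=$2
    # Count lines that are neither comments nor blank; "|| true" keeps a
    # zero count from being treated as a command failure
    nodes=$(grep -v '^[[:space:]]*#' "$slaves_file" | grep -c '[^[:space:]]' || true)
    if [ "$replication" -le "$nodes" ]; then
        echo "ok: replication $replication <= $nodes worker(s)"
    else
        echo "too high: replication $replication > $nodes worker(s)"
        return 1
    fi
}
```

For the setup in this walkthrough, the call would look like `check_replication 1 /usr/local/hadoop/etc/hadoop/slaves`.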

4.4 mapred-site.xml

## Framework that MapReduce jobs run on

bash-4.1# vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>172.17.0.5:9000</value>

</property>

</configuration>

4.5 yarn-site.xml

bash-4.1# vi yarn-site.xml

<configuration>

<!-- Address of the ResourceManager (the master node); keep it consistent with the master entry in /etc/hosts -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>172.17.0.2</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

4.6 Format the NameNode on master

bash-4.1# cd /usr/local/hadoop/bin

bash-4.1# ./hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

21/08/09 01:30:05 INFO namenode.NameNode: STARTUP_MSG:

21/08/09 01:30:14 INFO common.Storage: Storage directory /usr/local/hadoop-2.7.0/hdpdata/dfs/name has been successfully formatted.

21/08/09 01:30:14 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

21/08/09 01:30:14 INFO util.ExitUtil: Exiting with status 0

21/08/09 01:30:14 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/172.17.0.2

************************************************************/

bash-4.1# cd /usr/local/hadoop/sbin

## Start Hadoop

bash-4.1# ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: namenode running as process 129. Stop it first.

localhost: datanode running as process 220. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 399. Stop it first.

starting yarn daemons

resourcemanager running as process 549. Stop it first.

localhost: nodemanager running as process 642. Stop it first.

bash-4.1# jps

220 DataNode

549 ResourceManager

399 SecondaryNameNode

129 NameNode

2058 Jps

642 NodeManager

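5 How to tell whether the Hadoop cluster started successfully

1. If the cluster started successfully, running jps in the hadoop container lists the Hadoop daemons (NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager).

2. The Hadoop web UI is reachable on port 50070.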

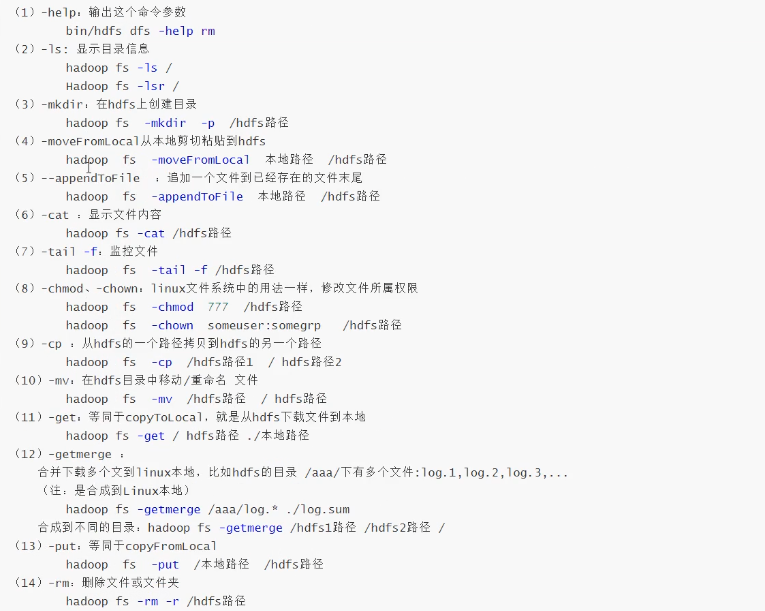

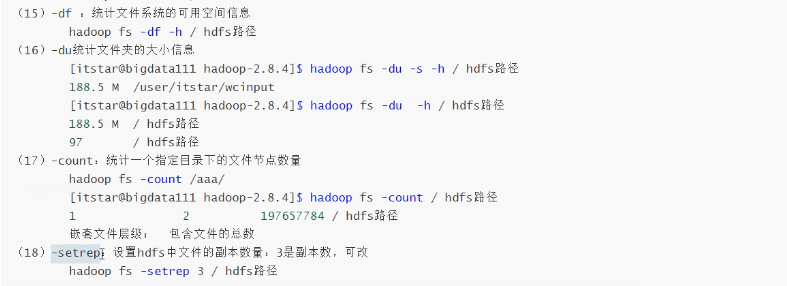
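The jps check can also be scripted rather than read by eye. The following is a minimal sketch that reads jps output on standard input and reports any expected daemon that is missing. The function name check_daemons is hypothetical, and the daemon names are the ones from the jps output earlier in this walkthrough.

```shell
# Read `jps` output on stdin and verify that each named daemon is listed.
# Usage: jps | check_daemons NameNode DataNode ...
check_daemons() {
    jps_output=$(cat)
    missing=""
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the class name exactly so
        # that NameNode does not match SecondaryNameNode
        if ! printf '%s\n' "$jps_output" |
                awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
            missing="${missing:+$missing }$daemon"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing: $missing"
        return 1
    fi
    echo "all daemons running"
}
```

Inside the master container this would be run as `jps | check_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager`.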

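6 Running HDFS operations in the container

1) Fixing "bash: hdfs: command not found" when running hdfs dfs

a. The environment variables have not been configured; edit /etc/profile

vi /etc/profile

b. Add the following lines at the end of the file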

#hadoop

export HADOOP_HOME=/usr/local/hadoop

export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

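c. Reload the configuration file

source /etc/profile

d. Create a directory

hdfs dfs -mkdir -p /usr/root/input

e. Upload a file with hdfs dfs -put

hdfs dfs -put ./authorized_keys /usr/root/input

f. Download a file with hdfs dfs -get, which copies it from HDFS to the local Linux filesystem

hdfs dfs -get /a.txt /opt/data/

g. Other common commands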
