RAID (Redundant Arrays of Inexpensive Disks): a fault-tolerant array built from inexpensive disks.

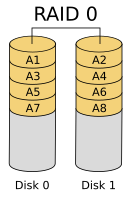

RAID-0 (striping, "stripe"): best performance

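RAID-0 works best when it is built from disks of the same model and capacity. The array is first divided into equal-sized chunks. When data is written to the RAID, each file is cut up according to the chunk size, and the pieces are placed on the disks in turn (in a two-disk array, alternately on the two disks). If disks of different capacities are used, then once the smaller disk is full, all further data goes onto the largest disk.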

[Figure: RAID-0 striping]

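RAID-0 is the fastest level, but it has no redundancy and no ability to correct errors: if a single disk fails, all the data in the array is lost. Its risk is the same as that of JBOD.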
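A minimal Python sketch of the round-robin striping described above, assuming equal-sized disks modeled as in-memory lists and a deliberately tiny chunk size so the result is easy to read (the array built later in this article uses 512K chunks). The function names stripe and unstripe are hypothetical. Reading the data back needs every disk, which is why losing one disk loses everything.

```python
def stripe(data: bytes, n_disks: int, chunk: int) -> list[list[bytes]]:
    """Cut data into chunk-sized pieces and deal them out to the disks in turn."""
    disks: list[list[bytes]] = [[] for _ in range(n_disks)]
    for offset in range(0, len(data), chunk):
        index = offset // chunk  # chunk number within the file
        disks[index % n_disks].append(data[offset:offset + chunk])
    return disks


def unstripe(disks: list[list[bytes]]) -> bytes:
    """Read the chunks back in the same round-robin order to rebuild the data."""
    out = bytearray()
    for row in range(max(len(d) for d in disks)):
        for disk in disks:
            if row < len(disk):
                out += disk[row]
    return bytes(out)


disks = stripe(b"ABCDEFGHIJKL", n_disks=2, chunk=4)
print(disks)            # [[b'ABCD', b'IJKL'], [b'EFGH']]
print(unstripe(disks))  # b'ABCDEFGHIJKL'
```

If either inner list is lost, the file cannot be reassembled, because every other chunk is missing.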

RAID-1 (mirroring, "mirror"): a complete backup

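This mode also calls for disks of the same capacity, ideally identical disks. If the capacities differ, the array size is set by the smallest disk, and the extra capacity on the larger disk is wasted.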

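When data is sent to a RAID-1 array, it is duplicated and a copy is written to each disk. Because the same data is written to several disks, the total amount of data stored grows while the usable data stays the same, so write performance drops, but data safety is the highest of all levels. If any one disk fails, no data is lost, because the mirror disk holds a complete copy.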

[Figure: RAID-1 mirroring]
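The following Python sketch models the RAID-1 behavior described above under simplifying assumptions: disks are in-memory dictionaries, and the class name Mirror and the helper mirror_capacity are hypothetical. Each logical write becomes one physical write per disk, and a read still succeeds after a disk is marked failed.

```python
class Mirror:
    """Toy RAID-1: every block is written to each healthy member disk."""

    def __init__(self, n_disks: int = 2) -> None:
        self.disks = [{} for _ in range(n_disks)]  # per disk: block number -> data
        self.failed = set()                        # indices of failed disks

    def write(self, block: int, data: bytes) -> None:
        # One logical write turns into one physical write per disk,
        # which is why RAID-1 write performance drops.
        for i, disk in enumerate(self.disks):
            if i not in self.failed:
                disk[block] = data

    def fail(self, index: int) -> None:
        self.failed.add(index)

    def read(self, block: int) -> bytes:
        # Every healthy disk holds a full copy, so any one of them can serve the read.
        for i, disk in enumerate(self.disks):
            if i not in self.failed:
                return disk[block]
        raise OSError("all mirror members have failed")


def mirror_capacity(sizes_gb: list[int]) -> int:
    # The smallest member sets the array size; extra space on larger disks is wasted.
    return min(sizes_gb)


m = Mirror(2)
m.write(0, b"important")
m.fail(0)                            # simulate losing one disk
print(m.read(0))                     # b'important'
print(mirror_capacity([500, 1000]))  # 500
```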

RAID 0+1 and RAID 1+0

(1) RAID 0+1: first combine two disks into a RAID-0 group, and set up two such groups; then combine the two RAID-0 groups into a RAID-1. That is RAID 0+1.

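Each RAID-0 group receives a full copy of the data, and because each group is a RAID-0, each disk in it stores only half of that copy. If either group fails, no data is lost, because the other group still holds a complete copy.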

(2) RAID 1+0

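Similar to RAID 0+1, but nested the other way round: the disks are first paired into RAID-1 mirrors, and the mirrors are then combined into a RAID-0 stripe. The hands-on example at the end of this article builds exactly this layout.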

[Figure: RAID 0+1 and RAID 1+0]
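To make the difference between the two nestings concrete, here is a small Python sketch that counts how many of the six possible two-disk failures each layout survives. It assumes four disks numbered 0 to 3, grouped as {0, 1} and {2, 3}: in RAID 0+1 each group is a RAID-0 stripe, in RAID 1+0 each group is a RAID-1 mirror. The function names are hypothetical.

```python
from itertools import combinations

DISKS = range(4)
GROUPS = [{0, 1}, {2, 3}]  # assumed layout: two groups of two disks


def survives_0_plus_1(failed: set) -> bool:
    # RAID 0+1: each group is a RAID-0 stripe, so one failed disk kills its group;
    # the outer mirror survives if at least one group has no failed disk.
    return any(not (group & failed) for group in GROUPS)


def survives_1_plus_0(failed: set) -> bool:
    # RAID 1+0: each group is a RAID-1 mirror; the outer stripe survives
    # only if every mirror still has at least one working disk.
    return all(group - failed for group in GROUPS)


for name, check in [("RAID 0+1", survives_0_plus_1), ("RAID 1+0", survives_1_plus_0)]:
    ok = sum(check(set(pair)) for pair in combinations(DISKS, 2))
    print(f"{name}: survives {ok} of 6 two-disk failures")
# RAID 0+1: survives 2 of 6 two-disk failures
# RAID 1+0: survives 4 of 6 two-disk failures
```

Under this layout RAID 1+0 tolerates more double failures: a second failure is fatal only if it hits the mirror partner of the first failed disk.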

RAID 5: balancing performance and data protection

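RAID-5 needs at least three disks. Like RAID-0, it writes data across the disks in rotation, but each stripe also gets a parity block, and the parity blocks are spread across all the disks. A parity block is computed (by XOR) from the data blocks of the same stripe on the other disks and is used to recover data when a disk fails.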

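When one disk fails, its data can be reconstructed from the remaining data and the parity. Writes are slower than on RAID-0 because parity must be computed and written, and redundancy is lower than on RAID-1 (only one disk's worth of capacity holds parity, and only a single disk failure can be tolerated). RAID-5 is therefore a compromise between RAID-0 and RAID-1.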

[Figure: RAID-5 with distributed parity]
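The parity idea can be sketched in Python with byte-wise XOR: the parity block is the XOR of the data blocks in a stripe, and XOR-ing all surviving blocks reproduces any single lost block. This sketch covers one stripe of a hypothetical four-disk array (three data blocks plus parity) and, unlike real RAID-5, keeps the parity in a fixed position instead of rotating it across the disks. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def raid5_stripe(data_blocks: list[bytes]) -> list[bytes]:
    # One stripe: the data blocks followed by their parity block.
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe_blocks: list[bytes], lost: int) -> bytes:
    # XOR of every surviving block (data and parity) yields the missing block.
    return xor_blocks([blk for i, blk in enumerate(stripe_blocks) if i != lost])


blocks = raid5_stripe([b"AAAA", b"BBBB", b"CCCC"])  # 3 data blocks + 1 parity = 4 disks
print(rebuild(blocks, lost=1))                      # b'BBBB'
```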

Software RAID and hardware RAID (the hands-on steps below build a Linux software RAID with mdadm)

First: view the disk information

```
[root@localhost ~]# fdisk -l

Disk /dev/sda: 21.5 GB, 21474836480 bytes
255 heads, 63 sectors/track, 2610 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x0008ea31

Device Boot Start End Blocks Id System
/dev/sda1 * 1 39 307200 83 Linux
Partition 1 does not end on cylinder boundary.
/dev/sda2 39 2358 18631680 83 Linux
/dev/sda3 2358 2611 2031616 82 Linux swap / Solaris

Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0xcfefe37c

Device Boot Start End Blocks Id System
/dev/sdb1 1 125 1004031 83 Linux
/dev/sdb2 126 253 1028160 83 Linux
/dev/sdb4 254 1305 8450190 5 Extended
/dev/sdb5 254 381 1028128+ 83 Linux
/dev/sdb6 382 509 1028128+ 83 Linux
/dev/sdb7 510 637 1028128+ 83 Linux
/dev/sdb8 638 765 1028128+ 83 Linux
/dev/sdb9 766 893 1028128+ 83 Linux
```

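Here you can see that I have two disks. The second one is already partitioned to make building a software RAID easy: /dev/sdb5 to /dev/sdb9 are five partitions of the same size, about 1000 MB each. You can create five equal partitions yourself with the following commands: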

```
[root@localhost ~]# fdisk /dev/sdb
Command (m for help): n
~
~
Command (m for help): w
[root@localhost ~]# partprobe
```

For the detailed steps, search online for the Linux partitioning commands (fdisk) and how to use them.

Second: create the RAID

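```
[root@localhost ~]# mdadm --create --auto=yes /dev/md0 --level=5 --raid-devices=4 --spare-devices=1 /dev/sdb{5,6,7,8,9}
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
```

Here --level=5 selects RAID-5, --raid-devices=4 makes four of the partitions active members, and --spare-devices=1 keeps the fifth one (/dev/sdb9) as a hot spare.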

Third: view the detailed RAID information

```
[root@localhost ~]# mdadm --detail /dev/md0
/dev/md0:
Version : 1.2
Creation Time : Fri Sep 12 08:36:11 2014
Raid Level : raid5
Array Size : 3082752 (2.94 GiB 3.16 GB)
Used Dev Size : 1027584 (1003.67 MiB 1052.25 MB)
Raid Devices : 4
Total Devices : 5
Persistence : Superblock is persistent
Update Time : Fri Sep 12 08:36:19 2014
State : clean
Active Devices : 4
Working Devices : 5
Failed Devices : 0
Spare Devices : 1
Layout : left-symmetric
Chunk Size : 512K
Name : localhost.localdomain:0 (local to host localhost.localdomain)
UUID : 35abd3a9:5290557a:0a30ef54:f26b0c58
Events : 18

Number Major Minor RaidDevice State
0 8 21 0 active sync /dev/sdb5
1 8 22 1 active sync /dev/sdb6
2 8 23 2 active sync /dev/sdb7
5 8 24 3 active sync /dev/sdb8
4 8 25 - spare /dev/sdb9
```
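The sizes reported above can be checked by hand. Sizes are in KiB: the four active members each contribute 1027584 KiB (Used Dev Size), one member's worth goes to parity, and the hot spare adds nothing until it replaces a failed disk. A minimal Python check of that arithmetic (the helper name raid5_array_kib is hypothetical):

```python
def raid5_array_kib(active_devices: int, used_dev_kib: int) -> int:
    """Usable RAID-5 size: one member's worth of space holds parity."""
    if active_devices < 3:
        raise ValueError("RAID-5 needs at least 3 active devices")
    # Hot spares are not counted: they add no capacity until they replace a member.
    return (active_devices - 1) * used_dev_kib


size_kib = raid5_array_kib(active_devices=4, used_dev_kib=1027584)
print(size_kib)                          # 3082752, the reported Array Size
print(round(size_kib / 1024 / 1024, 2))  # 2.94 (GiB)
```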

Summary of the RAID-5 array:

```
[root@localhost ~]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdb8[5] sdb9[4](S) sdb7[2] sdb6[1] sdb5[0]
      3082752 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
```
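In the status line, [4/4] means the array wants 4 active members and 4 are working, each U in [UUUU] is a member that is up (a failed or missing one shows as _), and (S) after sdb9 marks the hot spare; a failed member would be marked (F). The Python sketch below parses those two lines. It is a simplified, hypothetical parser (array_health is not an existing tool) that assumes it is given exactly one array's header and status line, using the text shown above as sample data.

```python
import re

# Sample input: the md0 lines from the /proc/mdstat output above.
MDSTAT = (
    "md0 : active raid5 sdb8[5] sdb9[4](S) sdb7[2] sdb6[1] sdb5[0]\n"
    "      3082752 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]\n"
)


def array_health(text: str) -> dict:
    """Report spares, faulty members and degradation for one md array (simplified)."""
    header, status = text.splitlines()[:2]
    # Members look like "sdb9[4]", optionally followed by "(S)" (spare) or "(F)" (faulty).
    members = re.findall(r"(\w+)\[\d+\](\([SF]\))?", header)
    match = re.search(r"\[(\d+)/(\d+)\] \[([U_]+)\]", status)
    wanted, working, flags = int(match.group(1)), int(match.group(2)), match.group(3)
    return {
        "spares": [dev for dev, flag in members if flag == "(S)"],
        "faulty": [dev for dev, flag in members if flag == "(F)"],
        "degraded": working < wanted or "_" in flags,
    }


print(array_health(MDSTAT))
# {'spares': ['sdb9'], 'faulty': [], 'degraded': False}
```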

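Fourth: format the RAID with a filesystem and mount it. Note that from here on the commands use /dev/md127 instead of /dev/md0: an array that is reassembled (for example after a reboot) without an entry in /etc/mdadm.conf is typically given the name md127. Use whichever name cat /proc/mdstat shows on your system.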

```
[root@localhost ~]# mkfs -t ext4 /dev/md127
mke2fs 1.41.12 (17-May-2010)
Filesystem label=
OS type: Linux
Block size=4096 (log=2)
Fragment size=4096 (log=2)
Stride=128 blocks, Stripe width=384 blocks
192768 inodes, 770688 blocks
38534 blocks (5.00%) reserved for the super user
First data block=0
Maximum filesystem blocks=792723456
24 block groups
32768 blocks per group, 32768 fragments per group
8032 inodes per group
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912

Writing inode tables: done
Creating journal (16384 blocks): done
Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 36 mounts or
180 days, whichever comes first. Use tune2fs -c or -i to override.
```
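mke2fs picked up the RAID geometry on its own (Stride=128 blocks, Stripe width=384 blocks). These values follow from the 512K chunk size, the 4096-byte filesystem block size and the three data disks of the four-disk RAID-5. The Python sketch below (the helper name ext4_raid_geometry is hypothetical) reproduces them and also checks that 770688 blocks of 4 KiB equal the 3082752 KiB array size.

```python
def ext4_raid_geometry(chunk_kib: int, block_kib: int, data_disks: int) -> tuple[int, int]:
    """Return (stride, stripe width) in filesystem blocks for a striped array."""
    stride = chunk_kib // block_kib     # filesystem blocks per RAID chunk
    stripe_width = stride * data_disks  # filesystem blocks per full stripe of data
    return stride, stripe_width


# 4-disk RAID-5: one disk's worth of each stripe is parity, so 3 disks hold data.
print(ext4_raid_geometry(chunk_kib=512, block_kib=4, data_disks=3))  # (128, 384)
print(770688 * 4)  # 3082752 (KiB): filesystem blocks x 4 KiB = md Array Size
```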

Mount the RAID:

```
[root@localhost ~]# mkdir /mnt/raid
[root@localhost ~]# mount /dev/md127 /mnt/raid
```

Fifth: write some files to the array and check with df; you can see that the RAID is ready to use.

```
[root@localhost ~]# df
Filesystem 1K-blocks Used Available Use% Mounted on
/dev/sda2 18339256 2860964 14546708 17% /
tmpfs 506176 80 506096 1% /dev/shm
/dev/sda1 297485 34645 247480 13% /boot
/dev/md127 3034320 71536 2808648 3% /mnt/raid
```

Sixth: simulate a disk failure and check whether RAID-5 keeps the files intact

```
[root@localhost ~]# mdadm --manage /dev/md127 --fail /dev/sdb5
mdadm: set /dev/sdb5 faulty in /dev/md127
```

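Then check whether the RAID is still running normally, for example with cat /proc/mdstat or mdadm --detail /dev/md127. Because a hot spare is configured, the spare /dev/sdb9 should take over from the failed member and be rebuilt automatically.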

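Creating RAID 1+0: first build two RAID-1 mirrors (md0 and md1), then stripe them together with RAID-0 (md2). These commands reuse /dev/sdb6 to /dev/sdb9, which still belong to the RAID-5 array above, so that array must be unmounted and stopped first (see the array-removal steps at the end of this article).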

```
[root@localhost ~]# mdadm --create --auto=yes /dev/md0 --level=1 --raid-devices=2 /dev/sdb{6,7}
mdadm: Note: this array has metadata at the start and
    may not be suitable as a boot device. If you plan to
    store '/boot' on this device please ensure that
    your boot-loader understands md/v1.x metadata, or use
    --metadata=0.90
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
[root@localhost ~]# mdadm --create --auto=yes /dev/md1 --level=1 --raid-devices=2 /dev/sdb{8,9}
mdadm: Note: this array has metadata at the start and
    may not be suitable as a boot device. If you plan to
    store '/boot' on this device please ensure that
    your boot-loader understands md/v1.x metadata, or use
    --metadata=0.90
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md1 started.
[root@localhost ~]# mdadm --create --auto=yes /dev/md2 --level=0 --raid-devices=2 /dev/md{0,1}
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md2 started.
[root@localhost ~]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4] [raid1] [raid0]
md2 : active raid0 md1[1] md0[0]
      2054144 blocks super 1.2 512k chunks

md1 : active raid1 sdb9[1] sdb8[0]
      1027584 blocks super 1.2 [2/2] [UU]

md0 : active raid1 sdb7[1] sdb6[0]
      1027584 blocks super 1.2 [2/2] [UU]

unused devices: <none>
```
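The sizes in this /proc/mdstat output can be checked the same way. Each RAID-1 mirror is as large as one member (1027584 KiB), and RAID-0 adds its members up, so md2 would naively be 2 × 1027584 = 2055168 KiB; the reported 2054144 KiB is 512 KiB smaller per member. That gap is most likely space that mdadm reserves for its version-1.2 metadata on md0 and md1 when they become members of md2; this is an assumption, not something the output above shows. Either way, four partitions of about 1 GB yield about 2 GB of usable space, half the raw capacity. A Python sketch (the helper name nested_raid10_kib and its overhead_kib parameter are hypothetical):

```python
def nested_raid10_kib(mirror_kib: int, n_mirrors: int, overhead_kib: int = 0) -> int:
    """RAID-0 across RAID-1 mirrors: the mirror sizes add up.

    overhead_kib is the space each mirror is assumed to lose when it becomes
    a member of the outer RAID-0 (an assumption fitted to the output above).
    """
    return n_mirrors * (mirror_kib - overhead_kib)


naive = nested_raid10_kib(1027584, 2)
reported = 2054144              # md2 size from /proc/mdstat above
print(naive, reported)          # 2055168 2054144
print((naive - reported) // 2)  # 512 (KiB per member)
print(nested_raid10_kib(1027584, 2, overhead_kib=512) == reported)  # True
```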

```
[root@localhost ~]# mdadm --detail /dev/md0
/dev/md0:
Version : 1.2
Creation Time : Sat Sep 13 03:03:12 2014
Raid Level : raid1
Array Size : 1027584 (1003.67 MiB 1052.25 MB)
Used Dev Size : 1027584 (1003.67 MiB 1052.25 MB)
Raid Devices : 2
Total Devices : 2
Persistence : Superblock is persistent
Update Time : Sat Sep 13 03:03:50 2014
State : clean
Active Devices : 2
Working Devices : 2
Failed Devices : 0
Spare Devices : 0
Name : localhost.localdomain:0 (local to host localhost.localdomain)
UUID : cda9d765:2897864d:21e6aadb:6076cf49
Events : 17

Number Major Minor RaidDevice State
0 8 22 0 active sync /dev/sdb6
1 8 23 1 active sync /dev/sdb7
```

Removing an array:

To remove an array completely:

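- [root@bogon ~]# umount /dev/md0 (unmount the filesystem first)

- [root@bogon ~]# mdadm -Ss /dev/md0 (stop the array)

- [root@bogon ~]# mdadm --zero-superblock /dev/sd{b,c,d}1

- # --zero-superblock: for each listed device, if it contains a valid md superblock, mdadm erases the array information stored in it. List your own array's member devices here; /dev/sd{b,c,d}1 comes from a different setup, while the RAID-5 array in this article used /dev/sdb{5,6,7,8,9}.

- [root@bogon ~]# rm /etc/mdadm.conf (if this file also describes other arrays, delete only this array's ARRAY line instead)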

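This article explains the different RAID levels, including RAID-0, RAID-1, RAID-5, RAID 0+1 and RAID 1+0, with their characteristics and use cases, and gives hands-on examples of configuring RAID on Linux.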

