Connecting to host...

Connected to host successfully

Last login: Mon Dec 29 11:32:00 2025 from 192.168.81.1

[ma@master ~]$ su

Password:

[root@master ma]#

[root@master ma]# cd /export/server/kafka

[root@master kafka]# ./bin/kafka-server-start.sh config/server.properties

[2025-12-29 11:33:50,672] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2025-12-29 11:33:51,185] INFO Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation (org.apache.zookeeper.common.X509Util)

[2025-12-29 11:33:51,250] INFO Registered signal handlers for TERM, INT, HUP (org.apache.kafka.common.utils.LoggingSignalHandler)

[2025-12-29 11:33:51,256] INFO starting (kafka.server.KafkaServer)

[2025-12-29 11:33:51,257] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)

[2025-12-29 11:33:51,279] INFO [ZooKeeperClient Kafka server] Initializing a new session to localhost:2181. (kafka.zookeeper.ZooKeeperClient)

[2025-12-29 11:33:51,289] INFO Client environment:zookeeper.version=3.5.8-f439ca583e70862c3068a1f2a7d4d068eec33315, built on 05/04/2020 15:53 GMT (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,289] INFO Client environment:host.name=master (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,289] INFO Client environment:java.version=1.8.0_241 (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,289] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,289] INFO Client environment:java.home=/export/server/jdk/jre (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:java.class.path=/export/server/kafka/bin/../libs/activation-1.1.1.jar:/export/server/kafka/bin/../libs/aopalliance-repackaged-2.5.0.jar:/export/server/kafka/bin/../libs/argparse4j-0.7.0.jar:/export/server/kafka/bin/../libs/audience-annotations-0.5.0.jar:/export/server/kafka/bin/../libs/commons-cli-1.4.jar:/export/server/kafka/bin/../libs/commons-lang3-3.8.1.jar:/export/server/kafka/bin/../libs/connect-api-2.6.0.jar:/export/server/kafka/bin/../libs/connect-basic-auth-extension-2.6.0.jar:/export/server/kafka/bin/../libs/connect-file-2.6.0.jar:/export/server/kafka/bin/../libs/connect-json-2.6.0.jar:/export/server/kafka/bin/../libs/connect-mirror-2.6.0.jar:/export/server/kafka/bin/../libs/connect-mirror-client-2.6.0.jar:/export/server/kafka/bin/../libs/connect-runtime-2.6.0.jar:/export/server/kafka/bin/../libs/connect-transforms-2.6.0.jar:/export/server/kafka/bin/../libs/hk2-api-2.5.0.jar:/export/server/kafka/bin/../libs/hk2-locator-2.5.0.jar:/export/server/kafka/bin/../libs/hk2-utils-2.5.0.jar:/export/server/kafka/bin/../libs/jackson-annotations-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-core-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-databind-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-dataformat-csv-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-datatype-jdk8-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-jaxrs-base-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-jaxrs-json-provider-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-module-jaxb-annotations-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-module-paranamer-2.10.2.jar:/export/server/kafka/bin/../libs/jackson-module-scala_2.12-2.10.2.jar:/export/server/kafka/bin/../libs/jakarta.activation-api-1.2.1.jar:/export/server/kafka/bin/../libs/jakarta.annotation-api-1.3.4.jar:/export/server/kafka/bin/../libs/jakarta.inject-2.5.0.jar:/export/server/kafka/bin/../libs/jakarta.ws.rs-api-2.1.5.jar:/export/server/kafka/bin/../libs/jakarta.xml.bind-api-2.3.2.jar:/export/server/kafka/bin/../libs/javassist-3.22.0-CR2.jar:/export/server/kafka/bin/../libs/javassist-3.26.0-GA.jar:/export/server/kafka/bin/../libs/javax.servlet-api-3.1.0.jar:/export/server/kafka/bin/../libs/javax.ws.rs-api-2.1.1.jar:/export/server/kafka/bin/../libs/jaxb-api-2.3.0.jar:/export/server/kafka/bin/../libs/jersey-client-2.28.jar:/export/server/kafka/bin/../libs/jersey-common-2.28.jar:/export/server/kafka/bin/../libs/jersey-container-servlet-2.28.jar:/export/server/kafka/bin/../libs/jersey-container-servlet-core-2.28.jar:/export/server/kafka/bin/../libs/jersey-hk2-2.28.jar:/export/server/kafka/bin/../libs/jersey-media-jaxb-2.28.jar:/export/server/kafka/bin/../libs/jersey-server-2.28.jar:/export/server/kafka/bin/../libs/jetty-client-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-continuation-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-http-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-io-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-security-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-server-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-servlet-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-servlets-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jetty-util-9.4.24.v20191120.jar:/export/server/kafka/bin/../libs/jopt-simple-5.0.4.jar:/export/server/kafka/bin/../libs/kafka_2.12-2.6.0.jar:/export/server/kafka/bin/../libs/kafka_2.12-2.6.0-sources.jar:/export/server/kafka/bin/../libs/kafka-clients-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-log4j-appender-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-streams-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-streams-examples-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-streams-scala_2.12-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-streams-test-utils-2.6.0.jar:/export/server/kafka/bin/../libs/kafka-tools-2.6.0.jar:/export/server/kafka/bin/../libs/log4j-1.2.17.jar:/export/server/kafka/bin/../libs/lz4-java-1.7.1.jar:/export/server/kafka/bin/../libs/maven-artifact-3.6.3.jar:/export/server/kafka/bin/../libs/metrics-core-2.2.0.jar:/export/server/kafka/bin/../libs/netty-buffer-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-codec-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-common-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-handler-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-resolver-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-transport-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-transport-native-epoll-4.1.50.Final.jar:/export/server/kafka/bin/../libs/netty-transport-native-unix-common-4.1.50.Final.jar:/export/server/kafka/bin/../libs/osgi-resource-locator-1.0.1.jar:/export/server/kafka/bin/../libs/paranamer-2.8.jar:/export/server/kafka/bin/../libs/plexus-utils-3.2.1.jar:/export/server/kafka/bin/../libs/reflections-0.9.12.jar:/export/server/kafka/bin/../libs/rocksdbjni-5.18.4.jar:/export/server/kafka/bin/../libs/scala-collection-compat_2.12-2.1.6.jar:/export/server/kafka/bin/../libs/scala-java8-compat_2.12-0.9.1.jar:/export/server/kafka/bin/../libs/scala-library-2.12.11.jar:/export/server/kafka/bin/../libs/scala-logging_2.12-3.9.2.jar:/export/server/kafka/bin/../libs/scala-reflect-2.12.11.jar:/export/server/kafka/bin/../libs/slf4j-api-1.7.30.jar:/export/server/kafka/bin/../libs/slf4j-log4j12-1.7.30.jar:/export/server/kafka/bin/../libs/snappy-java-1.1.7.3.jar:/export/server/kafka/bin/../libs/validation-api-2.0.1.Final.jar:/export/server/kafka/bin/../libs/zookeeper-3.5.8.jar:/export/server/kafka/bin/../libs/zookeeper-jute-3.5.8.jar:/export/server/kafka/bin/../libs/zstd-jni-1.4.4-7.jar (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:os.version=3.10.0-1160.71.1.el7.x86_64 (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,290] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,291] INFO Client environment:user.dir=/export/server/kafka (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,291] INFO Client environment:os.memory.free=977MB (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,291] INFO Client environment:os.memory.max=1024MB (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,291] INFO Client environment:os.memory.total=1024MB (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,293] INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=18000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@15ff3e9e (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:51,299] INFO jute.maxbuffer value is 4194304 Bytes (org.apache.zookeeper.ClientCnxnSocket)

[2025-12-29 11:33:51,306] INFO zookeeper.request.timeout value is 0. feature enabled= (org.apache.zookeeper.ClientCnxn)

[2025-12-29 11:33:51,310] INFO [ZooKeeperClient Kafka server] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)

[2025-12-29 11:33:51,315] INFO Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)

[2025-12-29 11:33:51,319] INFO Socket connection established, initiating session, client: /127.0.0.1:54580, server: localhost/127.0.0.1:2181 (org.apache.zookeeper.ClientCnxn)

[2025-12-29 11:33:51,337] INFO Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x100005654de0000, negotiated timeout = 18000 (org.apache.zookeeper.ClientCnxn)

[2025-12-29 11:33:51,341] INFO [ZooKeeperClient Kafka server] Connected. (kafka.zookeeper.ZooKeeperClient)

[2025-12-29 11:33:51,653] INFO Cluster ID = QvaJvRsMRZmudq1srV4Xyg (kafka.server.KafkaServer)

[2025-12-29 11:33:51,729] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = PLAINTEXT://192.168.81.130:9092

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

connections.max.reauth.ms = 0

control.plane.listener.name = null

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.max.bytes = 57671680

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 1800000

group.max.size = 2147483647

group.min.session.timeout.ms = 6000

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.6-IV0

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = PLAINTEXT://192.168.81.130:9092

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.max.compaction.lag.ms = 9223372036854775807

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /export/server/kafka/logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 2.6-IV0

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connections = 2147483647

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1048588

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port = 9092

principal.builder.class = null

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.consumer.default = 9223372036854775807

quota.producer.default = 9223372036854775807

quota.window.num = 11

quota.window.size.seconds = 1

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 30000

replica.selector.class = null

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

security.providers = null

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2]

ssl.endpoint.identification.algorithm = https

ssl.engine.factory.class = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.principal.mapping.rules = DEFAULT

ssl.protocol = TLSv1.2

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 10000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 2

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.clientCnxnSocket = null

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms = 60000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 18000

zookeeper.set.acl = false

zookeeper.ssl.cipher.suites = null

zookeeper.ssl.client.enable = false

zookeeper.ssl.crl.enable = false

zookeeper.ssl.enabled.protocols = null

zookeeper.ssl.endpoint.identification.algorithm = HTTPS

zookeeper.ssl.keystore.location = null

zookeeper.ssl.keystore.password = null

zookeeper.ssl.keystore.type = null

zookeeper.ssl.ocsp.enable = false

zookeeper.ssl.protocol = TLSv1.2

zookeeper.ssl.truststore.location = null

zookeeper.ssl.truststore.password = null

zookeeper.ssl.truststore.type = null

zookeeper.sync.time.ms = 2000

(kafka.server.KafkaConfig)

[2025-12-29 11:33:51,752] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = PLAINTEXT://192.168.81.130:9092

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

connections.max.reauth.ms = 0

control.plane.listener.name = null

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.max.bytes = 57671680

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 1800000

group.max.size = 2147483647

group.min.session.timeout.ms = 6000

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.6-IV0

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = PLAINTEXT://192.168.81.130:9092

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.max.compaction.lag.ms = 9223372036854775807

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /export/server/kafka/logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 2.6-IV0

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connections = 2147483647

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1048588

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port = 9092

principal.builder.class = null

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.consumer.default = 9223372036854775807

quota.producer.default = 9223372036854775807

quota.window.num = 11

quota.window.size.seconds = 1

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 30000

replica.selector.class = null

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

security.providers = null

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2]

ssl.endpoint.identification.algorithm = https

ssl.engine.factory.class = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.principal.mapping.rules = DEFAULT

ssl.protocol = TLSv1.2

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 10000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 2

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.clientCnxnSocket = null

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms = 60000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 18000

zookeeper.set.acl = false

zookeeper.ssl.cipher.suites = null

zookeeper.ssl.client.enable = false

zookeeper.ssl.crl.enable = false

zookeeper.ssl.enabled.protocols = null

zookeeper.ssl.endpoint.identification.algorithm = HTTPS

zookeeper.ssl.keystore.location = null

zookeeper.ssl.keystore.password = null

zookeeper.ssl.keystore.type = null

zookeeper.ssl.ocsp.enable = false

zookeeper.ssl.protocol = TLSv1.2

zookeeper.ssl.truststore.location = null

zookeeper.ssl.truststore.password = null

zookeeper.ssl.truststore.type = null

zookeeper.sync.time.ms = 2000

(kafka.server.KafkaConfig)

[2025-12-29 11:33:51,793] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:51,794] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:51,795] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:51,829] INFO Loading logs from log dirs ArrayBuffer(/export/server/kafka/logs) (kafka.log.LogManager)

[2025-12-29 11:33:51,831] INFO Skipping recovery for all logs in /export/server/kafka/logs since clean shutdown file was found (kafka.log.LogManager)

[2025-12-29 11:33:51,843] INFO Loaded 0 logs in 14ms. (kafka.log.LogManager)

[2025-12-29 11:33:51,862] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2025-12-29 11:33:51,866] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2025-12-29 11:33:52,346] INFO Awaiting socket connections on 192.168.81.130:9092. (kafka.network.Acceptor)

[2025-12-29 11:33:52,397] INFO [SocketServer brokerId=0] Created data-plane acceptor and processors for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)

[2025-12-29 11:33:52,425] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,427] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,427] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,428] INFO [ExpirationReaper-0-ElectLeader]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,444] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2025-12-29 11:33:52,514] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2025-12-29 11:33:52,538] ERROR Error while creating ephemeral at /brokers/ids/0, node already exists and owner '72057932414386176' does not match current session '72057964828950528' (kafka.zk.KafkaZkClient$CheckedEphemeral)

[2025-12-29 11:33:52,546] ERROR [KafkaServer id=0] Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)

org.apache.zookeeper.KeeperException$NodeExistsException: KeeperErrorCode = NodeExists
	at org.apache.zookeeper.KeeperException.create(KeeperException.java:126)
	at kafka.zk.KafkaZkClient$CheckedEphemeral.getAfterNodeExists(KafkaZkClient.scala:1821)
	at kafka.zk.KafkaZkClient$CheckedEphemeral.create(KafkaZkClient.scala:1759)
	at kafka.zk.KafkaZkClient.checkedEphemeralCreate(KafkaZkClient.scala:1726)
	at kafka.zk.KafkaZkClient.registerBroker(KafkaZkClient.scala:95)
	at kafka.server.KafkaServer.startup(KafkaServer.scala:293)
	at kafka.server.KafkaServerStartable.startup(KafkaServerStartable.scala:44)
	at kafka.Kafka$.main(Kafka.scala:82)
	at kafka.Kafka.main(Kafka.scala)

[2025-12-29 11:33:52,551] INFO [KafkaServer id=0] shutting down (kafka.server.KafkaServer)

[2025-12-29 11:33:52,553] INFO [SocketServer brokerId=0] Stopping socket server request processors (kafka.network.SocketServer)

[2025-12-29 11:33:52,556] INFO [SocketServer brokerId=0] Stopped socket server request processors (kafka.network.SocketServer)

[2025-12-29 11:33:52,559] INFO [ReplicaManager broker=0] Shutting down (kafka.server.ReplicaManager)

[2025-12-29 11:33:52,560] INFO [LogDirFailureHandler]: Shutting down (kafka.server.ReplicaManager$LogDirFailureHandler)

[2025-12-29 11:33:52,561] INFO [LogDirFailureHandler]: Stopped (kafka.server.ReplicaManager$LogDirFailureHandler)

[2025-12-29 11:33:52,562] INFO [LogDirFailureHandler]: Shutdown completed (kafka.server.ReplicaManager$LogDirFailureHandler)

[2025-12-29 11:33:52,562] INFO [ReplicaFetcherManager on broker 0] shutting down (kafka.server.ReplicaFetcherManager)

[2025-12-29 11:33:52,565] INFO [ReplicaFetcherManager on broker 0] shutdown completed (kafka.server.ReplicaFetcherManager)

[2025-12-29 11:33:52,565] INFO [ReplicaAlterLogDirsManager on broker 0] shutting down (kafka.server.ReplicaAlterLogDirsManager)

[2025-12-29 11:33:52,566] INFO [ReplicaAlterLogDirsManager on broker 0] shutdown completed (kafka.server.ReplicaAlterLogDirsManager)

[2025-12-29 11:33:52,566] INFO [ExpirationReaper-0-Fetch]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,627] INFO [ExpirationReaper-0-Fetch]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,627] INFO [ExpirationReaper-0-Fetch]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,629] INFO [ExpirationReaper-0-Produce]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,827] INFO [ExpirationReaper-0-Produce]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,827] INFO [ExpirationReaper-0-Produce]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,828] INFO [ExpirationReaper-0-DeleteRecords]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,828] INFO [ExpirationReaper-0-DeleteRecords]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,828] INFO [ExpirationReaper-0-DeleteRecords]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,828] INFO [ExpirationReaper-0-ElectLeader]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,829] INFO [ExpirationReaper-0-ElectLeader]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,829] INFO [ExpirationReaper-0-ElectLeader]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2025-12-29 11:33:52,836] INFO [ReplicaManager broker=0] Shut down completely (kafka.server.ReplicaManager)

[2025-12-29 11:33:52,837] INFO Shutting down. (kafka.log.LogManager)

[2025-12-29 11:33:52,877] INFO Shutdown complete. (kafka.log.LogManager)

[2025-12-29 11:33:52,878] INFO [ZooKeeperClient Kafka server] Closing. (kafka.zookeeper.ZooKeeperClient)

[2025-12-29 11:33:52,983] INFO Session: 0x100005654de0000 closed (org.apache.zookeeper.ZooKeeper)

[2025-12-29 11:33:52,983] INFO EventThread shut down for session: 0x100005654de0000 (org.apache.zookeeper.ClientCnxn)

[2025-12-29 11:33:52,984] INFO [ZooKeeperClient Kafka server] Closed. (kafka.zookeeper.ZooKeeperClient)

[2025-12-29 11:33:52,985] INFO [ThrottledChannelReaper-Fetch]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:53,794] INFO [ThrottledChannelReaper-Fetch]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:53,795] INFO [ThrottledChannelReaper-Fetch]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:53,795] INFO [ThrottledChannelReaper-Produce]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,795] INFO [ThrottledChannelReaper-Produce]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,795] INFO [ThrottledChannelReaper-Produce]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,796] INFO [ThrottledChannelReaper-Request]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,797] INFO [ThrottledChannelReaper-Request]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,797] INFO [ThrottledChannelReaper-Request]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2025-12-29 11:33:54,798] INFO [SocketServer brokerId=0] Shutting down socket server (kafka.network.SocketServer)

[2025-12-29 11:33:54,840] INFO [SocketServer brokerId=0] Shutdown completed (kafka.network.SocketServer)

[2025-12-29 11:33:54,847] INFO [KafkaServer id=0] shut down completed (kafka.server.KafkaServer)

[2025-12-29 11:33:54,848] ERROR Exiting Kafka. (kafka.server.KafkaServerStartable)

[2025-12-29 11:33:54,849] INFO [KafkaServer id=0] shutting down (kafka.server.KafkaServer)

[root@master kafka]#

Also: tstrap-server localhost:9092

[2025-12-29 11:34:02,707] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:02,836] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:02,940] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:03,144] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:03,548] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:04,353] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:05,362] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:06,270] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:07,176] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:08,387] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:09,597] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:10,806] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:11,715] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:12,823] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:13,932] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:15,039] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:16,247] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:17,255] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:18,463] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:19,471] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:20,581] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:21,590] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:22,701] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:23,908] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:24,714] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:25,824] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:27,032] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:28,239] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:29,246] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:30,354] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:31,262] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:32,268] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:33,183] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:34,090] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:35,198] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:36,306] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:37,213] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:38,422] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:39,630] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:40,536] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:41,444] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:42,352] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:43,359] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:44,467] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:45,475] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:46,583] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:47,489] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:48,697] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:49,708] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:50,615] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:51,725] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:52,934] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:53,943] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:55,151] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:56,261] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:57,469] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:58,378] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:34:59,585] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:35:00,490] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:35:01,397] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2025-12-29 11:35:02,404] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

Error while executing topic command : Call(callName=createTopics, deadlineMs=1766979302733, tries=1, nextAllowedTryMs=1766979302834) timed out at 1766979302734 after 1 attempt(s)

[2025-12-29 11:35:02,738] ERROR org.apache.kafka.common.errors.TimeoutException: Call(callName=createTopics, deadlineMs=1766979302733, tries=1, nextAllowedTryMs=1766979302834) timed out at 1766979302734 after 1 attempt(s)

Caused by: org.apache.kafka.common.errors.TimeoutException: Timed out waiting for a node assignment.

(kafka.admin.TopicCommand$)

[root@master kafka]# 000
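
The WARN lines above show the AdminClient failing to reach localhost:9092, and the broker log before them shows `[KafkaServer id=0] shut down completed` at 11:33:54, a few seconds before the topic command started retrying. A minimal sketch for checking whether anything is listening on the broker port before retrying the topic command. The function name `port_open` and the 2-second timeout are assumptions made for illustration; they are not part of Kafka.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # localhost:9092 is the address taken from the log above.
    if port_open("localhost", 9092):
        print("port 9092 is accepting connections")
    else:
        print("nothing is listening on port 9092; the broker is probably not running")
```

If this reports that nothing is listening, the broker startup log is the place to look, since it ends with `ERROR Exiting Kafka.`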

最新发布

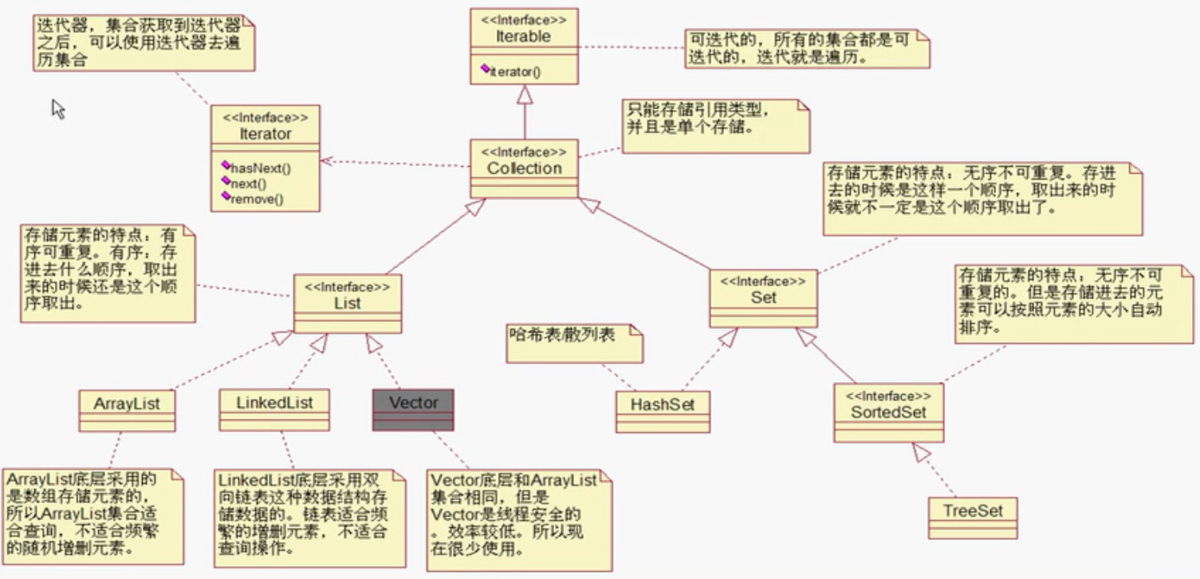

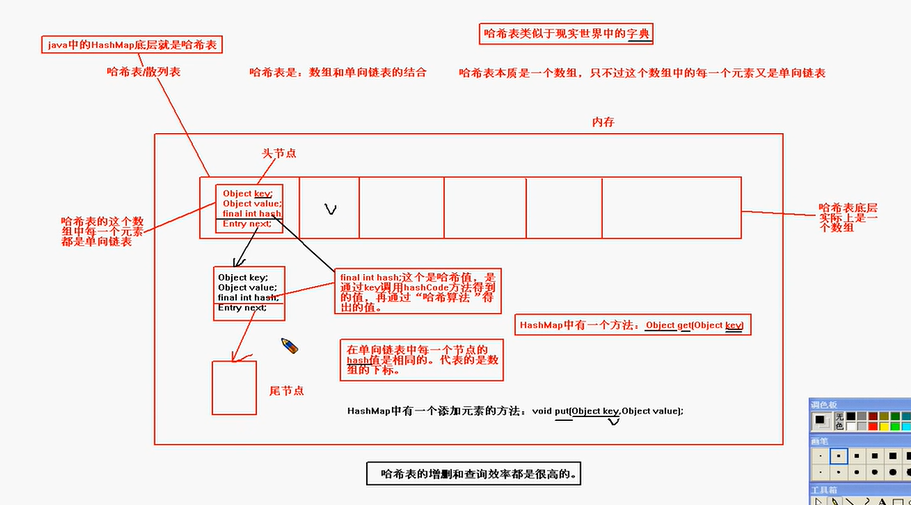

本文深入解析Java集合框架,包括List、HashSet、SortedSet等核心接口和实现类的特性和使用方法,探讨数组、哈希表和红黑树等底层数据结构,以及元素添加、排序和比较的原理。

本文深入解析Java集合框架,包括List、HashSet、SortedSet等核心接口和实现类的特性和使用方法,探讨数组、哈希表和红黑树等底层数据结构,以及元素添加、排序和比较的原理。

7194

7194

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?