Deferred lighting separates lighting from geometry rendering and makes lighting a purely image-space technique. This is very different from forward rendering. Early on, because of hardware limitations, each object could be lit by at most 8 lights at a time, and everything (light source settings, materials, textures) had to be set up through render states before drawing. As hardware became more powerful, we could do additive lighting: one pass per light, with all passes blended together, so there is effectively no maximum number of lights. However, if we put too much lighting calculation in the pixel shader, things get worse: because of the z-test, many pixels that have already gone through expensive lighting calculations end up being discarded. Avoiding this wasted work is exactly where deferred lighting gains its advantage.
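To make the "image-space" idea concrete, here is a minimal CPU sketch of the two phases, assuming grayscale albedo and directional lights only. All names (`Fragment`, `geometry_pass`, `lighting_pass`, `forward_evaluations`) are hypothetical and only illustrate the idea: the depth test runs first, so each light is evaluated once per visible pixel instead of once per rasterized fragment.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    """One rasterized surface sample (hypothetical, grayscale for brevity)."""
    x: int
    y: int
    depth: float   # smaller = closer to the camera
    albedo: float
    normal: tuple  # unit normal (nx, ny, nz)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def geometry_pass(fragments, width, height):
    """Build the G-buffer: the depth test keeps only the nearest fragment per pixel."""
    gbuffer = [[None] * width for _ in range(height)]
    for f in fragments:
        current = gbuffer[f.y][f.x]
        if current is None or f.depth < current.depth:
            gbuffer[f.y][f.x] = f
    return gbuffer


def lighting_pass(gbuffer, lights):
    """Image-space pass: each light is evaluated once per visible pixel.

    lights: list of (direction_to_light, intensity) directional lights.
    Returns the light buffer and the number of lighting evaluations.
    """
    light_buffer = [[0.0] * len(row) for row in gbuffer]
    evaluations = 0
    for direction, intensity in lights:
        for y, row in enumerate(gbuffer):
            for x, f in enumerate(row):
                if f is None:  # no geometry at this pixel
                    continue
                n_dot_l = max(0.0, dot(f.normal, direction))
                # Additive accumulation, like blending one pass per light.
                light_buffer[y][x] += f.albedo * intensity * n_dot_l
                evaluations += 1
    return light_buffer, evaluations


def forward_evaluations(fragments, lights):
    """Worst case for multi-pass forward lighting: every fragment, hidden or not,
    is lit by every light (e.g. drawn back to front, so early Z rejects nothing)."""
    return len(fragments) * len(lights)
```

With two overlapping fragments on one pixel and two lights, the deferred path does 4 lighting evaluations for 2 visible pixels, while the worst-case forward path does 6, because the hidden fragment is also lit.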

In deferred lighting, the main cost of a light depends on the screen area it covers; all lighting is per-pixel and all surfaces are lit equally (every object uses the same lighting equation); and light rendering can take advantage of fast hardware Z-reject. On the other hand, it comes with some disadvantages: a large frame-buffer size is required; fill-rate can become very high in some situations (for example, many lights doing full-screen lighting); it is difficult to support multiple lighting equations; transparent objects are very hard to handle; and the hardware requirements are high.
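The memory and fill-rate costs mentioned above are simple arithmetic. The sketch below assumes a hypothetical layout of four RGBA8 render targets plus a 32-bit depth-stencil buffer (20 bytes per pixel) at 1280x720; real layouts vary, and the function names are illustrative only.

```python
MIB = 1024 * 1024


def gbuffer_bytes(width, height, target_bytes):
    """Total size of all render targets; target_bytes lists bytes per pixel for each target."""
    return width * height * sum(target_bytes)


def lighting_pass_pixels(width, height, coverages):
    """Pixels shaded by the lighting pass; each coverage is the fraction
    of the screen that one light's volume covers (1.0 = full screen)."""
    return round(width * height * sum(coverages))
```

Under these assumptions the G-buffer takes 18,432,000 bytes (about 17.6 MiB) before any light buffer or post-process targets. Ten full-screen lights shade 9,216,000 pixels per frame, while ten small lights that each cover 2% of the screen shade only 184,320, which is why the cost tracks screen coverage rather than light count.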

Deferred lighting is well suited to photorealistic rendering: it can achieve very complex visual effects and much higher visual quality than traditional forward rendering, while still keeping the frame rate high. This is the main reason why many recent console and video games use it extensively.

G-Buffers

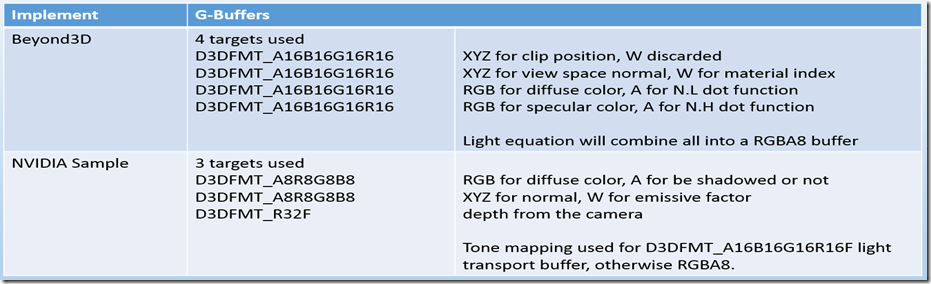

The key element of deferred lighting is the G-buffer, that is, the image-space contents. Which geometry parameters we store in it depends on our lighting equation. For example, if we do direct lighting with the Phong / Blinn-Phong model, we usually need the diffuse color, normal, position, diffuse coefficient, specular color, specular coefficient, and so on. The choice of parameters is up to you, and you can even drop some of them: for example, you could drop the specular part, and instead of using a dedicated buffer for the surface position, keep only the depth buffer (the position can be reconstructed from the screen-space depth value; there is such an HDR deferred rendering sample on the NVIDIA site). Here is a comparison between two implementations that I downloaded from Beyond3D and NVIDIA [HDR Deferred Shading].
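Reconstructing position from depth can be sketched as follows, assuming the G-buffer stores linear view-space depth (distance along the view axis), a symmetric perspective projection with vertical field of view `fovy_rad`, a right-handed view space looking down -Z, and texture coordinates with the origin at the top-left. These conventions, and the helper names, are assumptions; the NVIDIA sample may store and decode depth differently (for example, non-linear hardware depth).

```python
import math


def view_ray(u, v, fovy_rad, aspect):
    """Ray through the pixel at texture coords (u, v), scaled so its z is -1."""
    ndc_x = 2.0 * u - 1.0
    ndc_y = 1.0 - 2.0 * v  # texture v grows downward, NDC y grows upward
    t = math.tan(fovy_rad / 2.0)
    return (ndc_x * t * aspect, ndc_y * t, -1.0)


def reconstruct_position(u, v, linear_depth, fovy_rad, aspect):
    """View-space position = view ray * linear depth (no position buffer needed)."""
    rx, ry, rz = view_ray(u, v, fovy_rad, aspect)
    return (rx * linear_depth, ry * linear_depth, rz * linear_depth)


def project(p, fovy_rad, aspect):
    """Inverse mapping, used only to check the round trip:
    view-space point -> (u, v, linear depth)."""
    x, y, z = p
    d = -z
    t = math.tan(fovy_rad / 2.0)
    ndc_x = x / (d * t * aspect)
    ndc_y = y / (d * t)
    return ((ndc_x + 1.0) / 2.0, (1.0 - ndc_y) / 2.0, d)
```

Because the ray is scaled to z = -1, multiplying it by the stored linear depth gives the position directly; in a shader the ray is typically interpolated from the corners of the full-screen quad.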

After the G-buffers are created, each light's contribution is calculated and accumulated into the light buffer. Sometimes the final value may exceed 1. You can ignore this and saturate the result with hardware support, or use a high-dynamic-range buffer to hold it. If you choose an HDR buffer, you need some way to display those HDR values correctly on an LDR monitor. This is where tone mapping comes in.
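The difference between saturating and keeping HDR values can be shown per pixel channel. This is a sketch only: the article does not name a tone-mapping operator, so the simple Reinhard curve `x / (1 + x)` is used here as a stand-in, and the `exposure` parameter and function names are illustrative assumptions.

```python
def saturate(x):
    return min(max(x, 0.0), 1.0)


def accumulate(contributions, hdr):
    """Sum per-light contributions for one channel of one pixel.
    An LDR target clamps the result; an HDR target keeps values above 1."""
    total = sum(contributions)
    return total if hdr else saturate(total)


def reinhard(x):
    # Simple Reinhard operator: maps [0, inf) into [0, 1).
    return x / (1.0 + x)


def tone_map(hdr_value, exposure=1.0):
    """Bring an HDR light-buffer value into displayable [0, 1) range."""
    return reinhard(hdr_value * exposure)
```

Three lights contributing 0.8, 0.7 and 0.5 saturate to 1.0 in an LDR buffer, losing the information that this pixel is twice as bright as a pixel at 1.0. An HDR buffer keeps 2.0, which tone maps to about 0.667 versus 0.5, so the two pixels stay distinguishable on screen.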

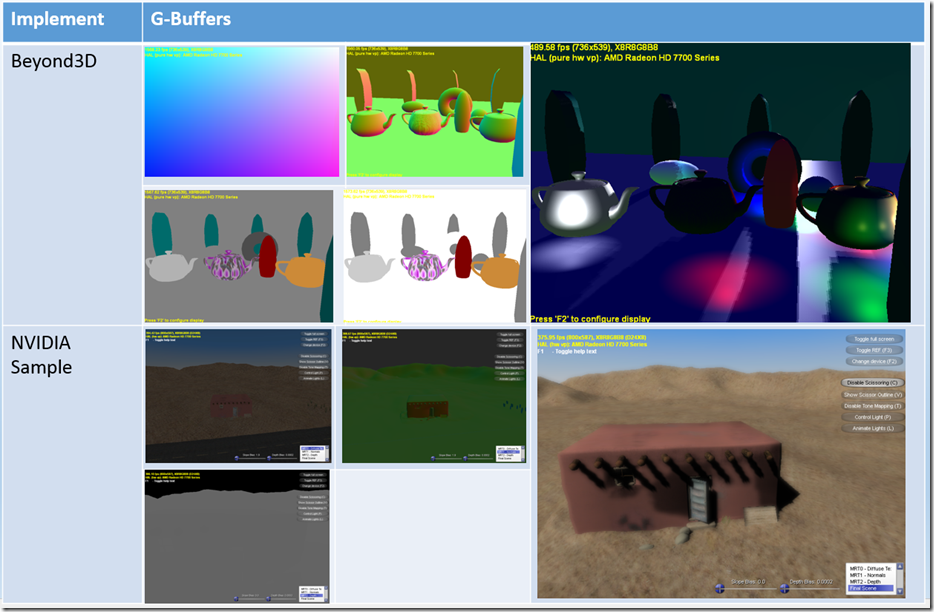

Here are screenshots of the buffer contents:

High Dynamic Range (HDR)

Sometimes, using HDR buffers wastes too much memory. Instead, we can encode the HDR value (RGB) and store it in an LDR buffer (RGBA). Many encoding methods can be found on the Internet; commonly used ones are RGBM and RGBE. Weaker hardware such as the Wii prefers RGBM encoding, while more powerful devices such as the PS3 and Xbox 360 prefer RGBE encoding.
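Both encodings pack an HDR color into four 8-bit channels. The sketch below follows the usual formulations: RGBM stores a shared multiplier M in alpha over a fixed range (the range of 6.0 is an assumption; engines pick different values), and RGBE stores a shared power-of-two exponent in the style of Greg Ward's Radiance format. Function names are illustrative.

```python
import math


def _to_byte(x):
    return int(round(min(max(x, 0.0), 1.0) * 255.0))


def rgbm_encode(rgb, max_range=6.0):
    """RGBM: color = rgb * M * max_range, with M in the alpha channel."""
    scaled = [c / max_range for c in rgb]
    m = min(max(max(scaled), 1e-6), 1.0)
    m = math.ceil(m * 255.0) / 255.0  # round M up so rgb / M stays <= 1
    return tuple(_to_byte(c / m) for c in scaled) + (_to_byte(m),)


def rgbm_decode(rgba, max_range=6.0):
    r, g, b, a = (c / 255.0 for c in rgba)
    return tuple(c * a * max_range for c in (r, g, b))


def rgbe_encode(rgb):
    """RGBE: 8-bit mantissas sharing one exponent (biased by 128)."""
    v = max(rgb)
    if v < 1e-32:
        return (0, 0, 0, 0)
    mantissa, exponent = math.frexp(v)  # v = mantissa * 2**exponent, 0.5 <= mantissa < 1
    scale = mantissa * 256.0 / v
    return tuple(int(c * scale) for c in rgb) + (exponent + 128,)


def rgbe_decode(rgbe):
    r, g, b, e = rgbe
    if e == 0:
        return (0.0, 0.0, 0.0)
    f = math.ldexp(1.0, e - (128 + 8))
    # Reconstruct at the center of each quantization bucket.
    return ((r + 0.5) * f, (g + 0.5) * f, (b + 0.5) * f)
```

The trade-off shows up with bright values: RGBM clamps anything above `max_range` (1000.0 decodes as 6.0), while RGBE keeps a huge range (1000.0 decodes to within 1%) at the cost of an exponent decode per pixel. Note that neither encoded form can be blended linearly by fixed-function alpha blending.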

Transparent Objects

There is no cheap solution for transparent objects in deferred lighting. One way is to fall back to forward rendering for them after the deferred lighting pass; another is to do the color blending in a pixel shader during the post-process phase.
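The forward-rendering fallback can be sketched per pixel: after the deferred result is ready, transparent surfaces are depth-tested against the opaque depth (without writing depth), sorted back to front, and blended with the standard "over" operator. This is a minimal grayscale sketch; the function name and the `(depth, color, alpha)` layer tuples are hypothetical.

```python
def composite_transparent(opaque_color, opaque_depth, layers):
    """Blend transparent layers over the deferred-lit opaque result for one pixel.

    layers: list of (depth, color, alpha); smaller depth = closer to the camera.
    """
    # Depth test against the opaque depth buffer: hidden layers are rejected.
    visible = [layer for layer in layers if layer[0] < opaque_depth]
    color = opaque_color
    # Back to front: the farthest visible layer is blended first.
    for _depth, layer_color, alpha in sorted(visible, key=lambda l: l[0], reverse=True):
        color = alpha * layer_color + (1.0 - alpha) * color  # "over" operator
    return color
```

For a black opaque pixel at depth 10 with a white layer at depth 5 and a black layer at depth 2 (both alpha 0.5), the result is 0.25; blending in the wrong order would give 0.5, which is why the sort is required.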

Light Optimization

Since the cost of deferred lighting depends on the screen area covered, the best way to optimize it is to find the minimal screen area each light covers. For a directional light, a full-screen light shader is used. For volume lights such as point lights and spot lights, a convex light volume that tightly encloses the light (a sphere for a point light, a frustum for a spot light) is rendered to find the minimal screen area to shade. Here we must make sure that a light volume does not intersect the near and far clip planes at the same time; otherwise, there will be holes in the lighting.
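Two pieces of this can be sketched. First, the sphere radius for a point light, assuming the classic constant/linear/quadratic attenuation `intensity / (kc + kl*d + kq*d^2)` and a cutoff of 1/256 (roughly one 8-bit step); both are assumptions. Second, one common way to act on the near/far rule: if the volume does not cross the near plane, draw its front faces; if it crosses only the near plane, draw its back faces with a reversed depth test; if it crosses both, neither face set is complete, so this sketch falls back to a full-screen pass. The mode strings and helper names are hypothetical.

```python
import math


def point_light_radius(intensity, kc, kl, kq, threshold=1.0 / 256.0):
    """Distance d where intensity / (kc + kl*d + kq*d*d) drops to `threshold`."""
    c = kc - intensity / threshold  # solve kq*d^2 + kl*d + c = 0 (c < 0)
    if kq > 0.0:
        return (-kl + math.sqrt(kl * kl - 4.0 * kq * c)) / (2.0 * kq)
    if kl > 0.0:
        return -c / kl
    return math.inf  # constant attenuation never fades: treat as full-screen


def volume_draw_mode(center_depth, radius, near, far):
    """Choose how to rasterize a sphere light volume (depths along the view axis)."""
    clips_near = center_depth - radius < near
    clips_far = center_depth + radius > far
    if clips_near and clips_far:
        return "fullscreen"   # both planes cut the volume: any face set leaves holes
    if clips_near:
        return "back_faces"   # front faces are clipped away; depth test GREATER_EQUAL
    return "front_faces"      # normal case; depth test LESS_EQUAL
```

For example, a light with `kc = 1`, `kq = 1` and unit intensity gets a radius of sqrt(255), about 16 units; drawing a sphere of that size instead of a full-screen quad is what limits the shaded area.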

Another method is to use the stencil buffer to determine exactly which screen pixels a light affects.
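One widely used variant of the stencil approach renders the light volume with color writes off and the scene depth test on: back faces increment the stencil where the depth test fails, front faces decrement it where the depth test fails, and the lighting shader then runs only where the stencil is non-zero. The sketch below simulates that counting per pixel for one convex volume; it is an illustration of this variant, not necessarily the one used in the samples, and the function name is hypothetical.

```python
def stencil_light_mask(scene_depth, front_depth, back_depth):
    """Per-pixel simulation of the two-sided stencil pass for one convex light volume.

    scene_depth: depth from the G-buffer for each pixel.
    front_depth / back_depth: depth of the volume's front and back faces at that
    pixel, or None where that face does not cover the pixel (e.g. clipped).
    Depth test is LESS: a face fragment passes if it is closer than the scene.
    """
    mask = []
    for z, zf, zb in zip(scene_depth, front_depth, back_depth):
        stencil = 0
        if zb is not None and not zb < z:  # back face fails depth test -> increment
            stencil += 1
        if zf is not None and not zf < z:  # front face fails depth test -> decrement
            stencil -= 1
        mask.append(stencil != 0)  # only these pixels run the lighting shader
    return mask
```

For a volume spanning depths 4 to 6, a surface at depth 5 is lit, while surfaces at depth 3 (in front) and 7 (behind) are not. When the camera is inside the volume the front faces are clipped (`None`), and the back-face count alone still marks every surface inside it.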

Summary

-- Deferred lighting requires more memory. If you also want post-processing effects such as blur, tone mapping, or depth of field, even more memory is required to store the intermediate results (some effects cannot be done in a single pass).

-- For various reasons, the authors prefer to replace some complex pixel-shader instructions with texture lookups. As you can see in those samples, the N.L and N.H dot-product terms and the light attenuation are precomputed into textures. This saves some GPU time, and the method is very popular because it lets us apply very complex lighting equations in real time. If you check the Unity3D 'ShadowGun' sample, you will find an editor-generated texture for the BRDF, which is the same idea.

-- More and more engines now provide deferred lighting or similar features. Unity and Unreal both offer forward and deferred rendering.

-- The full source code can be downloaded from here.
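The texture-lookup trick from the summary can be sketched as a 2D table indexed by N.L and N.H, sampled with bilinear filtering like a GPU texture. The baked function is passed in, so any BRDF expressible in these two terms can be used; the Blinn-Phong-style example with exponent 32 in the usage below is an assumption, and the actual texture layouts in the samples and in ShadowGun are not shown here. `build_lut` and `sample_lut` are hypothetical names.

```python
def build_lut(size, brdf):
    """Bake brdf(n_dot_l, n_dot_h) into a size x size table.
    Rows index N.H and columns index N.L, both mapped from [0, 1]."""
    lut = []
    for j in range(size):
        n_h = j / (size - 1)
        lut.append([brdf(i / (size - 1), n_h) for i in range(size)])
    return lut


def sample_lut(lut, n_l, n_h):
    """Bilinear lookup, mimicking a filtered texture fetch with clamp addressing."""
    size = len(lut)
    x = min(max(n_l, 0.0), 1.0) * (size - 1)
    y = min(max(n_h, 0.0), 1.0) * (size - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, size - 1), min(y0 + 1, size - 1)
    fx, fy = x - x0, y - y0
    top = lut[y0][x0] * (1.0 - fx) + lut[y0][x1] * fx
    bottom = lut[y1][x0] * (1.0 - fx) + lut[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy
```

Usage: `lut = build_lut(256, lambda nl, nh: nl + (nh ** 32 if nl > 0.0 else 0.0))`. With a 256x256 table, `sample_lut(lut, 0.7, 0.95)` stays within 0.01 of the direct evaluation, and the shader pays one texture fetch no matter how expensive the baked function is.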

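This article takes an in-depth look at deferred lighting, an image-space technique that separates lighting from rendering. Compared with traditional forward rendering, it can handle more light sources and achieve higher visual quality while maintaining a high frame rate. The article analyzes the advantages of deferred lighting, such as fast hardware Z-reject and suitability for photorealistic rendering, as well as its challenges, such as the large frame-buffer requirement and the difficulty of handling transparent objects. It also discusses key topics including G-buffer usage, the pros and cons of HDR buffers, solutions for transparent objects, and light optimization strategies.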
