2025-08-23 21:58:38,964 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Start loading edits file /server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001 maxTxnsToRead = 9223372036854775807

2025-08-23 21:58:38,966 INFO org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream: Fast-forwarding stream '/server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001' to transaction ID 1

2025-08-23 21:58:39,048 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Loaded 1 edits file(s) (the last named /server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001) of total size 1048576.0, total edits 1.0, total load time 64.0 ms

2025-08-23 21:58:39,050 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Need to save fs image? false (staleImage=false, haEnabled=false, isRollingUpgrade=false)

2025-08-23 21:58:39,050 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Starting log segment at 3

2025-08-23 21:58:39,279 INFO org.apache.hadoop.hdfs.server.namenode.NameCache: initialized with 0 entries 0 lookups

2025-08-23 21:58:39,279 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Finished loading FSImage in 708 msecs

2025-08-23 21:58:39,646 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: RPC server is binding to master:50070

2025-08-23 21:58:39,646 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Enable NameNode state context:false

2025-08-23 21:58:39,656 INFO org.apache.hadoop.ipc.CallQueueManager: Using callQueue: class java.util.concurrent.LinkedBlockingQueue, queueCapacity: 10000, scheduler: class org.apache.hadoop.ipc.DefaultRpcScheduler, ipcBackoff: false.

2025-08-23 21:58:39,673 INFO org.apache.hadoop.ipc.Server: Listener at master:50070

2025-08-23 21:58:39,678 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 50070

2025-08-23 21:58:39,789 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Registered FSNamesystemState, ReplicatedBlocksState and ECBlockGroupsState MBeans.

2025-08-23 21:58:39,791 INFO org.apache.hadoop.hdfs.server.common.Util: Assuming 'file' scheme for path /server/hadoop/data/nn in configuration.

2025-08-23 21:58:40,196 INFO org.apache.hadoop.hdfs.server.namenode.LeaseManager: Number of blocks under construction: 0

2025-08-23 21:58:40,397 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeAdminDefaultMonitor: Initialized the Default Decommission and Maintenance monitor

2025-08-23 21:58:40,400 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Start MarkedDeleteBlockScrubber thread

2025-08-23 21:58:40,402 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: initializing replication queues

2025-08-23 21:58:40,415 INFO org.apache.hadoop.hdfs.StateChange: STATE* Leaving safe mode after 0 secs

2025-08-23 21:58:40,415 INFO org.apache.hadoop.hdfs.StateChange: STATE* Network topology has 0 racks and 0 datanodes

2025-08-23 21:58:40,415 INFO org.apache.hadoop.hdfs.StateChange: STATE* UnderReplicatedBlocks has 0 blocks

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Total number of blocks = 0

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Number of invalid blocks = 0

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Number of under-replicated blocks = 0

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Number of over-replicated blocks = 0

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Number of blocks being written = 0

2025-08-23 21:58:40,457 INFO org.apache.hadoop.hdfs.StateChange: STATE* Replication Queue initialization scan for invalid, over- and under-replicated blocks completed in 19 msec

2025-08-23 21:58:40,481 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2025-08-23 21:58:40,485 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 50070: starting

2025-08-23 21:58:40,648 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: NameNode RPC up at: master/192.168.88.8:50070

2025-08-23 21:58:40,671 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Starting services required for active state

2025-08-23 21:58:40,671 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: Initializing quota with 12 thread(s)

2025-08-23 21:58:40,702 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: Quota initialization completed in 30 milliseconds

name space=1

storage space=0

storage types=RAM_DISK=0, SSD=0, DISK=0, ARCHIVE=0, PROVIDED=0

2025-08-23 21:58:40,727 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Starting CacheReplicationMonitor with interval 30000 milliseconds

2025-08-23 21:58:43,011 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(192.168.88.10:9866, datanodeUuid=da4db3bb-c966-465f-a90f-5d54ae18f35b, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769) storage da4db3bb-c966-465f-a90f-5d54ae18f35b

2025-08-23 21:58:43,014 INFO org.apache.hadoop.net.NetworkTopology: Adding a new node: /default-rack/192.168.88.10:9866

2025-08-23 21:58:43,014 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockReportLeaseManager: Registered DN da4db3bb-c966-465f-a90f-5d54ae18f35b (192.168.88.10:9866).

2025-08-23 21:58:43,032 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(192.168.88.9:9866, datanodeUuid=fbbcb827-5b1b-4acd-a251-97a412da99d1, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769) storage fbbcb827-5b1b-4acd-a251-97a412da99d1

2025-08-23 21:58:43,032 INFO org.apache.hadoop.net.NetworkTopology: Adding a new node: /default-rack/192.168.88.9:9866

2025-08-23 21:58:43,032 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockReportLeaseManager: Registered DN fbbcb827-5b1b-4acd-a251-97a412da99d1 (192.168.88.9:9866).

2025-08-23 21:58:43,156 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeDescriptor: Adding new storage ID DS-09455080-1e07-42ac-8c0c-97bd0539bce4 for DN 192.168.88.10:9866

2025-08-23 21:58:43,171 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeDescriptor: Adding new storage ID DS-e0af5c38-05e1-40cc-9839-7ca2a9555353 for DN 192.168.88.9:9866

2025-08-23 21:58:43,242 INFO BlockStateChange: BLOCK* processReport 0x95bc76793dd4a335 with lease ID 0xf16c10099c3274d4: Processing first storage report for DS-09455080-1e07-42ac-8c0c-97bd0539bce4 from datanode DatanodeRegistration(192.168.88.10:9866, datanodeUuid=da4db3bb-c966-465f-a90f-5d54ae18f35b, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769)

2025-08-23 21:58:43,244 INFO BlockStateChange: BLOCK* processReport 0x95bc76793dd4a335 with lease ID 0xf16c10099c3274d4: from storage DS-09455080-1e07-42ac-8c0c-97bd0539bce4 node DatanodeRegistration(192.168.88.10:9866, datanodeUuid=da4db3bb-c966-465f-a90f-5d54ae18f35b, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769), blocks: 0, hasStaleStorage: false, processing time: 3 msecs, invalidatedBlocks: 0

2025-08-23 21:58:43,244 INFO BlockStateChange: BLOCK* processReport 0x76705665125f17dc with lease ID 0xf16c10099c3274d5: Processing first storage report for DS-e0af5c38-05e1-40cc-9839-7ca2a9555353 from datanode DatanodeRegistration(192.168.88.9:9866, datanodeUuid=fbbcb827-5b1b-4acd-a251-97a412da99d1, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769)

2025-08-23 21:58:43,245 INFO BlockStateChange: BLOCK* processReport 0x76705665125f17dc with lease ID 0xf16c10099c3274d5: from storage DS-e0af5c38-05e1-40cc-9839-7ca2a9555353 node DatanodeRegistration(192.168.88.9:9866, datanodeUuid=fbbcb827-5b1b-4acd-a251-97a412da99d1, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769), blocks: 0, hasStaleStorage: false, processing time: 0 msecs, invalidatedBlocks: 0

2025-08-23 21:58:44,498 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(192.168.88.8:9866, datanodeUuid=5e3fedcf-07cf-4702-9b47-a4e827a92a5f, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769) storage 5e3fedcf-07cf-4702-9b47-a4e827a92a5f

2025-08-23 21:58:44,498 INFO org.apache.hadoop.net.NetworkTopology: Adding a new node: /default-rack/192.168.88.8:9866

2025-08-23 21:58:44,498 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockReportLeaseManager: Registered DN 5e3fedcf-07cf-4702-9b47-a4e827a92a5f (192.168.88.8:9866).

2025-08-23 21:58:44,548 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeDescriptor: Adding new storage ID DS-0ade9864-83fa-4d01-933d-bd926d937ee1 for DN 192.168.88.8:9866

2025-08-23 21:58:44,589 INFO BlockStateChange: BLOCK* processReport 0xba3c5becc84253a1 with lease ID 0xf16c10099c3274d6: Processing first storage report for DS-0ade9864-83fa-4d01-933d-bd926d937ee1 from datanode DatanodeRegistration(192.168.88.8:9866, datanodeUuid=5e3fedcf-07cf-4702-9b47-a4e827a92a5f, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769)

2025-08-23 21:58:44,590 INFO BlockStateChange: BLOCK* processReport 0xba3c5becc84253a1 with lease ID 0xf16c10099c3274d6: from storage DS-0ade9864-83fa-4d01-933d-bd926d937ee1 node DatanodeRegistration(192.168.88.8:9866, datanodeUuid=5e3fedcf-07cf-4702-9b47-a4e827a92a5f, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-b1ee6ae8-73a9-4763-a24f-2c840eac62b2;nsid=122842789;c=1755955721769), blocks: 0, hasStaleStorage: false, processing time: 0 msecs, invalidatedBlocks: 0

2025-08-23 22:01:04,977 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: RECEIVED SIGNAL 15: SIGTERM

2025-08-23 22:01:04,982 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.88.8

************************************************************/

2025-08-23 22:01:16,701 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.88.8

STARTUP_MSG: args = []

STARTUP_MSG: version = 3.3.6

STARTUP_MSG: classpath = /server/hadoop/etc/hadoop:/server/hadoop/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/server/hadoop/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jackson-annotations-2.12.7.jar:/server/hadoop/share/hadoop/common/lib/netty-handler-ssl-ocsp-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/metrics-core-3.2.4.jar:/server/hadoop/share/hadoop/common/lib/netty-resolver-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/commons-text-1.10.0.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/server/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/server/hadoop/share/hadoop/common/lib/jetty-server-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/netty-common-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerby-util-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/hadoop-annotations-3.3.6.jar:/server/hadoop/share/hadoop/common/lib/failureaccess-1.0.jar:/server/hadoop/share/hadoop/common/lib/jersey-json-1.20.jar:/server/hadoop/share/hadoop/common/lib/nimbus-jose-jwt-9.8.1.jar:/server/hadoop/share/hadoop/common/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-x86_64.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/snappy-java-1.1.8.2.jar:/server/hadoop/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/kerb-util-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/kerb-client-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/gson-2.9.0.jar:/server/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-native-unix-common-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-redis-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-http2-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-native-kqueue-4.1.89.Final-osx-aarch_64.jar:/server/hadoop/share/hadoop/common/lib/jetty-util-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/server/hadoop/share/hadoop/common/lib/jetty-util-ajax-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/zookeeper-3.6.3.jar:/server/hadoop/share/hadoop/common/lib/guava-27.0-jre.jar:/server/hadoop/share/hadoop/common/lib/httpcore-4.4.13.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-rxtx-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/curator-client-5.2.0.jar:/server/hadoop/share/hadoop/common/lib/netty-buffer-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-native-epoll-4.1.89.Final-linux-x86_64.jar:/server/hadoop/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-xml-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/server/hadoop/share/hadoop/common/lib/netty-resolver-dns-classes-macos-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/curator-recipes-5.2.0.jar:/server/hadoop/share/hadoop/common/lib/commons-net-3.9.0.jar:/server/hadoop/share/hadoop/common/lib/jackson-databind-2.12.7.1.jar:/server/hadoop/share/hadoop/common/lib/commons-beanutils-1.9.4.jar:/server/hadoop/share/hadoop/common/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-aarch_64.jar:/server/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/server/hadoop/share/hadoop/common/lib/jettison-1.5.4.jar:/server/hadoop/share/hadoop/common/lib/slf4j-api-1.7.36.jar:/server/hadoop/share/hadoop/common/lib/jsr305-3.0.2.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-dns-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:/server/hadoop/share/hadoop/common/lib/kerb-core-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jetty-servlet-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-socks-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/commons-io-2.8.0.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-classes-epoll-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/re2j-1.1.jar:/server/hadoop/share/hadoop/common/lib/netty-handler-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/commons-daemon-1.0.13.jar:/server/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar:/server/hadoop/share/hadoop/common/lib/commons-codec-1.15.jar:/server/hadoop/share/hadoop/common/lib/woodstox-core-5.4.0.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-stomp-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/zookeeper-jute-3.6.3.jar:/server/hadoop/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/kerby-config-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/server/hadoop/share/hadoop/common/lib/stax2-api-4.2.1.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-http-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jakarta.activation-api-1.2.1.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-classes-kqueue-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/server/hadoop/share/hadoop/common/lib/hadoop-shaded-guava-1.1.1.jar:/server/hadoop/share/hadoop/common/lib/jackson-core-2.12.7.jar:/server/hadoop/share/hadoop/common/lib/commons-configuration2-2.8.0.jar:/server/hadoop/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/server/hadoop/share/hadoop/common/lib/jetty-security-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/token-provider-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/reload4j-1.2.22.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-haproxy-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/curator-framework-5.2.0.jar:/server/hadoop/share/hadoop/common/lib/jsch-0.1.55.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-native-kqueue-4.1.89.Final-osx-x86_64.jar:/server/hadoop/share/hadoop/common/lib/checker-qual-2.5.2.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-memcache-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/jetty-http-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/netty-handler-proxy-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-native-epoll-4.1.89.Final-linux-aarch_64.jar:/server/hadoop/share/hadoop/common/lib/jetty-webapp-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/jersey-server-1.19.4.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-sctp-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/netty-resolver-dns-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jul-to-slf4j-1.7.36.jar:/server/hadoop/share/hadoop/common/lib/commons-lang3-3.12.0.jar:/server/hadoop/share/hadoop/common/lib/kerb-common-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jetty-io-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/netty-transport-udt-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/jetty-xml-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/server/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/server/hadoop/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/server/hadoop/share/hadoop/common/lib/jersey-servlet-1.19.4.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-smtp-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/dnsjava-2.1.7.jar:/server/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:/server/hadoop/share/hadoop/common/lib/netty-codec-mqtt-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/kerb-server-1.0.1.jar:/server/hadoop/share/hadoop/common/lib/httpclient-4.5.13.jar:/server/hadoop/share/hadoop/common/lib/netty-all-4.1.89.Final.jar:/server/hadoop/share/hadoop/common/lib/j2objc-annotations-1.1.jar:/server/hadoop/share/hadoop/common/lib/jersey-core-1.19.4.jar:/server/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/server/hadoop/share/hadoop/common/lib/hadoop-auth-3.3.6.jar:/server/hadoop/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/server/hadoop/share/hadoop/common/lib/commons-compress-1.21.jar:/server/hadoop/share/hadoop/common/lib/avro-1.7.7.jar:/server/hadoop/share/hadoop/common/hadoop-registry-3.3.6.jar:/server/hadoop/share/hadoop/common/hadoop-common-3.3.6.jar:/server/hadoop/share/hadoop/common/hadoop-kms-3.3.6.jar:/server/hadoop/share/hadoop/common/hadoop-nfs-3.3.6.jar:/server/hadoop/share/hadoop/common/hadoop-common-3.3.6-tests.jar:/server/hadoop/share/hadoop/hdfs:/server/hadoop/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/server/hadoop/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jackson-annotations-2.12.7.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-handler-ssl-ocsp-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/metrics-core-3.2.4.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-resolver-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-text-1.10.0.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-server-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-common-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/hadoop-annotations-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/lib/failureaccess-1.0.jar:/server/hadoop/share/hadoop/hdfs/lib/jersey-json-1.20.jar:/server/hadoop/share/hadoop/hdfs/lib/nimbus-jose-jwt-9.8.1.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-x86_64.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/snappy-java-1.1.8.2.jar:/server/hadoop/share/hadoop/hdfs/lib/okio-2.8.0.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/gson-2.9.0.jar:/server/hadoop/share/hadoop/hdfs/lib/kotlin-stdlib-1.4.10.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-native-unix-common-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-redis-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-http2-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.89.Final-osx-aarch_64.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-util-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-util-ajax-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/zookeeper-3.6.3.jar:/server/hadoop/share/hadoop/hdfs/lib/guava-27.0-jre.jar:/server/hadoop/share/hadoop/hdfs/lib/httpcore-4.4.13.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-rxtx-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/curator-client-5.2.0.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-buffer-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.89.Final-linux-x86_64.jar:/server/hadoop/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-xml-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-classes-macos-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/curator-recipes-5.2.0.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-net-3.9.0.jar:/server/hadoop/share/hadoop/hdfs/lib/jackson-databind-2.12.7.1.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-beanutils-1.9.4.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.89.Final-osx-aarch_64.jar:/server/hadoop/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/server/hadoop/share/hadoop/hdfs/lib/jettison-1.5.4.jar:/server/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.2.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-dns-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/animal-sniffer-annotations-1.17.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-servlet-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-socks-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-io-2.8.0.jar:/server/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-classes-epoll-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/re2j-1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-handler-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-codec-1.15.jar:/server/hadoop/share/hadoop/hdfs/lib/woodstox-core-5.4.0.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-stomp-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/zookeeper-jute-3.6.3.jar:/server/hadoop/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/server/hadoop/share/hadoop/hdfs/lib/stax2-api-4.2.1.jar:/server/hadoop/share/hadoop/hdfs/lib/kotlin-stdlib-common-1.4.10.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-http-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-3.10.6.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/jakarta.activation-api-1.2.1.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-classes-kqueue-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/server/hadoop/share/hadoop/hdfs/lib/hadoop-shaded-guava-1.1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jackson-core-2.12.7.jar:/server/hadoop/share/hadoop/hdfs/lib/okhttp-4.9.3.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-configuration2-2.8.0.jar:/server/hadoop/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-security-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/reload4j-1.2.22.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-haproxy-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/curator-framework-5.2.0.jar:/server/hadoop/share/hadoop/hdfs/lib/jsch-0.1.55.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.89.Final-osx-x86_64.jar:/server/hadoop/share/hadoop/hdfs/lib/checker-qual-2.5.2.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-memcache-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-http-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-handler-proxy-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.89.Final-linux-aarch_64.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-webapp-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/jersey-server-1.19.4.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-sctp-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/HikariCP-java7-2.4.12.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-lang3-3.12.0.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-io-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-transport-udt-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jetty-xml-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/hdfs/lib/paranamer-2.3.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/server/hadoop/share/hadoop/hdfs/lib/jersey-servlet-1.19.4.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-smtp-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/dnsjava-2.1.7.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-codec-mqtt-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/server/hadoop/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/httpclient-4.5.13.jar:/server/hadoop/share/hadoop/hdfs/lib/netty-all-4.1.89.Final.jar:/server/hadoop/share/hadoop/hdfs/lib/j2objc-annotations-1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/jersey-core-1.19.4.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/server/hadoop/share/hadoop/hdfs/lib/hadoop-auth-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/server/hadoop/share/hadoop/hdfs/lib/commons-compress-1.21.jar:/server/hadoop/share/hadoop/hdfs/lib/avro-1.7.7.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.6-tests.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.6-tests.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.3.6.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.3.6-tests.jar:/server/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.3.6-tests.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.6-tests.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.3.6.jar:/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.6.jar:/server/hadoop/share/hadoop/yarn:/server/hadoop/share/hadoop/yarn/lib/snakeyaml-2.0.jar:/server/hadoop/share/hadoop/yarn/lib/bcpkix-jdk15on-1.68.jar:/server/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.12.7.jar:/server/hadoop/share/hadoop/yarn/lib/jetty-client-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jersey-client-1.19.4.jar:/server/hadoop/share/hadoop/yarn/lib/javax-websocket-client-impl-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jline-3.9.0.jar:/server/hadoop/share/hadoop/yarn/lib/javax.websocket-client-api-1.0.jar:/server/hadoop/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/server/hadoop/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/server/hadoop/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/server/hadoop/share/hadoop/yarn/lib/jetty-annotations-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jetty-jndi-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/server/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-base-2.12.7.jar:/server/hadoop/share/hadoop/yarn/lib/json-io-2.5.1.jar:/server/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/server/hadoop/share/hadoop/yarn/lib/objenesis-2.6.jar:/server/hadoop/share/hadoop/yarn/lib/asm-tree-9.4.jar:/server/hadoop/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/server/hadoop/share/hadoop/yarn/lib/websocket-common-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jetty-plus-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jna-5.2.0.jar:/server/hadoop/share/hadoop/yarn/lib/javax.websocket-api-1.0.jar:/server/hadoop/share/hadoop/yarn/lib/javax-websocket-server-impl-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/websocket-api-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/websocket-client-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.12.7.jar:/server/hadoop/share/hadoop/yarn/lib/bcprov-jdk15on-1.68.jar:/server/hadoop/share/hadoop/yarn/lib/asm-commons-9.4.jar:/server/hadoop/share/hadoop/yarn/lib/guice-4.0.jar:/server/hadoop/share/hadoop/yarn/lib/websocket-servlet-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/websocket-server-9.4.51.v20230217.jar:/server/hadoop/share/hadoop/yarn/lib/jakarta.xml.bind-api-2.3.2.jar:/server/hadoop/share/hadoop/yarn/lib/fst-2.50.jar:/server/hadoop/share/hadoop/yarn/lib/jersey-guice-1.19.4.jar:/server/hadoop/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/server/hadoop/share/hadoop/yarn/lib/java-util-1.9.0.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-registry-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-common-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-client-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-applications-mawo-core-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-router-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-services-api-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-api-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-services-core-3.3.6.jar:/server/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.3.6.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1be78238728da9266a4f88195058f08fd012bf9c; compiled by 'ubuntu' on 2023-06-18T08:22Z

STARTUP_MSG: java = 1.8.0_401

************************************************************/

2025-08-23 22:01:16,708 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2025-08-23 22:01:16,801 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: createNameNode []

2025-08-23 22:01:16,913 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2025-08-23 22:01:16,997 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2025-08-23 22:01:16,997 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system started

2025-08-23 22:01:17,072 INFO org.apache.hadoop.hdfs.server.namenode.NameNodeUtils: fs.defaultFS is hdfs://master:8020

2025-08-23 22:01:17,072 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Clients should use master:8020 to access this namenode/service.

2025-08-23 22:01:17,291 INFO org.apache.hadoop.util.JvmPauseMonitor: Starting JVM pause monitor

2025-08-23 22:01:17,380 INFO org.apache.hadoop.hdfs.DFSUtil: Filter initializers set : org.apache.hadoop.http.lib.StaticUserWebFilter,org.apache.hadoop.hdfs.web.AuthFilterInitializer

2025-08-23 22:01:17,386 INFO org.apache.hadoop.hdfs.DFSUtil: Starting Web-server for hdfs at: http://0.0.0.0:9870

2025-08-23 22:01:17,398 INFO org.eclipse.jetty.util.log: Logging initialized @1267ms to org.eclipse.jetty.util.log.Slf4jLog

2025-08-23 22:01:17,499 WARN org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets. Reason: Could not read signature secret file: /root/hadoop-http-auth-signature-secret

2025-08-23 22:01:17,515 INFO org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.namenode is not defined

2025-08-23 22:01:17,522 INFO org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2025-08-23 22:01:17,524 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context hdfs

2025-08-23 22:01:17,524 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2025-08-23 22:01:17,524 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2025-08-23 22:01:17,526 INFO org.apache.hadoop.http.HttpServer2: Added filter AuthFilter (class=org.apache.hadoop.hdfs.web.AuthFilter) to context hdfs

2025-08-23 22:01:17,526 INFO org.apache.hadoop.http.HttpServer2: Added filter AuthFilter (class=org.apache.hadoop.hdfs.web.AuthFilter) to context static

2025-08-23 22:01:17,526 INFO org.apache.hadoop.http.HttpServer2: Added filter AuthFilter (class=org.apache.hadoop.hdfs.web.AuthFilter) to context logs

2025-08-23 22:01:17,559 INFO org.apache.hadoop.http.HttpServer2: addJerseyResourcePackage: packageName=org.apache.hadoop.hdfs.server.namenode.web.resources;org.apache.hadoop.hdfs.web.resources, pathSpec=/webhdfs/v1/*

2025-08-23 22:01:17,569 INFO org.apache.hadoop.http.HttpServer2: Jetty bound to port 9870

2025-08-23 22:01:17,571 INFO org.eclipse.jetty.server.Server: jetty-9.4.51.v20230217; built: 2023-02-17T08:19:37.309Z; git: b45c405e4544384de066f814ed42ae3dceacdd49; jvm 1.8.0_401-b10

2025-08-23 22:01:17,598 INFO org.eclipse.jetty.server.session: DefaultSessionIdManager workerName=node0

2025-08-23 22:01:17,598 INFO org.eclipse.jetty.server.session: No SessionScavenger set, using defaults

2025-08-23 22:01:17,599 INFO org.eclipse.jetty.server.session: node0 Scavenging every 660000ms

2025-08-23 22:01:17,618 WARN org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets. Reason: Could not read signature secret file: /root/hadoop-http-auth-signature-secret

2025-08-23 22:01:17,621 INFO org.eclipse.jetty.server.handler.ContextHandler: Started o.e.j.s.ServletContextHandler@465232e9{logs,/logs,file:///server/hadoop/logs/,AVAILABLE}

2025-08-23 22:01:17,622 INFO org.eclipse.jetty.server.handler.ContextHandler: Started o.e.j.s.ServletContextHandler@7486b455{static,/static,file:///server/hadoop/share/hadoop/hdfs/webapps/static/,AVAILABLE}

2025-08-23 22:01:17,691 INFO org.eclipse.jetty.server.handler.ContextHandler: Started o.e.j.w.WebAppContext@2d6c53fc{hdfs,/,file:///server/hadoop/share/hadoop/hdfs/webapps/hdfs/,AVAILABLE}{file:/server/hadoop/share/hadoop/hdfs/webapps/hdfs}

2025-08-23 22:01:17,702 INFO org.eclipse.jetty.server.AbstractConnector: Started ServerConnector@21d03963{HTTP/1.1, (http/1.1)}{0.0.0.0:9870}

2025-08-23 22:01:17,702 INFO org.eclipse.jetty.server.Server: Started @1571ms

2025-08-23 22:01:18,186 INFO org.apache.hadoop.hdfs.server.common.Util: Assuming 'file' scheme for path /server/hadoop/data/nn in configuration.

2025-08-23 22:01:18,186 INFO org.apache.hadoop.hdfs.server.common.Util: Assuming 'file' scheme for path /server/hadoop/data/nn in configuration.

2025-08-23 22:01:18,186 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Only one image storage directory (dfs.namenode.name.dir) configured. Beware of data loss due to lack of redundant storage directories!

2025-08-23 22:01:18,186 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Only one namespace edits storage directory (dfs.namenode.edits.dir) configured. Beware of data loss due to lack of redundant storage directories!

2025-08-23 22:01:18,192 INFO org.apache.hadoop.hdfs.server.common.Util: Assuming 'file' scheme for path /server/hadoop/data/nn in configuration.

2025-08-23 22:01:18,192 INFO org.apache.hadoop.hdfs.server.common.Util: Assuming 'file' scheme for path /server/hadoop/data/nn in configuration.

2025-08-23 22:01:18,245 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Edit logging is async:true

2025-08-23 22:01:18,277 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: KeyProvider: null

2025-08-23 22:01:18,280 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsLock is fair: true

2025-08-23 22:01:18,280 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2025-08-23 22:01:18,288 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2025-08-23 22:01:18,288 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: supergroup = supergroup

2025-08-23 22:01:18,288 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isPermissionEnabled = true

2025-08-23 22:01:18,288 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isStoragePolicyEnabled = true

2025-08-23 22:01:18,289 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: HA Enabled: false

2025-08-23 22:01:18,344 INFO org.apache.hadoop.hdfs.server.common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2025-08-23 22:01:18,528 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.block.invalidate.limit : configured=1000, counted=60, effected=1000

2025-08-23 22:01:18,528 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2025-08-23 22:01:18,532 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2025-08-23 22:01:18,532 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: The block deletion will start around 2025 Aug 23 22:01:18

2025-08-23 22:01:18,535 INFO org.apache.hadoop.util.GSet: Computing capacity for map BlocksMap

2025-08-23 22:01:18,535 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2025-08-23 22:01:18,536 INFO org.apache.hadoop.util.GSet: 2.0% max memory 752 MB = 15.0 MB

2025-08-23 22:01:18,536 INFO org.apache.hadoop.util.GSet: capacity = 2^21 = 2097152 entries

2025-08-23 22:01:18,546 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: Storage policy satisfier is disabled

2025-08-23 22:01:18,546 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.block.access.token.enable = false

2025-08-23 22:01:18,564 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.999

2025-08-23 22:01:18,565 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2025-08-23 22:01:18,565 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2025-08-23 22:01:18,565 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: defaultReplication = 3

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplication = 512

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: minReplication = 1

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplicationStreams = 2

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: encryptDataTransfer = false

2025-08-23 22:01:18,566 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2025-08-23 22:01:18,637 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2025-08-23 22:01:18,638 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2025-08-23 22:01:18,638 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2025-08-23 22:01:18,638 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

2025-08-23 22:01:18,658 INFO org.apache.hadoop.util.GSet: Computing capacity for map INodeMap

2025-08-23 22:01:18,658 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2025-08-23 22:01:18,658 INFO org.apache.hadoop.util.GSet: 1.0% max memory 752 MB = 7.5 MB

2025-08-23 22:01:18,658 INFO org.apache.hadoop.util.GSet: capacity = 2^20 = 1048576 entries

2025-08-23 22:01:18,659 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: ACLs enabled? true

2025-08-23 22:01:18,659 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: POSIX ACL inheritance enabled? true

2025-08-23 22:01:18,659 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: XAttrs enabled? true

2025-08-23 22:01:18,659 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Caching file names occurring more than 10 times

2025-08-23 22:01:18,667 INFO org.apache.hadoop.hdfs.server.namenode.snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2025-08-23 22:01:18,670 INFO org.apache.hadoop.hdfs.server.namenode.snapshot.SnapshotManager: SkipList is disabled

2025-08-23 22:01:18,676 INFO org.apache.hadoop.util.GSet: Computing capacity for map cachedBlocks

2025-08-23 22:01:18,676 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2025-08-23 22:01:18,676 INFO org.apache.hadoop.util.GSet: 0.25% max memory 752 MB = 1.9 MB

2025-08-23 22:01:18,676 INFO org.apache.hadoop.util.GSet: capacity = 2^18 = 262144 entries

2025-08-23 22:01:18,684 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2025-08-23 22:01:18,684 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2025-08-23 22:01:18,684 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2025-08-23 22:01:18,687 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache on namenode is enabled

2025-08-23 22:01:18,687 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2025-08-23 22:01:18,689 INFO org.apache.hadoop.util.GSet: Computing capacity for map NameNodeRetryCache

2025-08-23 22:01:18,689 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2025-08-23 22:01:18,689 INFO org.apache.hadoop.util.GSet: 0.029999999329447746% max memory 752 MB = 231.0 KB

2025-08-23 22:01:18,689 INFO org.apache.hadoop.util.GSet: capacity = 2^15 = 32768 entries

2025-08-23 22:01:18,721 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /server/hadoop/data/nn/in_use.lock acquired by nodename 12582@master

2025-08-23 22:01:18,750 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Recovering unfinalized segments in /server/hadoop/data/nn/current

2025-08-23 22:01:18,800 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Finalizing edits file /server/hadoop/data/nn/current/edits_inprogress_0000000000000000003 -> /server/hadoop/data/nn/current/edits_0000000000000000003-0000000000000000003

2025-08-23 22:01:18,839 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Planning to load image: FSImageFile(file=/server/hadoop/data/nn/current/fsimage_0000000000000000000, cpktTxId=0000000000000000000)

2025-08-23 22:01:18,940 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode: Loading 1 INodes.

2025-08-23 22:01:18,948 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode: Successfully loaded 1 inodes

2025-08-23 22:01:18,960 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode: Completed update blocks map and name cache, total waiting duration 0ms.

2025-08-23 22:01:18,968 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatProtobuf: Loaded FSImage in 0 seconds.

2025-08-23 22:01:18,969 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Loaded image for txid 0 from /server/hadoop/data/nn/current/fsimage_0000000000000000000

2025-08-23 22:01:18,973 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Reading org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream@372b0d86 expecting start txid #1

2025-08-23 22:01:18,973 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Start loading edits file /server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001 maxTxnsToRead = 9223372036854775807

2025-08-23 22:01:18,974 INFO org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream: Fast-forwarding stream '/server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001' to transaction ID 1

2025-08-23 22:01:19,030 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Loaded 1 edits file(s) (the last named /server/hadoop/data/nn/current/edits_0000000000000000001-0000000000000000001) of total size 1048576.0, total edits 1.0, total load time 35.0 ms

2025-08-23 22:01:19,032 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Need to save fs image? false (staleImage=false, haEnabled=false, isRollingUpgrade=false)

2025-08-23 22:01:19,050 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Starting log segment at 4

2025-08-23 22:01:19,143 INFO org.apache.hadoop.hdfs.server.namenode.NameCache: initialized with 0 entries 0 lookups

2025-08-23 22:01:19,144 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Finished loading FSImage in 444 msecs

2025-08-23 22:01:19,375 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: RPC server is binding to hdfs:8020

2025-08-23 22:01:19,375 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Enable NameNode state context:false

2025-08-23 22:01:19,384 INFO org.apache.hadoop.ipc.CallQueueManager: Using callQueue: class java.util.concurrent.LinkedBlockingQueue, queueCapacity: 10000, scheduler: class org.apache.hadoop.ipc.DefaultRpcScheduler, ipcBackoff: false.

2025-08-23 22:01:19,396 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Stopping services started for active state

2025-08-23 22:01:19,396 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Ending log segment 4, 4

2025-08-23 22:01:19,398 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 2 Total time for transactions(ms): 1 Number of transactions batched in Syncs: 3 Number of syncs: 3 SyncTimes(ms): 7

2025-08-23 22:01:19,399 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Finalizing edits file /server/hadoop/data/nn/current/edits_inprogress_0000000000000000004 -> /server/hadoop/data/nn/current/edits_0000000000000000004-0000000000000000005

2025-08-23 22:01:19,401 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: FSEditLogAsync was interrupted, exiting

2025-08-23 22:01:19,418 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Stopping services started for active state

2025-08-23 22:01:19,419 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Stopping services started for standby state

2025-08-23 22:01:19,426 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.w.WebAppContext@2d6c53fc{hdfs,/,null,STOPPED}{file:/server/hadoop/share/hadoop/hdfs/webapps/hdfs}

2025-08-23 22:01:19,431 INFO org.eclipse.jetty.server.AbstractConnector: Stopped ServerConnector@21d03963{HTTP/1.1, (http/1.1)}{0.0.0.0:9870}

2025-08-23 22:01:19,431 INFO org.eclipse.jetty.server.session: node0 Stopped scavenging

2025-08-23 22:01:19,431 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@7486b455{static,/static,file:///server/hadoop/share/hadoop/hdfs/webapps/static/,STOPPED}

2025-08-23 22:01:19,431 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@465232e9{logs,/logs,file:///server/hadoop/logs/,STOPPED}

2025-08-23 22:01:19,462 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping NameNode metrics system...

2025-08-23 22:01:19,463 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system stopped.

2025-08-23 22:01:19,464 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system shutdown complete.

2025-08-23 22:01:19,485 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: Failed to start namenode.

java.net.SocketException: Call From hdfs to null:0 failed on socket exception: java.net.SocketException: Unresolved address; For more details see: http://wiki.apache.org/hadoop/SocketException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:930)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:888)

at org.apache.hadoop.ipc.Server.bind(Server.java:676)

at org.apache.hadoop.ipc.Server$Listener.<init>(Server.java:1284)

at org.apache.hadoop.ipc.Server.<init>(Server.java:3211)

at org.apache.hadoop.ipc.RPC$Server.<init>(RPC.java:1195)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server.<init>(ProtobufRpcEngine2.java:485)

at org.apache.hadoop.ipc.ProtobufRpcEngine2.getServer(ProtobufRpcEngine2.java:387)

at org.apache.hadoop.ipc.RPC$Builder.build(RPC.java:986)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.<init>(NameNodeRpcServer.java:474)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createRpcServer(NameNode.java:878)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:784)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1033)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1008)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1782)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1847)

Caused by: java.net.SocketException: Unresolved address

at sun.nio.ch.Net.translateToSocketException(Net.java:130)

at sun.nio.ch.Net.translateException(Net.java:156)

at sun.nio.ch.Net.translateException(Net.java:162)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:76)

at org.apache.hadoop.ipc.Server.bind(Server.java:659)

... 13 more

Caused by: java.nio.channels.UnresolvedAddressException

at sun.nio.ch.Net.checkAddress(Net.java:100)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:220)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

... 14 more

2025-08-23 22:01:19,488 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1: java.net.SocketException: Call From hdfs to null:0 failed on socket exception: java.net.SocketException: Unresolved address; For more details see: http://wiki.apache.org/hadoop/SocketException

2025-08-23 22:01:19,497 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.88.8

************************************************************/

[root@master hadoop]#
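
Reading the log above: `fs.defaultFS` is reported as `hdfs://master:8020`, but the RPC server then tries to bind to `hdfs:8020` and fails with `Unresolved address`. One plausible reading (an assumption, not something the log proves) is that an RPC address setting such as `dfs.namenode.rpc-address` holds `hdfs` as the host name, and that name does not resolve on this machine. A minimal sketch for checking which host names the local resolver knows; the helper names `split_host_port` and `resolves` are made up for illustration:

```python
import socket
from typing import Tuple


def split_host_port(address: str) -> Tuple[str, int]:
    """Split a 'host:port' string as it appears in the log into (host, port)."""
    host, _, port = address.rpartition(":")
    return host, int(port)


def resolves(host: str) -> bool:
    """Return True if the local resolver maps host to at least one address."""
    try:
        return len(socket.getaddrinfo(host, None)) > 0
    except (socket.gaierror, UnicodeError):
        # gaierror: the name is unknown to /etc/hosts and DNS.
        return False


if __name__ == "__main__":
    # Both addresses are taken from the log lines above.
    for address in ("master:8020", "hdfs:8020"):
        host, port = split_host_port(address)
        print(f"{address}: host {host!r} resolves -> {resolves(host)}")
```

If `hdfs` turns out not to resolve while `master` does, the address settings in `core-site.xml` and `hdfs-site.xml` are the first place to look for a value that uses `hdfs` where a host name was intended.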

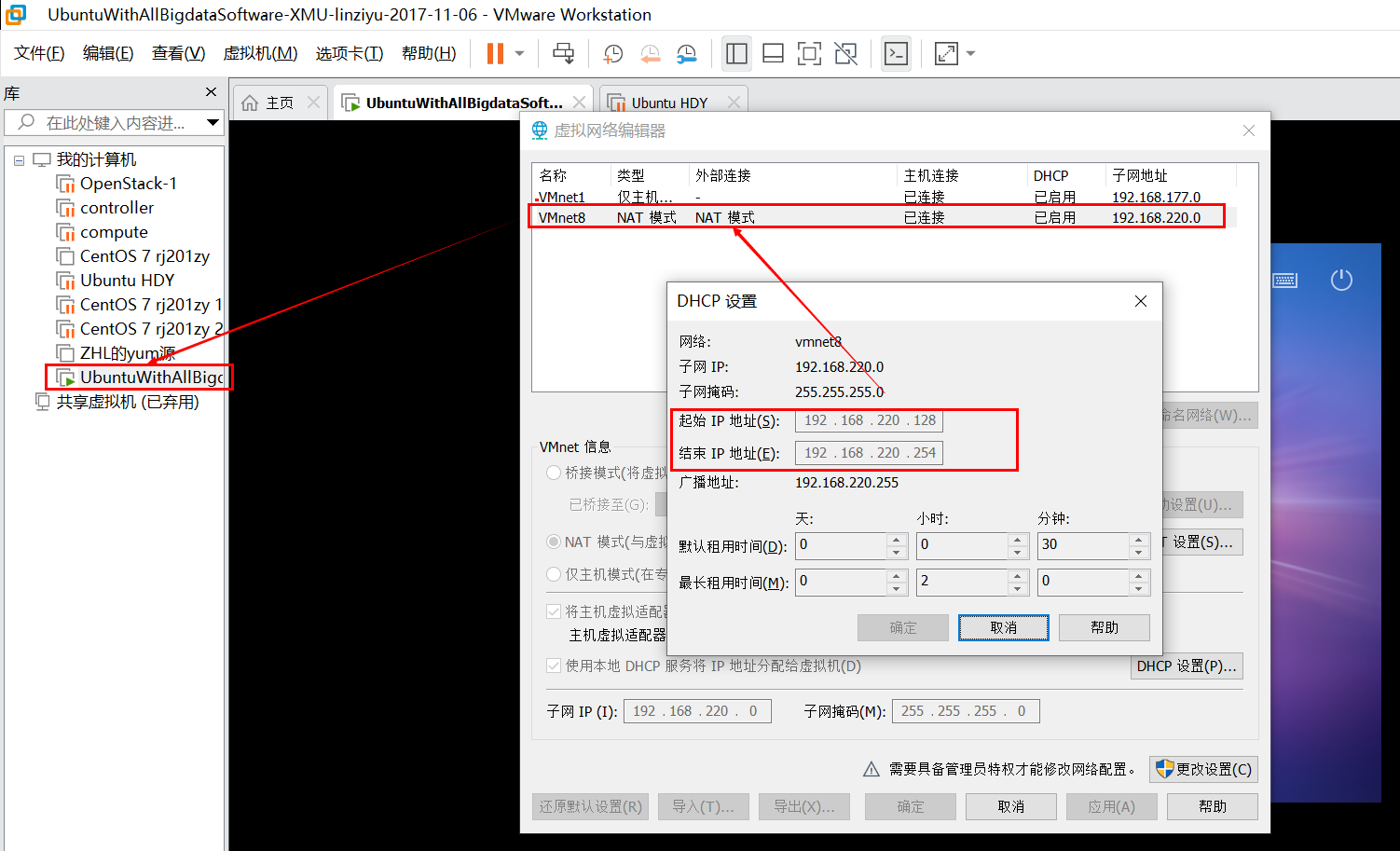

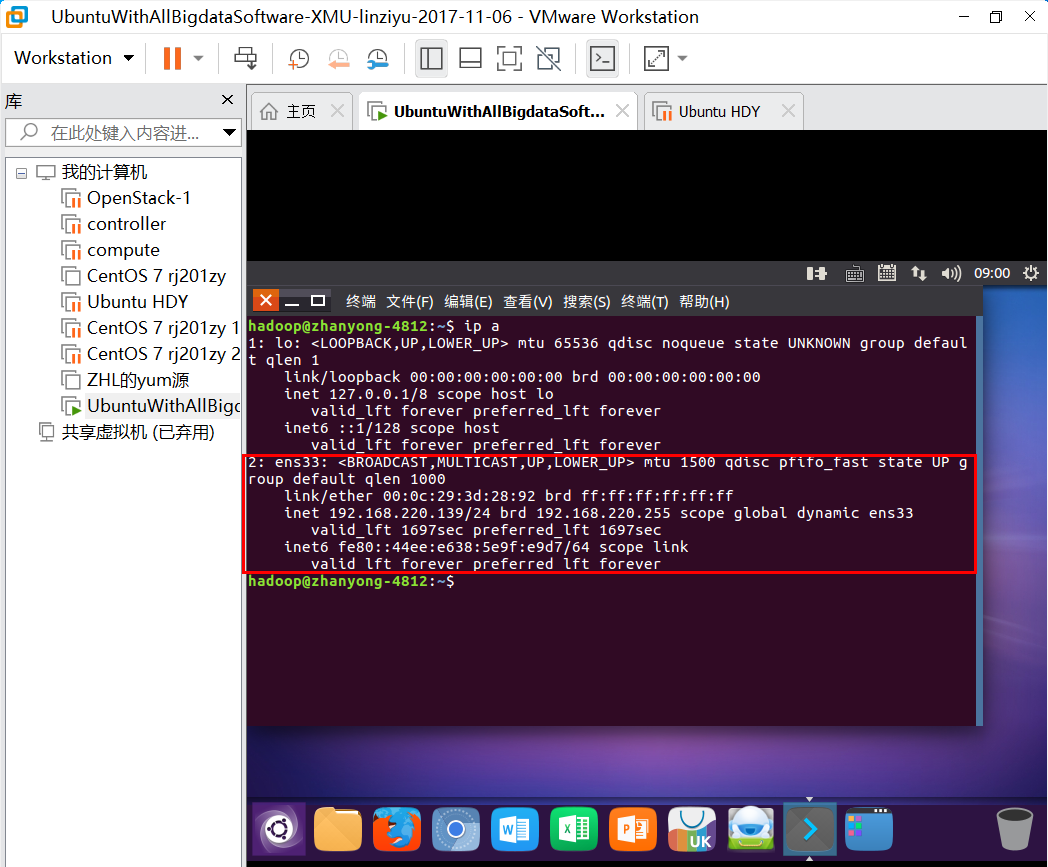

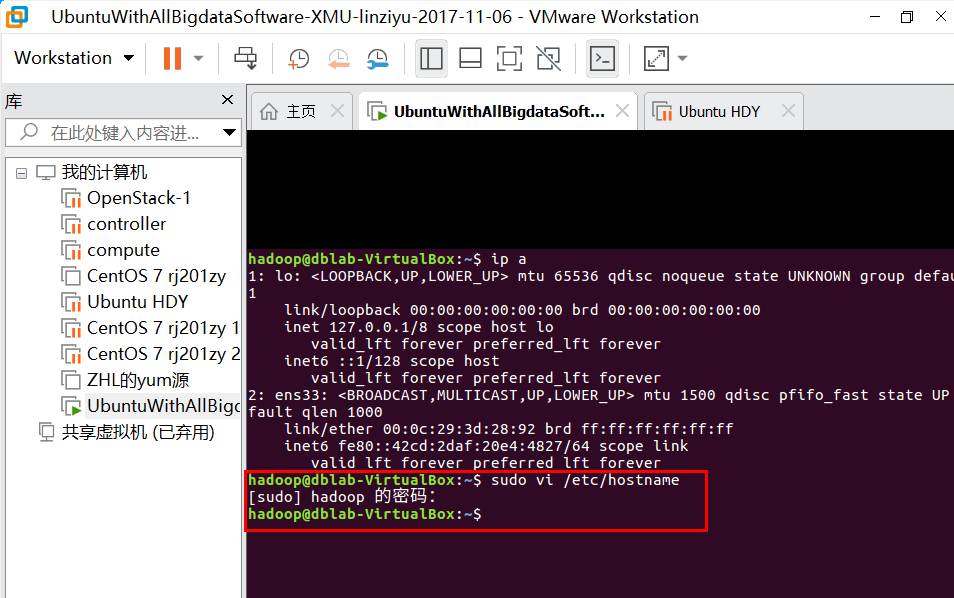

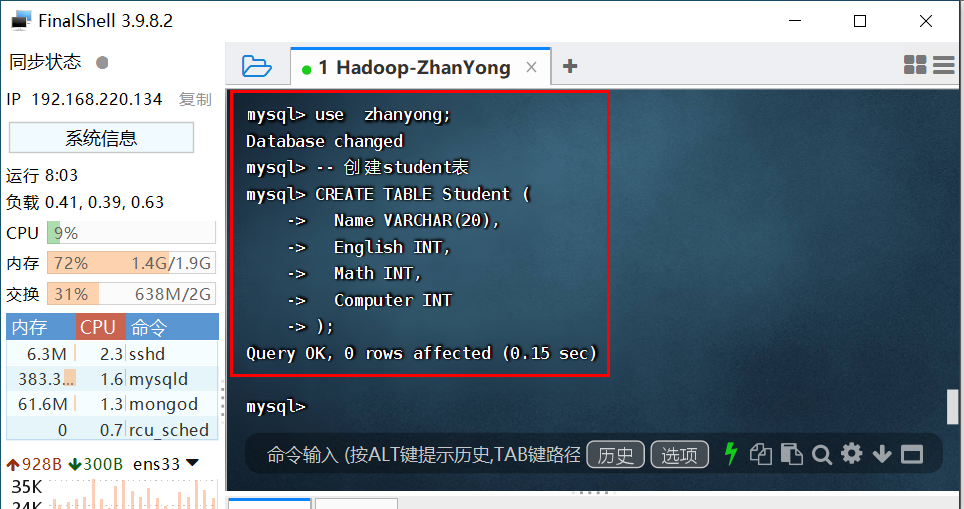

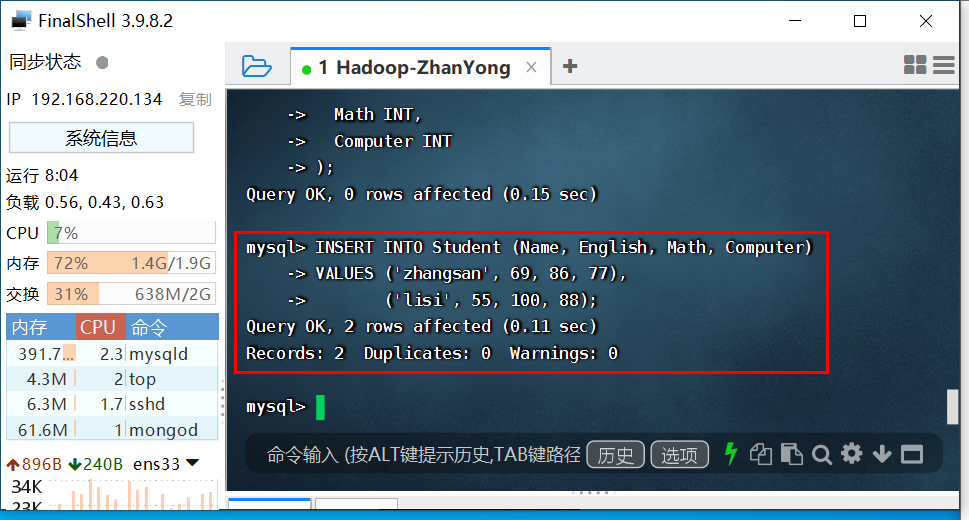

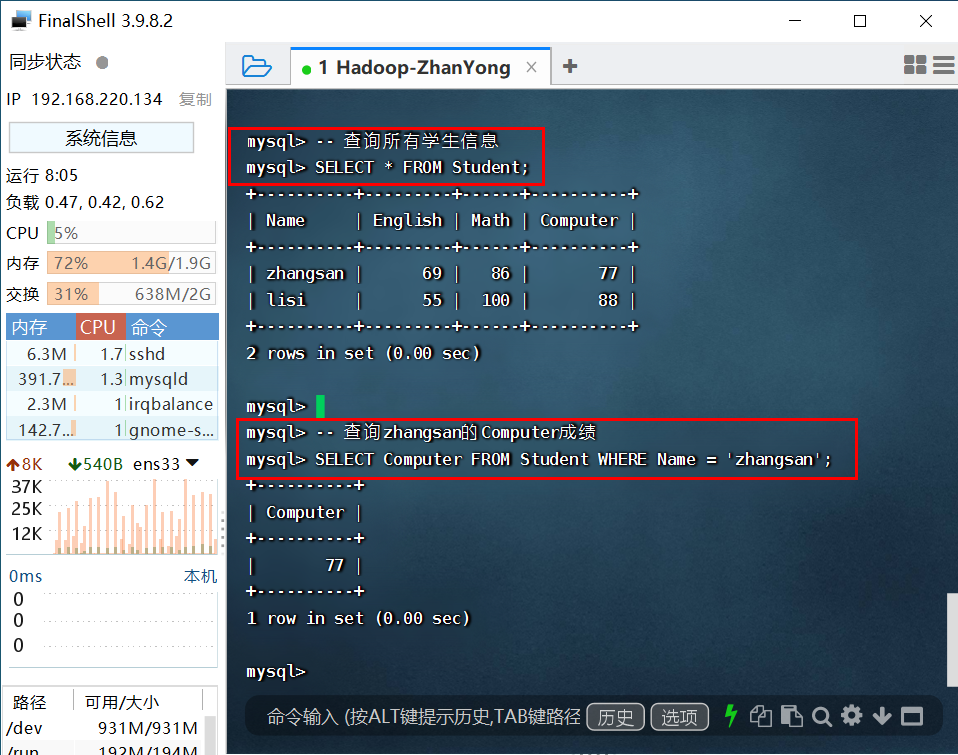

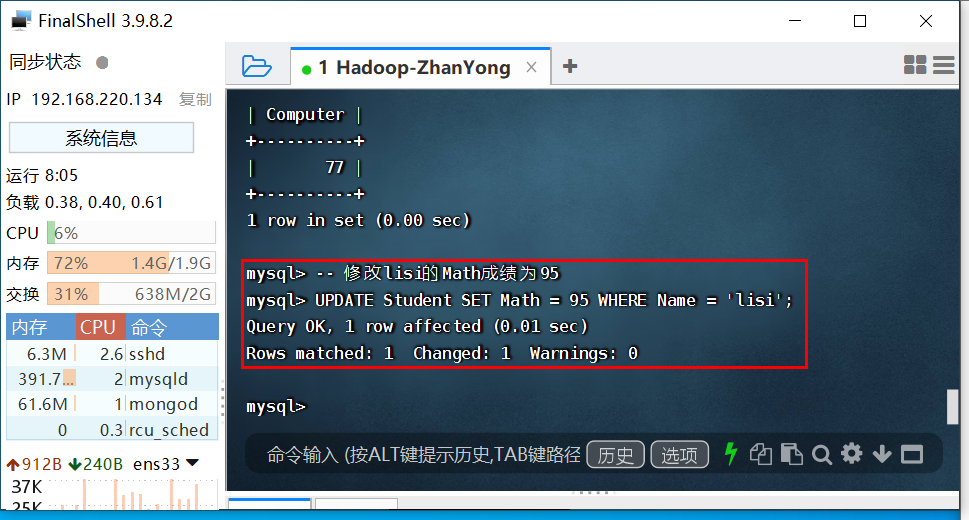

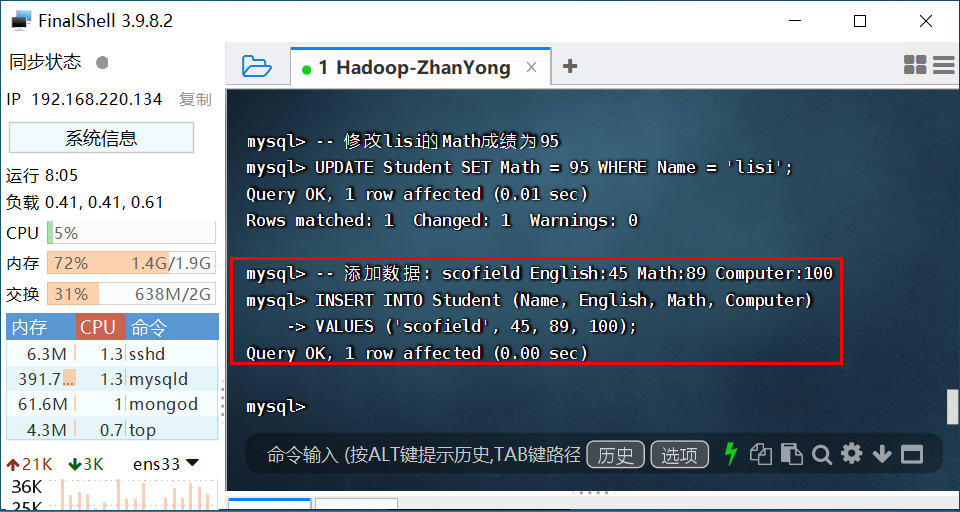

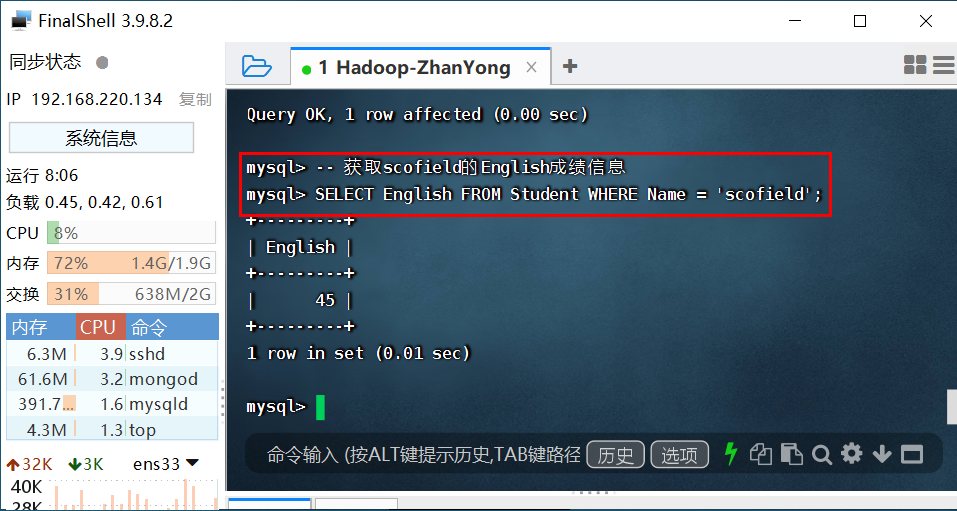

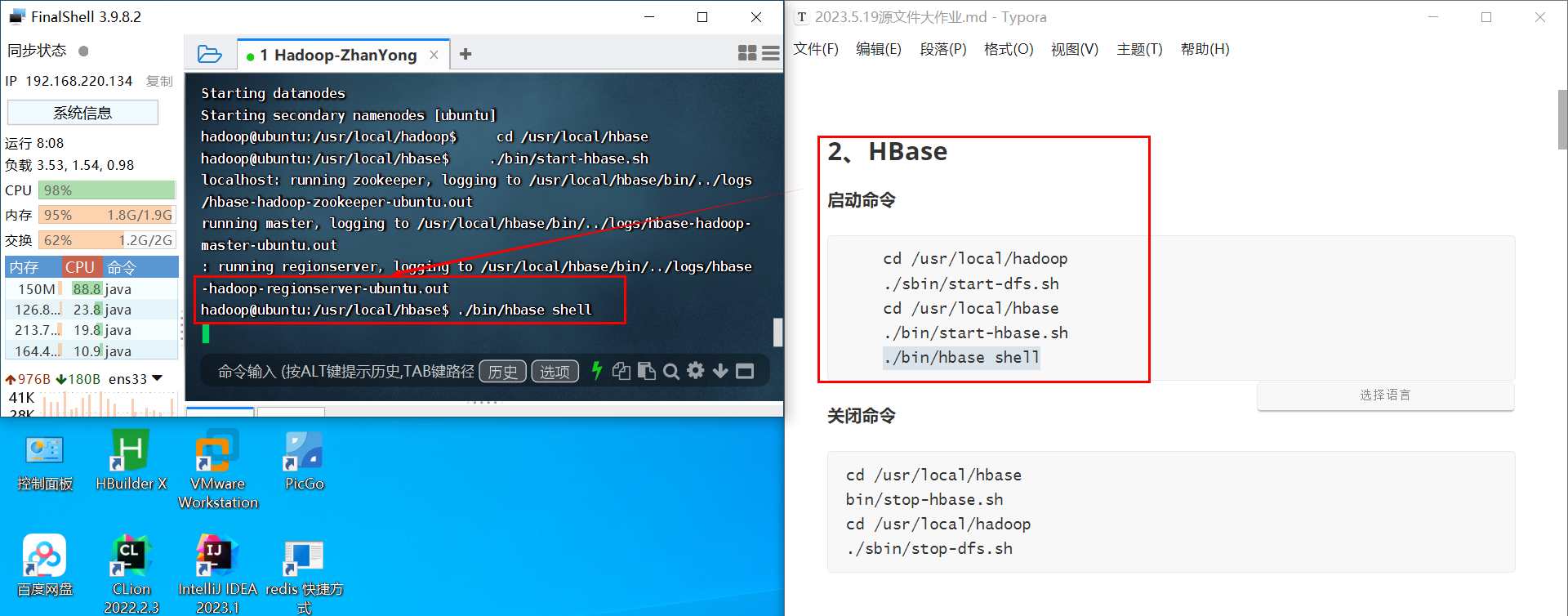

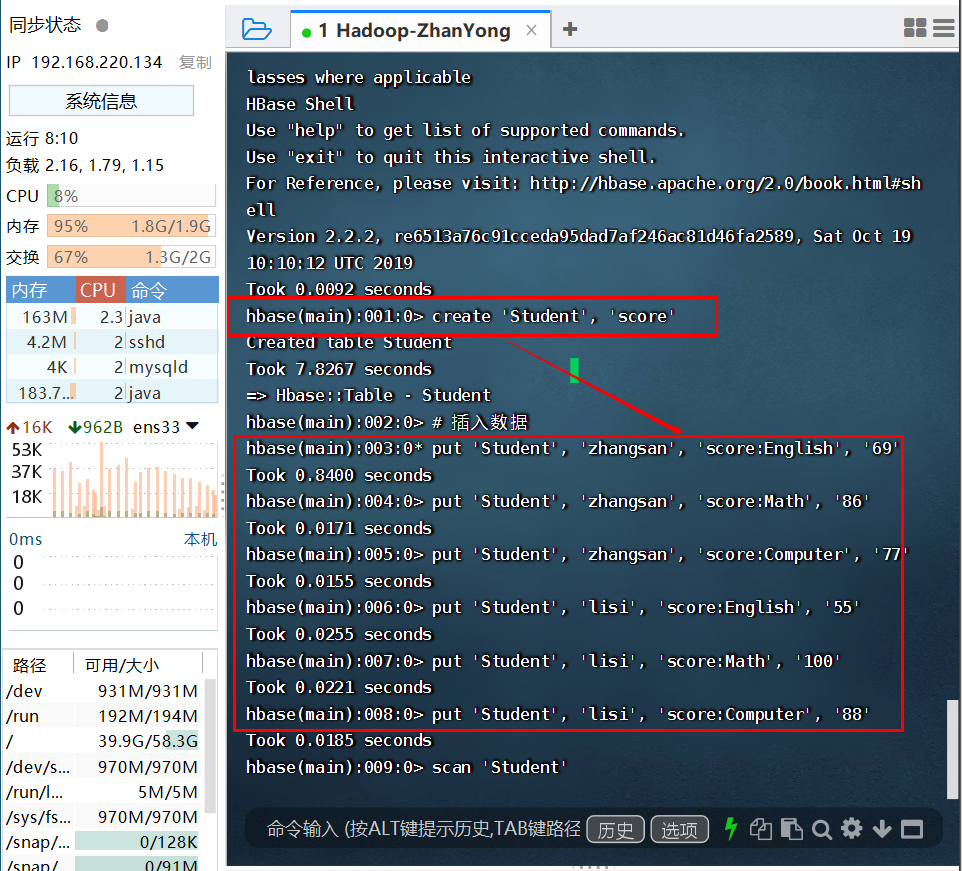

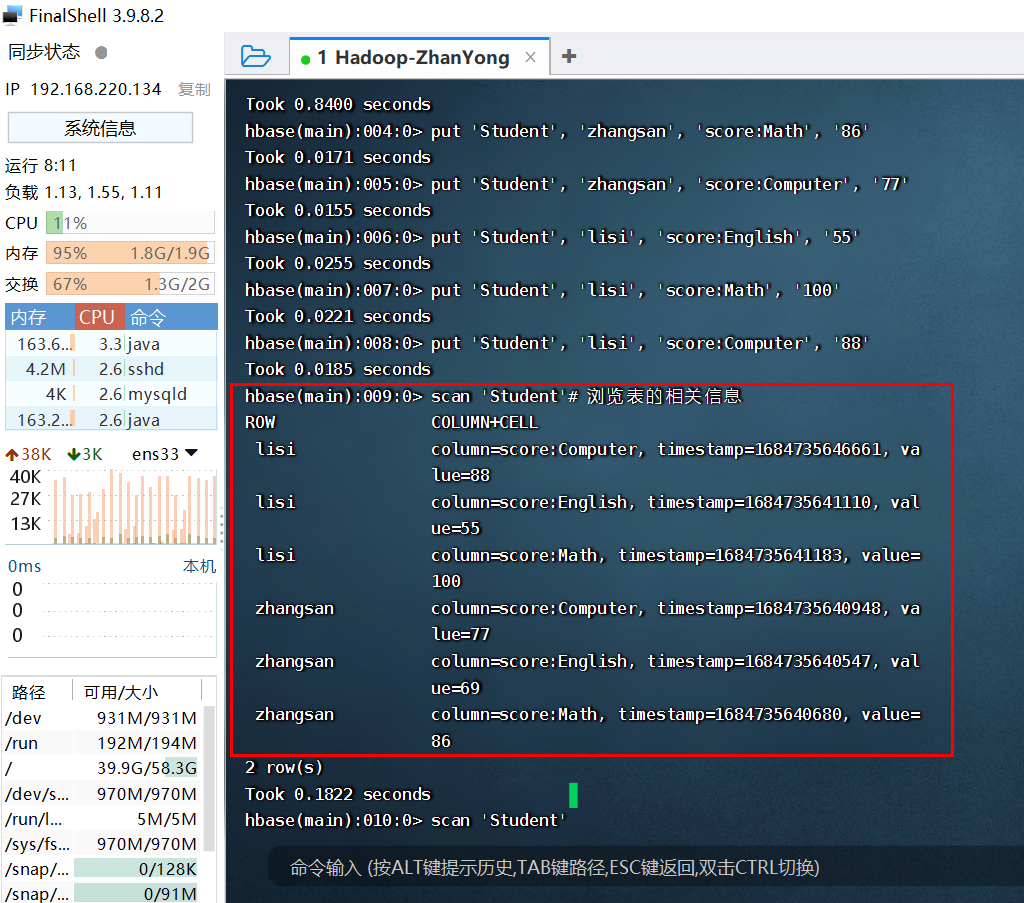

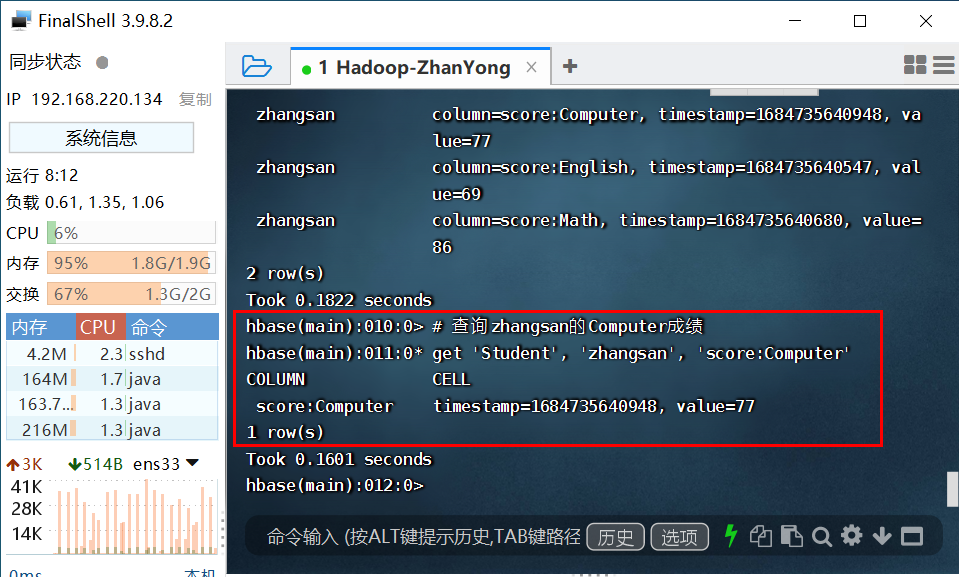

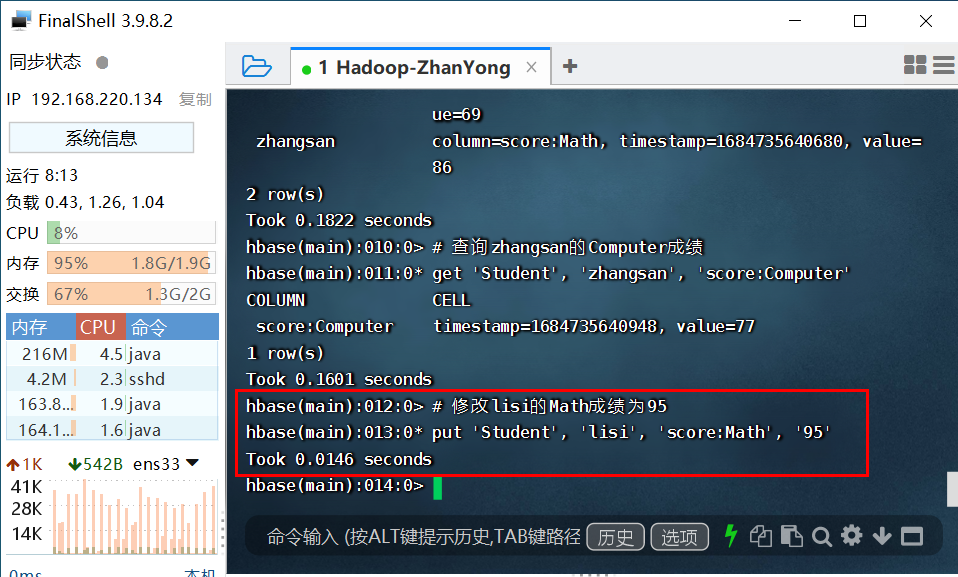

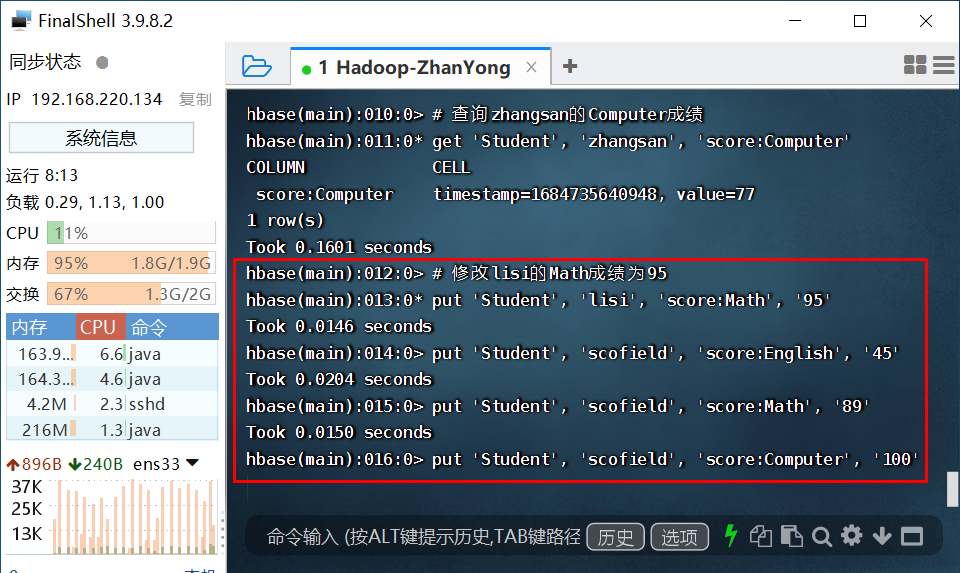

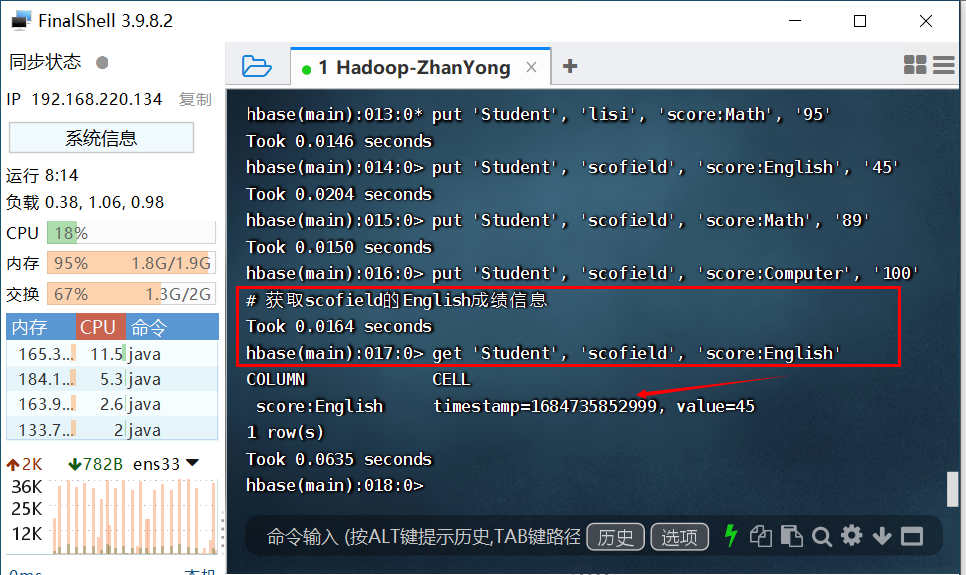

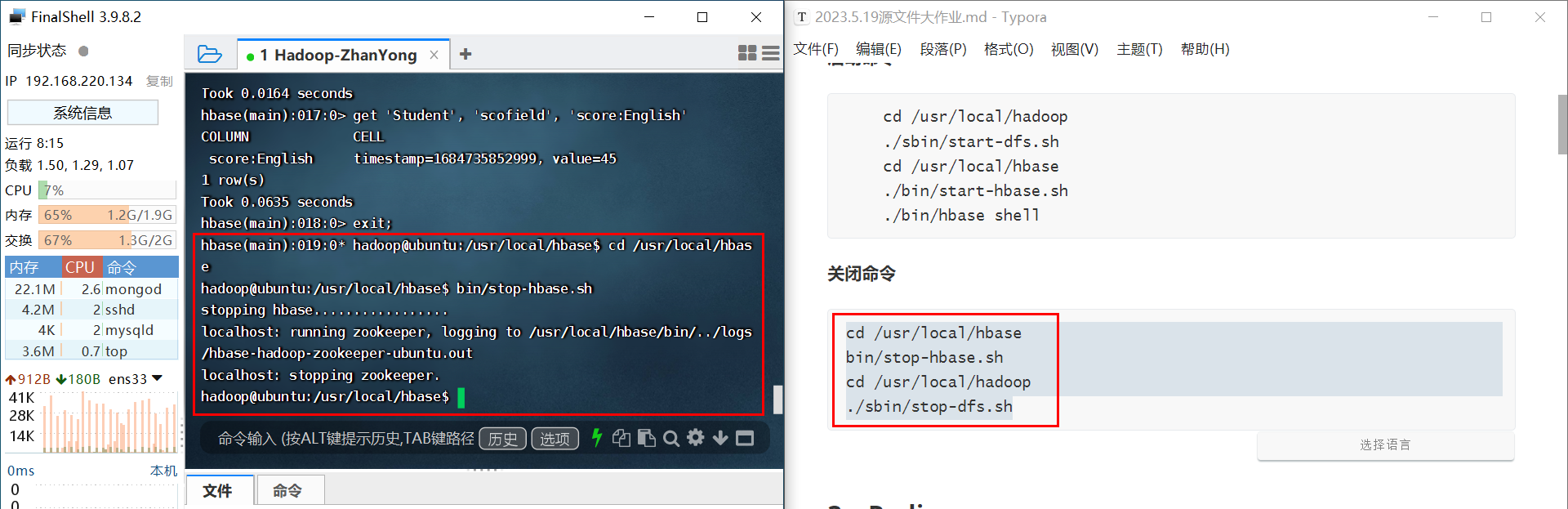

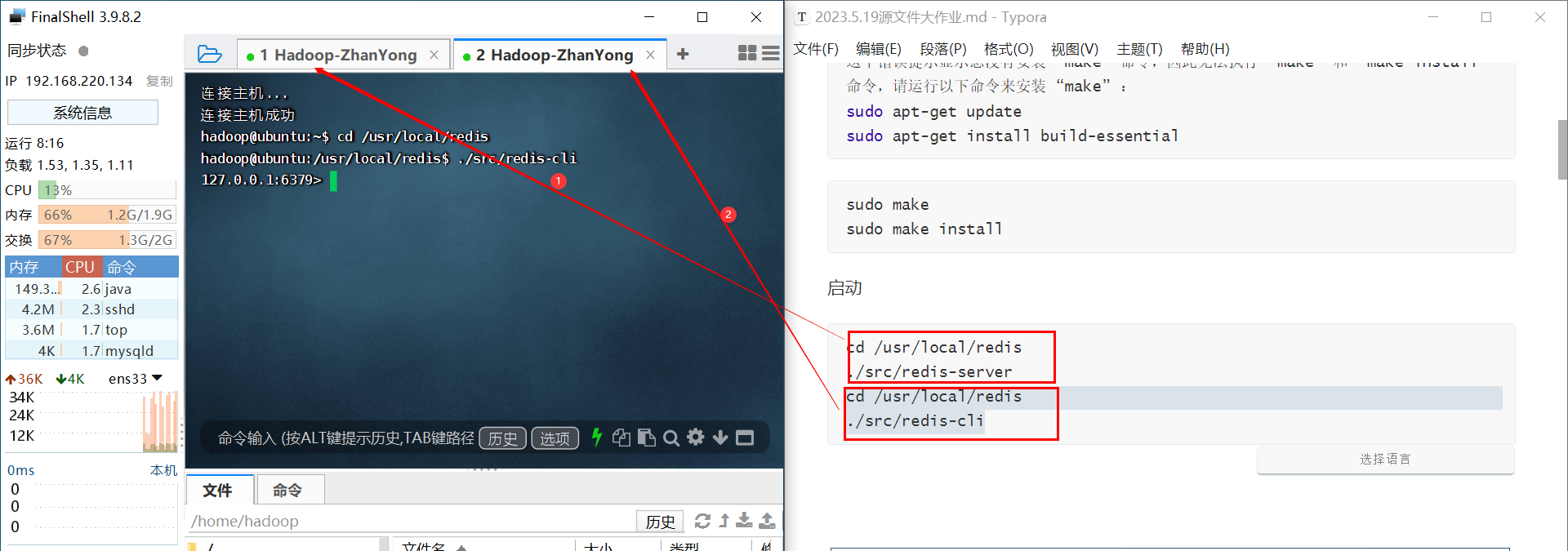

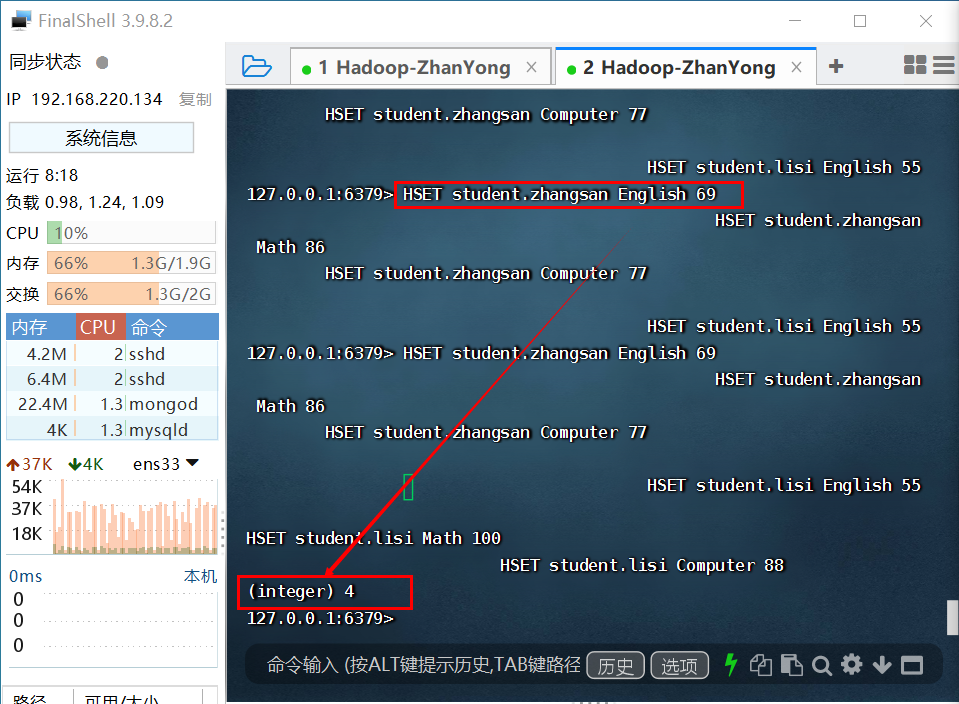

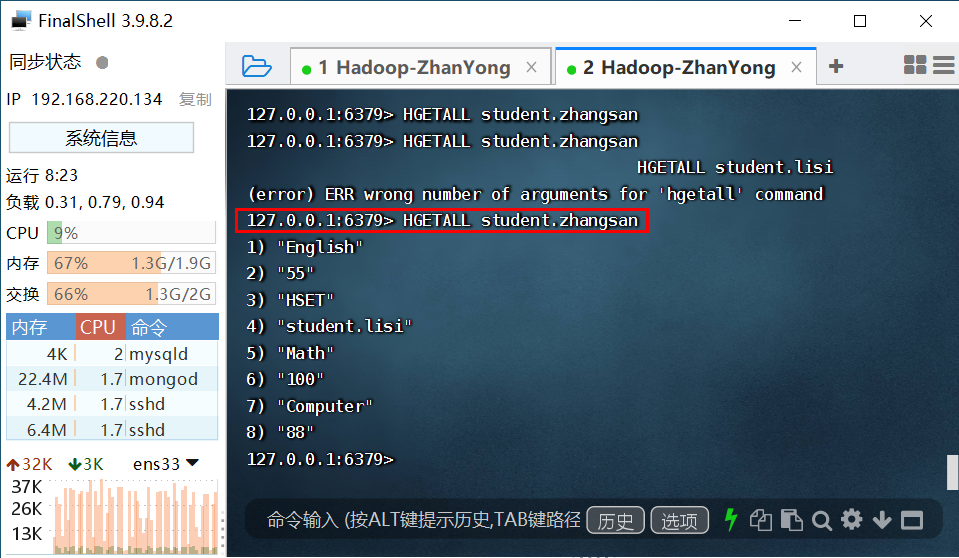

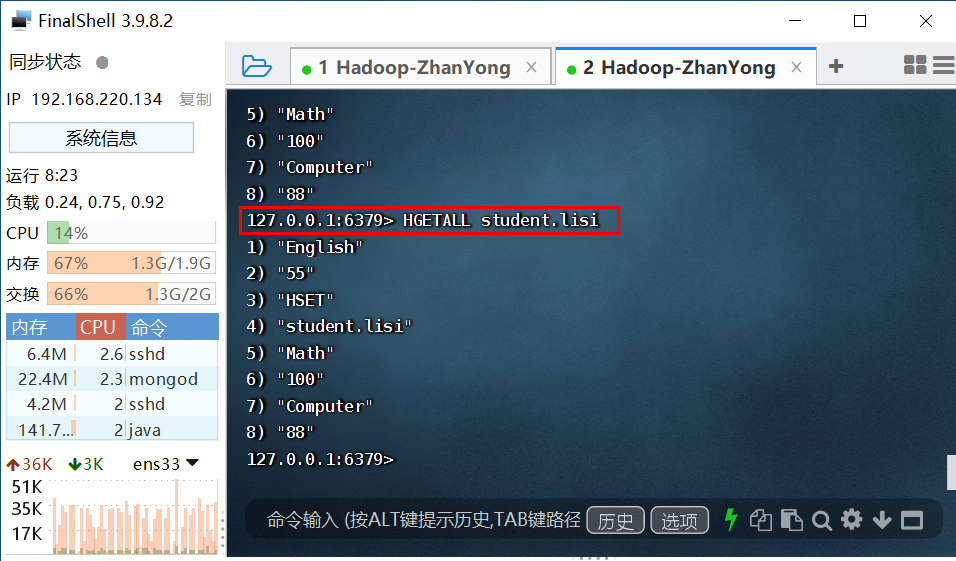

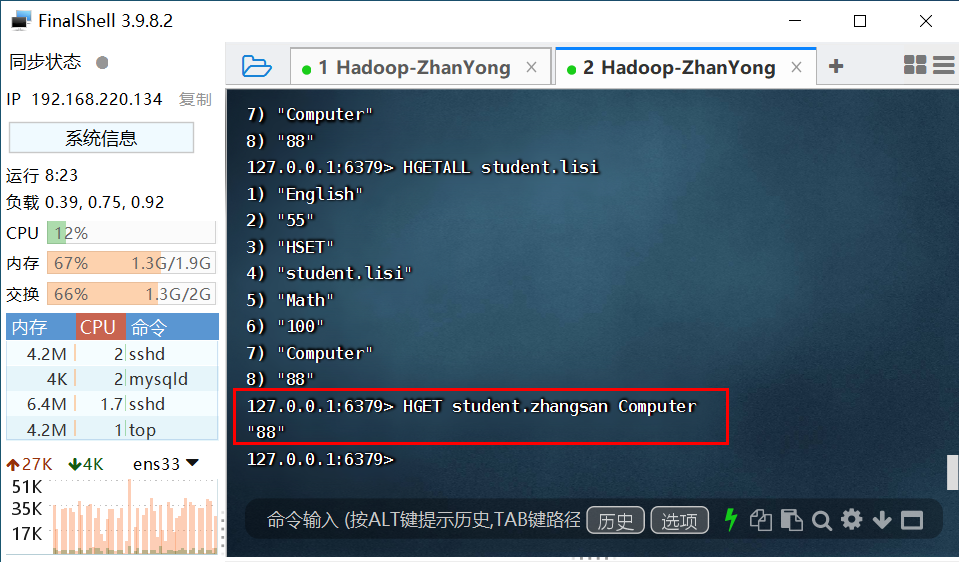

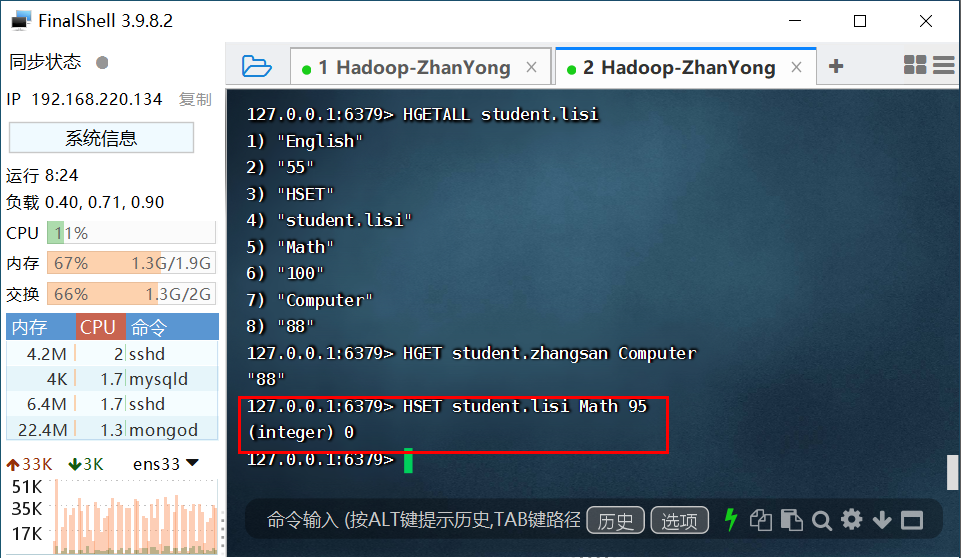

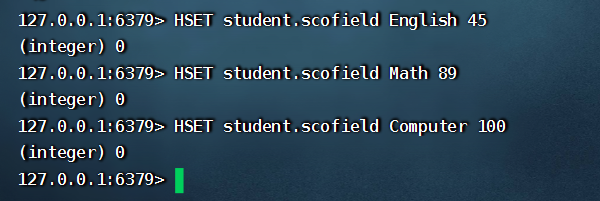

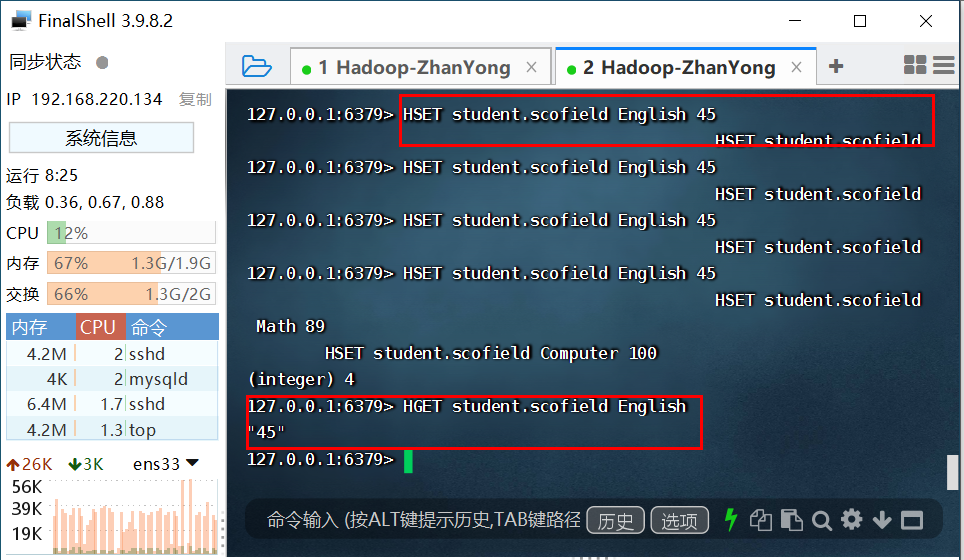

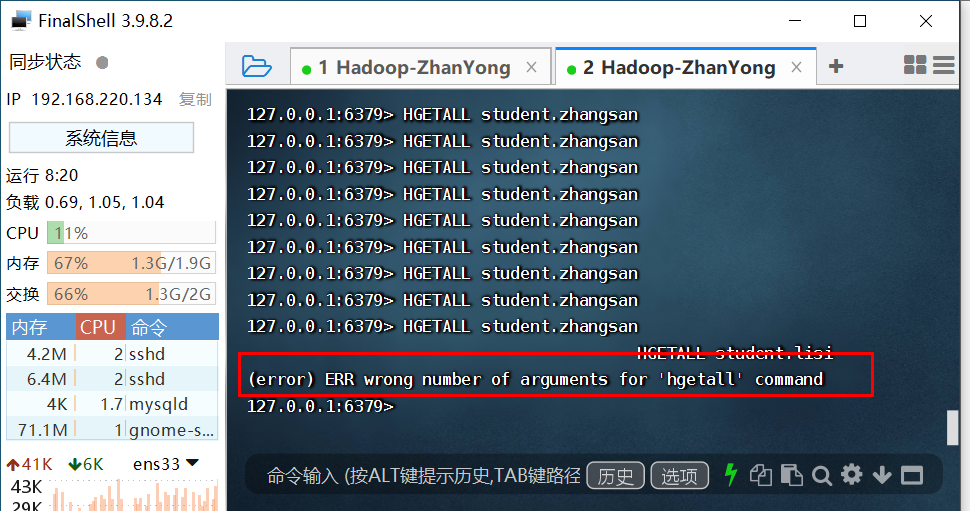

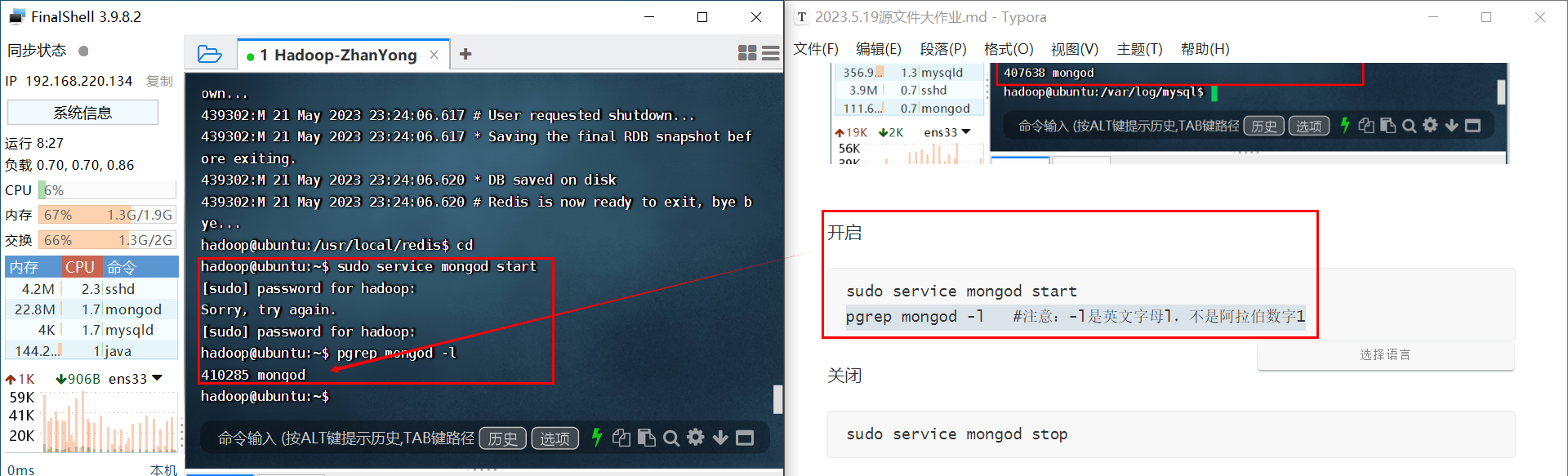

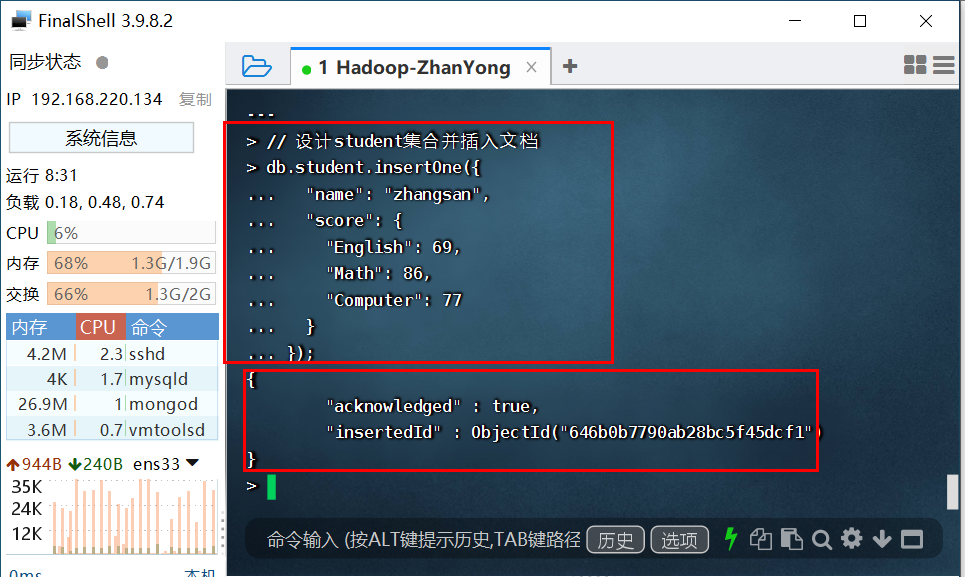

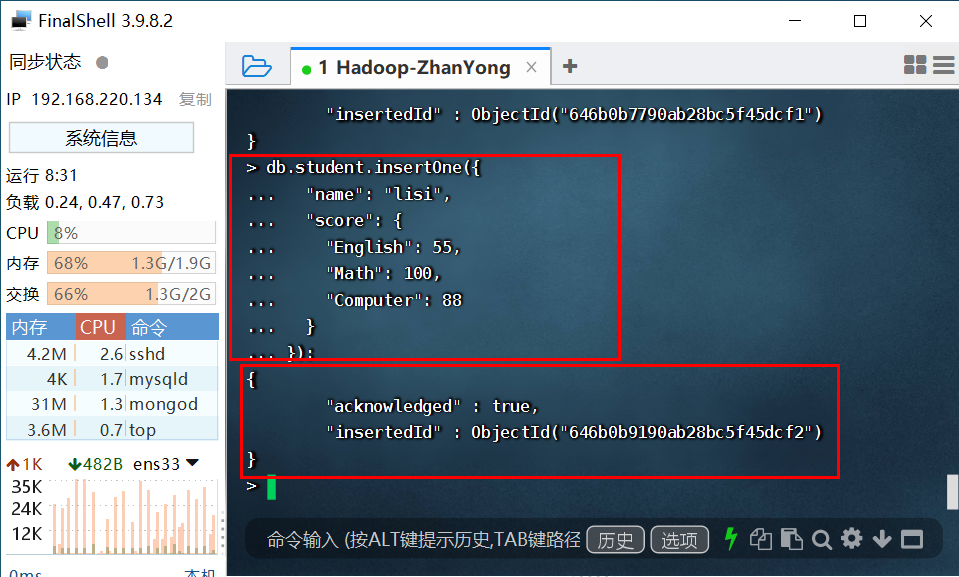

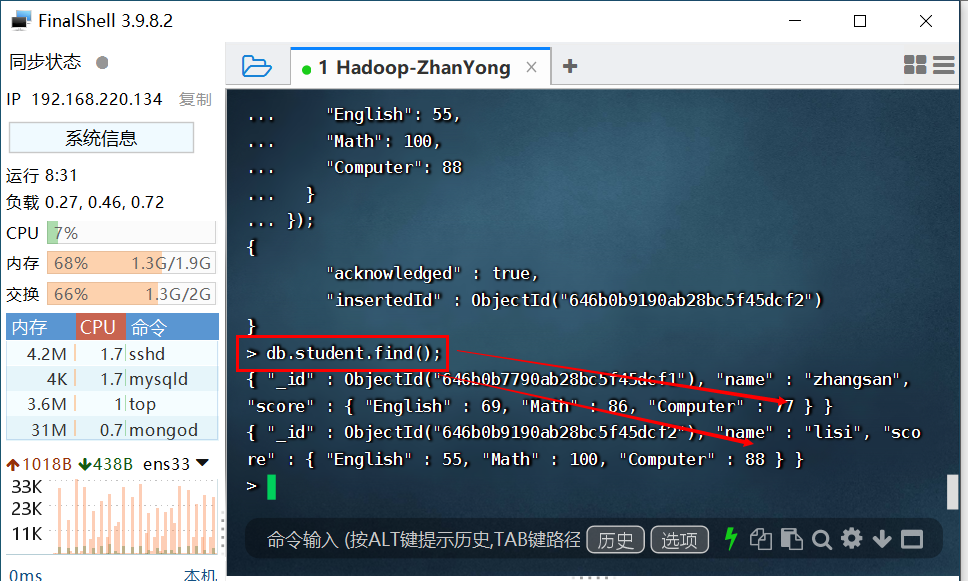

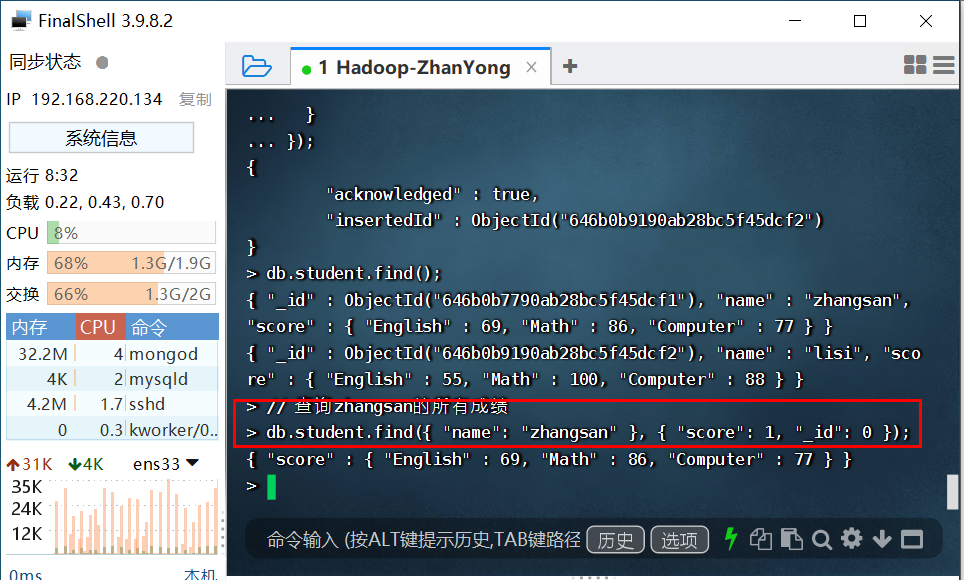

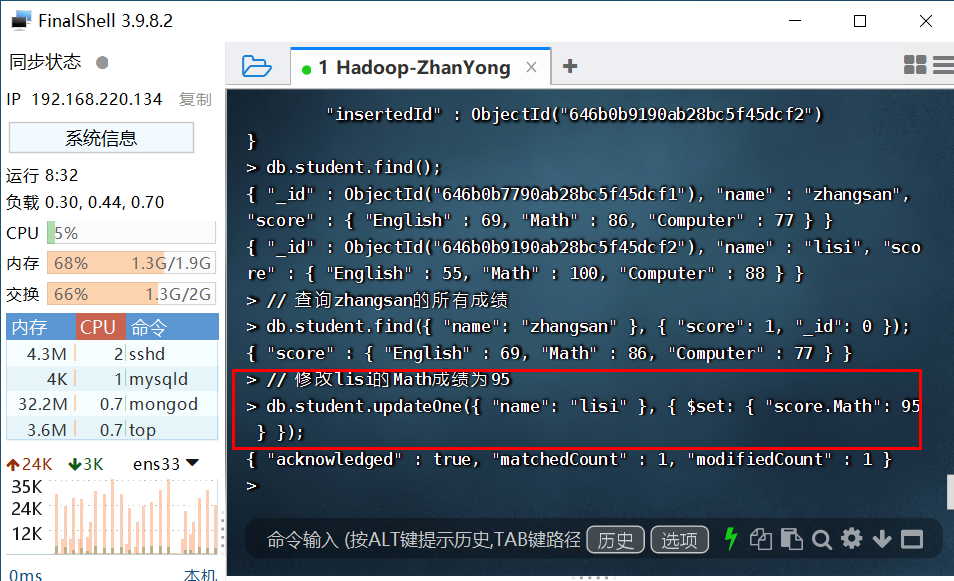

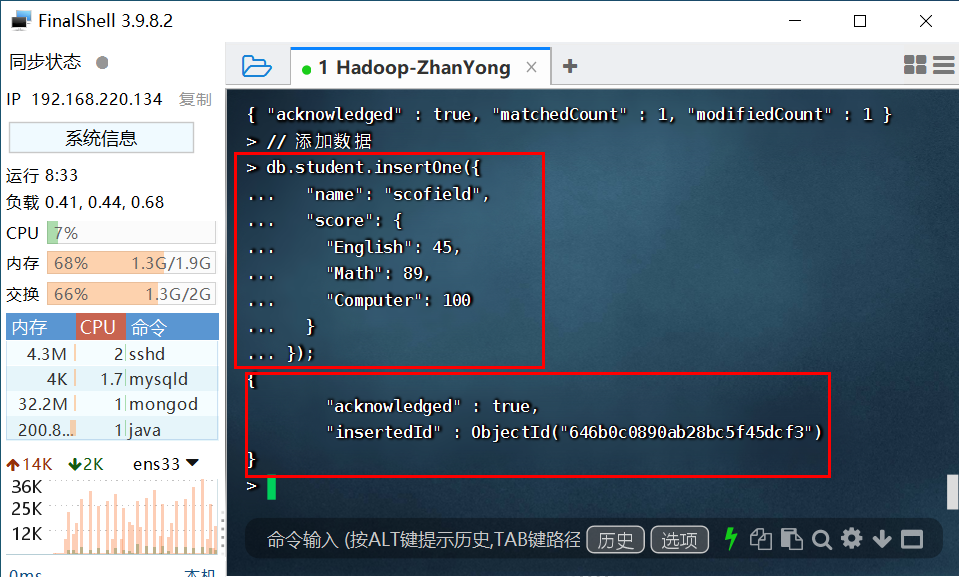

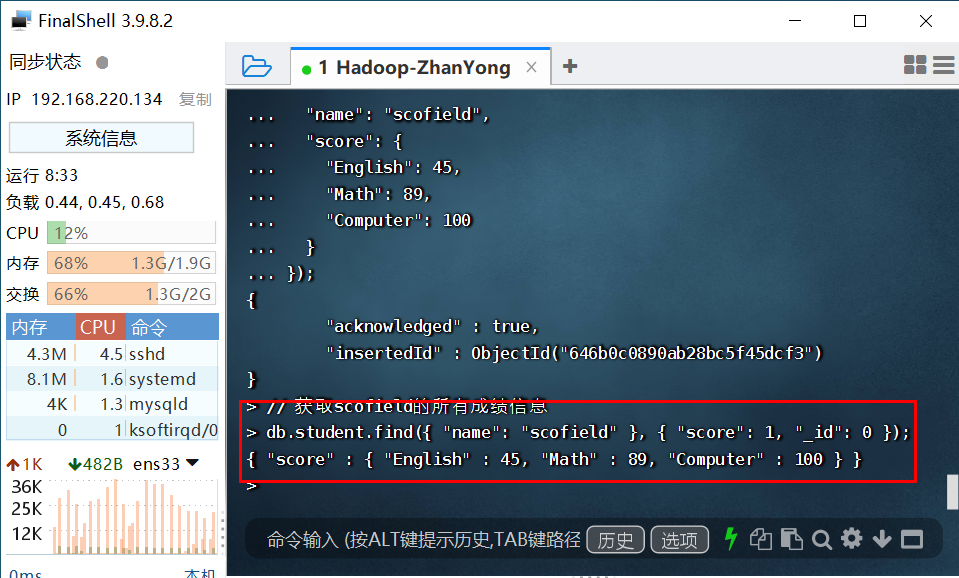

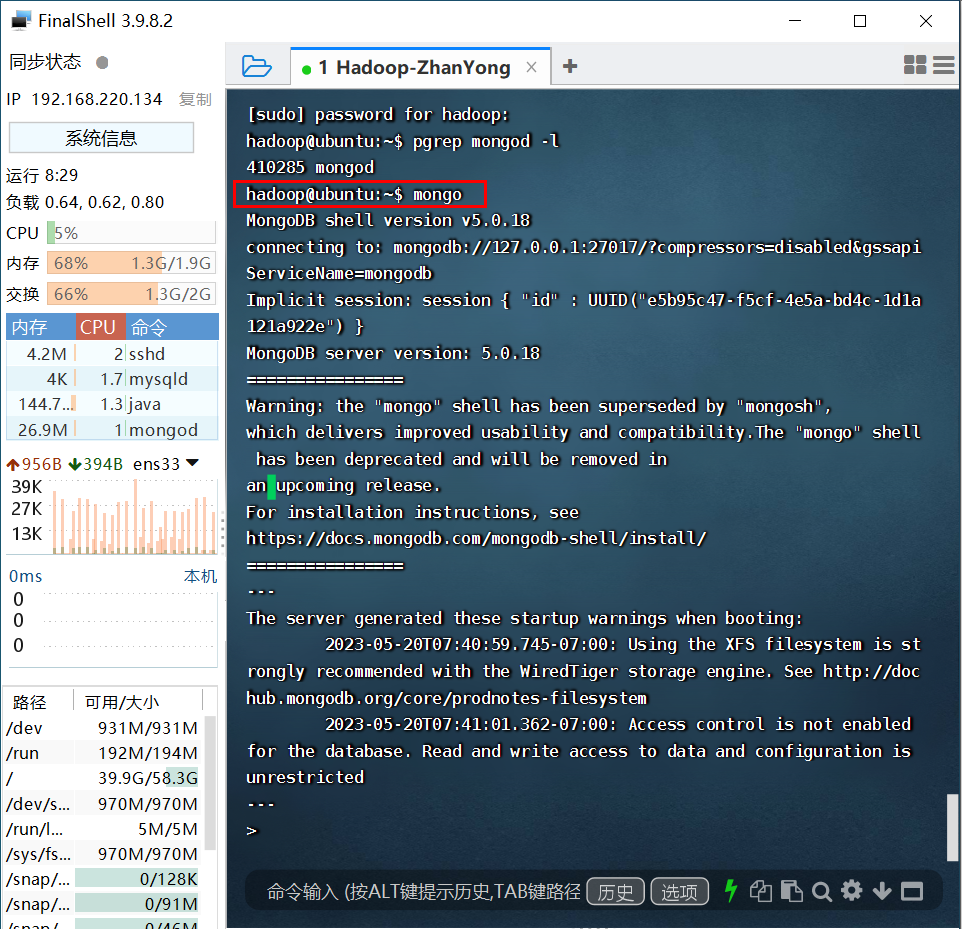

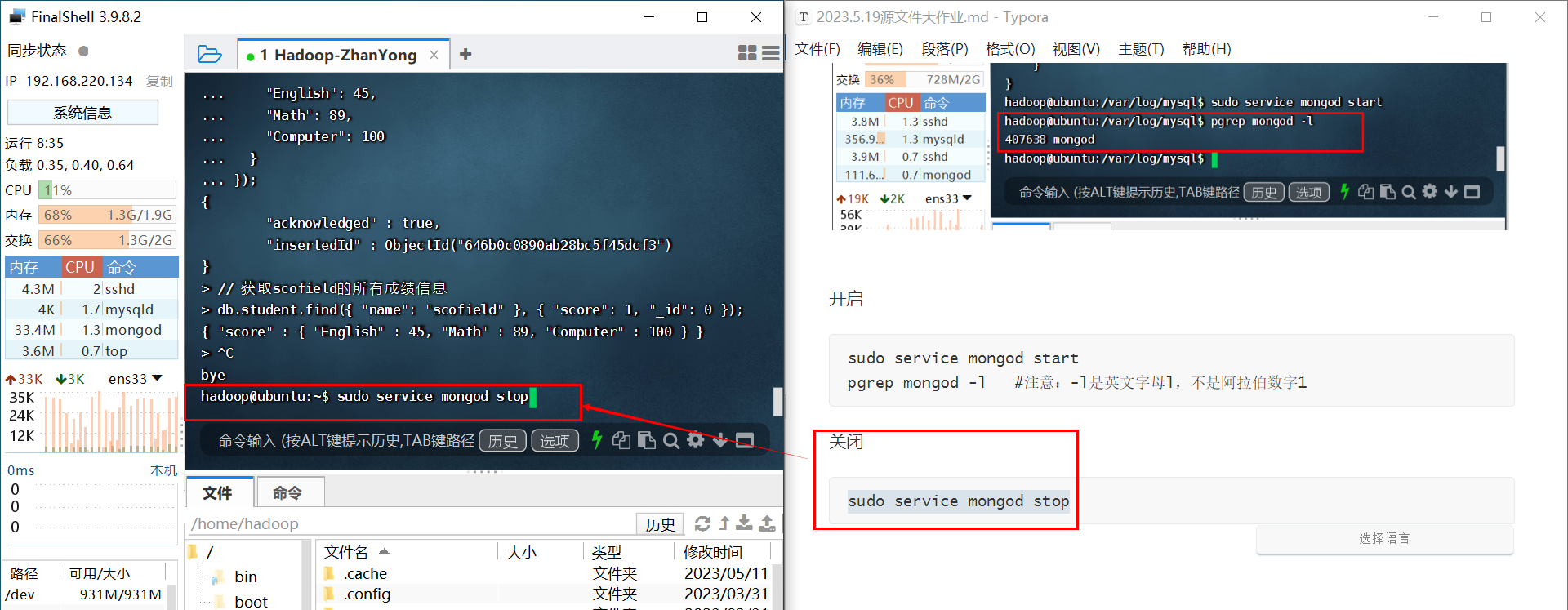
