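Transfer Learning for Computer Vision

Learning objectives

In this lesson, you will learn how to use transfer learning to train a convolutional neural network for image classification.

Related topics

- Fine-tuning a convolutional network

Contents

1 Fine-tuning a convolutional network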

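In practice, very few people train an entire convolutional network from scratch (with random initialization), because datasets of sufficient size are relatively rare. Instead, it is common to pretrain a convolutional network on a very large dataset (for example ImageNet, which contains 1.2 million images in 1000 categories) and then use that network either as an initialization or as a fixed feature extractor for the task of interest.

The two major transfer learning scenarios are:

- Fine-tuning the convolutional network: instead of random initialization, the network is initialized with a pretrained network (for example one trained on the 1000-class ImageNet dataset). The rest of the training proceeds as usual.

- Convolutional network as a fixed feature extractor: here the weights of the whole network are frozen except for the final fully connected layer. That last fully connected layer is replaced with a new one with random weights, and only this layer is trained.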

%matplotlib inline

from __future__ import print_function, division

import torch

import torch_npu

from torch_npu.contrib import transfer_to_npu

import torch.nn as nn

import torch.optim as optim

from torch.optim import lr_scheduler

import torch.backends.cudnn as cudnn

import numpy as np

import torchvision

from torchvision import datasets, models, transforms

import matplotlib.pyplot as plt

import time

import os

import copy

cudnn.benchmark = True

plt.ion()

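1.1 Loading the data

We will use the torchvision and torch.utils.data packages to load the data.

The problem we are going to solve today is training a model to classify ants and bees. We have about 120 training images for each of the two classes, and 75 validation images for each class. This is normally a very small dataset to generalize from if training from scratch. Since we are using transfer learning, we should be able to generalize reasonably well.

This dataset is a very small subset of ImageNet.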

!wget https://model-community-picture.obs.cn-north-4.myhuaweicloud.com/ascend-zone/notebook_datasets/cfeec85ee9df11efac8afa163edcddae/hymenoptera_data.zip

!unzip hymenoptera_data.zip
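Before building the datasets, it can help to confirm that the archive unpacked into the layout `datasets.ImageFolder` expects: one subdirectory per class under each split. The sketch below is only a sanity check, not part of the original notebook. The helper name `count_images_per_class` is made up for illustration, and it assumes the data sits in `hymenoptera_data/train` and `hymenoptera_data/val` as used by the loading code in this section.

```python
from pathlib import Path


def count_images_per_class(split_dir):
    """Return {class_name: number_of_files} for one split directory.

    Hypothetical helper: every subdirectory is treated as a class,
    which mirrors how ImageFolder derives class names.
    """
    split_dir = Path(split_dir)
    counts = {}
    for class_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(1 for f in class_dir.iterdir() if f.is_file())
    return counts


if __name__ == "__main__":
    data_dir = Path("hymenoptera_data")  # same directory name as in the notebook
    for split in ("train", "val"):
        if (data_dir / split).is_dir():
            print(split, count_images_per_class(data_dir / split))
        else:
            print(f"{data_dir / split} not found; download and unzip the data first")
```

If the counts per class are very uneven, or a split directory is missing, the training numbers later in this section would not be comparable.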

data_transforms = {

'train': transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

'val': transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

}

data_dir = 'hymenoptera_data'

image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),

data_transforms[x])

for x in ['train', 'val']}

dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,

shuffle=True, num_workers=4)

for x in ['train', 'val']}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}

class_names = image_datasets['train'].classes

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

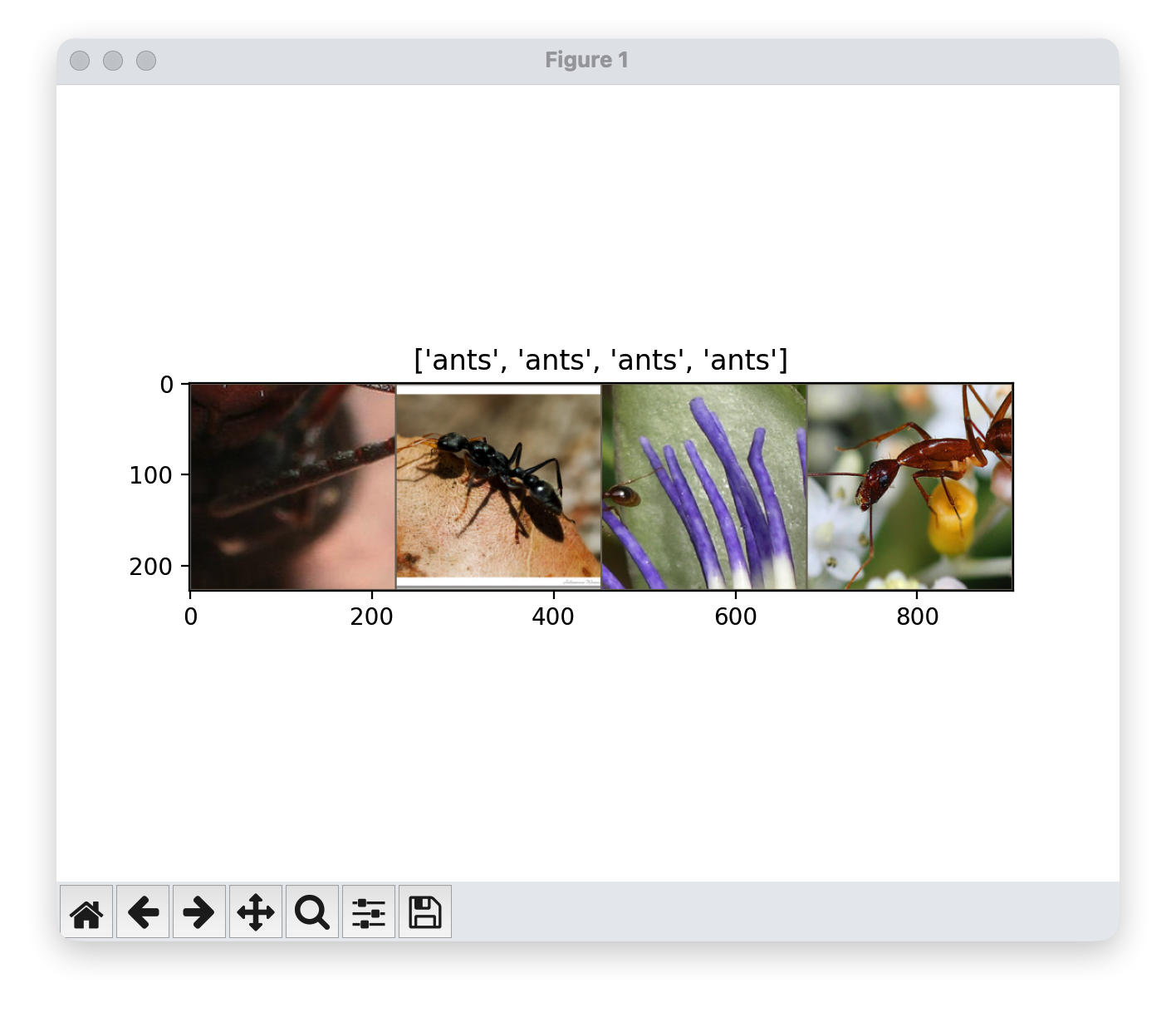

1.2 Visualizing a few images

Let's visualize a few training images to get a feel for the data augmentations.

def imshow(inp, title=None):
    """Imshow for Tensor."""
    inp = inp.numpy().transpose((1, 2, 0))
    mean = np.array([0.485, 0.456, 0.406])
    std = np.array([0.229, 0.224, 0.225])
    inp = std * inp + mean
    inp = np.clip(inp, 0, 1)
    plt.imshow(inp)
    if title is not None:
        plt.title(title)
    plt.pause(0.001)

inputs, classes = next(iter(dataloaders['train']))

out = torchvision.utils.make_grid(inputs)

imshow(out, title=[class_names[x] for x in classes])
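The `imshow` helper has to undo the `Normalize` transform before plotting, since normalized pixel values fall outside the [0, 1] range matplotlib expects. The following is a minimal NumPy sketch of that round trip, using a small random array as a stand-in for a real image. It works in HWC layout so the per-channel mean and std broadcast over the last axis; the actual transform operates on CHW tensors, but the per-channel arithmetic is the same.

```python
import numpy as np

# Per-channel statistics used by the transforms in this section.
mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])

# A fake 2x2 RGB image in HWC layout with values in [0, 1).
rng = np.random.default_rng(0)
image = rng.random((2, 2, 3))

# Forward direction: the per-channel arithmetic of transforms.Normalize.
normalized = (image - mean) / std

# Inverse direction: what imshow does before calling plt.imshow.
restored = std * normalized + mean
restored = np.clip(restored, 0, 1)  # guards against tiny floating point overshoot

print(np.allclose(restored, image))
```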

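1.3 Training the model

Now let's write a general function to train a model. It illustrates:

- Scheduling the learning rate

- Saving the best model

In the code below, the parameter scheduler is a learning rate scheduler object from torch.optim.lr_scheduler.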

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print(f'Epoch {epoch}/{num_epochs - 1}')
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data.
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward
                # track history if only in train
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # statistics
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = running_corrects.double() / dataset_sizes[phase]

            print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

            # deep copy the model
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print(f'Training complete in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')
    print(f'Best val Acc: {best_acc:4f}')

    # load best model weights
    model.load_state_dict(best_model_wts)
    return model
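The training function keeps its best checkpoint with `copy.deepcopy(model.state_dict())`. The deep copy matters because the tensors returned by `state_dict()` share memory with the live parameters, so a plain reference would silently follow later updates. Below is a small sketch of that behavior, using a tiny `nn.Linear` as a stand-in for the real network and an in-place addition to simulate an optimizer step.

```python
import copy

import torch
import torch.nn as nn

model = nn.Linear(2, 1)  # tiny stand-in for the real network

shallow = model.state_dict()                  # tensors share memory with the model
snapshot = copy.deepcopy(model.state_dict())  # independent copy, as in train_model

# Simulate a later training step changing the weights in place.
with torch.no_grad():
    model.weight.add_(1.0)

# The plain state_dict followed the update; the deep copy did not.
shallow_tracks_model = torch.equal(shallow["weight"], model.weight)
snapshot_unchanged = not torch.equal(snapshot["weight"], model.weight)
print(shallow_tracks_model, snapshot_unchanged)

# Restoring the snapshot brings the earlier weights back.
model.load_state_dict(snapshot)
```

Without the deep copy, "best" weights saved at an early epoch would end up identical to the weights of the last epoch.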

- Or, for MPS users (tested on a 2019 MacBook Pro):

The NPU-adapted code above can be replaced with the following:

import time

import copy

import torch

import torch.nn as nn

import torch.optim as optim

from torch.optim import lr_scheduler

import torchvision

from torchvision import datasets, models, transforms

# 1. Define the device first (make sure the selection logic is correct)
device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")
print(f"Using device: {device}")

# 2. Revised training function
def train_model(model, criterion, optimizer, scheduler, dataloaders, dataset_sizes, num_epochs=25):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print(f'Epoch {epoch}/{num_epochs - 1}')
        print('-' * 10)

        # Each epoch has a training and a validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # training mode
            else:
                model.eval()   # evaluation mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over the data
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Zero the gradients
                optimizer.zero_grad()

                # Forward pass (track gradients only in the training phase)
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # Backward pass + optimization (training phase only)
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Statistics (key fix: use float instead of double,
                # because the MPS backend does not support float64)
                running_loss += loss.item() * inputs.size(0)
                # Fix 1: convert the count of correct predictions to float (supported on MPS)
                running_corrects += torch.sum(preds == labels.data).float()

            if phase == 'train':
                scheduler.step()

            # Fix 2: compute the accuracy in float, avoiding double
            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = running_corrects / dataset_sizes[phase]  # already float

            print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

            # Keep the best model weights
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print(f'Training complete in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')
    print(f'Best val Acc: {best_acc:.4f}')

    # Load the best model weights
    model.load_state_dict(best_model_wts)
    return model

# 3. Model initialization (avoids the `pretrained` deprecation warning)
# Use the `weights` argument in place of the deprecated `pretrained` argument
model_ft = models.resnet18(weights=torchvision.models.ResNet18_Weights.IMAGENET1K_V1)
num_ftrs = model_ft.fc.in_features

# Replace the fully connected layer (2-class classification)
model_ft.fc = nn.Linear(num_ftrs, 2)
model_ft = model_ft.to(device)

# 4. Loss function, optimizer, learning rate scheduler
criterion = nn.CrossEntropyLoss()
optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

# 5. Data loading
# Replace this with the path to your dataset
data_dir = "./hymenoptera_data"

data_transforms = {

'train': transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

'val': transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

}

image_datasets = {

x: datasets.ImageFolder(f"{data_dir}/{x}", data_transforms[x])

for x in ['train', 'val']

}

dataloaders = {

x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4, shuffle=True, num_workers=2)

for x in ['train', 'val']

}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}

class_names = image_datasets['train'].classes

# 6. Start training

model_ft = train_model(

model_ft, criterion, optimizer_ft, exp_lr_scheduler,

dataloaders, dataset_sizes, num_epochs=25

)

MPS output (16 minutes, slower than the NPU):

Epoch 0/24

----------

train Loss: 0.5657 Acc: 0.7295

val Loss: 0.2148 Acc: 0.9150

Epoch 1/24

----------

train Loss: 0.5380 Acc: 0.7951

val Loss: 0.3365 Acc: 0.8758

Epoch 2/24

----------

train Loss: 0.5078 Acc: 0.8115

val Loss: 0.3787 Acc: 0.8431

Epoch 3/24

----------

train Loss: 0.5044 Acc: 0.7459

val Loss: 0.3706 Acc: 0.8758

Epoch 4/24

----------

train Loss: 0.5988 Acc: 0.7377

val Loss: 0.6115 Acc: 0.7516

Epoch 5/24

----------

train Loss: 0.5791 Acc: 0.7828

val Loss: 0.2452 Acc: 0.9216

Epoch 6/24

----------

train Loss: 0.4556 Acc: 0.8156

val Loss: 0.2754 Acc: 0.8824

Epoch 7/24

----------

train Loss: 0.2597 Acc: 0.8893

val Loss: 0.2213 Acc: 0.9085

Epoch 8/24

----------

train Loss: 0.3767 Acc: 0.8443

val Loss: 0.2365 Acc: 0.9085

Epoch 9/24

----------

train Loss: 0.3373 Acc: 0.8484

val Loss: 0.2259 Acc: 0.9150

Epoch 10/24

----------

train Loss: 0.2736 Acc: 0.8934

val Loss: 0.2352 Acc: 0.9085

Epoch 11/24

----------

train Loss: 0.2941 Acc: 0.8648

val Loss: 0.2384 Acc: 0.9150

Epoch 12/24

----------

train Loss: 0.2914 Acc: 0.8934

val Loss: 0.2210 Acc: 0.9216

Epoch 13/24

----------

train Loss: 0.2116 Acc: 0.9344

val Loss: 0.2319 Acc: 0.8889

Epoch 14/24

----------

train Loss: 0.2901 Acc: 0.8689

val Loss: 0.2156 Acc: 0.9346

Epoch 15/24

----------

train Loss: 0.2977 Acc: 0.8811

val Loss: 0.2336 Acc: 0.8954

Epoch 16/24

----------

train Loss: 0.2514 Acc: 0.8893

val Loss: 0.2313 Acc: 0.8889

Epoch 17/24

----------

train Loss: 0.2752 Acc: 0.8730

val Loss: 0.2178 Acc: 0.8889

Epoch 18/24

----------

train Loss: 0.2320 Acc: 0.8852

val Loss: 0.2125 Acc: 0.9150

Epoch 19/24

----------

train Loss: 0.2762 Acc: 0.8975

val Loss: 0.2148 Acc: 0.9085

Epoch 20/24

----------

train Loss: 0.2994 Acc: 0.8730

val Loss: 0.2564 Acc: 0.8824

Epoch 21/24

----------

train Loss: 0.2786 Acc: 0.8689

val Loss: 0.2067 Acc: 0.9281

Epoch 22/24

----------

train Loss: 0.2399 Acc: 0.8975

val Loss: 0.2228 Acc: 0.9216

Epoch 23/24

----------

train Loss: 0.2450 Acc: 0.8934

val Loss: 0.2361 Acc: 0.9216

Epoch 24/24

----------

train Loss: 0.2812 Acc: 0.8893

val Loss: 0.2102 Acc: 0.9477

Training complete in 16m 4s

Best val Acc: 0.9477

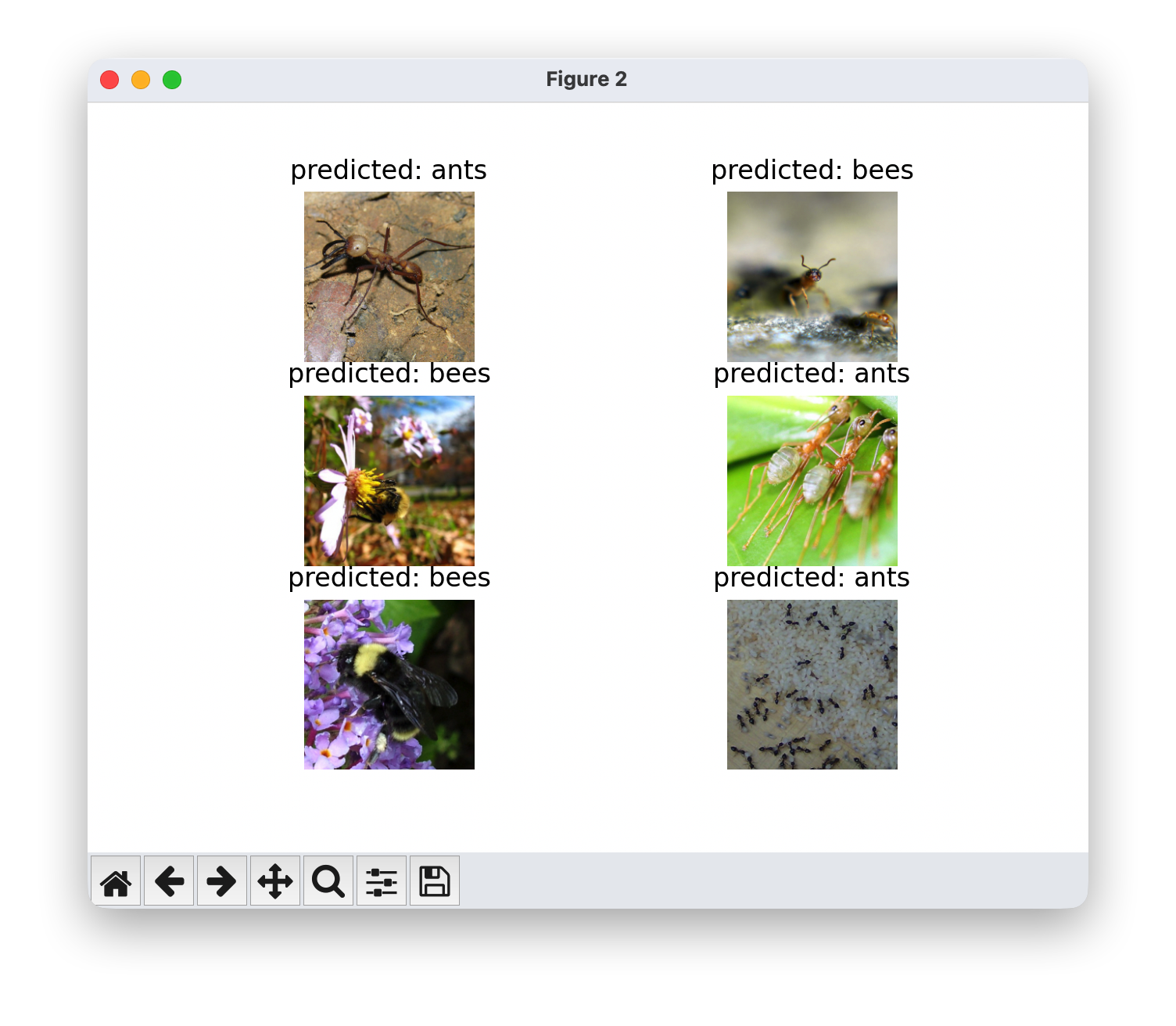

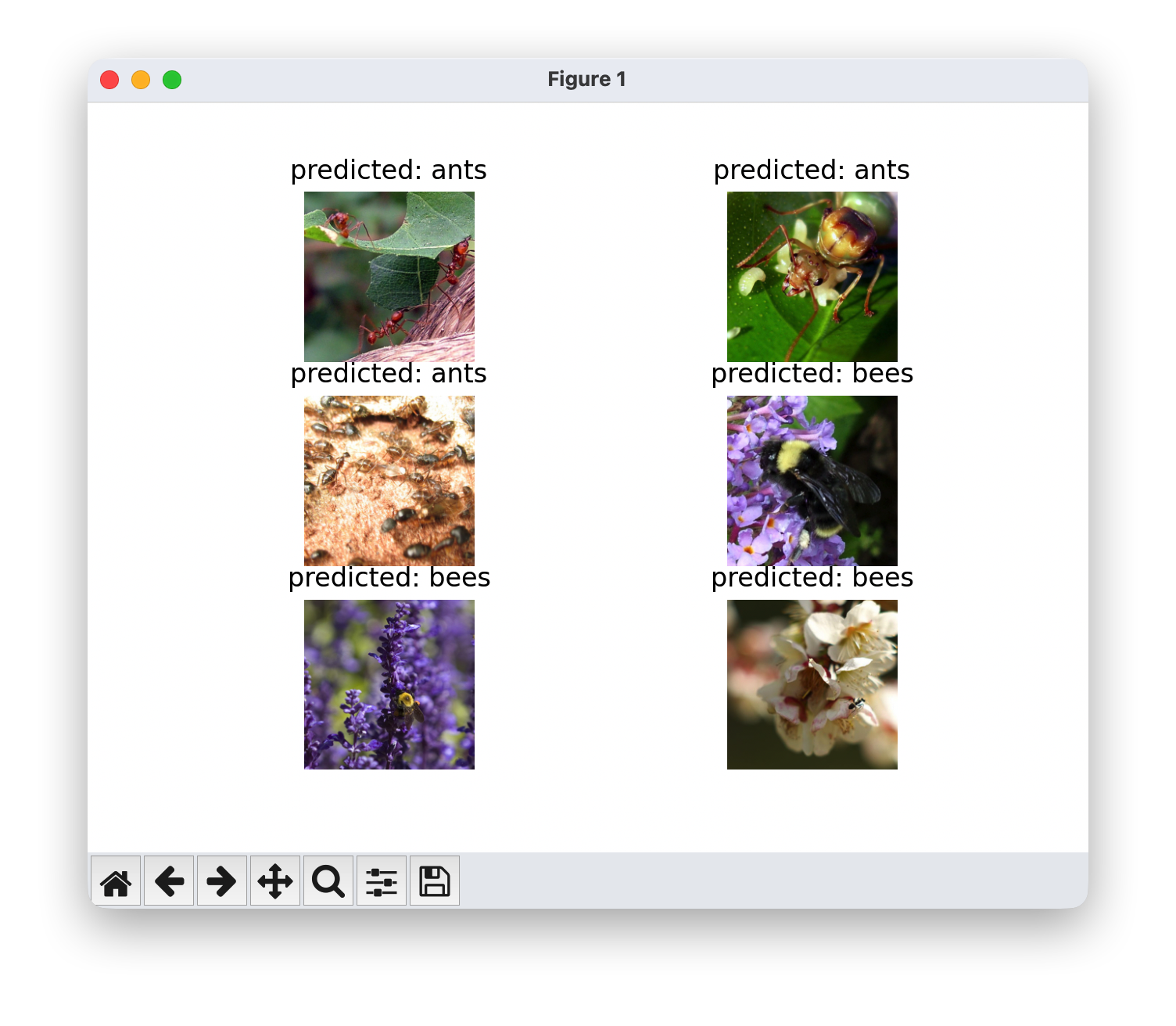

1.4 Visualizing the model predictions

A generic function to display predictions for a few images:

def visualize_model(model, num_images=6):
    was_training = model.training
    model.eval()
    images_so_far = 0
    fig = plt.figure()

    with torch.no_grad():
        for i, (inputs, labels) in enumerate(dataloaders['val']):
            inputs = inputs.to(device)
            labels = labels.to(device)

            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)

            for j in range(inputs.size()[0]):
                images_so_far += 1
                ax = plt.subplot(num_images//2, 2, images_so_far)
                ax.axis('off')
                ax.set_title(f'predicted: {class_names[preds[j]]}')
                imshow(inputs.cpu().data[j])

                if images_so_far == num_images:
                    model.train(mode=was_training)
                    return
        model.train(mode=was_training)
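Both the training loop and `visualize_model` turn raw network outputs into class predictions with `torch.max(outputs, 1)`, which returns the maximum value and its index along the class dimension. The sketch below walks through that step on made-up logits. The values, the labels, and the class order `["ants", "bees"]` are illustrative assumptions, not output from the trained model.

```python
import torch

# Hypothetical logits for a batch of 3 images and 2 classes.
outputs = torch.tensor([[2.0, 0.5],
                        [0.1, 1.2],
                        [0.3, 0.9]])
labels = torch.tensor([0, 1, 0])
class_names = ["ants", "bees"]  # assumed class order for illustration

# Index of the largest logit in each row is the predicted class.
_, preds = torch.max(outputs, 1)

# Same bookkeeping as in train_model: count matches, divide by batch size.
num_correct = torch.sum(preds == labels).item()
accuracy = num_correct / labels.size(0)

predicted_names = [class_names[i] for i in preds.tolist()]
print(predicted_names, accuracy)
```

Here the third image is misclassified, so two of three predictions match the labels.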

1.5 Fine-tuning the convolutional network

Load a pretrained model and reset the final fully connected layer.

model_ft = models.resnet18(pretrained=True)

num_ftrs = model_ft.fc.in_features

# Here the size of each output sample is set to 2.

# Alternatively, it can be generalized to nn.Linear(num_ftrs, len(class_names)).

model_ft.fc = nn.Linear(num_ftrs, 2)

model_ft = model_ft.to(device)

criterion = nn.CrossEntropyLoss()

# Observe that all parameters are being optimized

optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs

exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
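To see what `StepLR(step_size=7, gamma=0.1)` does over the 25 epochs used here, the sketch below records the learning rate at the start of each epoch. It uses a single dummy parameter in place of the model, so nothing is actually trained; the optimizer and scheduler settings are the ones from the code above.

```python
import math

import torch
from torch import optim
from torch.optim import lr_scheduler

# A single dummy parameter stands in for the model's weights.
param = torch.nn.Parameter(torch.zeros(1))
optimizer = optim.SGD([param], lr=0.001, momentum=0.9)
scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

lrs = []
for epoch in range(25):
    lrs.append(optimizer.param_groups[0]["lr"])  # rate used during this epoch
    optimizer.step()    # a real loop would process the training batches here
    scheduler.step()    # called once per epoch, as in train_model

for epoch in (0, 6, 7, 14, 21):
    print(epoch, lrs[epoch])

assert math.isclose(lrs[7], 1e-4, rel_tol=1e-6)
```

The rate stays at 0.001 for epochs 0 to 6 and is then divided by 10 at epochs 7, 14, and 21.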

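1.6 Training and evaluation

This takes roughly 15-25 minutes on a CPU and about 16 minutes on an MPS GPU. On the NPU it finishes in under a minute and a half (1m 22s in the run below).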

model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler,

num_epochs=25)

Out:

Epoch 1/24

----------

train Loss: 0.5648 Acc: 0.7541

val Loss: 0.3638 Acc: 0.8497

Epoch 2/24

----------

train Loss: 0.5923 Acc: 0.7500

val Loss: 0.4437 Acc: 0.8627

Epoch 3/24

----------

train Loss: 0.5490 Acc: 0.8115

val Loss: 0.4247 Acc: 0.8627

Epoch 4/24

----------

train Loss: 0.3876 Acc: 0.8320

val Loss: 0.3033 Acc: 0.8824

Epoch 5/24

----------

train Loss: 0.4958 Acc: 0.8156

val Loss: 0.4198 Acc: 0.8431

Epoch 6/24

----------

train Loss: 0.4787 Acc: 0.7992

val Loss: 0.3056 Acc: 0.8693

Epoch 7/24

----------

train Loss: 0.4151 Acc: 0.8074

val Loss: 0.2482 Acc: 0.8889

Epoch 8/24

----------

train Loss: 0.2557 Acc: 0.8975

val Loss: 0.2401 Acc: 0.9150

Epoch 9/24

----------

train Loss: 0.3052 Acc: 0.8689

val Loss: 0.2226 Acc: 0.9150

Epoch 10/24

----------

train Loss: 0.3878 Acc: 0.8197

val Loss: 0.2191 Acc: 0.9216

Epoch 11/24

----------

train Loss: 0.2919 Acc: 0.8770

val Loss: 0.2011 Acc: 0.9281

Epoch 12/24

----------

train Loss: 0.3005 Acc: 0.8525

val Loss: 0.2325 Acc: 0.9150

Epoch 13/24

----------

train Loss: 0.2389 Acc: 0.9139

val Loss: 0.2034 Acc: 0.9216

Epoch 14/24

----------

train Loss: 0.2929 Acc: 0.8648

val Loss: 0.2273 Acc: 0.9020

Epoch 15/24

----------

train Loss: 0.2133 Acc: 0.9139

val Loss: 0.2063 Acc: 0.9085

Epoch 16/24

----------

train Loss: 0.2147 Acc: 0.9057

val Loss: 0.2599 Acc: 0.8693

Epoch 17/24

----------

train Loss: 0.2193 Acc: 0.8975

val Loss: 0.1925 Acc: 0.9412

Epoch 18/24

----------

train Loss: 0.2253 Acc: 0.9098

val Loss: 0.2086 Acc: 0.9216

Epoch 19/24

----------

train Loss: 0.2902 Acc: 0.8811

val Loss: 0.2403 Acc: 0.8758

Epoch 20/24

----------

train Loss: 0.3423 Acc: 0.8484

val Loss: 0.1919 Acc: 0.9281

Epoch 21/24

----------

train Loss: 0.2449 Acc: 0.9098

val Loss: 0.2010 Acc: 0.9542

Epoch 22/24

----------

train Loss: 0.2822 Acc: 0.8689

val Loss: 0.2146 Acc: 0.9085

Epoch 23/24

----------

train Loss: 0.2740 Acc: 0.8648

val Loss: 0.1931 Acc: 0.9216

Epoch 24/24

----------

train Loss: 0.2484 Acc: 0.8893

val Loss: 0.1944 Acc: 0.9216

Training complete in 1m 22s

Best val Acc: 0.954248

visualize_model(model_ft)

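1.7 Convolutional network as a fixed feature extractor

Here we need to freeze the whole network except the final layer. Setting requires_grad = False on the parameters freezes them, so that no gradients are computed for them in backward().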

model_conv = torchvision.models.resnet18(pretrained=True)

for param in model_conv.parameters():
    param.requires_grad = False

# Parameters of newly constructed modules have requires_grad=True by default

num_ftrs = model_conv.fc.in_features

model_conv.fc = nn.Linear(num_ftrs, 2)

model_conv = model_conv.to(device)

criterion = nn.CrossEntropyLoss()

# Observe that only parameters of final layer are being optimized as

# opposed to before.

optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs

exp_lr_scheduler = lr_scheduler.StepLR(optimizer_conv, step_size=7, gamma=0.1)
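A quick way to check that the freezing worked is to list which parameters still require gradients and to confirm that a backward pass leaves the frozen ones without a gradient. The sketch below applies the same freeze-then-replace pattern to a tiny hypothetical network (`TinyNet`, invented here so that no pretrained weights need to be downloaded); with the real `model_conv` the same checks should apply to its `fc` layer.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for a pretrained backbone with a final `fc` layer."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 4, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(4, 10)

    def forward(self, x):
        return self.fc(self.features(x))


model = TinyNet()

# Freeze everything, then swap in a fresh head (trainable by default).
for param in model.parameters():
    param.requires_grad = False
model.fc = nn.Linear(model.fc.in_features, 2)

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)

# The forward pass still runs through the frozen layers,
# but backward only produces gradients for the new head.
loss = model(torch.randn(4, 3, 8, 8)).sum()
loss.backward()
frozen_have_no_grad = all(p.grad is None for p in model.features.parameters())
head_has_grad = all(p.grad is not None for p in model.fc.parameters())
```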

- Or, the MPS version:

# Convolutional network as a fixed feature extractor (adapted for MPS)
# Use `weights` in place of the deprecated `pretrained` argument (torchvision 0.13+ convention)
model_conv = models.resnet18(weights=torchvision.models.ResNet18_Weights.IMAGENET1K_V1)

# Freeze all parameters except those of the final layer (MPS-compatible)
for param in model_conv.parameters():
    param.requires_grad = False  # frozen: no gradient is computed

# Replace the final fully connected layer (new layers have requires_grad=True by default)
num_ftrs = model_conv.fc.in_features
model_conv.fc = nn.Linear(num_ftrs, 2)  # 2-class task

# Move the model to the MPS device (explicit float32 to avoid implicit dtype issues)
model_conv = model_conv.to(device, dtype=torch.float32)

# Loss function, optimizer, learning rate scheduler
criterion = nn.CrossEntropyLoss()

# Optimize only the parameters of the final layer (the core of the fixed feature extractor approach)
optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

# Learning rate scheduler
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_conv, step_size=7, gamma=0.1)

# Start training (passing in dataloaders and dataset_sizes)
model_conv = train_model(
    model_conv, criterion, optimizer_conv, exp_lr_scheduler,
    dataloaders, dataset_sizes, num_epochs=25
)

- MPS output (12 minutes):

Epoch 0/24

----------

train Loss: 0.6667 Acc: 0.6557

val Loss: 0.4189 Acc: 0.7778

Epoch 1/24

----------

train Loss: 0.4929 Acc: 0.7623

val Loss: 0.2065 Acc: 0.9216

Epoch 2/24

----------

train Loss: 0.4790 Acc: 0.8115

val Loss: 0.1632 Acc: 0.9346

Epoch 3/24

----------

train Loss: 0.4182 Acc: 0.8156

val Loss: 0.2658 Acc: 0.9085

Epoch 4/24

----------

train Loss: 0.3356 Acc: 0.8730

val Loss: 0.1907 Acc: 0.9412

Epoch 5/24

----------

train Loss: 0.5482 Acc: 0.7541

val Loss: 0.1722 Acc: 0.9412

Epoch 6/24

----------

train Loss: 0.5254 Acc: 0.7910

val Loss: 0.2860 Acc: 0.9085

Epoch 7/24

----------

train Loss: 0.4591 Acc: 0.7910

val Loss: 0.1933 Acc: 0.9281

Epoch 8/24

----------

train Loss: 0.2642 Acc: 0.9098

val Loss: 0.1796 Acc: 0.9477

Epoch 9/24

----------

train Loss: 0.3640 Acc: 0.8607

val Loss: 0.1914 Acc: 0.9412

Epoch 10/24

----------

train Loss: 0.3379 Acc: 0.8566

val Loss: 0.1941 Acc: 0.9412

Epoch 11/24

----------

train Loss: 0.3870 Acc: 0.8279

val Loss: 0.1668 Acc: 0.9346

Epoch 12/24

----------

train Loss: 0.3284 Acc: 0.8648

val Loss: 0.1938 Acc: 0.9412

Epoch 13/24

----------

train Loss: 0.4206 Acc: 0.8361

val Loss: 0.1821 Acc: 0.9346

Epoch 14/24

----------

train Loss: 0.3670 Acc: 0.8197

val Loss: 0.1853 Acc: 0.9346

Epoch 15/24

----------

train Loss: 0.3325 Acc: 0.8648

val Loss: 0.1843 Acc: 0.9346

Epoch 16/24

----------

train Loss: 0.3002 Acc: 0.8730

val Loss: 0.1861 Acc: 0.9412

Epoch 17/24

----------

train Loss: 0.2989 Acc: 0.8689

val Loss: 0.1873 Acc: 0.9346

Epoch 18/24

----------

train Loss: 0.4518 Acc: 0.7910

val Loss: 0.2449 Acc: 0.9150

Epoch 19/24

----------

train Loss: 0.3168 Acc: 0.8566

val Loss: 0.1938 Acc: 0.9412

Epoch 20/24

----------

train Loss: 0.3449 Acc: 0.8525

val Loss: 0.1746 Acc: 0.9477

Epoch 21/24

----------

train Loss: 0.3623 Acc: 0.8443

val Loss: 0.2040 Acc: 0.9346

Epoch 22/24

----------

train Loss: 0.3273 Acc: 0.8484

val Loss: 0.1837 Acc: 0.9346

Epoch 23/24

----------

train Loss: 0.3368 Acc: 0.8607

val Loss: 0.1958 Acc: 0.9412

Epoch 24/24

----------

train Loss: 0.3086 Acc: 0.8648

val Loss: 0.1927 Acc: 0.9346

Training complete in 12m 31s

Best val Acc: 0.9477

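1.8 Training and evaluation

On a CPU, this takes roughly half the time of the previous scenario. This is expected, since gradients do not need to be computed for most of the network. The forward pass, however, still has to be computed.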

model_conv = train_model(model_conv, criterion, optimizer_conv,

exp_lr_scheduler, num_epochs=25)

NPU output (1 minute):

Epoch 0/24

----------

train Loss: 0.6476 Acc: 0.6352

val Loss: 0.1929 Acc: 0.9412

Epoch 1/24

----------

train Loss: 0.3520 Acc: 0.8525

val Loss: 0.1906 Acc: 0.9281

Epoch 2/24

----------

train Loss: 0.4690 Acc: 0.7746

val Loss: 0.1899 Acc: 0.9477

Epoch 3/24

----------

train Loss: 0.3424 Acc: 0.8361

val Loss: 0.1966 Acc: 0.9346

Epoch 4/24

----------

train Loss: 0.4996 Acc: 0.7910

val Loss: 0.1938 Acc: 0.9412

Epoch 5/24

----------

train Loss: 0.4661 Acc: 0.8033

val Loss: 0.1946 Acc: 0.9346

Epoch 6/24

----------

train Loss: 0.5912 Acc: 0.7951

val Loss: 0.2231 Acc: 0.9412

Epoch 7/24

----------

train Loss: 0.3492 Acc: 0.8443

val Loss: 0.1950 Acc: 0.9477

Epoch 8/24

----------

train Loss: 0.3643 Acc: 0.8156

val Loss: 0.1981 Acc: 0.9477

Epoch 9/24

----------

train Loss: 0.3917 Acc: 0.8115

val Loss: 0.1998 Acc: 0.9412

Epoch 10/24

----------

train Loss: 0.3665 Acc: 0.8156

val Loss: 0.1992 Acc: 0.9412

Epoch 11/24

----------

train Loss: 0.3153 Acc: 0.8730

val Loss: 0.1975 Acc: 0.9477

Epoch 12/24

----------

train Loss: 0.4131 Acc: 0.8115

val Loss: 0.2335 Acc: 0.9346

Epoch 13/24

----------

train Loss: 0.3618 Acc: 0.8566

val Loss: 0.1945 Acc: 0.9477

Epoch 14/24

----------

train Loss: 0.3167 Acc: 0.8443

val Loss: 0.2070 Acc: 0.9412

Epoch 15/24

----------

train Loss: 0.3206 Acc: 0.8811

val Loss: 0.2130 Acc: 0.9412

Epoch 16/24

----------

train Loss: 0.3715 Acc: 0.8361

val Loss: 0.2355 Acc: 0.9281

Epoch 17/24

----------

train Loss: 0.3498 Acc: 0.8443

val Loss: 0.1933 Acc: 0.9412

Epoch 18/24

----------

train Loss: 0.2917 Acc: 0.8607

val Loss: 0.1950 Acc: 0.9477

Epoch 19/24

----------

train Loss: 0.2853 Acc: 0.8730

val Loss: 0.1892 Acc: 0.9477

Epoch 20/24

----------

train Loss: 0.2961 Acc: 0.8770

val Loss: 0.1999 Acc: 0.9477

Epoch 21/24

----------

train Loss: 0.3339 Acc: 0.8402

val Loss: 0.2348 Acc: 0.9412

Epoch 22/24

----------

train Loss: 0.3689 Acc: 0.8525

val Loss: 0.2257 Acc: 0.9412

Epoch 23/24

----------

train Loss: 0.4128 Acc: 0.7992

val Loss: 0.2317 Acc: 0.9281

Epoch 24/24

----------

train Loss: 0.4125 Acc: 0.8115

val Loss: 0.2207 Acc: 0.9281

Training complete in 1m 0s

Best val Acc: 0.947712

visualize_model(model_conv)

plt.ioff()

plt.show()
