import asyncio
import json
import logging
import os
from datetime import timedelta
from pathlib import Path
from typing import Callable

import httpx
import torch
import uvicorn
from dotenv import load_dotenv
from fastapi import Depends, FastAPI, Form, Request, Response
from fastapi.responses import HTMLResponse, JSONResponse, RedirectResponse
from fastapi.staticfiles import StaticFiles
from fastapi.templating import Jinja2Templates
from jinja2 import Environment, FileSystemLoader
from transformers import AutoModelForCausalLM, AutoTokenizer

import database
from jwt_handler import (
    create_access_token,
    decode_access_token,
    get_current_user_id,
    get_password_hash,
    verify_password,
)

# --- Logging configuration ---
LOG_DIR = Path(__file__).parent / "logs"
LOG_DIR.mkdir(exist_ok=True)  # make sure the logs directory exists
LOG_FILE = LOG_DIR / "app.log"

# Configure the logger
logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s | %(levelname)s | %(message)s',
    handlers=[
        logging.FileHandler(LOG_FILE, encoding='utf-8', mode='a'),  # write to file
        logging.StreamHandler(),  # also print to the console
    ],
)
logger = logging.getLogger(__name__)

BASE_DIR = Path(__file__).resolve().parent.parent  # project root
FRONTEND_DIR = BASE_DIR / "frontend"

app = FastAPI()
load_dotenv(dotenv_path=BASE_DIR / "backend" / ".env")

# Mount static assets
app.mount("/static", StaticFiles(directory=str(FRONTEND_DIR / "static")), name="static")
templates = Jinja2Templates(directory=str(FRONTEND_DIR))
template_env = Environment(loader=FileSystemLoader(str(FRONTEND_DIR)))

model, tokenizer = None, None
conversation_history = {}  # in-memory cache of recent turns per user (used for live inference)
max_history_turns = 5

def load_model():
    model_name = str(BASE_DIR / "model/deepseek-coder-1.3b-instruct")
    print("Loading tokenizer...")
    tok = AutoTokenizer.from_pretrained(model_name)
    print("Loading model...")
    m = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
        device_map="auto",
        low_cpu_mem_usage=True,
    ).eval()
    return m, tok


# Registered as a startup hook so the model is ready before the first chat request.
@app.on_event("startup")
async def start_load():
    global model, tokenizer
    loop = asyncio.get_running_loop()
    # Load in a worker thread so the event loop is not blocked.
    model, tokenizer = await loop.run_in_executor(None, load_model)
    print("✅ Model loaded during startup!")

# --- HTTP middleware for debug logging ---
@app.middleware("http")
async def debug_request_middleware(request: Request, call_next: Callable):
    # Log the client IP
    client_host = request.client.host if request.client else "unknown"
    logger.info(f"➡️ {request.method} {request.url.path} from {client_host}")

    # Try to read the token from the cookie and resolve the user ID (never interrupts the request)
    try:
        access_token = request.cookies.get("access_token")
        user_id = None
        if access_token:
            user_id = decode_access_token(access_token)  # plain synchronous call, no await
        logger.info(f"👤 User ID: {user_id or 'Not logged in'}")
    except Exception as e:
        logger.warning(f"⚠️ Failed to decode user from token: {e}")
        user_id = None

    # Log the request body (only for methods that carry one, such as POST/PUT)
    if request.method in ("POST", "PUT", "PATCH"):
        try:
            body = await request.json()
            logger.debug(f"📥 Request Body: {body}")
        except Exception as e:
            logger.debug(f"❌ Could not parse request body: {e}")

    # Run the request
    response: Response = await call_next(request)

    # Log the response status
    logger.info(f"⬅️ Response status: {response.status_code}")
    return response

@app.get("/", response_class=HTMLResponse)
async def home():
    template = template_env.get_template("home.html")
    content = template.render(debug_user=None)
    return HTMLResponse(content=content)


@app.get("/login", response_class=HTMLResponse)
async def login_page():
    template = template_env.get_template("login.html")
    content = template.render()
    return HTMLResponse(content=content)

@app.post("/login")
async def login(request: Request):
    data = await request.json()
    account = data.get("account")
    password = data.get("password")

    # Validate before hashing: hashing a missing password would raise.
    if not account or not password:
        logger.warning(f"Login failed: missing credentials from {request.client.host}")
        return JSONResponse(
            {"success": False, "message": "请输入用户名和密码"},
            status_code=400,
        )

    hash_password = get_password_hash(password)
    result = database.check_users(account, hash_password)
    if not result:
        logger.warning(f"Login failed: user not found - {account}")
        return JSONResponse(
            {"success": False, "message": "用户名或密码错误"},
            status_code=401,
        )

    # The actual credential check compares the plain password with the stored hash.
    user_id, hashed_password_from_db = result
    if not verify_password(password, hashed_password_from_db):
        logger.warning(f"Login failed: wrong password for user {account}")
        return JSONResponse(
            {"success": False, "message": "用户名或密码错误"},
            status_code=401,
        )

    access_token_expires = timedelta(minutes=int(os.getenv("ACCESS_TOKEN_EXPIRE_MINUTES", 30)))
    access_token = create_access_token(data={"sub": str(user_id)}, expires_delta=access_token_expires)
    logger.info(f"🔐 User {user_id} logged in successfully")

    response = JSONResponse({"success": True, "account": account})
    response.set_cookie(
        key="access_token",
        value=access_token,
        httponly=True,
        secure=False,
        samesite="lax",
        max_age=int(access_token_expires.total_seconds()),  # max_age expects whole seconds
    )
    return response

@app.post("/save_role_setting")
async def save_role_setting(
    request: Request,
    current_user_id: str = Depends(get_current_user_id),
    payload: dict = None,
):
    if not payload:
        payload = await request.json()
    role_setting = payload.get("role_setting", "").strip()
    if not role_setting:
        return JSONResponse({"error": "角色设定不能为空"}, status_code=400)
    try:
        database.save_role_profile(current_user_id, role_setting)
        return JSONResponse({"message": "角色设定已保存!", "role": role_setting})
    except Exception as e:
        logger.error(f"[DB] Save role failed: {e}")
        return JSONResponse({"error": "保存失败"}, status_code=500)

@app.get("/user1", response_class=HTMLResponse)
async def chat_page(request: Request, current_user_id: str = Depends(get_current_user_id)):
    client_ip = request.client.host
    logger.info(f"📋 User {current_user_id} accessing /user1 from IP: {client_ip}")
    if not current_user_id:
        logger.warning(f"🚫 Unauthorized access to /user1 from IP: {client_ip}")
        return RedirectResponse(url="/login")

    # Look up the user's current role setting; fall back to the default on failure
    custom_role = "你是一个乐于助人的助手。"
    try:
        custom_role = database.get_role_profile(current_user_id)
    except Exception as e:
        logger.error(f"[DB Error] Failed to fetch role setting: {e}")

    template = template_env.get_template("myAI1.html")
    content = template.render(
        debug_user=current_user_id,
        custom_role=custom_role,  # passed to the front end
    )
    return HTMLResponse(content=content)

@app.post("/user1/chat")  # main chat endpoint
async def chat_handler(request: Request, current_user_id: str = Depends(get_current_user_id)):
    # current_user_id comes from the auth dependency, like the other endpoints,
    # instead of being accepted as a caller-supplied query parameter.
    client_ip = request.client.host
    if not current_user_id:
        logger.warning(f"🚫 Chat attempt without auth from IP: {client_ip}")
        return JSONResponse({"error": "未授权访问"}, status_code=401)
    logger.info(f"💬 User {current_user_id} sending message from {client_ip}")

    data = await request.json()
    user_message = data.get("message")
    if not user_message:
        logger.warning(f"User {current_user_id}: Missing params in chat request - {data}")
        return JSONResponse({"error": "缺少必要参数"}, status_code=400)

    global model, tokenizer
    if model is None or tokenizer is None:
        return JSONResponse({"error": "模型尚未加载,请先启动模型"}, status_code=500)

    # Initialise this user's conversation history (in memory)
    if current_user_id not in conversation_history:
        conversation_history[current_user_id] = []
    user_history = conversation_history[current_user_id]

    # Load the user's own role setting dynamically
    user_role_setting = "你是一个乐于助人的助手。"  # default
    try:
        user_role_setting = database.get_role_profile(current_user_id)
    except Exception as e:
        logger.warning(f"⚠️ Failed to load custom role for {current_user_id}: {e}")

    # Persist to the database: store the user message first
    try:
        database.save_conversation(current_user_id, user_message, "")
    except Exception as e:
        logger.error(f"💾 DB save failed: {e}")

    # Build the input messages (with the role setting)
    # Fetch the latest entry from ai_character_log
    recent_ai_reflection = database.get_latest_ai_reflection(current_user_id)
    messages = [{"role": "system", "content": user_role_setting}]
    if recent_ai_reflection:
        messages.append({
            "role": "system",
            "content": f"📌 注意:你在之前与该用户的对话中被观察到:{recent_ai_reflection['exhibited_behavior']}。\n"
                       "请根据这一反馈适当调整语气,保持一致性或进行修复。"
        })

    # Append recent turns (at most max_history_turns)
    start_idx = max(0, len(user_history) - max_history_turns)
    for hist in user_history[start_idx:]:
        messages.append({"role": "user", "content": hist["user"]})
        messages.append({"role": "assistant", "content": hist["bot"]})
    messages.append({"role": "user", "content": user_message})

    # Build the prompt with the tokenizer's chat template
    input_text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

    # Inference
    with torch.no_grad():
        outputs = model.generate(
            **inputs,
            max_new_tokens=512,
            temperature=0.8,
            top_p=0.9,
            do_sample=True,
            repetition_penalty=1.1,
            eos_token_id=tokenizer.eos_token_id,
            pad_token_id=tokenizer.eos_token_id,
        )

    # Decode only the newly generated tokens. Slicing the decoded string by
    # len(input_text) is fragile because the decoded prompt may not match
    # input_text character for character (for example, added special tokens).
    prompt_len = inputs["input_ids"].shape[1]
    reply = tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True).strip()
    eot_token = "<|EOT|>"
    if eot_token in reply:
        reply = reply.split(eot_token)[0].strip()

    # Update the in-memory history
    user_history.append({
        "user": user_message,
        "bot": reply,
    })

    # Cap the history length
    while len(user_history) > max_history_turns * 2:
        user_history.pop(0)

    # Persist the AI reply to the database
    try:
        # This adds a second record next to the user-only one saved earlier.
        # A cleaner design would store only the user message above and write
        # the complete pair here.
        database.save_conversation(current_user_id, user_message, reply)
    except Exception as e:
        logger.error(f"💾 Save AI response failed: {e}")

    # ================================
    # 🔥 Trigger memory summarization (once every 6 turns)
    # ================================
    SHOULD_SUMMARIZE = len(user_history) > 0 and len(user_history) % 6 == 0
    if SHOULD_SUMMARIZE:
        # === 1. Summarize user events + personality and emotion ===
        events, personality_emotion = await summarize_conversation_and_personality(
            current_user_id, user_history
        )
        for evt in events:
            database.save_memory(current_user_id, evt, "event")

        if personality_emotion.strip():
            lines = [ln.strip() for ln in personality_emotion.split('\n') if ln.strip()]
            personality = ""
            emotion = ""
            for line in lines:
                if "性格:" in line:
                    personality = line.replace("性格:", "").strip(";;")
                elif "情绪:" in line or "情感:" in line:
                    emotion = line.replace("情绪:", "").replace("情感:", "").strip(";;")
            database.update_user_profile(current_user_id, personality, emotion)

        # === 2. Summarize the AI's own behavior ===
        exhibited_behavior, deviation_analysis = await summarize_ai_character(
            current_user_id,
            user_history,
            user_role_setting,  # pass in the original role setting
        )
        database.save_ai_character_log(current_user_id, user_role_setting, exhibited_behavior, user_history)
        database.save_user_to_ai(exhibited_behavior, deviation_analysis, current_user_id)
        logger.info(f"🤖 AI self-reflection saved for user {current_user_id}: {exhibited_behavior}")

        # === 3. Clear the stored and in-memory history ===
        database.clear_local_and_db_conversations(current_user_id)
        # Also empty the in-memory list; otherwise its length never returns
        # to a multiple of 6 and summarization would not fire again.
        user_history.clear()
        logger.info(f"🧹 Cleared conversation history for user {current_user_id} after summarization.")

    return JSONResponse({"reply": reply})

@app.get("/user2", response_class=HTMLResponse)
async def character_chat_page(request: Request, current_user_id: str = Depends(get_current_user_id)):
    # Named differently from the /user1 page handler so it does not shadow it.
    client_ip = request.client.host
    logger.info(f"📋 User {current_user_id} accessing /user2 from IP: {client_ip}")
    if not current_user_id:
        logger.warning(f"🚫 Unauthorized access to /user2 from IP: {client_ip}")
        return RedirectResponse(url="/login")

    template = template_env.get_template("myAI2.html")
    characters = database.get_all_characters()
    characters_json = json.dumps([
        {"id": c["id"], "name": c["name"], "trait": c["trait"]}
        for c in characters
    ], ensure_ascii=False)
    content = template.render(characters=characters, characters_json=characters_json, debug_user=current_user_id)
    return HTMLResponse(content=content)

@app.post("/user2/chat")
async def dashscope_chat(
    request: Request,
    current_user_id: str = Depends(get_current_user_id),
):
    client_ip = request.client.host
    if not current_user_id:
        logger.warning(f"🚫 Chat attempt without auth from IP: {client_ip}")
        return JSONResponse({"error": "未授权访问"}, status_code=401)
    logger.info(f"💬 User {current_user_id} sending message from {client_ip}")

    data = await request.json()
    character_id = data.get("character_id")
    user_message = data.get("message")
    if not character_id or not user_message:
        logger.warning(f"User {current_user_id}: Missing params in chat request - {data}")
        return JSONResponse({"error": "缺少必要参数"}, status_code=400)

    character = database.get_character_by_id(character_id)
    if not character:
        logger.warning(f"User {current_user_id}: Invalid character ID {character_id}")
        return JSONResponse({"error": "角色不存在"}, status_code=404)

    system_prompt = f"""
你正在扮演 {character['name']}。
人物设定:{character['trait']}
请始终以这个角色的身份、语气和思维方式回答问题。
不要暴露你是 AI 的事实。
""".strip()

    try:
        API_KEY = os.getenv("DASHSCOPE_API_KEY")
        if not API_KEY:
            logger.error("❗ DASHSCOPE_API_KEY is not set")
            return JSONResponse({"error": "API密钥未配置"}, status_code=500)

        headers = {
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        }
        payload = {
            "model": "qwen-plus",
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_message},
            ],
            "temperature": 0.85,
            "top_p": 0.95,
            "max_tokens": 512,
            "stream": False,
        }

        async with httpx.AsyncClient(timeout=30.0) as client:
            resp = await client.post(
                "https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions",
                json=payload,
                headers=headers,
            )

        if resp.status_code != 200:
            error_detail = resp.text
            logger.error(f"☁️ Remote API error [{resp.status_code}]: {error_detail}")
            return JSONResponse(
                {"error": f"远程API错误 [{resp.status_code}]", "detail": error_detail},
                status_code=resp.status_code,
            )

        result = resp.json()
        reply = result["choices"][0]["message"]["content"].strip()
        database.save_conversation(int(current_user_id), user_message, reply, character_id)
        logger.info(f"🤖 Reply generated for user {current_user_id}, length: {len(reply)} chars")
        return JSONResponse({"reply": reply})

    except Exception as e:
        import traceback
        error_msg = traceback.format_exc()
        logger.critical(f"💥 Unexpected error in /user2/chat:\n{error_msg}")
        return JSONResponse(
            {"error": f"请求失败: {str(e)}", "detail": str(e)},
            status_code=500,
        )

# Conversation history summarization
async def summarize_conversation_and_personality(user_id: str, history: list):
    """
    Generate in a single pass:
    1. Structured event memories (dated entries)
    2. A summary of the user's personality and emotional state
    """
    if not history:
        return [], ""

    prompt = """
请根据以下用户与AI的对话内容,完成两项任务:
### 任务一:提取重要生活事件
格式要求:
- 每条以「YYYY年MM月DD日」开头(无法确定则写“近日”)
- 格式:「YYYY年MM月DD日,和[人物]在[地点]做了[事情]」
- 每条独立一行
### 任务二:总结用户性格特征与近期情绪状态
回答格式如下:
---
事件总结:
2025年4月1日,和朋友小李在杭州西湖边散步并拍照
2025年4月3日,独自在家完成了项目报告撰写
性格与情感:
性格:外向、富有同情心、喜欢计划;
情绪:近期表现出轻度焦虑,但整体趋于稳定。
---
现在开始分析对话记录:
"""
    for h in history:
        prompt += f"\n用户: {h['user']}\nAI: {h['bot']}\n"
    prompt += "\n请开始输出:\n"

    try:
        inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
        with torch.no_grad():
            outputs = model.generate(
                **inputs,
                max_new_tokens=512,
                temperature=0.7,
                top_p=0.9,
                do_sample=True,
                repetition_penalty=1.2,
                eos_token_id=tokenizer.eos_token_id,
                pad_token_id=tokenizer.eos_token_id,
            )

        # Decode only the generated tokens. The prompt itself contains the
        # example "事件总结:" block, so parsing the full output would pick up
        # the example instead of the model's answer.
        prompt_len = inputs["input_ids"].shape[1]
        full_output = tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)

        # Parse the output
        if "事件总结:" not in full_output or "性格与情感:" not in full_output:
            return [], ""  # malformed output, skip

        part1 = full_output.split("事件总结:")[1]
        event_section = part1.split("性格与情感:")[0].strip()
        profile_section = part1.split("性格与情感:")[1].strip()

        # Extract the event entries
        events = []
        for line in event_section.split('\n'):
            line = line.strip()
            if ("年" in line and "月" in line and "日" in line) or "近日" in line:
                if "做了" in line:
                    events.append(line)

        # Return (list of events, personality/emotion text)
        return events, profile_section

    except Exception as e:
        logger.error(f"[Error] Summarization failed: {e}")
        return [], ""

async def summarize_ai_character(user_id: str, history: list, original_role: str):
    """
    Ask the model to summarize the personality and mood the AI actually
    showed in this conversation.

    Inputs:
    - user_id: user ID
    - history: conversation history [{"user": "", "bot": ""}, ...]
    - original_role: the initial role setting (e.g. "你是一个温柔耐心的助手")

    Outputs:
    - exhibited: the behavior actually shown
    - deviation: whether it deviates from the role setting, with an explanation
    """
    prompt = f"""
你是一个元认知分析系统,任务是评估AI助手在与用户对话中的实际行为表现。
原始角色设定:{original_role}
以下是AI与用户的对话记录:
"""
    for h in history:
        prompt += f"\n用户: {h['user']}\nAI: {h['bot']}\n"

    prompt += """
请回答以下问题:
1. 根据上述对话,AI实际表现出的性格特征有哪些?(例如:急躁、幽默、冷漠、鼓励性等)
2. AI的情绪倾向如何?(如积极、防御性、疲惫感、热情下降等)
3. 相较于原始角色设定,是否有明显偏离?如果有,请说明原因(比如用户情绪影响、话题压力等)
请以如下格式输出:
---
实际表现:
性格:幽默、反应迅速、偶尔打断;
情绪:前期热情高涨,后期略显疲惫。
与原设对比:
存在轻微偏离。原设定为“冷静理性”,但在用户多次追问下表现出一定防御性,可能因上下文过长导致响应紧迫。
---
"""

    try:
        inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
        with torch.no_grad():
            outputs = model.generate(
                **inputs,
                max_new_tokens=300,
                temperature=0.7,
                top_p=0.9,
                do_sample=True,
                repetition_penalty=1.2,
                eos_token_id=tokenizer.eos_token_id,
                pad_token_id=tokenizer.eos_token_id,
            )

        # Decode only the generated tokens; the prompt already contains the
        # example "实际表现:" block, which would otherwise be parsed instead.
        prompt_len = inputs["input_ids"].shape[1]
        response = tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)

        # Extract the two sections
        if "实际表现:" not in response or "与原设对比:" not in response:
            return "未知", "无显著变化"

        exhibited = response.split("实际表现:")[1].split("与原设对比:")[0].strip()
        deviation = response.split("与原设对比:")[1].strip()
        return exhibited, deviation

    except Exception as e:
        logger.error(f"[Error] AI self-reflection failed: {e}")
        return "分析失败", "无法评估"

if __name__ == "__main__":
    uvicorn.run("myapp:app", host="127.0.0.1", port=8000, reload=True, log_level="info")
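
The chat handler above keeps a per-user list of turns, feeds the last few into the prompt, and triggers summarization whenever the list length is a multiple of 6. As a rough sketch of how that bookkeeping could be isolated from the route, the class below wraps the same three operations. `TurnBuffer`, `recent`, and `drain` are hypothetical names that do not appear in the original code, and the threshold of 6 is simply copied from the listing.

```python
from typing import Dict, List

SUMMARIZE_EVERY = 6  # turns between summaries, same value as in the listing


class TurnBuffer:
    """Hypothetical per-user turn buffer; not part of the original code."""

    def __init__(self, summarize_every: int = SUMMARIZE_EVERY) -> None:
        self.summarize_every = summarize_every
        self.turns: List[Dict[str, str]] = []

    def add(self, user: str, bot: str) -> bool:
        """Append one turn and report whether a summary is now due."""
        self.turns.append({"user": user, "bot": bot})
        return len(self.turns) % self.summarize_every == 0

    def recent(self, n: int) -> List[Dict[str, str]]:
        """Return up to the last n turns for prompt building."""
        return self.turns[-n:] if n > 0 else []

    def drain(self) -> List[Dict[str, str]]:
        """Hand back all turns and reset, so the counter starts again from zero."""
        drained, self.turns = self.turns, []
        return drained
```

A handler would call `add()` after each reply, pass `recent(max_history_turns)` to the prompt builder, and call `drain()` once the summaries have been saved.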

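The main program has grown too large, so part of it is split out into separate modules that the main program calls as resources.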

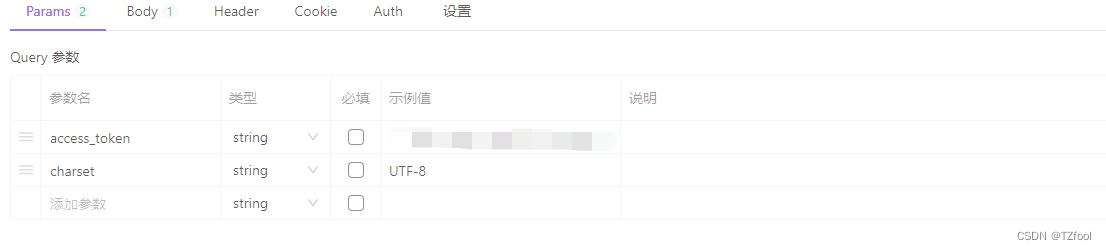

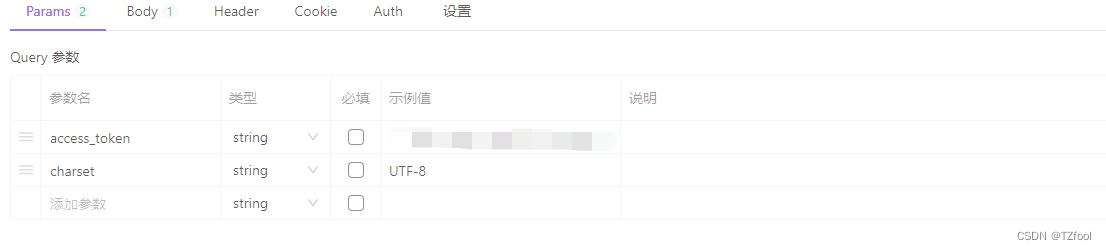
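
One candidate for this kind of split is the text parsing that currently sits inside the chat handler and the summarization function. The sketch below shows what such a helper module might look like. The function names are illustrative assumptions rather than the author's actual layout, the marker strings are copied from the listing, and the strip characters additionally include the full-width semicolon as a guess at what the model may emit.

```python
from typing import List, Tuple

# Section headers that the summarization prompt asks the model to emit.
EVENT_HEADER = "事件总结:"
PROFILE_HEADER = "性格与情感:"


def split_summary(text: str) -> Tuple[str, str]:
    """Split model output into (event_section, profile_section).

    Returns two empty strings when either header is missing.
    """
    if EVENT_HEADER not in text or PROFILE_HEADER not in text:
        return "", ""
    after_events = text.split(EVENT_HEADER, 1)[1]
    event_section, _, profile_section = after_events.partition(PROFILE_HEADER)
    return event_section.strip(), profile_section.strip()


def extract_events(event_section: str) -> List[str]:
    """Keep lines that carry a date (or "近日") and the verb marker "做了"."""
    events = []
    for line in event_section.splitlines():
        line = line.strip()
        has_date = ("年" in line and "月" in line and "日" in line) or "近日" in line
        if has_date and "做了" in line:
            events.append(line)
    return events


def parse_profile(profile_section: str) -> Tuple[str, str]:
    """Return (personality, emotion) parsed from the profile section."""
    personality, emotion = "", ""
    for line in profile_section.splitlines():
        line = line.strip()
        if "性格:" in line:
            personality = line.replace("性格:", "").strip(";； ")
        elif "情绪:" in line or "情感:" in line:
            emotion = line.replace("情绪:", "").replace("情感:", "").strip(";； ")
    return personality, emotion
```

Because these helpers take and return plain strings, they can be tested without loading the model, which is harder to do while the logic lives inside the route.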
