```python
import torch
import torch.nn as nn
from torch.autograd import Variable
import torch.utils.data as Data
import torchvision  # datasets module

torch.manual_seed(1)  # reproducible

DOWNLOAD_MNIST = True  # set to False if you have already downloaded MNIST
BATCH_SIZE = 50

# Load the MNIST handwritten digits used for training
train_data = torchvision.datasets.MNIST(
    root='./mnist/',  # where the data is saved to / loaded from
    train=True,  # this is training data
    transform=torchvision.transforms.ToTensor(),  # convert the raw images to tensors (pixels scaled to [0, 1])
    download=DOWNLOAD_MNIST,  # whether to download the raw data
)

# Mini-batch training: 50 samples, 1 channel, 28x28 -> (50, 1, 28, 28)
train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)


class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Sequential(  # input shape (1, 28, 28)
            nn.Conv2d(
                in_channels=1,  # input channels (grayscale)
                out_channels=16,  # n_filters
                kernel_size=5,  # filter size
                stride=1,  # filter movement/step
                padding=2,  # with stride=1, padding=(kernel_size-1)/2 keeps the output height/width unchanged
            ),  # output shape (16, 28, 28)
            nn.ReLU(),  # activation
            nn.MaxPool2d(kernel_size=2),  # downsample over 2x2 regions -> (16, 14, 14)
        )
        self.conv2 = nn.Sequential(  # input shape (16, 14, 14)
            nn.Conv2d(16, 32, 5, 1, 2),  # output shape (32, 14, 14)
            nn.ReLU(),  # activation
            nn.MaxPool2d(2),  # output shape (32, 7, 7)
        )
        self.out = nn.Linear(32 * 7 * 7, 10)  # fully connected layer, output 10 classes

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # flatten the conv feature maps to (batch_size, 32 * 7 * 7)
        output = self.out(x)
        return output


# Test data
test_data = torchvision.datasets.MNIST(root='./mnist/', train=False)
test_x = Variable(torch.unsqueeze(test_data.test_data, dim=1), volatile=True).type(torch.FloatTensor) / 255.
test_y = test_data.test_labels

cnn = CNN()
optimizer = torch.optim.Adam(cnn.parameters(), lr=0.01)  # optimize all cnn parameters
loss_func = nn.CrossEntropyLoss()  # the target label is not one-hotted

# training and testing
EPOCH = 10
for epoch in range(EPOCH):
    for step, (x, y) in enumerate(train_loader):
        b_x = Variable(x)  # batch x
        b_y = Variable(y)  # batch y

        output = cnn(b_x)  # cnn output
        loss = loss_func(output, b_y)  # cross entropy loss
        optimizer.zero_grad()  # clear gradients for this training step
        loss.backward()  # backpropagation, compute gradients
        optimizer.step()  # apply gradients

        if step % 50 == 0:
            test_output, last_layer = cnn(test_x)
            pred_y = torch.max(test_output, 1)[1].data.squeeze()
            accuracy = sum(pred_y == test_y) / float(test_y.size(0))
            print('Epoch: ', epoch, '| train loss: %.4f' % loss.data[0], '| test accuracy: %.2f' % accuracy)
```

Running the code produces an error:

`PS C:\Users\Wang\Desktop\Minst> & C:\Users\Wang\AppData\Local\Programs\Python\Python311\python.exe "c:/Users/Wang/Desktop/Minst/import torch4.py"`

```text
C:\Users\Wang\AppData\Local\Programs\Python\Python311\Lib\site-packages\torchvision\datasets\mnist.py:81: UserWarning: test_data has been renamed data
  warnings.warn("test_data has been renamed data")
c:\Users\Wang\Desktop\Minst\import torch4.py:50: UserWarning: volatile was removed and now has no effect. Use `with torch.no_grad():` instead.
  test_x = Variable(torch.unsqueeze(test_data.test_data, dim=1), volatile=True).type(torch.FloatTensor)/ 255.
C:\Users\Wang\AppData\Local\Programs\Python\Python311\Lib\site-packages\torchvision\datasets\mnist.py:71: UserWarning: test_labels has been renamed targets
  warnings.warn("test_labels has been renamed targets")
Traceback (most recent call last):
  File "c:\Users\Wang\Desktop\Minst\import torch4.py", line 71, in <module>
    test_output, last_layer = cnn(test_x)
    ^^^^^^^^^^^^^^^^^^^^^^^
ValueError: too many values to unpack (expected 2)
```
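The `ValueError` comes from the test step: `CNN.forward` returns a single tensor of shape `(10000, 10)`, not a pair. Unpacking a tensor into `test_output, last_layer` iterates over its first dimension, so Python receives 10000 values where it expected 2. Below is a minimal sketch, assuming PyTorch 0.4 or later, that keeps the same architecture, assigns the model output to one name, and replaces the deprecated calls flagged by the warnings (`volatile=True` replaced by `with torch.no_grad():`, `.test_data` by `.data`, `.test_labels` by `.targets`; `Variable` is no longer needed). It also uses `loss.item()`, because in current PyTorch `loss.data[0]` raises an `IndexError` on a 0-dim tensor and would be the next failure once the unpacking is fixed. The `evaluate` helper and the random stand-in test tensors are hypothetical, chosen so the example runs without downloading MNIST.

```python
import torch
import torch.nn as nn


class CNN(nn.Module):
    # Same layers as in the question; forward() returns ONE tensor.
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 16, 5, 1, 2), nn.ReLU(), nn.MaxPool2d(2))  # -> (16, 14, 14)
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 5, 1, 2), nn.ReLU(), nn.MaxPool2d(2))  # -> (32, 7, 7)
        self.out = nn.Linear(32 * 7 * 7, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # flatten to (batch_size, 32 * 7 * 7)
        return self.out(x)


def evaluate(model, test_x, test_y):
    """Hypothetical helper: test accuracy as a Python float."""
    with torch.no_grad():                  # replaces Variable(..., volatile=True)
        test_output = model(test_x)        # single tensor, no tuple unpacking
    pred_y = torch.max(test_output, 1)[1]  # index of the largest logit per row
    # .sum().item() instead of the builtin sum() over a tensor
    return (pred_y == test_y).sum().item() / test_y.size(0)


torch.manual_seed(1)
cnn = CNN()

# Stand-in for the MNIST test set (random pixels/labels, so no download is needed).
# With the real dataset, use torch.unsqueeze(test_data.data, dim=1).float() / 255.
# and test_data.targets instead.
test_x = torch.rand(200, 1, 28, 28)
test_y = torch.randint(0, 10, (200,))

# Reproduce the original failure: unpacking iterates over dim 0,
# so a (200, 10) output yields 200 values where 2 were expected.
unpack_error = None
try:
    test_output, last_layer = cnn(test_x)
except ValueError as err:
    unpack_error = str(err)

accuracy = evaluate(cnn, test_x, test_y)
loss = nn.CrossEntropyLoss()(cnn(test_x), test_y)
print('train loss: %.4f' % loss.item(), '| test accuracy: %.2f' % accuracy)
```

If you do want the flattened features as well (as the name `last_layer` suggests), an alternative is to end `forward` with `return output, x` and change the training line to `output = cnn(b_x)[0]`; either way, the call sites and the return value have to agree.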

最新发布

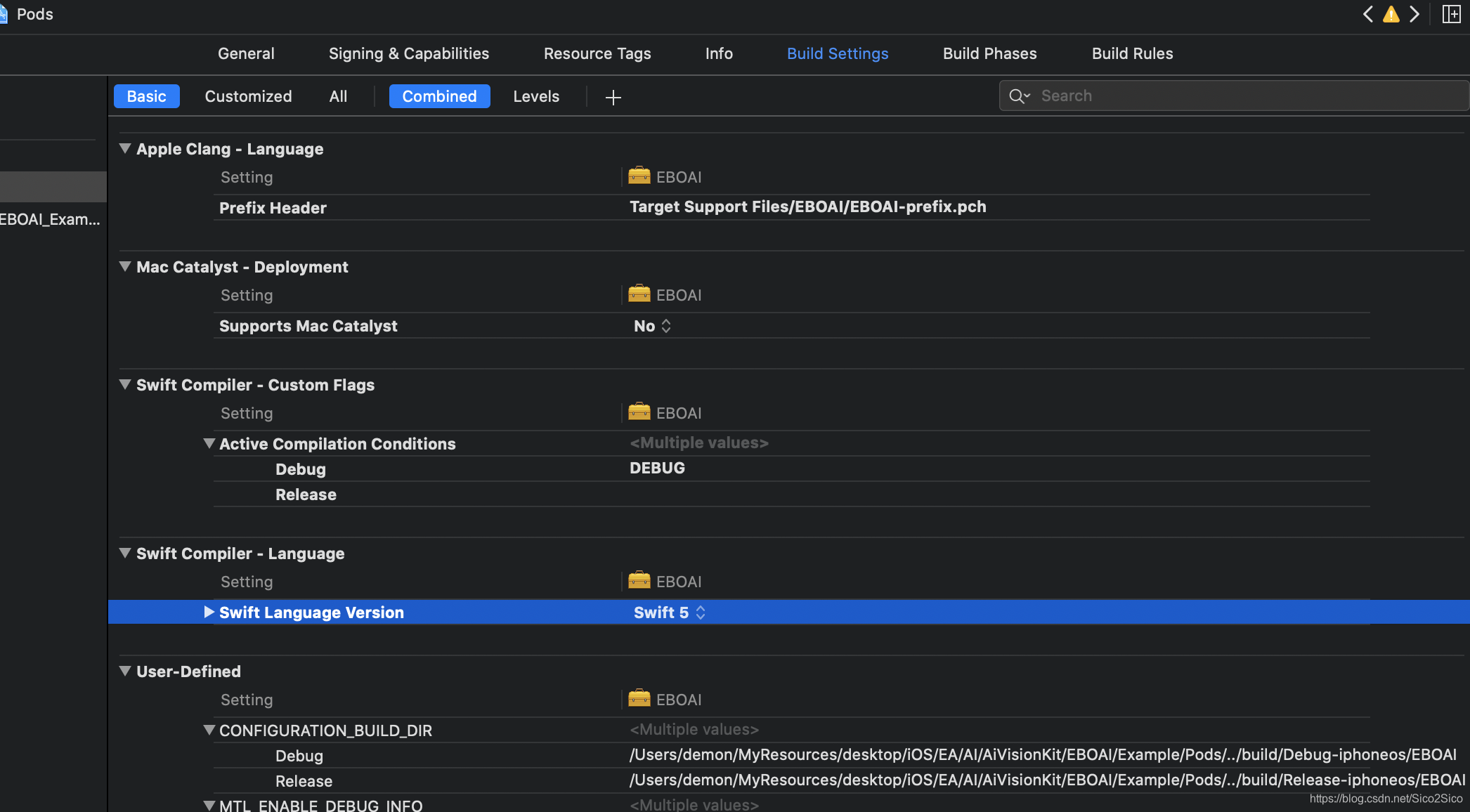

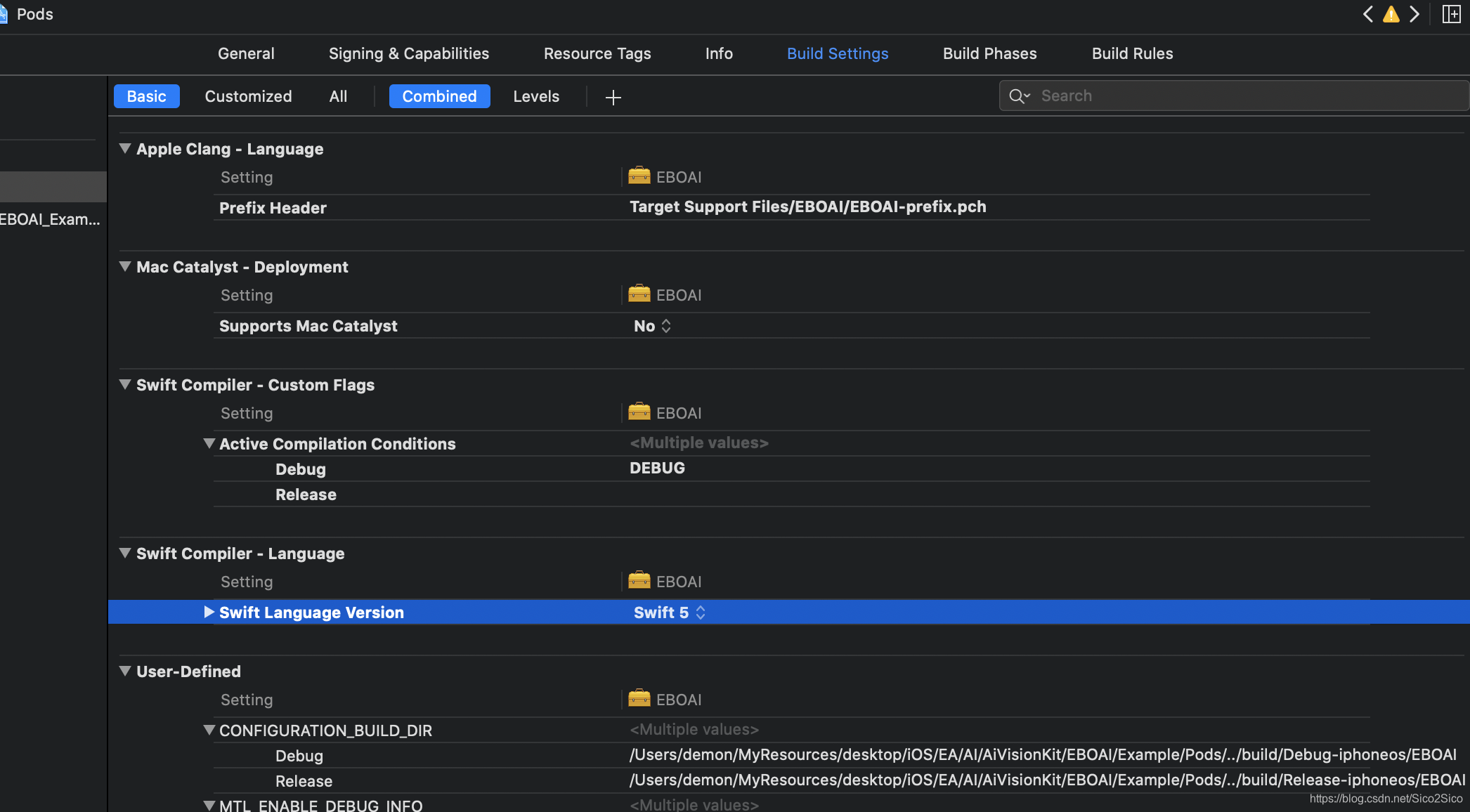
