
1 Pull the image

```bash
docker pull delron/fastdfs
```

2 Build the tracker container

2.1 Create the config and data directories (on the primary host only)

```bash
mkdir -p /data/docker_data/fastdfs/tracker/conf /data/docker_data/fastdfs/tracker/data
```

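In the delron/fastdfs image, the directories created above correspond to the container's config path /etc/fdfs and its data path /var/fdfs (which contains two subdirectories, data and logs).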

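2.2 Prepare tracker.conf

Download the stock tracker.conf from the official site and change one parameter, the maximum number of concurrent connections: max_connections goes from 256 (the default) to 1024. Save the file in /data/docker_data/fastdfs/tracker/conf so that it appears as /etc/fdfs/tracker.conf inside the container.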

```
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# the tracker server port
port=22122

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store data and log files
base_path=/var/fdfs

# max concurrent connections this server supports
# default value is 256; raised to 1024 in this setup
max_connections=1024

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# min buff size
# default value 8KB
min_buff_size = 8KB

# max buff size
# default value 128KB
max_buff_size = 128KB

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group2

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 2: load balance, select the max free space path to upload file
store_path=0

# which storage server to download file
# 0: round robin (default)
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any storage server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=error

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can occur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when its size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file in advance
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 86400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when necessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommend to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this parameter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 30

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
# tcp : connect to the storage server with HTTP port only,
# do not request and get response
# http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html
```
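If you prefer not to edit tracker.conf by hand, the change from step 2.2 can be scripted. The functions below are a minimal sketch, assuming GNU sed (for `sed -i` without a backup suffix) and the `key=value` / `key = value` line format used in the file above; `set_conf_value` and `get_conf_value` are hypothetical helper names, not part of FastDFS.

```bash
# Hypothetical helpers for FastDFS-style "key=value" conf files.
# Assumes GNU sed, keys without '|' and values without '|' or '&'.
# Keys are used as extended regexes, so a '.' in a key matches any character.

# set_conf_value FILE KEY VALUE: rewrite the line that starts with KEY,
# accepting optional spaces before '=' (the "min_buff_size = 8KB" style).
set_conf_value() {
  local file="$1" key="$2" value="$3"
  sed -i -E "s|^${key}[[:space:]]*=.*|${key}=${value}|" "$file"
}

# get_conf_value FILE KEY: print the value after '=', without leading spaces.
get_conf_value() {
  local file="$1" key="$2"
  sed -n -E "s|^${key}[[:space:]]*=[[:space:]]*(.*)$|\1|p" "$file"
}

# Example (host path from step 2.1):
#   conf=/data/docker_data/fastdfs/tracker/conf/tracker.conf
#   set_conf_value "$conf" max_connections 1024
#   get_conf_value "$conf" max_connections    # should print 1024
```

Because the tracker reads its config only at startup, a file edited after the container is running takes effect after a restart, for example `docker restart tracker`.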

Key parameters (for reference only; they were not changed in the conf file and keep their default values):

2.3 Start the tracker container, mapping the config and data directories

```bash
docker run -dti --network=host --restart always --name tracker -v /data/docker_data/fastdfs/tracker/data:/var/fdfs -v /data/docker_data/fastdfs/tracker/conf:/etc/fdfs -v /etc/localtime:/etc/localtime delron/fastdfs tracker
```

3 Build the storage containers

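On both machines, create the data directory and start a storage container with that directory mapped to /var/fdfs (replace xxxxxxx with the IP of the server running the tracker):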

```bash
mkdir -p /data/docker_data/fastdfs/storage/data
docker run -dti --network=host --name storage --restart always -e TRACKER_SERVER=xxxxxxx:22122 -v /etc/localtime:/etc/localtime -v /data/docker_data/fastdfs/storage/data:/var/fdfs delron/fastdfs storage
```

4 Testing

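1) Check the storage log on both machines. The storage node is up if the log shows that it connected to the tracker (`successfully connect to tracker server`), that the tracker leader was set (`set tracker leader`), and that it connected to the peer storage server (`successfully connect to storage server`).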

Output on the primary server:

```
[root@131-0-4-22 fastdfs]# docker logs storage
ngx_http_fastdfs_set pid=9
try to start the storage node...
mkdir data path: FA ...
mkdir data path: FB ...
mkdir data path: FC ...
mkdir data path: FD ...
mkdir data path: FE ...
mkdir data path: FF ...
data path: /var/fdfs/data, mkdir sub dir done.
[2022-08-04 19:47:00] INFO - file: storage_param_getter.c, line: 191, use_storage_id=0, id_type_in_filename=ip, storage_ip_changed_auto_adjust=1, store_path=0, reserved_storage_space=10.00%, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, store_slave_file_use_link=0
[2022-08-04 19:47:00] INFO - file: storage_func.c, line: 257, tracker_client_ip: xxxx, my_server_id_str: xxxx, g_server_id_in_filename: 352583811
[2022-08-04 19:47:00] INFO - file: tracker_client_thread.c, line: 310, successfully connect to tracker server xxxx:22122, as a tracker client, my ip is xxxx
[2022-08-04 19:47:30] INFO - file: tracker_client_thread.c, line: 1263, tracker server xxxx:22122, set tracker leader: xxxx:22122
[2022-08-04 19:48:00] INFO - file: storage_sync.c, line: 2732, successfully connect to storage server xxxxx:23000
```

Output on the secondary server:

```
[root@131-0-4-22 fastdfs]# docker logs storage
ngx_http_fastdfs_set pid=9
try to start the storage node...
mkdir data path: FC ...
mkdir data path: FD ...
mkdir data path: FE ...
mkdir data path: FF ...
data path: /var/fdfs/data, mkdir sub dir done.
[2022-08-04 19:47:55] INFO - file: storage_param_getter.c, line: 191, use_storage_id=0, id_type_in_filename=ip, storage_ip_changed_auto_adjust=1, store_path=0, reserved_storage_space=10.00%, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, store_slave_file_use_link=0
[2022-08-04 19:47:55] INFO - file: storage_func.c, line: 257, tracker_client_ip: 131.0.4.22, my_server_id_str: xxx, g_server_id_in_filename: 369361027
[2022-08-04 19:47:55] INFO - file: tracker_client_thread.c, line: 310, successfully connect to tracker server 131.0.4.21:22122, as a tracker client, my ip is xxxx
[2022-08-04 19:47:55] INFO - file: tracker_client_thread.c, line: 1263, tracker server 131.0.4.21:22122, set tracker leader: xxxx:22122
[2022-08-04 19:47:55] INFO - file: storage_sync.c, line: 2732, successfully connect to storage server xxxx:23000
```

2) On the primary server, check the tracker log. The tracker is up if the log shows `I am the new tracker leader`.

```
[root@131-0-4-21 storage]# docker logs tracker
try to start the tracker node...
[2022-08-04 19:43:05] INFO - FastDFS v5.11, base_path=/var/fdfs, run_by_group=, run_by_user=, connect_timeout=30s, network_timeout=60s, port=22122, bind_addr=, max_connections=1024, accept_threads=1, work_threads=4, min_buff_size=8192, max_buff_size=131072, store_lookup=2, store_group=, store_server=0, store_path=0, reserved_storage_space=10.00%, download_server=0, allow_ip_count=-1, sync_log_buff_interval=10s, check_active_interval=120s, thread_stack_size=64 KB, storage_ip_changed_auto_adjust=1, storage_sync_file_max_delay=86400s, storage_sync_file_max_time=300s, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, use_storage_id=0, id_type_in_filename=ip, storage_id_count=0, rotate_error_log=0, error_log_rotate_time=00:00, rotate_error_log_size=0, log_file_keep_days=0, store_slave_file_use_link=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s
[2022-08-04 19:46:59] INFO - file: tracker_relationship.c, line: 389, selecting leader...
[2022-08-04 19:46:59] INFO - file: tracker_relationship.c, line: 407, I am the new tracker leader 131.0.4.21:22122
[2022-08-04 19:47:59] WARNING - file: tracker_mem.c, line: 4754, storage server: 131.0.4.22:23000, dest status: 2, my status: 1, should change my status!
[2022-08-04 19:47:59] WARNING - file: tracker_mem.c, line: 4754, storage server: 131.0.4.22:23000, dest status: 5, my status: 2, should change my status!
```

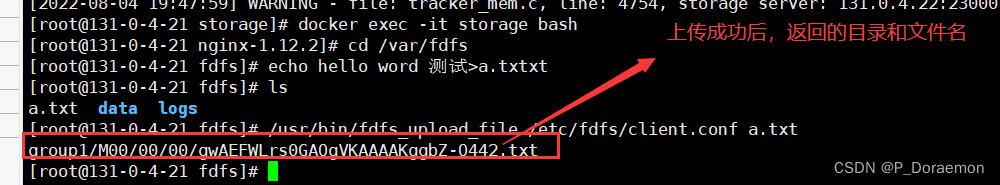
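Checks 1) and 2) come down to looking for a few fixed strings in `docker logs`. The function below is a minimal sketch, assuming bash; `check_log` is a hypothetical helper, and its marker strings are copied from the log output above. `2>&1` is used because `docker logs` replays whatever the container wrote to stderr on stderr.

```bash
# Hypothetical helper: look for the success markers quoted in steps 1) and 2).
# Reads log text on stdin, prints every marker that is missing, and returns
# non-zero if at least one is missing.
#   docker logs storage 2>&1 | check_log storage
#   docker logs tracker 2>&1 | check_log tracker
check_log() {
  local role="$1" log marker missing=0
  local -a markers
  case "$role" in
    storage)
      markers=("successfully connect to tracker server"
               "set tracker leader"
               "successfully connect to storage server") ;;
    tracker)
      markers=("I am the new tracker leader") ;;
    *)
      echo "unknown role: $role" >&2
      return 2 ;;
  esac
  log="$(cat)"
  for marker in "${markers[@]}"; do
    # -F: fixed string, -q: quiet; "--" ends grep's options
    if ! grep -qF -- "$marker" <<<"$log"; then
      echo "missing: $marker"
      missing=1
    fi
  done
  return "$missing"
}
```

Since the storage node connects to its peer only some time after startup (30 to 60 seconds in the logs above), an early run may still report the last marker as missing.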

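3) Test synchronization between the two storage nodes: enter the storage container, change to /var/fdfs, upload a test file, and check whether it is replicated to the storage node on the secondary server.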

```
[root@131-0-4-21 storage]# docker exec -it storage bash
[root@131-0-4-21 nginx-1.12.2]# cd /var/fdfs
[root@131-0-4-21 fdfs]# echo hello word 测试>a.txt
[root@131-0-4-21 fdfs]# ls
a.txt data logs
[root@131-0-4-21 fdfs]# /usr/bin/fdfs_upload_file /etc/fdfs/client.conf a.txt
group1/M00/00/00/gwAEFWLrs0GAQgVKAAAAKggbZ-Q442.txt
```

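The upload returns a file ID. Then connect to the other machine and check whether a file with the same name exists there; if it does, synchronization works as well.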
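To know where to look on the other machine, the returned file ID can be turned into a path. This is a sketch under assumptions the article does not state: `M00` is FastDFS's virtual name for the first store path, which here is /var/fdfs (consistent with `data path: /var/fdfs/data` in the storage log and the `data` directory listed above), and stored files sit under `<store_path>/data/<dir1>/<dir2>/`. `fdfs_local_path` is a hypothetical helper that only handles `M00`.

```bash
# Hypothetical helper: map a FastDFS file ID to its expected path on a storage node.
# fdfs_local_path FILE_ID [STORE_PATH]   (STORE_PATH defaults to /var/fdfs)
fdfs_local_path() {
  local file_id="$1" store_path="${2:-/var/fdfs}"
  local rest="${file_id#*/}"    # drop the group: "M00/00/00/<name>"
  local vpath="${rest%%/*}"     # virtual store path: "M00"
  if [[ "$vpath" != "M00" ]]; then
    echo "only M00 (the first store path) is handled in this sketch: $file_id" >&2
    return 1
  fi
  # "M00/00/00/<name>" -> "<store_path>/data/00/00/<name>"
  printf '%s/data/%s\n' "$store_path" "${rest#*/}"
}

# Example, run on the secondary server:
#   docker exec storage ls -l "$(fdfs_local_path group1/M00/00/00/gwAEFWLrs0GAQgVKAAAAKggbZ-Q442.txt)"
```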

4) FastDFS uses the following ports by default. If a firewall is enabled, make sure these ports are reachable:

* FastDFS Tracker port: 22122
* FastDFS Storage port: 23000
* FastDHT port: 11411
* HTTP access port: 8888
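A quick way to test the firewall from another machine is to try a TCP connection to each port. The sketch below assumes bash (for its `/dev/tcp` redirection) and GNU coreutils `timeout`; the helper names are hypothetical. Port 11411 will report unreachable unless FastDHT is actually running, since the steps above do not start it.

```bash
# Hypothetical helpers; pass a server IP as HOST.
# check_port HOST PORT: succeed if a TCP connection opens within 3 seconds.
check_port() {
  local host="$1" port="$2"
  # bash connects when fd 3 is redirected to /dev/tcp/HOST/PORT;
  # the connection closes when the child bash exits.
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# check_fastdfs_ports HOST: try every default port from the list above,
# print one line per port, and return non-zero if any port is unreachable.
check_fastdfs_ports() {
  local host="$1" port status=0
  for port in 22122 23000 11411 8888; do
    if check_port "$host" "$port"; then
      echo "${host}:${port} open"
    else
      echo "${host}:${port} unreachable"
      status=1
    fi
  done
  return "$status"
}

# Example: check_fastdfs_ports 131.0.4.21
```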

5 Configure cross-origin access (CORS) for FastDFS

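Once FastDFS is set up, files open fine when accessed directly in a browser, but a front-end application fetching them runs into cross-origin errors. FastDFS images are served through Nginx, so Nginx has to be configured to allow cross-origin access before the front end can load them.

Enter the tracker container, locate the Nginx configuration file, and modify it as follows: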

```nginx
location ~/group([0-9])/M00 {
    ngx_fastdfs_module;
    # Add the following 2 lines so the front end can fetch files cross-origin
    add_header 'Access-Control-Allow-Origin' '*';
    add_header 'Access-Control-Allow-Credentials' 'true';
}
```
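After editing, Nginx must reload its configuration, for example with `nginx -s reload` inside the container (the article does not say where the binary lives in the image). The header can then be checked from the command line. This is a sketch assuming curl is installed; `HOST` is a placeholder and the helper names are hypothetical.

```bash
# Hypothetical helpers for checking the CORS header returned by Nginx.

# cors_origin_from_headers: read raw HTTP response headers on stdin and print
# the Access-Control-Allow-Origin value (header names are case-insensitive).
cors_origin_from_headers() {
  tr -d '\r' | grep -i '^access-control-allow-origin:' | sed -E 's/^[^:]*:[[:space:]]*//'
}

# check_cors URL [ORIGIN]: send a GET with an Origin header, as a browser does
# for a cross-origin fetch; -D - dumps headers to stdout, -o discards the body.
check_cors() {
  local url="$1" origin="${2:-http://example.com}"
  curl -s -D - -o /dev/null -H "Origin: ${origin}" "$url" | cors_origin_from_headers
}

# Example (8888 is the access port listed above):
#   check_cors "http://HOST:8888/group1/M00/00/00/gwAEFWLrs0GAQgVKAAAAKggbZ-Q442.txt"
#   # should print * once the add_header lines are active
```

One caveat on the configuration itself: for requests sent with credentials (cookies), browsers reject a wildcard `Access-Control-Allow-Origin: *`, so such a front end needs its concrete origin in that header instead of `*`.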

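This article describes in detail how to set up FastDFS with Docker: pulling the image, creating the tracker and storage containers, editing the tracker's `tracker.conf` to raise the maximum number of concurrent connections, and starting and testing both containers. It also covers the ports FastDFS uses and the cross-origin configuration, which requires adding the corresponding headers in Nginx to allow cross-origin access.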
