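Common ways of deploying a highly available Kubernetes cluster fall into roughly three categories:

Manual deployment, with the components running as processes

Deployment with kubeadm, with the components running as containers

Manual deployment, with the components running as containers

If you are interested in the process-based approach, the following URL describes how to deploy an etcd cluster: https://github.com/zhangjiongdev/k8s/blob/master/etcdsetupfor_centos7.md

Follow the installation steps and settings in the order presented below. When testing, avoid reordering the steps or changing the settings, so as not to run into deployment problems.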

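Part 1

Desktop PC used for the deployment:

CPU: Intel i7

Memory: 16 GB

Operating system: 64-bit Windows 10 Pro

Network: unrestricted access to the internet within mainland China

Virtualization software: VirtualBox 6.0.8

VM operating system image: CentOS-7-x86_64-DVD-1810.iso

Note: on some networks, internet access control (traffic filtering) can interfere with the installation.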

Download VirtualBox and CentOS:

VirtualBox home page: www.virtualbox.org

VirtualBox-6.0.8-130520-Win.exe: https://download.virtualbox.org/virtualbox/6.0.8/VirtualBox-6.0.8-130520-Win.exe

Oracle_VM_VirtualBox_Extension_Pack-6.0.8.vbox-extpack: https://download.virtualbox.org/virtualbox/6.0.8/Oracle_VM_VirtualBox_Extension_Pack-6.0.8.vbox-extpack

CentOS home page: https://www.centos.org

CentOS-7-x86_64-DVD-1810.iso: http://isoredirect.centos.org/centos/7/isos/x86_64/CentOS-7-x86_64-DVD-1810.iso

安装 VirtualBox-6.0.8-130520-Win.exe:

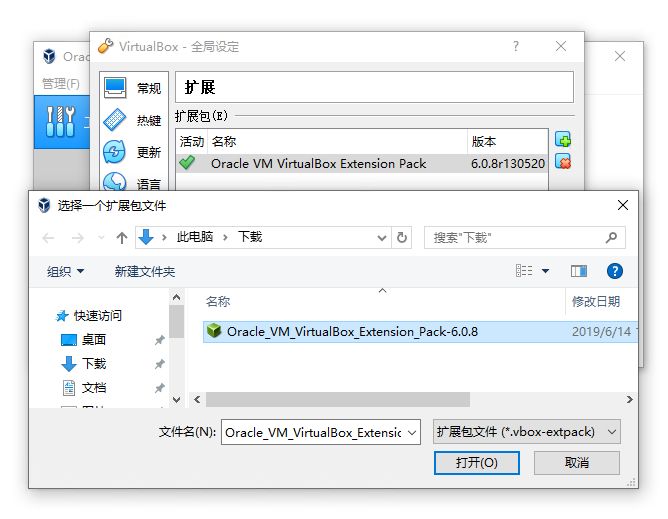

VirtualBox 导入 Extension_Pack:

菜单:管理>>全局设定>>扩展>>添加新包>>选择OracleVMVirtualBoxExtensionPack-6.0.8.vbox-extpack。

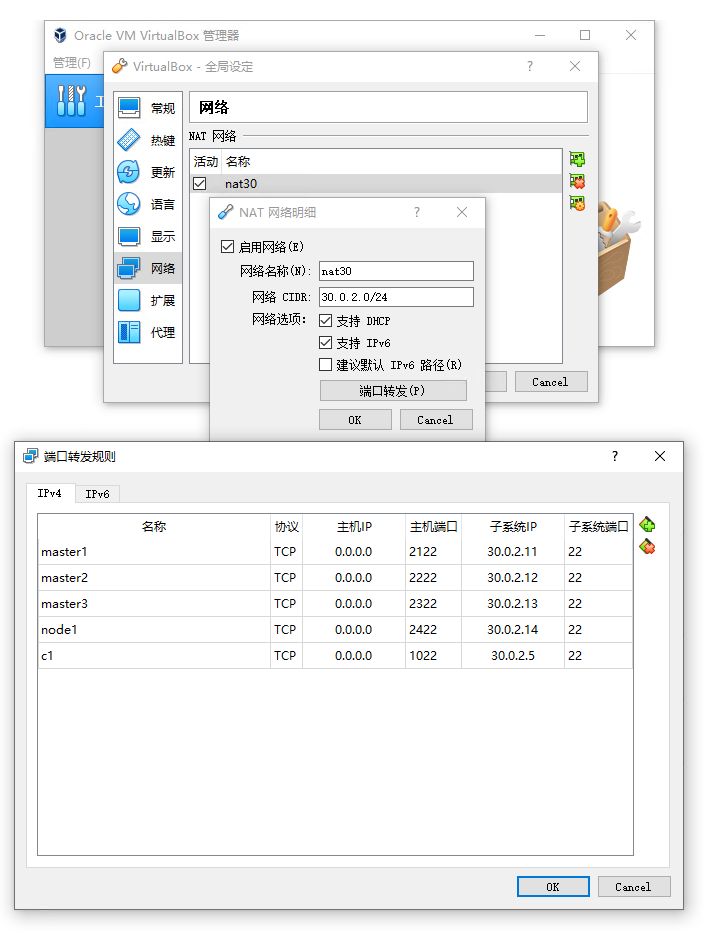

Create a VirtualBox NAT network:

File >> Preferences >> Network >> Add new NAT network >> Edit the newly added NAT network

Change:

Network Name: nat30

Network CIDR: 30.0.2.0/24

Supports DHCP: checked

Supports IPv6: checked

Port Forwarding >> IPv4

Name | Protocol | Host IP | Host Port | Guest IP | Guest Port

master1 | TCP | 0.0.0.0 | 2122 | 30.0.2.11 | 22

master2 | TCP | 0.0.0.0 | 2222 | 30.0.2.12 | 22

master3 | TCP | 0.0.0.0 | 2322 | 30.0.2.13 | 22

node1 | TCP | 0.0.0.0 | 2422 | 30.0.2.14 | 22

c1 | TCP | 0.0.0.0 | 1022 | 30.0.2.5 | 22
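With these forwarding rules in place, each VM's SSH port is reachable through a port on the host. The sketch below only looks up the port and prints the matching ssh command; it does not connect. The function names are hypothetical, and both the 127.0.0.1 address and the root user are assumptions (any shell with an ssh client on the host would do).

```shell
#!/usr/bin/env bash
# Map each VM name to the host port that is forwarded to its SSH port (22),
# using the values from the port-forwarding table above.
ssh_port_for() {
  case "$1" in
    master1) echo 2122 ;;
    master2) echo 2222 ;;
    master3) echo 2322 ;;
    node1)   echo 2422 ;;
    c1)      echo 1022 ;;
    *)       echo "unknown VM: $1" >&2; return 1 ;;
  esac
}

# Print (do not run) the SSH command for a VM.
# Assumption: we connect from the host itself, hence 127.0.0.1, as root.
ssh_cmd_for() {
  local port
  port=$(ssh_port_for "$1") || return 1
  echo "ssh -p ${port} root@127.0.0.1"
}

ssh_cmd_for master1
```

Keeping the mapping in one function makes it easy to loop over all four cluster VMs later, for example to run the same preparation command on each of them.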

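Create a virtual machine and install CentOS:

Create a new VM in VirtualBox

Name: (your choice)

Type: Linux

Version: Red Hat (64-bit)

Memory size: 1024 MB

Hard disk: Create a virtual hard disk now (default)

Hard disk file type: VDI (VirtualBox Disk Image)

Storage on physical hard disk: Dynamically allocated

Adjust the CPU settings:

Select the newly created VM >> Machine >> Settings >> System >> Processor

Processor(s): 2

Adjust the network settings:

Select the newly created VM >> Machine >> Settings >> Network >> Adapter 1 >>

Enable Network Adapter: checked

Attached to: NAT Network

Name: nat30

Set the installation disc:

Select the VM

Machine >> Settings >> Storage >> Controller: IDE >> Empty

Optical Drive: IDE Secondary Master >> disc icon >> Choose Virtual Optical Disk File >> locate the CentOS-7-x86_64-DVD-1810.iso file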

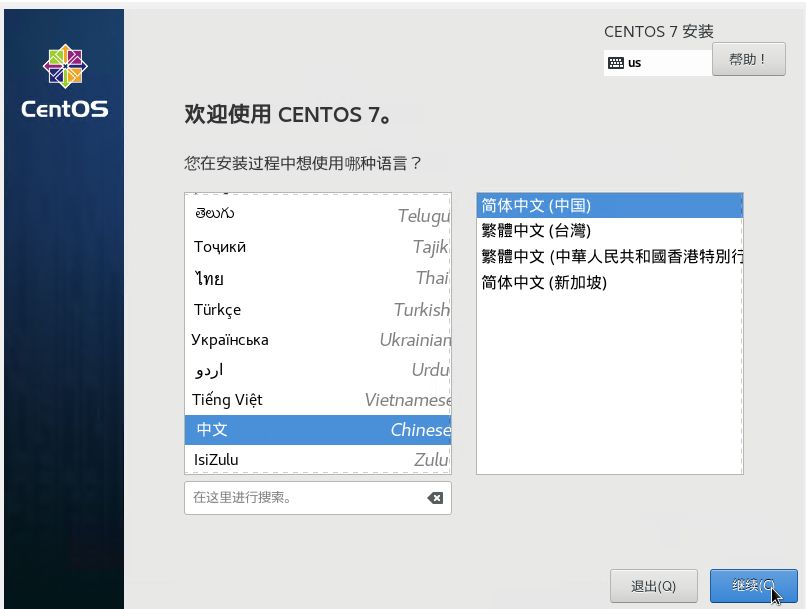

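Install CentOS-7-x86_64 (some installation steps are omitted):

Select the newly created VM >> Start

Installer language: Chinese, Simplified Chinese (China)

Minimal Install

Options not mentioned here keep their default values

Log in to CentOS as root:

Enable the network interface: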

sed -i "s/ONBOOT=no/ONBOOT=yes/g" /etc/sysconfig/network-scripts/ifcfg-enp0s3

service network restart

Stop and disable the CentOS firewall:

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux:

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Disable UseDNS:

sed -i "s/#UseDNS yes/UseDNS no/g" /etc/ssh/sshd_config

Set a static IP address. The example uses hip=30.0.2.11; on the 4 servers set it to 30.0.2.11, 30.0.2.12, 30.0.2.13, and 30.0.2.14, respectively:

hip=30.0.2.11

sed -i "s/BOOTPROTO=dhcp/BOOTPROTO=static/g" /etc/sysconfig/network-scripts/ifcfg-enp0s3

cat >>/etc/sysconfig/network-scripts/ifcfg-enp0s3<<EOF

IPADDR=${hip}

NETMASK=255.255.255.0

GATEWAY=30.0.2.1

DNS1=30.0.2.1

EOF

Set the hostname. The example uses master1; on the 4 servers use master1, master2, master3, and node1, respectively:

hostnamectl set-hostname master1

Reboot the server:

reboot

Part 2: Install Docker on the 4 VMs

Install the system tools that Docker requires:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker yum repository:

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

Install Docker CE:

yum -y install docker-ce

Start the Docker daemon and enable it at boot:

systemctl start docker && systemctl enable docker

Check the Docker version:

docker --version

At the time of writing, the installed version was Docker version 18.09.6, build 481bc77156.
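Because all four VMs should end up with the same Docker version, it can help to pull the bare version number out of the `docker --version` line and compare it across machines. The following is a minimal sketch; `docker_version_of` is a hypothetical helper, and the sample line is the one quoted above rather than live output.

```shell
#!/usr/bin/env bash
# Extract the version number from a `docker --version` style line.
# Example input: "Docker version 18.09.6, build 481bc77156"
docker_version_of() {
  echo "$1" | sed -n 's/^Docker version \([0-9][0-9.]*\).*/\1/p'
}

# Sample line copied from the text; on a real VM one would pass
# "$(docker --version)" instead.
docker_version_of "Docker version 18.09.6, build 481bc77156"
```

The helper prints nothing when the input does not look like a Docker version line, so an empty result can be treated as "Docker not installed or output not recognized".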

Part 3: Install and configure Keepalived + HAProxy

Install Keepalived + HAProxy on the three control-plane (Master) nodes.

Set the environment IPs:

yum install -y net-tools

vip=30.0.2.10

master1=30.0.2.11

master2=30.0.2.12

master3=30.0.2.13

node1=30.0.2.14

netswitch=`ifconfig | grep 'UP,BROADCAST,RUNNING,MULTICAST' | awk -F: '{print $1}'`

Set up hosts:

cat >>/etc/hosts<<EOF

${master1} master1

${master2} master2

${master3} master3

${node1} node1

EOF

Configure /etc/sysconfig/modules/ipvs.modules:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Install Keepalived + HAProxy:

yum install -y keepalived haproxy ipvsadm ipset

Configure and start Keepalived + HAProxy on Master1.

Configure Keepalived:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

nodename=`/bin/hostname`

cat >/etc/keepalived/keepalived.conf<<END4

! Configuration File for keepalived

global_defs {

router_id ${nodename}

}

vrrp_instance VI_1 {

state MASTER

interface ${netswitch}

virtual_router_id 88

advert_int 1

priority 100

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${vip}/24

}

}

END4

Configure HAProxy:

cat >/etc/haproxy/haproxy.cfg<<END1

global

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /var/lib/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode tcp

retries 3

option redispatch

listen https-apiserver

bind ${vip}:8443

mode tcp

balance roundrobin

timeout server 15s

timeout connect 15s

server apiserver01 ${master1}:6443 check port 6443 inter 5000 fall 5

server apiserver02 ${master2}:6443 check port 6443 inter 5000 fall 5

server apiserver03 ${master3}:6443 check port 6443 inter 5000 fall 5

END1

Start Keepalived and HAProxy:

systemctl enable keepalived && systemctl start keepalived

systemctl enable haproxy && systemctl start haproxy

Check that the virtual IP responds:

ping 30.0.2.10

Configure and start Keepalived + HAProxy on Master2 and Master3.

Configure Keepalived:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

nodename=`/bin/hostname`

cat >/etc/keepalived/keepalived.conf<<END4

! Configuration File for keepalived

global_defs {

router_id ${nodename}

}

vrrp_instance VI_1 {

state MASTER

interface ${netswitch}

virtual_router_id 88

advert_int 1

priority 90

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${vip}/24

}

}

END4

Configure HAProxy:

cat >/etc/haproxy/haproxy.cfg<<END1

global

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /var/lib/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode tcp

retries 3

option redispatch

listen https-apiserver

bind ${vip}:8443

mode tcp

balance roundrobin

timeout server 15s

timeout connect 15s

server apiserver01 ${master1}:6443 check port 6443 inter 5000 fall 5

server apiserver02 ${master2}:6443 check port 6443 inter 5000 fall 5

server apiserver03 ${master3}:6443 check port 6443 inter 5000 fall 5

END1

Start Keepalived and HAProxy:

systemctl enable keepalived && systemctl start keepalived

systemctl enable haproxy && systemctl start haproxy

Check that the virtual IP responds:

ping 30.0.2.10

Part 4: Deploy the Kubernetes HA cluster control plane

Download the required Kubernetes Docker images:

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-etcd:3.3.10

docker pull ${MY_REGISTRY}/k8s-gcr-io-pause:3.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-coredns:1.3.1

docker pull jmgao1983/flannel:v0.11.0-amd64

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag ${MY_REGISTRY}/k8s-gcr-io-pause:3.1 k8s.gcr.io/pause:3.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

docker tag jmgao1983/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

Turn off swap:

echo 'swapoff -a' >> /etc/profile

source /etc/profile

Generate an SSH key pair:

ssh-keygen -t rsa

Configure /etc/sysctl.d/k8s.conf:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl --system

Set up the Kubernetes yum repository:

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache fast

Install the kube components:

yum install -y kubelet kubeadm kubectl

Configure the cgroup driver:

echo 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"' >> /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

Start kubelet:

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Create the initialization configuration file:

cat >kubeadm-init.yaml<<END1

apiVersion: kubeadm.k8s.io/v1beta1

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: ${master1}

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: master1

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiVersion: kubeadm.k8s.io/v1beta1

kind: ClusterConfiguration

apiServer:

  timeoutForControlPlane: 4m0s

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "${vip}:6443"

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

kubernetesVersion: v1.14.2

networking:

  dnsDomain: cluster.local

  podSubnet: "10.244.0.0/16"

  serviceSubnet: "10.245.0.0/16"

scheduler: {}

controllerManager: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: "ipvs"

END1

Initialize the cluster:

kubeadm init --config kubeadm-init.yaml

Output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities and service account keys on each node and then running the following as root:

kubeadm join 30.0.2.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:5f491180e10907314ab6cac766bf13dae7e851a0a3749b035bd071c67697303c \

--experimental-control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 30.0.2.10:6443 --token abcdef.0123456789abcdef \

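--discovery-token-ca-cert-hash sha256:5f491180e10907314ab6cac766bf13dae7e851a0a3749b035bd071c67697303c

When the deployment finishes, output similar to the above is displayed. The first kubeadm join command is for joining the second and third Master nodes to the Kubernetes cluster; the second kubeadm join command is for joining worker (Node) nodes. The only difference is that the Master variant carries the extra --experimental-control-plane parameter.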
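The relationship between the two join commands can be sketched as a small helper that assembles the command line for either role. `join_cmd` is a hypothetical function that only prints text; the endpoint, token, and hash are the example values from the output above, and the real values must come from your own kubeadm init output.

```shell
#!/usr/bin/env bash
# Print the kubeadm join command for a given role.
# role: "master" (control-plane node) or anything else (worker node).
join_cmd() {
  local role=$1 endpoint=$2 token=$3 hash=$4
  local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash ${hash}"
  # Control-plane nodes get the extra flag shown in the kubeadm init output.
  if [ "$role" = "master" ]; then
    cmd="${cmd} --experimental-control-plane"
  fi
  echo "$cmd"
}

# Example values copied from the sample output; they are not valid for any
# other cluster.
hash=sha256:5f491180e10907314ab6cac766bf13dae7e851a0a3749b035bd071c67697303c
join_cmd master 30.0.2.10:6443 abcdef.0123456789abcdef "$hash"
join_cmd node   30.0.2.10:6443 abcdef.0123456789abcdef "$hash"
```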

Set up the kube config:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the component status and list the pods:

kubectl get cs

kubectl get pod --all-namespaces -o wide

Output:

[root@master1 ~]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-fb8b8dccf-jlwpw 0/1 Pending 0 7m22s <none> <none> <none> <none>

kube-system coredns-fb8b8dccf-mvzxv 0/1 Pending 0 7m22s <none> <none> <none> <none>

kube-system etcd-master1 1/1 Running 0 6m43s 30.0.2.11 master1 <none> <none>

kube-system kube-apiserver-master1 1/1 Running 0 6m35s 30.0.2.11 master1 <none> <none>

kube-system kube-controller-manager-master1 1/1 Running 0 6m47s 30.0.2.11 master1 <none> <none>

kube-system kube-proxy-c6tvk 1/1 Running 0 7m22s 30.0.2.11 master1 <none> <none>

kube-system kube-scheduler-master1 1/1 Running 0 6m30s 30.0.2.11 master1 <none> <none>

Download the Flannel manifest:

yum install -y wget

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Apply Flannel:

kubectl apply -f kube-flannel.yml

List the pods:

kubectl -n kube-system get pod -o wide

Output:

[root@master1 ~]# kubectl -n kube-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-fb8b8dccf-jlwpw 1/1 Running 0 11m 10.244.0.2 master1 <none> <none>

coredns-fb8b8dccf-mvzxv 1/1 Running 0 11m 10.244.0.3 master1 <none> <none>

etcd-master1 1/1 Running 0 11m 30.0.2.11 master1 <none> <none>

kube-apiserver-master1 1/1 Running 0 10m 30.0.2.11 master1 <none> <none>

kube-controller-manager-master1 1/1 Running 0 11m 30.0.2.11 master1 <none> <none>

kube-flannel-ds-amd64-6gx8f 1/1 Running 0 2m22s 30.0.2.11 master1 <none> <none>

kube-proxy-c6tvk 1/1 Running 0 11m 30.0.2.11 master1 <none> <none>

kube-scheduler-master1 1/1 Running 0 10m 30.0.2.11 master1 <none> <none>

Install and configure the second and third control-plane nodes (Master2 and Master3) and join them to the Kubernetes HA cluster.

Download the required Kubernetes Docker images:

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-etcd:3.3.10

docker pull ${MY_REGISTRY}/k8s-gcr-io-pause:3.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-coredns:1.3.1

docker pull jmgao1983/flannel:v0.11.0-amd64

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag ${MY_REGISTRY}/k8s-gcr-io-pause:3.1 k8s.gcr.io/pause:3.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

docker tag jmgao1983/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

Turn off swap:

echo 'swapoff -a' >> /etc/profile

source /etc/profile

Copy the public key:

mkdir /root/.ssh

chmod 700 /root/.ssh

scp root@master1:/root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

Copy the CA certificates and keys:

mkdir -p /etc/kubernetes/pki/etcd

scp root@master1:/etc/kubernetes/pki/\{ca.*,sa.*,front-proxy-ca.*\} /etc/kubernetes/pki/

scp root@master1:/etc/kubernetes/pki/etcd/ca.* /etc/kubernetes/pki/etcd/

scp root@master1:/etc/kubernetes/admin.conf /etc/kubernetes/

mkdir -p $HOME/.kube

scp root@master1:$HOME/.kube/config $HOME/.kube/config
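Before joining, it may be worth confirming that every shared file actually arrived. The sketch below lists the files that the glob patterns above are expected to match and reports any that are missing. The function names are hypothetical, and the individual file names (ca.crt, ca.key, sa.key, sa.pub, and so on) are an assumption based on what kubeadm normally generates under /etc/kubernetes/pki.

```shell
#!/usr/bin/env bash
# Print the files that the scp commands above are expected to copy from
# master1. Assumption: kubeadm's usual file names under /etc/kubernetes/pki.
shared_pki_files() {
  local pki=/etc/kubernetes/pki
  local f
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
    echo "${pki}/${f}"
  done
  echo "${pki}/etcd/ca.crt"
  echo "${pki}/etcd/ca.key"
  echo "/etc/kubernetes/admin.conf"
}

# Print every expected file that does not exist below the given root
# directory. The root defaults to empty, which means the real filesystem;
# passing another directory is only meant for dry runs.
missing_pki_files() {
  local root=${1:-}
  local f
  shared_pki_files | while read -r f; do
    [ -e "${root}${f}" ] || echo "$f"
  done
}
```

On Master2 or Master3, running `missing_pki_files` with no argument should print nothing once the copy has succeeded.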

Configure /etc/sysctl.d/k8s.conf:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl --system

Set up the Kubernetes yum repository:

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache fast

Install the kube components:

yum install -y kubelet kubeadm kubectl

Configure the cgroup driver:

echo 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"' >> /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
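The kubelet and Docker need to agree on the cgroup driver, so a quick comparison can catch a mismatch before kubelet starts. This is a sketch under assumptions: the helper names are hypothetical, the `Cgroup Driver:` line is the form in which `docker info` usually reports the driver, and the sample inputs are literals rather than live output.

```shell
#!/usr/bin/env bash
# Extract the cgroup driver from `docker info` style text on stdin.
# Assumption: the text contains a line such as " Cgroup Driver: cgroupfs".
cgroup_driver_from() {
  sed -n 's/^ *Cgroup Driver: *//p'
}

# Extract the value of --cgroup-driver= from kubelet configuration text on
# stdin, for example the Environment line appended above.
kubelet_driver_from() {
  sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p'
}

# Succeed only if both values are non-empty and identical.
drivers_match() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

docker_driver=$(printf 'Server Version: 18.09.6\n Cgroup Driver: cgroupfs\n' | cgroup_driver_from)
kubelet_driver=$(echo 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"' | kubelet_driver_from)
drivers_match "$docker_driver" "$kubelet_driver" && echo "cgroup drivers match: $docker_driver"
```

On a real host, the first helper would read from `docker info` and the second from the kubelet drop-in file instead of the literal strings used here.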

Start kubelet:

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Join the cluster:

Note: the following is an example command; run the actual command printed by kubeadm init on Master1.

kubeadm join 30.0.2.10:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5f491180e10907314ab6cac766bf13dae7e851a0a3749b035bd071c67697303c \
--experimental-control-plane

List the pods:

kubectl -n kube-system get pod -o wide

Part 5: Deploy the Kubernetes HA cluster data plane

Turn off swap:

echo 'swapoff -a' >> /etc/profile

source /etc/profile

Set the environment IPs:

yum install -y net-tools

vip=30.0.2.10

master1=30.0.2.11

master2=30.0.2.12

master3=30.0.2.13

node1=30.0.2.14

netswitch=`ifconfig | grep 'UP,BROADCAST,RUNNING,MULTICAST' | awk -F: '{print $1}'`

Set up hosts:

cat >>/etc/hosts<<EOF

${master1} master1

${master2} master2

${master3} master3

${node1} node1

EOF

Copy the public key:

mkdir /root/.ssh

chmod 700 /root/.ssh

scp root@master1:/root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

Configure /etc/sysctl.d/k8s.conf:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl --system

Configure /etc/sysconfig/modules/ipvs.modules:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Download the container images:

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2

docker pull ${MY_REGISTRY}/k8s-gcr-io-pause:3.1

docker pull jmgao1983/flannel:v0.11.0-amd64

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2

docker tag ${MY_REGISTRY}/k8s-gcr-io-pause:3.1 k8s.gcr.io/pause:3.1

docker tag jmgao1983/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

Set up the Kubernetes yum repository:

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache fast

yum install -y kubelet kubeadm kubectl

Configure the cgroupfs cgroup driver:

echo 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"' >> /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Set up the kube config file:

mkdir -p $HOME/.kube

scp root@master1:$HOME/.kube/config $HOME/.kube/config

Join the cluster:

Note: the following is an example command; run the actual command printed by kubeadm init on Master1.

kubeadm join 30.0.2.10:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5f491180e10907314ab6cac766bf13dae7e851a0a3749b035bd071c67697303c

List the nodes:

kubectl get nodes

Output:

[root@node1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready master 95m v1.14.3

master2 Ready master 30m v1.14.3

master3 Ready master 28m v1.14.3

node1 Ready <none> 90s v1.14.3

List the pods:

kubectl -n kube-system get pod -o wide

Output:

[root@node1 ~]# kubectl -n kube-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-fb8b8dccf-jlwpw 1/1 Running 0 96m 10.244.0.2 master1 <none> <none>

coredns-fb8b8dccf-mvzxv 1/1 Running 0 96m 10.244.0.3 master1 <none> <none>

etcd-master1 1/1 Running 0 96m 30.0.2.11 master1 <none> <none>

etcd-master2 1/1 Running 0 31m 30.0.2.12 master2 <none> <none>

etcd-master3 1/1 Running 0 30m 30.0.2.13 master3 <none> <none>

kube-apiserver-master1 1/1 Running 0 96m 30.0.2.11 master1 <none> <none>

kube-apiserver-master2 1/1 Running 1 31m 30.0.2.12 master2 <none> <none>

kube-apiserver-master3 1/1 Running 0 29m 30.0.2.13 master3 <none> <none>

kube-controller-manager-master1 1/1 Running 1 96m 30.0.2.11 master1 <none> <none>

kube-controller-manager-master2 1/1 Running 1 30m 30.0.2.12 master2 <none> <none>

kube-controller-manager-master3 1/1 Running 0 29m 30.0.2.13 master3 <none> <none>

kube-flannel-ds-amd64-4rkmg 1/1 Running 0 3m20s 30.0.2.14 node1 <none> <none>

kube-flannel-ds-amd64-6gx8f 1/1 Running 0 87m 30.0.2.11 master1 <none> <none>

kube-flannel-ds-amd64-9dpjf 1/1 Running 0 30m 30.0.2.13 master3 <none> <none>

kube-flannel-ds-amd64-r8qkx 1/1 Running 0 31m 30.0.2.12 master2 <none> <none>

kube-proxy-8gb5d 1/1 Running 0 30m 30.0.2.13 master3 <none> <none>

kube-proxy-c6tvk 1/1 Running 0 96m 30.0.2.11 master1 <none> <none>

kube-proxy-dzp2x 1/1 Running 0 3m20s 30.0.2.14 node1 <none> <none>

kube-proxy-q46t6 1/1 Running 0 31m 30.0.2.12 master2 <none> <none>

kube-scheduler-master1 1/1 Running 1 95m 30.0.2.11 master1 <none> <none>

kube-scheduler-master2 1/1 Running 0 30m 30.0.2.12 master2 <none> <none>

kube-scheduler-master3 1/1 Running 0 29m 30.0.2.13 master3 <none> <none>

Part 6: Test a stateless Nginx Pod

cat << EOF > nginxdeployment.yaml

apiVersion: apps/v1beta1

kind: Deployment

metadata:

  name: nginx-deployment

spec:

  replicas: 2 # tells deployment to run 2 pods matching the template

  template: # create pods using pod definition in this template

    metadata:

      # unlike pod-nginx.yaml, the name is not included in the meta data as a unique name is

      # generated from the deployment name

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: nginx:1.7.9

        ports:

        - containerPort: 80

EOF

kubectl create -f nginxdeployment.yaml

kubectl get pods

Output:

[root@master1 ~]# kubectl create -f nginxdeployment.yaml

deployment.apps/nginx-deployment created

[root@master1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-6dd86d77d-9tvh9 1/1 Running 0 5m57s

nginx-deployment-6dd86d77d-fvzql 1/1 Running 0 5m57s

Create a Service for the stateless Nginx Pods and test its cluster IP:

cat << EOF > nginxsvc.yaml

apiVersion: v1

kind: Service

metadata:

  name: nginx

  labels:

    run: nginx

spec:

  ports:

  - port: 80

    name: http

    protocol: TCP

    targetPort: 80

  selector:

    app: nginx

EOF

kubectl create -f nginxsvc.yaml

kubectl get svc

Output:

[root@master1 ~]# kubectl create -f nginxsvc.yaml

service/nginx created

[root@master1 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.245.0.1 <none> 443/TCP 108m

nginx ClusterIP 10.245.122.19 <none> 80/TCP 11s

Test whether the Nginx deployment is serving requests:

curl http://10.245.122.19

Output:

[root@master1 ~]# curl http://10.245.122.19

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
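Rather than reading the HTML by eye, the response can be checked for the welcome-page title. A minimal sketch follows; `is_nginx_welcome` is a hypothetical helper, and the sample input is a literal string rather than a live response.

```shell
#!/usr/bin/env bash
# Succeed if the HTML on stdin looks like the default nginx welcome page.
is_nginx_welcome() {
  grep -q '<title>Welcome to nginx!</title>'
}

# Literal sample input; on the cluster one would pipe the output of
# `curl -s http://<cluster-ip>` into the function instead.
if printf '<html>\n<head>\n<title>Welcome to nginx!</title>\n</head>\n</html>\n' | is_nginx_welcome; then
  echo "nginx welcome page detected"
fi
```

Because the function returns an exit status, it can also be used in a retry loop while waiting for the Pods behind the Service to become ready.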

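This post covers manual deployment in both process and container form, as well as kubeadm deployment in container form. It also describes the VM-based environment in detail, including the CPU, memory, operating system, and other hardware and software, together with the steps for creating, configuring, and starting the VMs.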
