
DolphinScheduler 3.1.1 Deployment

DolphinScheduler official site: https://dolphinscheduler.apache.org/zh-cn/

DolphinScheduler download page: https://dolphinscheduler.apache.org/zh-cn/download/download.html

![image-20221202170937125](https://gitee.com/yx9119/imgs/raw/master/PidGo_imgs/image-20221202170937125.png)

![image-20221202171046057](https://gitee.com/yx9119/imgs/raw/master/PidGo_imgs/image-20221202171046057.png)

DolphinScheduler directory structure

(source: official website):

├── LICENSE

│

├── NOTICE

│

├── licenses Directory containing the license files

│

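├── bin Directory for DolphinScheduler commands and environment variable configuration

│ ├── dolphinscheduler-daemon.sh Script to start/stop DolphinScheduler services

│ ├── env Directory for environment variable configuration

│ │ ├── dolphinscheduler_env.sh Loads the environment variables [e.g. JAVA_HOME, HADOOP_HOME, HIVE_HOME ...] when services are started or stopped with `dolphinscheduler-daemon.sh`

│ │ └── install_env.sh Loads the installation environment variables for DolphinScheduler when `install.sh`, `start-all.sh`, `stop-all.sh`, or `status-all.sh` is run

│ ├── install.sh Installs the services automatically when DolphinScheduler is deployed in `cluster` or `pseudo-cluster` mode

│ ├── remove-zk-node.sh Script to clean up ZooKeeper cache files

│ ├── scp-hosts.sh Script to transfer the installation files

│ ├── start-all.sh Starts all services when DolphinScheduler is deployed in `cluster` or `pseudo-cluster` mode

│ ├── status-all.sh Reports the status of all services when DolphinScheduler is deployed in `cluster` or `pseudo-cluster` mode

│ └── stop-all.sh Stops all services when DolphinScheduler is deployed in `cluster` or `pseudo-cluster` mode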

│

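├── alert-server Directory for DolphinScheduler alert-server commands, configuration, and dependencies

│ ├── bin

│ │ └── start.sh DolphinScheduler alert-server start script

│ ├── conf

│ │ ├── application.yaml alert-server configuration file

│ │ ├── bootstrap.yaml Spring Cloud bootstrap-phase configuration file; usually does not need to be changed

│ │ ├── common.properties Configuration file for common services (storage, etc.)

│ │ ├── dolphinscheduler_env.sh Script that loads the alert-server environment variables

│ │ └── logback-spring.xml alert-server logging configuration file

│ └── libs Directory for the alert-server dependency jars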

│

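├── api-server Directory for DolphinScheduler api-server commands, configuration, and dependencies

│ ├── bin

│ │ └── start.sh DolphinScheduler api-server start script

│ ├── conf

│ │ ├── application.yaml api-server configuration file

│ │ ├── bootstrap.yaml Spring Cloud bootstrap-phase configuration file; usually does not need to be changed

│ │ ├── common.properties Configuration file for common services (storage, etc.)

│ │ ├── dolphinscheduler_env.sh Script that loads the api-server environment variables

│ │ └── logback-spring.xml api-server logging configuration file

│ ├── libs Directory for the api-server dependency jars

│ └── ui Directory for the front-end web resources used by api-server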

│

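├── master-server Directory for DolphinScheduler master-server commands, configuration, and dependencies

│ ├── bin

│ │ └── start.sh DolphinScheduler master-server start script

│ ├── conf

│ │ ├── application.yaml master-server configuration file

│ │ ├── bootstrap.yaml Spring Cloud bootstrap-phase configuration file; usually does not need to be changed

│ │ ├── common.properties Configuration file for common services (storage, etc.)

│ │ ├── dolphinscheduler_env.sh Script that loads the master-server environment variables

│ │ └── logback-spring.xml master-server logging configuration file

│ └── libs Directory for the master-server dependency jars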

│

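├── standalone-server Directory for DolphinScheduler standalone-server commands, configuration, and dependencies

│ ├── bin

│ │ └── start.sh DolphinScheduler standalone-server start script

│ ├── conf

│ │ ├── application.yaml standalone-server configuration file

│ │ ├── bootstrap.yaml Spring Cloud bootstrap-phase configuration file; usually does not need to be changed

│ │ ├── common.properties Configuration file for common services (storage, etc.)

│ │ ├── dolphinscheduler_env.sh Script that loads the standalone-server environment variables

│ │ ├── logback-spring.xml standalone-server logging configuration file

│ │ └── sql SQL files for creating/upgrading the DolphinScheduler metadata

│ ├── libs Directory for the standalone-server dependency jars

│ └── ui Directory for the front-end web resources used by standalone-server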

│

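├── tools Directory for the DolphinScheduler metadata tool commands, configuration, and dependencies

│ ├── bin

│ │ └── upgrade-schema.sh Script for creating/upgrading the DolphinScheduler metadata

│ ├── conf

│ │ ├── application.yaml Metadata tool configuration file

│ │ └── common.properties Configuration file for common services (storage, etc.)

│ ├── libs Directory for the metadata tool dependency jars

│ └── sql SQL files for creating/upgrading the DolphinScheduler metadata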

│

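├── worker-server Directory for DolphinScheduler worker-server commands, configuration, and dependencies

│ ├── bin

│ │ └── start.sh DolphinScheduler worker-server start script

│ ├── conf

│ │ ├── application.yaml worker-server configuration file

│ │ ├── bootstrap.yaml Spring Cloud bootstrap-phase configuration file; usually does not need to be changed

│ │ ├── common.properties Configuration file for common services (storage, etc.)

│ │ ├── dolphinscheduler_env.sh Script that loads the worker-server environment variables

│ │ └── logback-spring.xml worker-server logging configuration file

│ └── libs Directory for the worker-server dependency jars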

│

└── ui Directory for the front-end web resources

Upload apache-dolphinscheduler-3.1.1-bin.tar.gz to the target directory on the server (/opt/)

Extract the archive

tar -zxvf apache-dolphinscheduler-3.1.1-bin.tar.gz
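After extraction it can be worth confirming that the package matches the directory structure listed earlier. The following is only a minimal sketch: the function name `missing_components` and the `DS_HOME` default are illustrative assumptions (the archive is assumed to unpack into /opt/apache-dolphinscheduler-3.1.1-bin), not part of DolphinScheduler itself.

```shell
#!/usr/bin/env bash
# Sketch: list the expected top-level directories that are missing from an
# extracted DolphinScheduler package. The directory names come from the
# directory structure above; the function name is hypothetical.

missing_components() {
  local ds_home="$1"
  local dir
  for dir in bin alert-server api-server master-server worker-server tools; do
    # Print the name of every expected directory that does not exist.
    [ -d "${ds_home}/${dir}" ] || echo "${dir}"
  done
  return 0
}

# Assumed extraction path; adjust it to the real location.
DS_HOME="${DS_HOME:-/opt/apache-dolphinscheduler-3.1.1-bin}"

if [ -d "${DS_HOME}" ]; then
  missing="$(missing_components "${DS_HOME}")"
  if [ -n "${missing}" ]; then
    echo "Missing under ${DS_HOME}: ${missing}" >&2
  else
    echo "Package layout under ${DS_HOME} looks complete."
  fi
fi
```

If the check prints nothing to stderr, the remaining steps can be run from inside that directory.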

Configure install_env.sh

vim bin/env/install_env.sh

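# Machines on which DolphinScheduler will be installed (including master, worker, api, alert)

# For a pseudo-cluster deployment, list only the hostname of the pseudo-cluster machine

# For example:

# - Hostname example: ips="ds1,ds2,ds3,ds4,ds5"

# - IP example: ips="192.168.8.1,192.168.8.2,192.168.8.3,192.168.8.4,192.168.8.5"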

ips=${ips:-"ds1,ds2,ds3,ds4,ds5"}

# Port of the SSH protocol, default 22

sshPort=${sshPort:-"22"}

# Hostnames or IP addresses of the Master servers (must be a subset of ips)

# For example:

# - Hostname example: masters="ds1,ds2"

# - IP example: masters="192.168.8.1,192.168.8.2"

masters=${masters:-"ds1,ds2"}

# Hostnames or IP addresses of the Worker servers (must be a subset of ips)

# For example:

# - Hostname example: workers="ds1:default,ds2:default,ds3:default"

# - IP example: workers="192.168.8.1:default,192.168.8.2:default,192.168.8.3:default"

workers=${workers:-"ds1:default,ds2:default,ds3:default,ds4:default,ds5:default"}

# Hostname or IP address of the AlertServer (must be a subset of ips)

# - Hostname example: alertServer="ds3"

# - IP example: alertServer="192.168.8.3"

alertServer=${alertServer:-"ds3"}

# Hostnames or IP addresses of the API servers (must be a subset of ips)

# - Hostname example: apiServers="ds1"

# - IP example: apiServers="192.168.8.1"

apiServers=${apiServers:-"ds1"}

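# Directory in which DolphinScheduler is installed on all machines configured above; `install.sh` creates it automatically

# Note: do not set this to the same path as the current directory; if you use a relative path, do not add quotes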

installPath=${installPath:-"/tmp/dolphinscheduler"}

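# User that deploys DolphinScheduler on all machines configured above.

# You must create this user yourself before running the `install.sh` script.

# The user needs sudo privileges and permission to operate HDFS. If HDFS is enabled, this user must create the root directory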

deployUser=${deployUser:-"dolphinscheduler"}

# Root path in ZooKeeper; ZooKeeper is currently the default registry server for DolphinScheduler.

zkRoot=${zkRoot:-"/dolphinscheduler"}

# ZooKeeper registry namespace

registryNamespace="dolphinscheduler3"
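The comments above require masters, workers, alertServer, and apiServers to each be a subset of ips. A minimal sketch of how that could be checked before running install.sh follows; the helper `is_subset` is a hypothetical function written for this illustration, and the values simply mirror the defaults shown above.

```shell
#!/usr/bin/env bash
# Sketch: check that every host in a role list also appears in `ips`.
# is_subset is a hypothetical helper, not part of DolphinScheduler.

# Usage: is_subset "<comma-separated subset>" "<comma-separated superset>"
# Worker entries may carry a ":group" suffix (e.g. ds1:default); it is stripped.
is_subset() {
  local subset="$1" superset=",$2,"
  local entry host
  local IFS=','
  for entry in ${subset}; do
    host="${entry%%:*}"
    case "${superset}" in
      *",${host},"*) ;;  # host is listed in the superset
      *) echo "host not in ips: ${host}" >&2; return 1 ;;
    esac
  done
  return 0
}

# Example values mirroring the defaults in install_env.sh.
ips="ds1,ds2,ds3,ds4,ds5"
masters="ds1,ds2"
workers="ds1:default,ds2:default,ds3:default,ds4:default,ds5:default"
alertServer="ds3"
apiServers="ds1"

for role in "${masters}" "${workers}" "${alertServer}" "${apiServers}"; do
  is_subset "${role}" "${ips}" || echo "fix install_env.sh before running install.sh" >&2
done
```

Wrapping the superset in commas keeps a host such as ds1 from matching ds10 by accident.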

Configure dolphinscheduler_env.sh

# This JAVA_HOME is used to start the DolphinScheduler services

export JAVA_HOME=${JAVA_HOME:-/opt/soft/java}

# Database settings: database type, username, and password

export DATABASE=${DATABASE:-postgresql}

export SPRING_PROFILES_ACTIVE=${DATABASE}

export SPRING_DATASOURCE_URL

export SPRING_DATASOURCE_USERNAME

export SPRING_DATASOURCE_PASSWORD

# Settings for the DolphinScheduler services

export SPRING_CACHE_TYPE=${SPRING_CACHE_TYPE:-none}

export SPRING_JACKSON_TIME_ZONE=${SPRING_JACKSON_TIME_ZONE:-UTC}

export MASTER_FETCH_COMMAND_NUM=${MASTER_FETCH_COMMAND_NUM:-10}

# Registry center settings: registry type and connection address

export REGISTRY_TYPE=${REGISTRY_TYPE:-zookeeper}

export REGISTRY_ZOOKEEPER_CONNECT_STRING=${REGISTRY_ZOOKEEPER_CONNECT_STRING:-localhost:2181}

# Settings for workflow tasks; adjust them to what your tasks actually need

export HADOOP_HOME=${HADOOP_HOME:-/opt/soft/hadoop}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}

export SPARK_HOME1=${SPARK_HOME1:-/opt/soft/spark1}

export SPARK_HOME2=${SPARK_HOME2:-/opt/soft/spark2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/soft/python}

export HIVE_HOME=${HIVE_HOME:-/opt/soft/hive}

export FLINK_HOME=${FLINK_HOME:-/opt/soft/flink}

export DATAX_HOME=${DATAX_HOME:-/opt/soft/datax}

# Add the bin directories of the tools configured above to PATH

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$PATH
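The three SPRING_DATASOURCE_* variables above are exported without values, so they have to be filled in for the database actually used. The fragment below is a sketch for PostgreSQL only; the host, port, database name, username, and password are placeholders chosen for illustration and must be replaced with real values.

```shell
#!/usr/bin/env bash
# Sketch of the datasource part of bin/env/dolphinscheduler_env.sh for PostgreSQL.
# Host, port, database name, user, and password are placeholder assumptions.

export DATABASE=${DATABASE:-postgresql}
export SPRING_PROFILES_ACTIVE=${DATABASE}
export SPRING_DATASOURCE_URL="jdbc:postgresql://127.0.0.1:5432/dolphinscheduler"
export SPRING_DATASOURCE_USERNAME="dolphinscheduler"
export SPRING_DATASOURCE_PASSWORD="change-me"

# Once these are set, the metadata schema can be created with the script
# listed in the directory structure (run from the installation root):
#   bash tools/bin/upgrade-schema.sh
```

The database and user are assumed to exist already; creating them is a separate step on the database side.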

Configure common.properties

# user data local directory path, please make sure the directory exists and have read write permissions

data.basedir.path=/tmp/dolphinscheduler3

# resource view suffixs

#resource.view.suffixs=txt,log,sh,bat,conf,cfg,py,java,sql,xml,hql,properties,json,yml,yaml,ini,js

# resource storage type: HDFS, S3, OSS, NONE

resource.storage.type=HDFS

# resource store on HDFS/S3 path, resource file will store to this base path, self configuration, please make sure the directory exists on hdfs and have read write permissions. "/dolphinscheduler" is recommended

resource.storage.upload.base.path=/dolphinscheduler3

# The AWS access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.access.key.id=minioadmin

# The AWS secret access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.secret.access.key=minioadmin

# The AWS Region to use. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.region=cn-north-1

# The name of the bucket. You need to create them by yourself. Otherwise, the system cannot start. All buckets in Amazon S3 share a single namespace; ensure the bucket is given a unique name.

resource.aws.s3.bucket.name=dolphinscheduler

# You need to set this parameter when private cloud s3. If S3 uses public cloud, you only need to set resource.aws.region or set to the endpoint of a public cloud such as S3.cn-north-1.amazonaws.com.cn

resource.aws.s3.endpoint=http://localhost:9000

# alibaba cloud access key id, required if you set resource.storage.type=OSS

resource.alibaba.cloud.access.key.id=<your-access-key-id>

# alibaba cloud access key secret, required if you set resource.storage.type=OSS

resource.alibaba.cloud.access.key.secret=<your-access-key-secret>

# alibaba cloud region, required if you set resource.storage.type=OSS

resource.alibaba.cloud.region=cn-hangzhou

# oss bucket name, required if you set resource.storage.type=OSS

resource.alibaba.cloud.oss.bucket.name=dolphinscheduler

# oss bucket endpoint, required if you set resource.storage.type=OSS

resource.alibaba.cloud.oss.endpoint=https://oss-cn-hangzhou.aliyuncs.com

# if resource.storage.type=HDFS, the user must have the permission to create directories under the HDFS root path

resource.hdfs.root.user=omm

# if resource.storage.type=S3, the value like: s3a://dolphinscheduler; if resource.storage.type=HDFS and namenode HA is enabled, you need to copy core-site.xml and hdfs-site.xml to conf dir

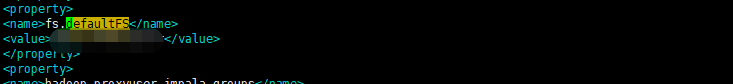

# (If HDFS is configured with HA, core-site.xml and hdfs-site.xml must be placed in the conf directory of each component. resource.hdfs.fs.defaultFS must not be left empty or removed; its value is the fs.defaultFS setting in core-site.xml.)

resource.hdfs.fs.defaultFS=hdfs://hacluster

# whether to startup kerberos

hadoop.security.authentication.startup.state=false

# java.security.krb5.conf path

java.security.krb5.conf.path=/opt/krb5.conf

# login user from keytab username

login.user.keytab.username=hdfs-mycluster@ESZ.COM

# login user from keytab path

login.user.keytab.path=/opt/hdfs.headless.keytab

# kerberos expire time, the unit is hour

kerberos.expire.time=2

# resourcemanager port, the default value is 8088 if not specified

resource.manager.httpaddress.port=8088

# if resourcemanager HA is enabled, please set the HA IPs; if resourcemanager is single, keep this value empty

#yarn.resourcemanager.ha.rm.ids=192.168.xx.xx,192.168.xx.xx

yarn.resourcemanager.ha.rm.ids=192.168.0.183,192.168.0.182

# if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single, you only need to replace ds1 to actual resourcemanager hostname

yarn.application.status.address=http://192.168.0.182:%s/ws/v1/cluster/apps/%s

# job history status url when application number threshold is reached(default 10000, maybe it was set to 1000)

yarn.job.history.status.address=http://192.168.0.182:19888/ws/v1/history/mapreduce/jobs/%s

# datasource encryption enable

datasource.encryption.enable=false

# datasource encryption salt

datasource.encryption.salt=!@#$%^&*

# data quality option

data-quality.jar.name=dolphinscheduler-data-quality-dev-SNAPSHOT.jar

#data-quality.error.output.path=/tmp/data-quality-error-data

# Network IP gets priority, default inner outer

# Whether hive SQL is executed in the same session

support.hive.oneSession=false

# use sudo or not, if set true, executing user is tenant user and deploy user needs sudo permissions; if set false, executing user is the deploy user and doesn't need sudo permissions

sudo.enable=true

setTaskDirToTenant.enable=false

# network interface preferred like eth0, default: empty

#dolphin.scheduler.network.interface.preferred=

# network IP gets priority, default: inner outer

#dolphin.scheduler.network.priority.strategy=default

# system env path

#dolphinscheduler.env.path=dolphinscheduler_env.sh

# development state

development.state=false

# rpc port

alert.rpc.port=50052

# set path of conda.sh

conda.path=/opt/anaconda3/etc/profile.d/conda.sh

# Task resource limit state

task.resource.limit.state=false

# mlflow task plugin preset repository

ml.mlflow.preset_repository=https://github.com/apache/dolphinscheduler-mlflow

# mlflow task plugin preset repository version

ml.mlflow.preset_repository_version="main"
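For an HDFS HA setup, the note in the configuration above says core-site.xml and hdfs-site.xml must be present in each component's conf directory. A minimal sketch of that copy step follows; the function name `copy_hadoop_conf`, the list of components, and both default paths are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Sketch: copy core-site.xml and hdfs-site.xml into the conf directory of
# each server component. copy_hadoop_conf is a hypothetical helper.

# Usage: copy_hadoop_conf <hadoop conf dir> <DolphinScheduler home>
copy_hadoop_conf() {
  local hadoop_conf_dir="$1" ds_home="$2"
  local component file
  for component in alert-server api-server master-server worker-server; do
    # Skip components that are not present in this installation.
    [ -d "${ds_home}/${component}/conf" ] || continue
    for file in core-site.xml hdfs-site.xml; do
      cp "${hadoop_conf_dir}/${file}" "${ds_home}/${component}/conf/" || return 1
    done
  done
  return 0
}

# Assumed locations; adjust them to the real environment.
HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}"
DS_HOME="${DS_HOME:-/opt/apache-dolphinscheduler-3.1.1-bin}"

if [ -f "${HADOOP_CONF_DIR}/core-site.xml" ] && [ -d "${DS_HOME}" ]; then
  copy_hadoop_conf "${HADOOP_CONF_DIR}" "${DS_HOME}"
fi
```

Running it before install.sh means the files are distributed to the other machines together with the rest of the package.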
