肖哥弹架构 跟大家“弹弹” Spring JDBCTemplate设计与实战应用,需要代码关注

欢迎 点赞,点赞,点赞。

关注公号Solomon肖哥弹架构获取更多精彩内容

历史热点文章

- MyCat应用实战:分布式数据库中间件的实践与优化(篇幅一)

- 图解深度剖析:MyCat 架构设计与组件协同 (篇幅二)

- 一个项目代码讲清楚DO/PO/BO/AO/E/DTO/DAO/ POJO/VO

- 写代码总被Dis:5个项目案例带你掌握SOLID技巧,代码有架构风格

- 里氏替换原则在金融交易系统中的实践,再不懂你咬我

📌 突破单机瓶颈! 本文深入讲解 Spring JDBCTemplate 的高级用法,涵盖 数据库分片路由、多数据源操作、动态方言支持、性能监控、全链路追踪 等企业级场景,助你轻松应对高并发、大数据量挑战!

✨ 核心亮点:

- 🔧 分库分表实战:手把手实现订单数据分片存储与查询,解决单机性能瓶颈。

- 🌐 多数据源管理:配置主从数据源,实现数据双向同步与事务隔离。

- 📊 性能监控与优化:集成 Micrometer 实时统计 SQL 执行指标,自动捕获慢查询并告警。

- 🔍 全链路追踪:结合 OpenTelemetry 追踪数据库操作,快速定位性能瓶颈。

- 🛠 动态方言支持:一套代码兼容 MySQL、Oracle、PostgreSQL 等多种数据库分页语法。

六 高级使用案例

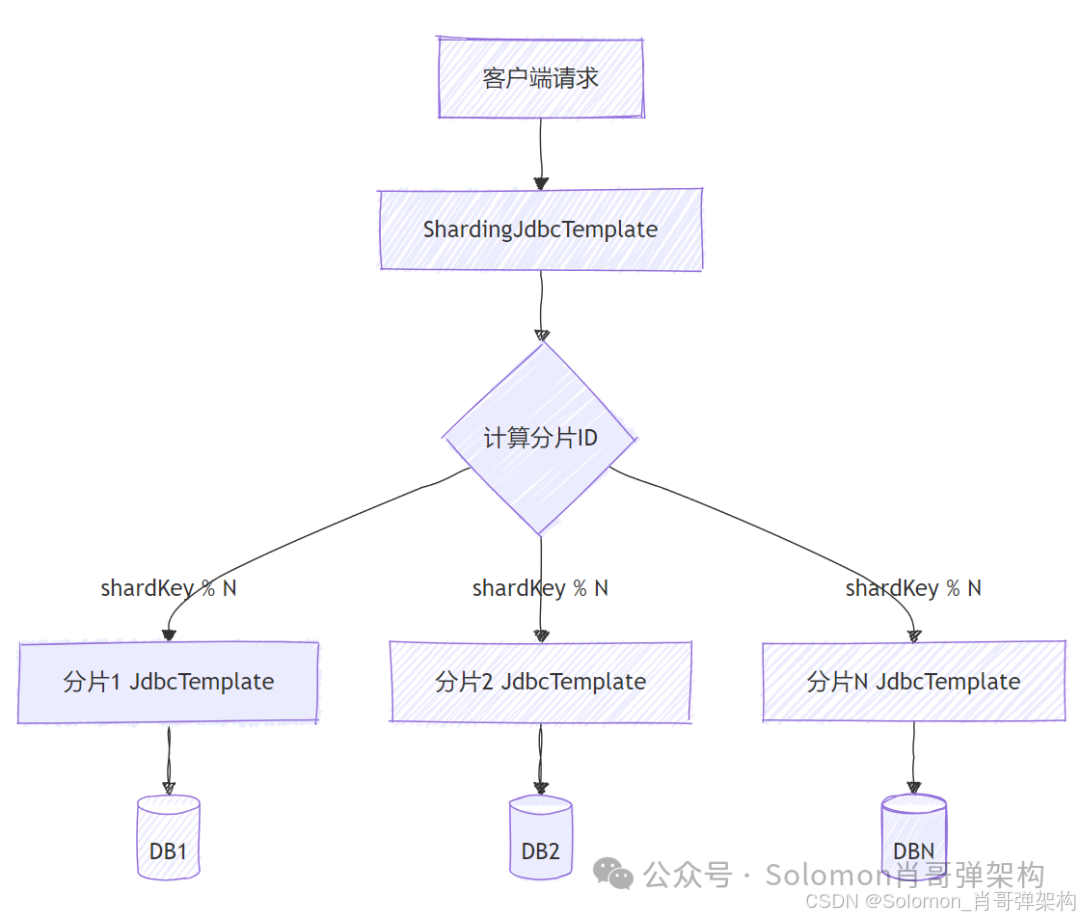

1. 数据库分片路由

数据库分片(Sharding)主要是为了解决以下几个问题:

- 性能瓶颈:单机数据库在数据量达到TB级别时性能急剧下降

- 存储限制:单机存储容量有限

- 高并发:单一数据库无法承受极高的并发请求

- 可用性:避免单点故障

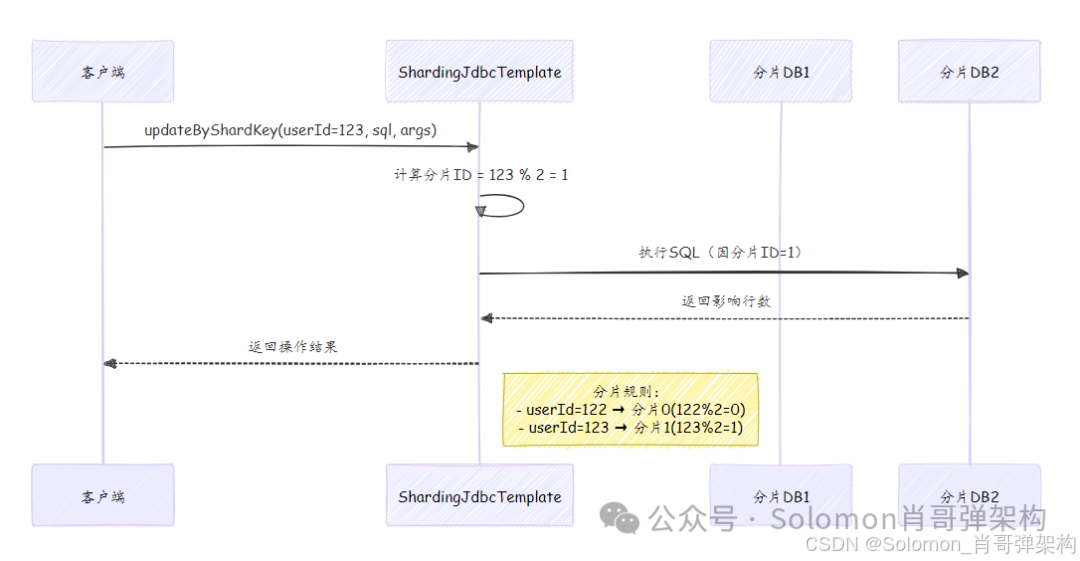

1.1. 首先定义分片核心代码

public class ShardingJdbcTemplate {

private final Map<Integer, JdbcTemplate> shards;

public ShardingJdbcTemplate(Map<Integer, DataSource> shardDataSources) {

this.shards = shardDataSources.entrySet().stream()

.collect(Collectors.toMap(

Map.Entry::getKey,

e -> new JdbcTemplate(e.getValue())

));

}

public <T> T queryByShardKey(int shardKey, String sql,

PreparedStatementSetter pss, ResultSetExtractor<T> rse) {

int shardId = shardKey % shards.size();

return shards.get(shardId).query(sql, pss, rse);

}

public int updateByShardKey(int shardKey, String sql, Object... args) {

int shardId = shardKey % shards.size();

return shards.get(shardId).update(sql, args);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

1.2. 定义分片键和分片规则

/**

* 订单分片工具类

*/

public class OrderShardingUtil {

// 分片数量(通常与数据库实例数相同)

private static final int SHARD_COUNT = 4;

/**

* 根据订单ID计算分片ID

*/

public static int getShardIdByOrderId(long orderId) {

return (int) (orderId % SHARD_COUNT);

}

/**

* 根据用户ID计算分片ID(适合用户数据均匀分布的场景)

*/

public static int getShardIdByUserId(long userId) {

return (int) (userId % SHARD_COUNT);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

1.3. 实现分片数据源配置

/**

* 分片数据源配置

*/

@Configuration

public class ShardingDataSourceConfig {

// 配置4个分片数据源(实际项目中从配置中心或环境变量获取)

@Bean

public Map<Integer, DataSource> shardDataSources() {

Map<Integer, DataSource> dataSources = new HashMap<>();

dataSources.put(0, createDataSource("jdbc:mysql://db-host-1:3306/order_db"));

dataSources.put(1, createDataSource("jdbc:mysql://db-host-2:3306/order_db"));

dataSources.put(2, createDataSource("jdbc:mysql://db-host-3:3306/order_db"));

dataSources.put(3, createDataSource("jdbc:mysql://db-host-4:3306/order_db"));

return dataSources;

}

private DataSource createDataSource(String url) {

HikariDataSource ds = new HikariDataSource();

ds.setJdbcUrl(url);

ds.setUsername("db_user");

ds.setPassword("db_password");

ds.setMaximumPoolSize(20);

return ds;

}

@Bean

public ShardingJdbcTemplate shardingJdbcTemplate(

Map<Integer, DataSource> shardDataSources) {

return new ShardingJdbcTemplate(shardDataSources);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

1.4. 增强版的分片JdbcTemplate

/**

* 增强版分片JdbcTemplate(带实际业务方法)

*/

public class ShardingOrderRepository {

private final ShardingJdbcTemplate shardingTemplate;

public ShardingOrderRepository(ShardingJdbcTemplate shardingTemplate) {

this.shardingTemplate = shardingTemplate;

}

/**

* 插入订单(自动路由到对应分片)

*/

public void insertOrder(Order order) {

int shardId = OrderShardingUtil.getShardIdByOrderId(order.getOrderId());

String sql = "INSERT INTO orders(order_id, user_id, amount, create_time) VALUES(?, ?, ?, ?)";

shardingTemplate.updateByShardKey(

shardId,

sql,

order.getOrderId(),

order.getUserId(),

order.getAmount(),

order.getCreateTime()

);

System.out.printf("订单 %d 被路由到分片 %d 存储%n", order.getOrderId(), shardId);

}

/**

* 查询订单(自动路由到对应分片)

*/

public Order findOrderById(long orderId) {

int shardId = OrderShardingUtil.getShardIdByOrderId(orderId);

String sql = "SELECT * FROM orders WHERE order_id = ?";

return shardingTemplate.queryByShardKey(

shardId,

sql,

ps -> ps.setLong(1, orderId),

rs -> {

if (rs.next()) {

Order order = new Order();

order.setOrderId(rs.getLong("order_id"));

order.setUserId(rs.getLong("user_id"));

order.setAmount(rs.getBigDecimal("amount"));

order.setCreateTime(rs.getTimestamp("create_time"));

return order;

}

return null;

}

);

}

/**

* 查询用户的所有订单(需要查询所有分片)

*/

public List<Order> findOrdersByUserId(long userId) {

List<Order> allOrders = new ArrayList<>();

// 并行查询所有分片

IntStream.range(0, shardingTemplate.getShardCount()).parallel().forEach(shardId -> {

String sql = "SELECT * FROM orders WHERE user_id = ?";

List<Order> shardOrders = shardingTemplate.queryByShardKey(

shardId,

sql,

ps -> ps.setLong(1, userId),

rs -> {

List<Order> orders = new ArrayList<>();

while (rs.next()) {

Order order = new Order();

order.setOrderId(rs.getLong("order_id"));

order.setUserId(rs.getLong("user_id"));

order.setAmount(rs.getBigDecimal("amount"));

order.setCreateTime(rs.getTimestamp("create_time"));

orders.add(order);

}

return orders;

}

);

synchronized (allOrders) {

allOrders.addAll(shardOrders);

}

});

return allOrders;

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

- 72.

- 73.

- 74.

- 75.

- 76.

- 77.

- 78.

- 79.

- 80.

- 81.

- 82.

- 83.

- 84.

- 85.

- 86.

- 87.

- 88.

- 89.

- 90.

- 91.

1.5. 实际使用

public class OrderService {

private final ShardingOrderRepository orderRepo;

// 模拟生成订单ID(实际项目中使用分布式ID生成器)

private final AtomicLong idGenerator = new AtomicLong(10000);

public OrderService(ShardingOrderRepository orderRepo) {

this.orderRepo = orderRepo;

}

public void processOrders() {

// 创建10个测试订单

for (int i = 0; i < 10; i++) {

Order order = new Order();

order.setOrderId(idGenerator.incrementAndGet()); // 生成订单ID

order.setUserId(ThreadLocalRandom.current().nextLong(1000));

order.setAmount(new BigDecimal("99.99"));

order.setCreateTime(new Timestamp(System.currentTimeMillis()));

orderRepo.insertOrder(order);

}

// 查询特定订单

long sampleOrderId = 10003L;

Order foundOrder = orderRepo.findOrderById(sampleOrderId);

System.out.println("查询到的订单: " + foundOrder);

// 查询用户的所有订单

long sampleUserId = 123L;

List<Order> userOrders = orderRepo.findOrdersByUserId(sampleUserId);

System.out.println("用户" + sampleUserId + "的所有订单: " + userOrders.size());

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

2. 数据库方言抽象层(多数据库方言支持)

/**

* 支持多数据库方言的JdbcTemplate扩展

* 主要解决不同数据库SQL语法差异问题,特别是分页查询语法

*/

public class DialectAwareJdbcTemplate extends JdbcTemplate {

private final DatabaseDialect dialect;

/**

* 构造函数

* @param dataSource 数据源

* @param dialect 数据库方言实现

*/

public DialectAwareJdbcTemplate(DataSource dataSource, DatabaseDialect dialect) {

super(dataSource);

this.dialect = dialect;

}

/**

* 获取当前数据库的分页子句

* @param limit 每页记录数

* @param offset 偏移量(从0开始)

* @return 数据库特定的分页子句

*/

public String getLimitClause(int limit, int offset) {

return dialect.getLimitClause(limit, offset);

}

/**

* 执行分页查询

* @param sql 基础SQL语句(不包含分页部分)

* @param args 查询参数

* @param rowMapper 行映射器

* @param page 页码(从1开始)

* @param size 每页大小

* @return 当前页的数据列表

*/

public <T> List<T> queryWithPagination(String sql,

Object[] args, RowMapper<T> rowMapper, int page, int size) {

// 计算实际偏移量

int offset = (page - 1) * size;

// 构建带分页的完整SQL

String paginatedSql = sql + " " + getLimitClause(size, offset);

return super.query(paginatedSql, args, rowMapper);

}

}

/**

* 数据库方言接口

* 定义不同数据库需要实现的特定语法

*/

public interface DatabaseDialect {

/**

* 生成分页限制子句

* @param limit 要返回的记录数

* @param offset 跳过的记录数

* @return 数据库特定的分页语法

*/

String getLimitClause(int limit, int offset);

/**

* 获取当前方言名称

* @return 方言名称(如MySQL、Oracle等)

*/

String getDialectName();

}

// ====================== 具体数据库方言实现 ======================

/**

* MySQL数据库方言实现

* MySQL使用 LIMIT offset, limit 语法

*/

public class MySQLDialect implements DatabaseDialect {

@Override

public String getLimitClause(int limit, int offset) {

return "LIMIT " + offset + "," + limit;

}

@Override

public String getDialectName() {

return "MySQL";

}

}

/**

* Oracle 12c及以上版本数据库方言实现

* Oracle 12c+ 使用 OFFSET-FETCH 语法

*/

public class OracleDialect implements DatabaseDialect {

@Override

public String getLimitClause(int limit, int offset) {

return "OFFSET " + offset + " ROWS FETCH NEXT " + limit + " ROWS ONLY";

}

@Override

public String getDialectName() {

return "Oracle";

}

}

/**

* PostgreSQL数据库方言实现

* PostgreSQL使用 LIMIT-OFFSET 语法

*/

public class PostgreSQLDialect implements DatabaseDialect {

@Override

public String getLimitClause(int limit, int offset) {

return "LIMIT " + limit + " OFFSET " + offset;

}

@Override

public String getDialectName() {

return "PostgreSQL";

}

}

/**

* SQL Server数据库方言实现

* SQL Server 2012+ 使用 OFFSET-FETCH 语法

*/

public class SQLServerDialect implements DatabaseDialect {

@Override

public String getLimitClause(int limit, int offset) {

return "OFFSET " + offset + " ROWS FETCH NEXT " + limit + " ROWS ONLY";

}

@Override

public String getDialectName() {

return "SQLServer";

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

- 72.

- 73.

- 74.

- 75.

- 76.

- 77.

- 78.

- 79.

- 80.

- 81.

- 82.

- 83.

- 84.

- 85.

- 86.

- 87.

- 88.

- 89.

- 90.

- 91.

- 92.

- 93.

- 94.

- 95.

- 96.

- 97.

- 98.

- 99.

- 100.

- 101.

- 102.

- 103.

- 104.

- 105.

- 106.

- 107.

- 108.

- 109.

- 110.

- 111.

- 112.

- 113.

- 114.

- 115.

- 116.

- 117.

- 118.

- 119.

- 120.

- 121.

- 122.

- 123.

- 124.

- 125.

- 126.

- 127.

- 128.

- 129.

- 130.

- 131.

- 132.

- 133.

- 134.

- 135.

- 136.

- 137.

2.1 使用示例

// 1. 配置数据源和方言

DataSource mysqlDataSource = createMySQLDataSource();

DatabaseDialect dialect = new MySQLDialect();

// 2. 创建方言感知的JdbcTemplate

DialectAwareJdbcTemplate jdbcTemplate =

new DialectAwareJdbcTemplate(mysqlDataSource, dialect);

// 3. 执行分页查询(第2页,每页10条)

List<User> users = jdbcTemplate.queryWithPagination(

"SELECT * FROM users ORDER BY create_time DESC",

new Object[]{},

new BeanPropertyRowMapper<>(User.class),

2, // 页码

10 // 每页大小

);

// 生成的SQL将是:

// SELECT * FROM users ORDER BY create_time DESC LIMIT 10,10

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

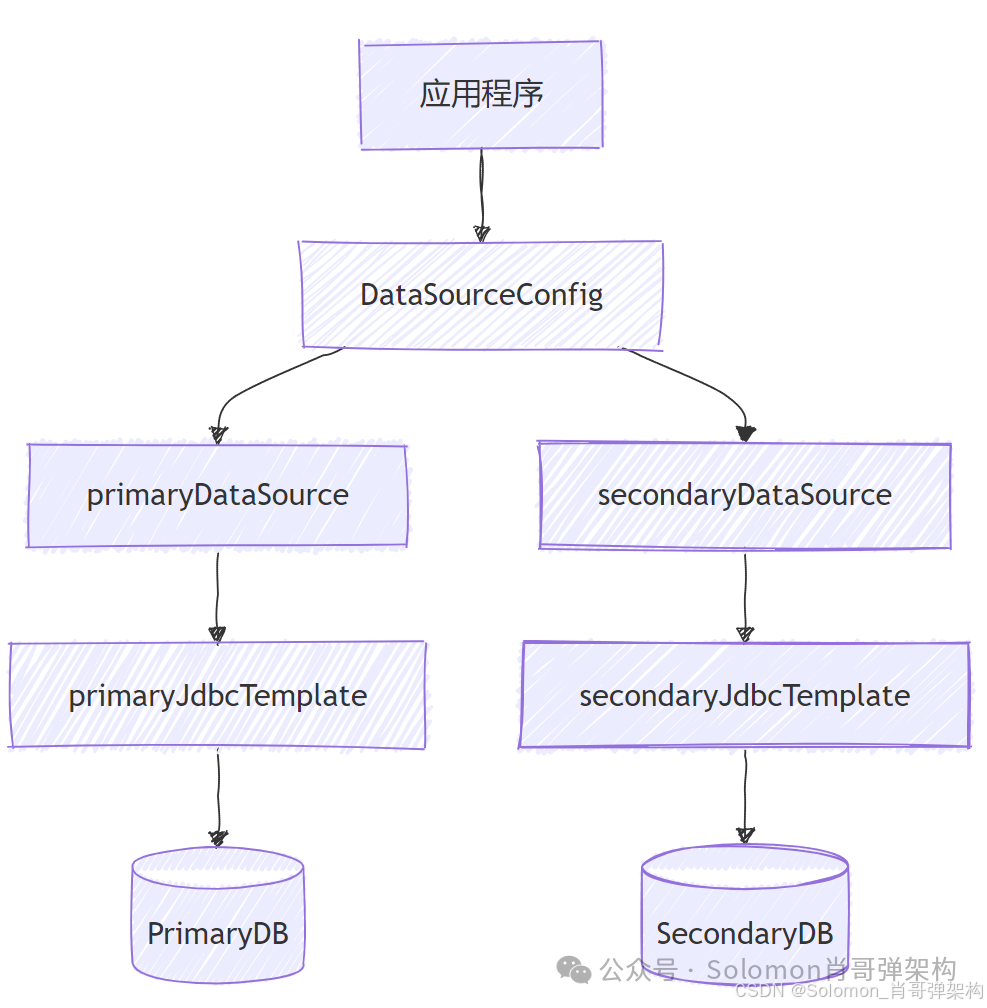

3. 多数据源操作

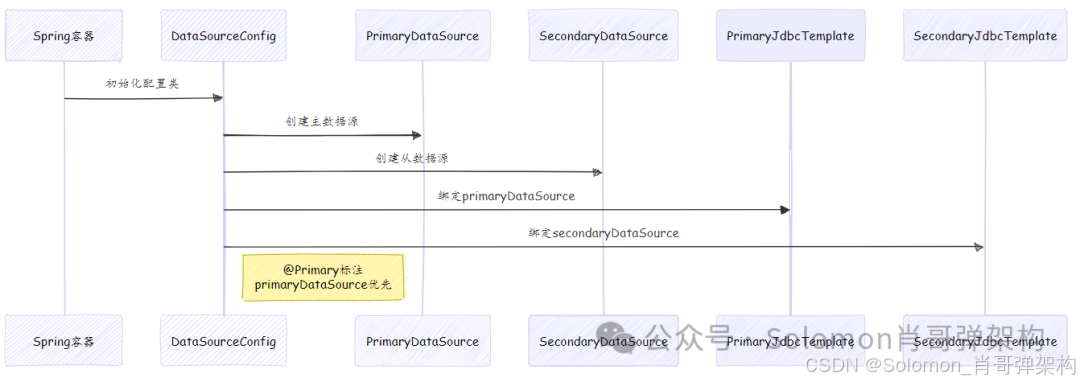

3.1. 数据源配置类

首先创建数据源配置类,配置多个数据源和对应的JdbcTemplate:

@Configuration

public class DataSourceConfig {

// 主数据源配置

@Bean(name = "primaryDataSource")

@ConfigurationProperties(prefix = "spring.datasource.primary")

@Primary // 标记为主数据源

public DataSource primaryDataSource() {

return DataSourceBuilder.create().build();

}

// 从数据源配置

@Bean(name = "secondaryDataSource")

@ConfigurationProperties(prefix = "spring.datasource.secondary")

public DataSource secondaryDataSource() {

return DataSourceBuilder.create().build();

}

// 主数据源JdbcTemplate

@Bean(name = "primaryJdbcTemplate")

public JdbcTemplate primaryJdbcTemplate(

@Qualifier("primaryDataSource") DataSource dataSource) {

return new JdbcTemplate(dataSource);

}

// 从数据源JdbcTemplate

@Bean(name = "secondaryJdbcTemplate")

public JdbcTemplate secondaryJdbcTemplate(

@Qualifier("secondaryDataSource") DataSource dataSource) {

return new JdbcTemplate(dataSource);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

配置加载序列图:

3.2. application.yml配置

spring:

datasource:

primary:

jdbc-url: jdbc:mysql://primary-db-host:3306/primary_db

username: primary_user

password: primary_pass

driver-class-name: com.mysql.cj.jdbc.Driver

hikari:

maximum-pool-size: 10

connection-timeout: 30000

secondary:

jdbc-url: jdbc:mysql://secondary-db-host:3306/secondary_db

username: secondary_user

password: secondary_pass

driver-class-name: com.mysql.cj.jdbc.Driver

hikari:

maximum-pool-size: 5

connection-timeout: 30000

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

3.3. 数据同步服务实现

@Service

public class DataSyncService {

private static final Logger logger = LoggerFactory.getLogger(DataSyncService.class);

private final JdbcTemplate primaryJdbcTemplate;

private final JdbcTemplate secondaryJdbcTemplate;

@Autowired

public DataSyncService(

@Qualifier("primaryJdbcTemplate") JdbcTemplate primaryJdbcTemplate,

@Qualifier("secondaryJdbcTemplate") JdbcTemplate secondaryJdbcTemplate) {

this.primaryJdbcTemplate = primaryJdbcTemplate;

this.secondaryJdbcTemplate = secondaryJdbcTemplate;

}

/**

* 同步用户数据从secondary到primary

*/

@Transactional(transactionManager = "primaryTransactionManager")

public void syncUsers() {

// 1. 从secondary数据源查询数据

List<User> users = secondaryJdbcTemplate.query(

"SELECT id, username, email, created_at FROM users",

new BeanPropertyRowMapper<>(User.class));

logger.info("从secondary数据库查询到{}条用户记录", users.size());

// 2. 批量插入到primary数据源

int[] results = primaryJdbcTemplate.batchUpdate(

"INSERT INTO users(id, username, email, created_at) VALUES(?, ?, ?, ?) " +

"ON DUPLICATE KEY UPDATE username=VALUES(username), email=VALUES(email)",

users.stream()

.map(user -> new Object[]{

user.getId(),

user.getUsername(),

user.getEmail(),

user.getCreatedAt()

})

.collect(Collectors.toList()));

int totalAffected = Arrays.stream(results).sum();

logger.info("成功同步{}条用户记录到primary数据库", totalAffected);

}

/**

* 双向同步确保数据一致性

*/

@Transactional

public void bidirectionalSync() {

syncFromPrimaryToSecondary();

syncFromSecondaryToPrimary();

}

/**

* 从primary同步到secondary

*/

private void syncFromPrimaryToSecondary() {

// 实现类似的同步逻辑

}

// 用户实体类

public static class User {

private Long id;

private String username;

private String email;

private Date createdAt;

// getters and setters

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

3.4. 事务管理配置

对于多数据源,需要单独配置每个数据源的事务管理器:

Spring的事务管理是基于PlatformTransactionManager接口实现的,每个事务管理器通常绑定到一个特定的数据源:

public interface PlatformTransactionManager {

TransactionStatus getTransaction(@Nullable TransactionDefinition definition)

throws TransactionException;

void commit(TransactionStatus status) throws TransactionException;

void rollback(TransactionStatus status) throws TransactionException;

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

不能共享事务管理器的原因:

- 事务资源绑定

- 每个事务管理器内部会维护自己的事务资源(通常是数据库连接):

- 事务资源与特定数据源绑定

- 无法跨数据源共享同一连接

- 不同数据库可能有不同的事务特性

- 每个事务管理器内部会维护自己的事务资源(通常是数据库连接):

@Configuration

@EnableTransactionManagement

public class TransactionConfig {

@Bean(name = "primaryTransactionManager")

@Primary

public PlatformTransactionManager primaryTransactionManager(

@Qualifier("primaryDataSource") DataSource dataSource) {

return new DataSourceTransactionManager(dataSource);

}

@Bean(name = "secondaryTransactionManager")

public PlatformTransactionManager secondaryTransactionManager(

@Qualifier("secondaryDataSource") DataSource dataSource) {

return new DataSourceTransactionManager(dataSource);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

3.5. 使用示例

@SpringBootApplication

public class MultiDataSourceApplication implements CommandLineRunner {

@Autowired

private DataSyncService dataSyncService;

public static void main(String[] args) {

SpringApplication.run(MultiDataSourceApplication.class, args);

}

@Override

public void run(String... args) throws Exception {

// 执行数据同步

dataSyncService.syncUsers();

// 更复杂的多数据源操作示例

complexMultiDataSourceOperation();

}

private void complexMultiDataSourceOperation() {

// 1. 从数据源A查询

List<DataSyncService.User> sourceUsers = dataSyncService.secondaryJdbcTemplate.query(

"SELECT * FROM users WHERE updated_at > ?",

new BeanPropertyRowMapper<>(DataSyncService.User.class),

Timestamp.valueOf(LocalDateTime.now().minusDays(1)));

// 2. 转换数据

List<Object[]> batchArgs = sourceUsers.stream()

.map(user -> new Object[]{

user.getId(),

user.getUsername(),

user.getEmail()

})

.collect(Collectors.toList());

// 3. 批量插入到数据源B

dataSyncService.primaryJdbcTemplate.batchUpdate(

"INSERT INTO users(id, username, email) VALUES(?, ?, ?) " +

"ON DUPLICATE KEY UPDATE username=VALUES(username), email=VALUES(email)",

batchArgs);

// 4. 记录同步日志到两个数据库

String syncLog = "用户数据同步完成,共同步 " + sourceUsers.size() + " 条记录";

dataSyncService.primaryJdbcTemplate.update(

"INSERT INTO sync_logs(message) VALUES(?)", syncLog);

dataSyncService.secondaryJdbcTemplate.update(

"INSERT INTO sync_logs(message) VALUES(?)", syncLog);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

七、监控\性能\追踪\审计\事务

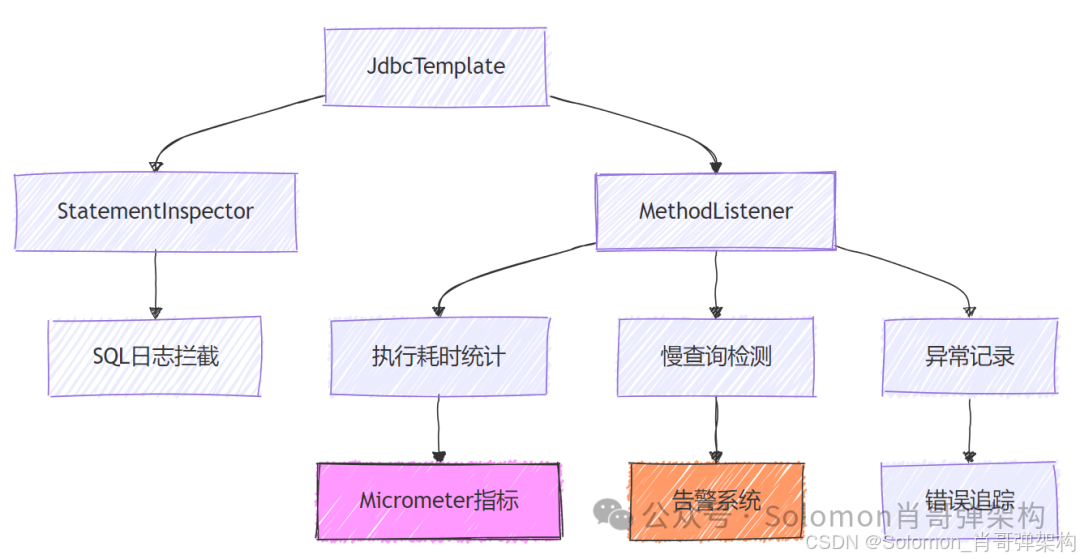

1. 监控和性能统计

1.1. 监控配置类

@Configuration

@Slf4j

public class JdbcMonitoringConfig {

// 监控统计指标

private final Counter sqlCounter = Metrics.counter("jdbc.sql.count");

private final Timer sqlTimer = Metrics.timer("jdbc.sql.time");

private final DistributionSummary sqlSizeSummary = Metrics.summary("jdbc.sql.size");

// 慢查询阈值(毫秒)

@Value("${jdbc.slow-query.threshold:500}")

private long slowQueryThreshold;

@Bean

public JdbcTemplate monitoredJdbcTemplate(DataSource dataSource) {

JdbcTemplate jdbcTemplate = new JdbcTemplate(dataSource);

// 基本配置

jdbcTemplate.setFetchSize(100);

jdbcTemplate.setQueryTimeout(30);

jdbcTemplate.setMaxRows(10000);

// 设置监控拦截器

jdbcTemplate.setStatementInspector(sql -> {

// 记录原始SQL用于调试

log.debug("Preparing SQL: {}", abbreviateSql(sql));

return sql;

});

// 添加执行监听器

jdbcTemplate.setMethodListener(new JdbcTemplate.MethodListener() {

@Override

public void methodCalled(String methodName, String sql, Object[] args, long startTime, long endTime, Throwable ex) {

long duration = endTime - startTime;

// 指标统计

sqlCounter.increment();

sqlTimer.record(duration, TimeUnit.MILLISECONDS);

if (sql != null) {

sqlSizeSummary.record(sql.length());

}

// 慢查询日志

if (duration > slowQueryThreshold) {

log.warn("Slow SQL detected - Execution time: {}ms\nSQL: {}\nParameters: {}",

duration, abbreviateSql(sql), formatArgs(args));

}

// 普通日志

if (log.isDebugEnabled()) {

log.debug("Executed SQL [{}ms]: {} - Parameters: {}",

duration, abbreviateSql(sql), formatArgs(args));

}

// 错误日志

if (ex != null) {

log.error("SQL execution failed: " + sql, ex);

}

}

});

return jdbcTemplate;

}

private String abbreviateSql(String sql) {

if (sql == null) return null;

return sql.length() > 200 ? sql.substring(0, 200) + "..." : sql;

}

private String formatArgs(Object[] args) {

if (args == null || args.length == 0) return "[]";

return Arrays.stream(args)

.map(arg -> arg != null ? arg.toString() : "NULL")

.collect(Collectors.joining(", ", "[", "]"));

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

- 72.

- 73.

- 74.

- 75.

- 76.

1.2. 监控指标暴露端点

@RestController

@RequiredArgsConstructor

public class JdbcMetricsEndpoint {

private final MeterRegistry meterRegistry;

@GetMapping("/metrics/jdbc")

public Map<String, Object> getJdbcMetrics() {

Map<String, Object> metrics = new LinkedHashMap<>();

// SQL执行次数

metrics.put("sqlCount", meterRegistry.counter("jdbc.sql.count").count());

// SQL执行时间百分位

Timer timer = meterRegistry.timer("jdbc.sql.time");

metrics.put("sqlTimeAvg", timer.mean(TimeUnit.MILLISECONDS));

metrics.put("sqlTimeMax", timer.max(TimeUnit.MILLISECONDS));

metrics.put("sqlTimeP95", timer.percentile(0.95, TimeUnit.MILLISECONDS));

// SQL语句长度

DistributionSummary sizeSummary = meterRegistry.summary("jdbc.sql.size");

metrics.put("sqlSizeAvg", sizeSummary.mean());

metrics.put("sqlSizeMax", sizeSummary.max());

return metrics;

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

1.3. 慢查询告警服务

@Service

@Slf4j

public class SlowQueryAlertService {

@Value("${jdbc.slow-query.threshold:500}")

private long slowQueryThreshold;

@Value("${jdbc.slow-query.notification.enabled:false}")

private boolean notificationEnabled;

private final List<String> slowQueryCache = new ArrayList<>();

private final AtomicLong slowQueryCount = new AtomicLong();

@Scheduled(fixedDelay = 60000) // 每分钟检查一次

public void checkSlowQueries() {

if (slowQueryCount.get() > 0) {

String message = String.format("Detected %d slow queries in last minute (threshold: %dms)",

slowQueryCount.get(), slowQueryThreshold);

log.warn(message);

if (notificationEnabled) {

sendAlertNotification(message);

}

slowQueryCount.set(0);

slowQueryCache.clear();

}

}

public void recordSlowQuery(String sql, long duration) {

slowQueryCount.incrementAndGet();

if (slowQueryCache.size() < 10) { // 最多缓存10条慢查询

slowQueryCache.add(String.format("[%dms] %s", duration, sql));

}

}

private void sendAlertNotification(String message) {

// 实现邮件、短信等告警通知

}

public List<String> getRecentSlowQueries() {

return new ArrayList<>(slowQueryCache);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

1.4. 性能优化建议配置

@Configuration

public class JdbcPerformanceConfig {

@Bean

public DataSource dataSource(

@Value("${spring.datasource.url}") String url,

@Value("${spring.datasource.username}") String username,

@Value("${spring.datasource.password}") String password) {

HikariConfig config = new HikariConfig();

config.setJdbcUrl(url);

config.setUsername(username);

config.setPassword(password);

// 性能优化配置

config.setMaximumPoolSize(20);

config.setMinimumIdle(5);

config.setConnectionTimeout(30000);

config.setIdleTimeout(600000);

config.setMaxLifetime(1800000);

config.setLeakDetectionThreshold(30000);

// 监控相关配置

config.setMetricRegistry(Metrics.globalRegistry);

config.setHealthCheckRegistry(new HealthCheckRegistry());

return new HikariDataSource(config);

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

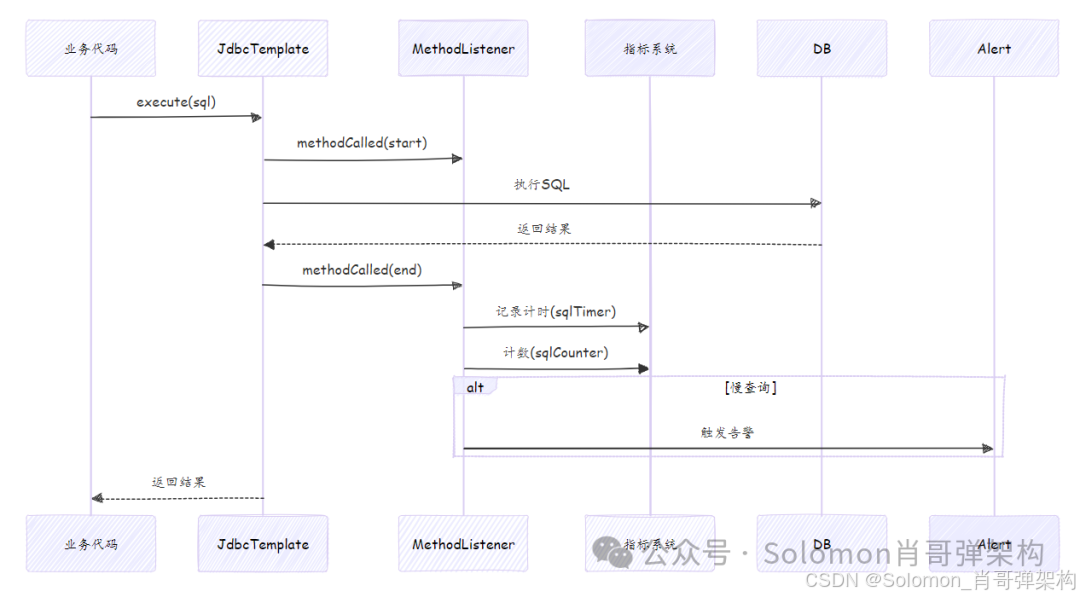

- 29.

监控流程序列图:

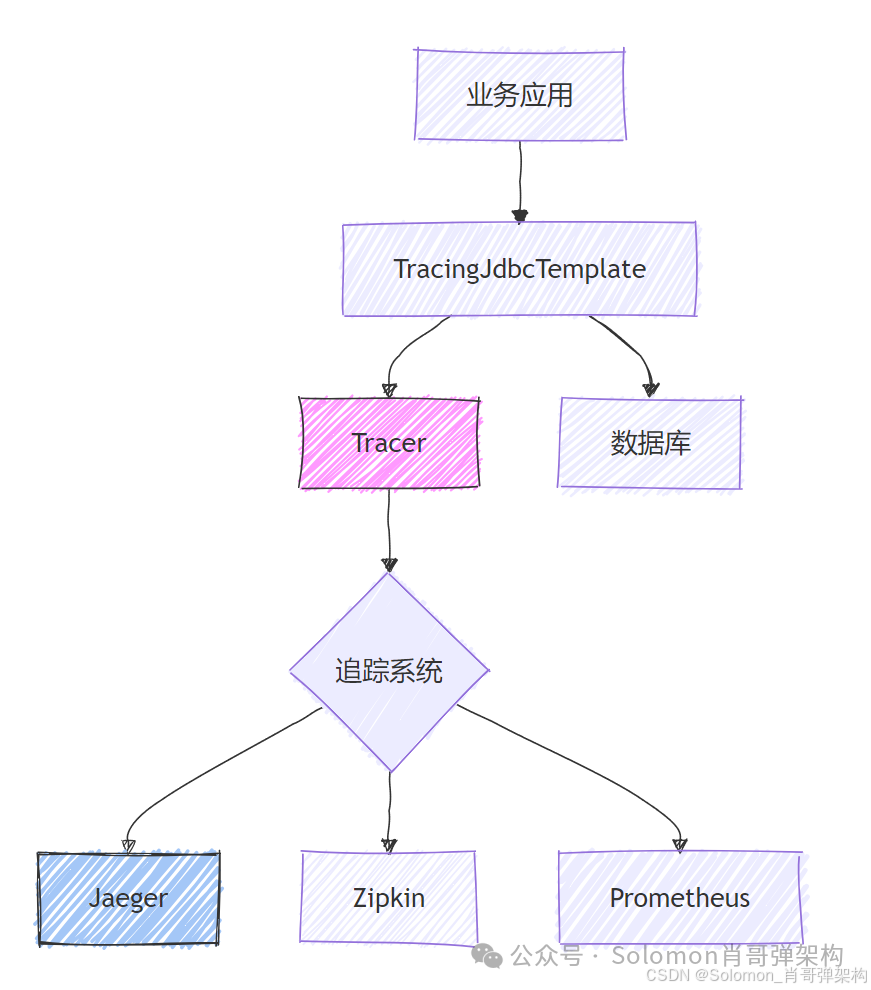

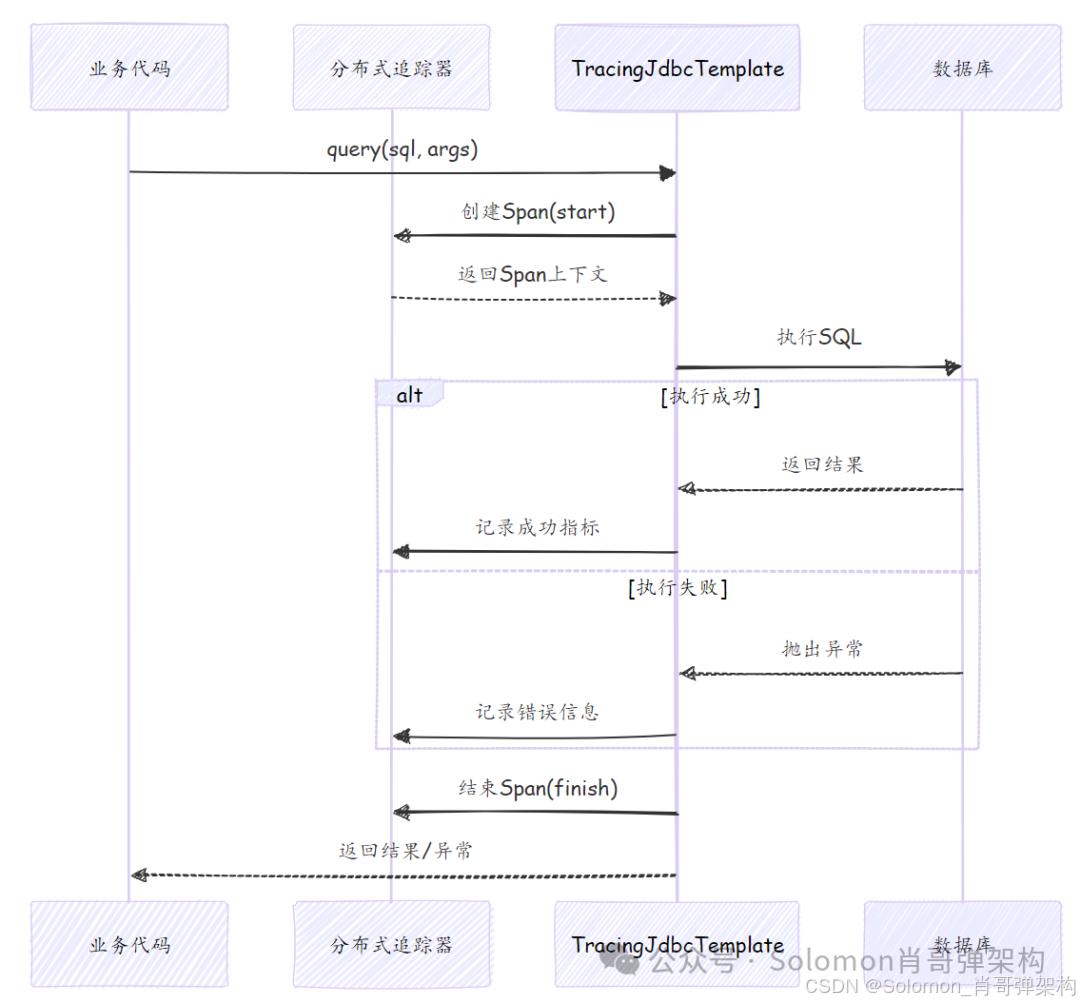

2. 数据库操作性能全链路性能追踪

2.1. 追踪实现类

/**

* 支持分布式追踪的JdbcTemplate扩展

* 集成OpenTracing/OpenTelemetry实现全链路SQL监控

*/

public class TracingJdbcTemplate extends JdbcTemplate {

private static final String DB_TYPE = "jdbc";

private final Tracer tracer;

private final boolean captureParameters;

private final int maxQueryLength;

/**

* @param dataSource 数据源

* @param tracer 分布式追踪器(OpenTracing/OpenTelemetry)

* @param captureParameters 是否捕获SQL参数

* @param maxQueryLength 记录SQL的最大长度

*/

public TracingJdbcTemplate(DataSource dataSource,

Tracer tracer,

boolean captureParameters,

int maxQueryLength) {

super(dataSource);

this.tracer = tracer;

this.captureParameters = captureParameters;

this.maxQueryLength = maxQueryLength;

// 性能优化配置

this.setFetchSize(100);

this.setQueryTimeout(30);

}

@Override

public <T> T execute(PreparedStatementCreator psc, PreparedStatementCallback<T> action) {

// 1. 创建Span

Span span = buildSpan(psc);

try (Scope scope = tracer.activateSpan(span)) {

// 2. 记录开始时间

long startTime = System.currentTimeMillis();

// 3. 执行数据库操作

T result = super.execute(psc, action);

// 4. 记录成功指标

recordSuccess(span, startTime);

return result;

} catch (Exception e) {

// 5. 记录失败信息

recordError(span, e);

throw e;

} finally {

// 6. 结束Span

span.finish();

}

}

private Span buildSpan(PreparedStatementCreator psc) {

Span span = tracer.buildSpan("jdbc.execute")

.withTag(Tags.SPAN_KIND.getKey(), Tags.SPAN_KIND_CLIENT)

.withTag(Tags.DB_TYPE.getKey(), DB_TYPE)

.start();

// 记录SQL语句

if (psc instanceof ParameterizedPreparedStatementCreator) {

String sql = ((ParameterizedPreparedStatementCreator) psc).getSql();

span.setTag(Tags.DB_STATEMENT.getKey(),

abbreviate(sql, maxQueryLength));

}

// 记录数据源信息

if (getDataSource() != null) {

try {

span.setTag("db.url", abbreviate(getDataSource().getConnection().getMetaData().getURL(), 200));

} catch (SQLException ignored) {

// 忽略获取元数据失败

}

}

return span;

}

private void recordSuccess(Span span, long startTime) {

long duration = System.currentTimeMillis() - startTime;

// 记录执行时间

span.setTag("duration.ms", duration);

// 记录为成功状态

Tags.HTTP_STATUS.set(span, 200);

// 记录自定义指标

span.log(ImmutableMap.of(

"event", "query_completed",

"status", "success",

"duration", duration

));

}

private void recordError(Span span, Exception e) {

// 标记为错误状态

Tags.ERROR.set(span, true);

// 记录异常详情

span.log(ImmutableMap.of(

"event", "error",

"error.object", e.getClass().getName(),

"message", e.getMessage(),

"stack", ExceptionUtils.getStackTrace(e)

));

// 特殊处理SQL异常

if (e instanceof SQLException) {

SQLException sqlEx = (SQLException) e;

span.setTag("db.error_code", sqlEx.getErrorCode());

span.setTag("db.sql_state", sqlEx.getSQLState());

}

}

@Override

public <T> T query(String sql, ResultSetExtractor<T> rse, Object... args) {

Span span = buildSpan(sql, args);

try (Scope scope = tracer.activateSpan(span)) {

T result = super.query(sql, rse, args);

recordSuccess(span, System.currentTimeMillis());

return result;

} catch (Exception e) {

recordError(span, e);

throw e;

} finally {

span.finish();

}

}

private Span buildSpan(String sql, Object[] args) {

Span span = tracer.buildSpan("jdbc.query")

.withTag(Tags.SPAN_KIND.getKey(), Tags.SPAN_KIND_CLIENT)

.withTag(Tags.DB_TYPE.getKey(), DB_TYPE)

.start();

// 记录SQL和参数

span.setTag(Tags.DB_STATEMENT.getKey(), abbreviate(sql, maxQueryLength));

if (captureParameters && args != null && args.length > 0) {

span.setTag("db.parameters", formatParameters(args));

}

return span;

}

private String abbreviate(String str, int maxLength) {

if (str == null) return null;

return str.length() > maxLength ? str.substring(0, maxLength) + "..." : str;

}

private String formatParameters(Object[] args) {

return Arrays.stream(args)

.map(arg -> {

if (arg == null) return "NULL";

String str = arg.toString();

return str.length() > 100 ? str.substring(0, 100) + "..." : str;

})

.collect(Collectors.joining(", ", "[", "]"));

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

- 72.

- 73.

- 74.

- 75.

- 76.

- 77.

- 78.

- 79.

- 80.

- 81.

- 82.

- 83.

- 84.

- 85.

- 86.

- 87.

- 88.

- 89.

- 90.

- 91.

- 92.

- 93.

- 94.

- 95.

- 96.

- 97.

- 98.

- 99.

- 100.

- 101.

- 102.

- 103.

- 104.

- 105.

- 106.

- 107.

- 108.

- 109.

- 110.

- 111.

- 112.

- 113.

- 114.

- 115.

- 116.

- 117.

- 118.

- 119.

- 120.

- 121.

- 122.

- 123.

- 124.

- 125.

- 126.

- 127.

- 128.

- 129.

- 130.

- 131.

- 132.

- 133.

- 134.

- 135.

- 136.

- 137.

- 138.

- 139.

- 140.

- 141.

- 142.

- 143.

- 144.

- 145.

- 146.

- 147.

- 148.

- 149.

- 150.

- 151.

- 152.

- 153.

- 154.

- 155.

- 156.

- 157.

- 158.

- 159.

- 160.

- 161.

- 162.

- 163.

- 164.

- 165.

2.2. 配置类

@Configuration

public class TracingJdbcConfig {

@Bean

public JdbcTemplate jdbcTemplate(

DataSource dataSource,

Tracer tracer,

@Value("${jdbc.tracing.capture-parameters:false}") boolean captureParameters,

@Value("${jdbc.tracing.max-query-length:500}") int maxQueryLength) {

return new TracingJdbcTemplate(

dataSource,

tracer,

captureParameters,

maxQueryLength

);

}

@Bean

public Tracer tracer() {

// 实际项目中应配置真实的Tracer实现

// 例如使用Jaeger或Zipkin的Tracer

return new NoopTracerFactory().create();

}

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

2.3. 应用配置

application.yml配置示例:

追踪流程序列图:

4. SQL 执行拦截器(SQL 审计日志)

public class AuditingJdbcTemplate extends JdbcTemplate {

private final List<JdbcExecutionListener> listeners = new ArrayList<>();

public void addListener(JdbcExecutionListener listener) {

listeners.add(listener);

}

@Override

public <T> T execute(PreparedStatementCreator psc, PreparedStatementCallback<T> action) {

long startTime = System.currentTimeMillis();

String sql = null;

try {

if (psc instanceof ParameterizedPreparedStatementCreator) {

sql = ((ParameterizedPreparedStatementCreator) psc).getSql();

}

T result = super.execute(psc, action);

long duration = System.currentTimeMillis() - startTime;

notifySuccess(sql, duration);

return result;

} catch (DataAccessException ex) {

long duration = System.currentTimeMillis() - startTime;

notifyFailure(sql, ex, duration);

throw ex;

}

}

private void notifySuccess(String sql, long duration) {

for (JdbcExecutionListener listener : listeners) {

listener.onSuccess(sql, duration);

}

}

private void notifyFailure(String sql, DataAccessException ex, long duration) {

for (JdbcExecutionListener listener : listeners) {

listener.onFailure(sql, ex, duration);

}

}

}

public interface JdbcExecutionListener {

void onSuccess(String sql, long durationMs);

void onFailure(String sql, DataAccessException ex, long durationMs);

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

5. 事务操作

- 与 Spring 事务集成

6. 分布式事务支持(Seata)

@GlobalTransactional

public void distributedOperation() {

// 操作本地数据库

jdbcTemplate.update("UPDATE account SET balance = balance - 100 WHERE user_id = 1");

// 通过 REST 调用远程服务

restTemplate.postForEntity("http://inventory-service/deduct", ...);

// 操作另一个数据库

anotherJdbcTemplate.update("UPDATE inventory SET stock = stock - 1 WHERE product_id = 100");

}

- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

1080

1080

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?