✨ Abstract

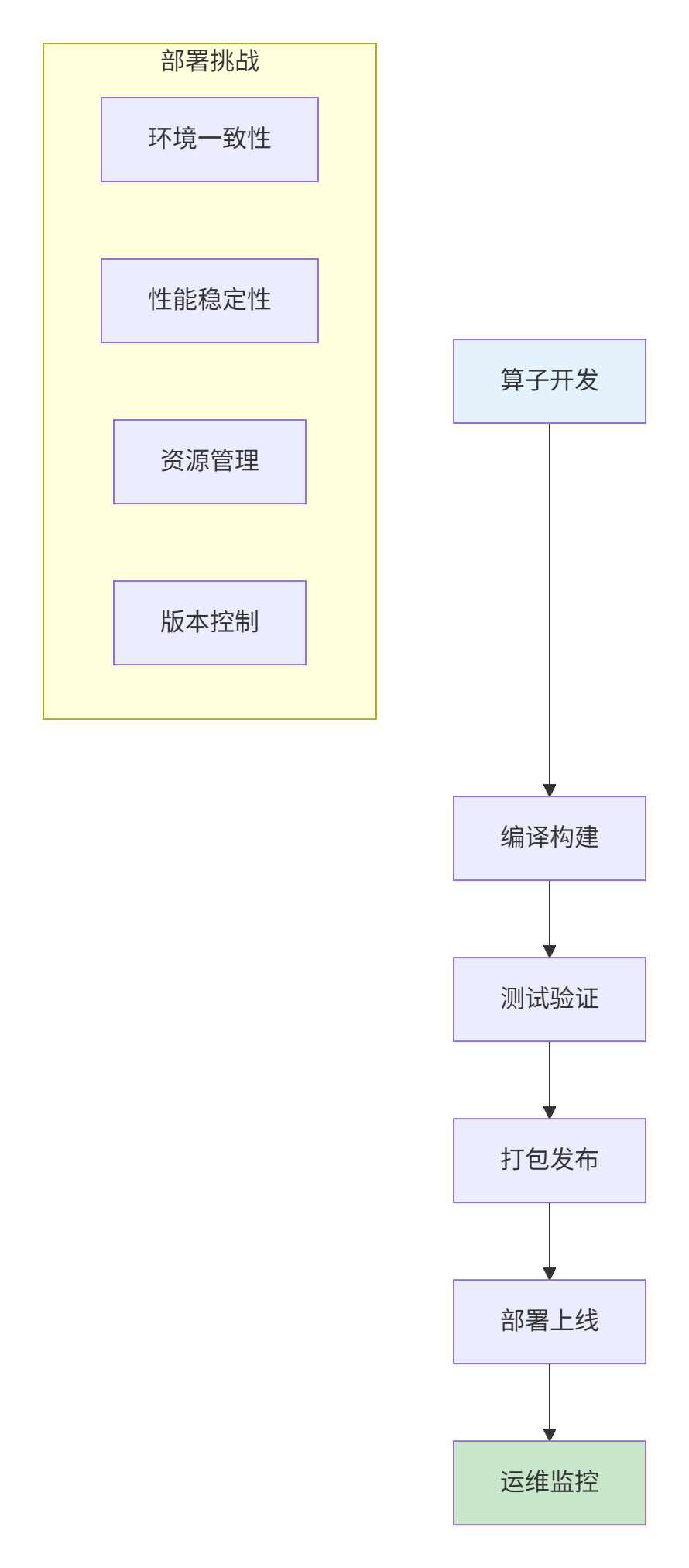

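This article walks through the systems engineering involved in taking an Ascend C operator from development to production. It covers compilation, packaging, integration, deployment, and operational monitoring, and uses toolchain design, an automated pipeline, and production-environment practices to assemble an end-to-end deployment methodology. The topics include deployment architecture, containerization, performance tuning, and monitoring and alerting.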

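🎯 Background: the systems-engineering challenge of operator deployment

Once an Ascend C operator has been developed, deploying it to production efficiently and reliably is a complex systems-engineering problem. The sections below follow the full path from development to deployment.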

⚙️ Part 1: Continuous integration and automated builds

1.1 Enterprise-grade build system design
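As a small companion to the build-system listing in this section, the following Python sketch isolates the command-assembly step: a configuration object goes in, an argument list comes out. The compiler name `ascend-cc` and its flags are copied from the listing and have not been checked against a real CANN toolchain. `BuildConfig`, `build_compile_command`, the `-funroll-loops` flag, the `ascendcl` library name, and the `add_custom.cpp` file name are hypothetical and used only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BuildConfig:
    """Hypothetical mirror of the build-config fields that shape the command."""
    target_architecture: str = "ascend910"
    enable_debug: bool = False
    optimization_flags: List[str] = field(default_factory=list)
    ascend_dependencies: List[str] = field(default_factory=list)


def build_compile_command(config: BuildConfig, source: str) -> List[str]:
    """Return the compile command as an argument list (no shell quoting needed)."""
    cmd = [
        "ascend-cc",  # compiler name as used in this article; an assumption
        "-O0" if config.enable_debug else "-O3",
        "--std=c++17",
        "-mcpu=ai-core",
        f"-march={config.target_architecture}",
    ]
    cmd += config.optimization_flags
    cmd += [f"-l{lib}" for lib in config.ascend_dependencies]
    cmd.append(source)
    return cmd


release = build_compile_command(
    BuildConfig(optimization_flags=["-funroll-loops"], ascend_dependencies=["ascendcl"]),
    "add_custom.cpp",
)
print(" ".join(release))
```

Returning a list rather than a single string keeps each flag a separate argument, so the command can be passed to a process launcher without going through a shell.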

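// build_system.h - automated build system
// Sketch: BuildResult, CompileResult, BuildError (derived from std::exception)
// and the helper functions called below (check_build_environment,
// resolve_dependencies, link_and_package, ...) are assumed to be declared
// elsewhere in the project.
#include <exception>
#include <sstream>
#include <string>
#include <unordered_map>
#include <vector>

class AscendBuildSystem {
public:
    // Public so that callers can construct the arguments of build_operator()
    struct BuildConfig {
        std::string compiler_version;
        std::string cann_version;
        std::vector<std::string> optimization_flags;
        bool enable_debug;
        bool enable_profiling;
        std::string target_architecture;
    };

    struct DependencyManager {
        std::unordered_map<std::string, std::string> library_versions;
        std::vector<std::string> system_dependencies;
        std::vector<std::string> ascend_dependencies;
    };

    // Automated build flow
    BuildResult build_operator(const std::string& source_path,
                               const BuildConfig& config) {
        BuildResult result;
        try {
            // 1. Environment check
            if (!check_build_environment(config)) {
                throw BuildError("build environment check failed");
            }
            // 2. Dependency resolution
            auto dependencies = resolve_dependencies(source_path, config);
            // 3. Optimized compilation
            auto compile_result = compile_with_optimizations(source_path, config, dependencies);
            // 4. Link and package
            result = link_and_package(compile_result, config);
            // 5. Quality validation
            if (!validate_build_quality(result)) {
                throw BuildError("build quality validation failed");
            }
        } catch (const std::exception& e) {
            result.success = false;
            result.error_message = e.what();
            log_build_error(e.what());
        }
        return result;
    }

private:
    CompileResult compile_with_optimizations(const std::string& source_path,
                                             const BuildConfig& config,
                                             const DependencyManager& deps) {
        // Generate the optimized compile command
        auto compile_command = generate_optimized_compile_command(config, deps);
        // Run the compiler
        auto compile_output = execute_compile_command(compile_command, source_path);
        // Analyze the output for further optimization opportunities
        auto optimization_suggestions = analyze_optimization_potential(compile_output);

        // Member-wise assignment: designated initializers would require C++20,
        // while the compile command below targets C++17
        CompileResult result;
        result.object_files = compile_output.object_files;
        result.optimization_suggestions = optimization_suggestions;
        result.compile_time = compile_output.elapsed_time;
        return result;
    }

    std::string generate_optimized_compile_command(const BuildConfig& config,
                                                   const DependencyManager& deps) {
        // The compiler name and flags are illustrative; check them against
        // the toolchain shipped with your CANN version
        std::ostringstream cmd;
        cmd << "ascend-cc "
            << "-O" << (config.enable_debug ? "0" : "3") << " "
            << "--std=c++17 "
            << "-mcpu=ai-core "
            // Use the configured target instead of a hard-coded ascend910
            << "-march=" << config.target_architecture << " ";
        // Append extra optimization flags
        for (const auto& flag : config.optimization_flags) {
            cmd << flag << " ";
        }
        // Append dependency libraries
        for (const auto& lib : deps.ascend_dependencies) {
            cmd << "-l" << lib << " ";
        }
        return cmd.str();
    }
};

1.2 Automated CI/CD pipeline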
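The workflow in this section relies on a build matrix of two architectures and two precisions. As a rough illustration of what the CI system does with that matrix, the Python sketch below expands it into individual jobs via a Cartesian product and prints the artifact name each job would upload. `expand_matrix` is a hypothetical helper written for this example and is not part of any CI tool.

```python
from itertools import product

# Same axes as the strategy.matrix block of the workflow
matrix = {
    "arch": ["ascend310", "ascend910"],
    "precision": ["fp16", "fp32"],
}


def expand_matrix(matrix):
    """Return one dict per job: the Cartesian product of all matrix axes."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[k] for k in keys))]


jobs = expand_matrix(matrix)
for job in jobs:
    print(f"operator-package-{job['arch']}-{job['precision']}")
```

Two axes with two values each give four jobs, so the staging deployment has four packages to choose from and should install only the one that matches its hardware.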

# .github/workflows/ascend-ci.yml
name: Ascend Operator CI/CD

on:
  push:
    branches: [ main, develop ]
  pull_request:
    branches: [ main ]

env:
  CANN_VERSION: 5.0.2
  ASCEND_COMPILER_VERSION: 1.2.0

jobs:
  build-and-test:
    runs-on: [self-hosted, ascend-env]
    strategy:
      matrix:
        arch: [ascend310, ascend910]
        precision: [fp16, fp32]
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Ascend Environment
        # Assumes a CANN setup action is available under this name;
        # otherwise replace it with your own environment setup step
        uses: ascend/setup-cann@v1
        with:
          version: ${{ env.CANN_VERSION }}
          compiler-version: ${{ env.ASCEND_COMPILER_VERSION }}

      - name: Build Operator
        run: |
          mkdir -p build && cd build
          cmake -DARCH=${{ matrix.arch }} \
                -DPRECISION=${{ matrix.precision }} \
                -DENABLE_TESTS=ON ..
          make -j$(nproc)

      - name: Run Unit Tests
        run: |
          cd build && ctest --output-on-failure

      - name: Performance Benchmark
        run: |
          python benchmarks/run_performance_tests.py \
            --arch ${{ matrix.arch }} \
            --precision ${{ matrix.precision }}

      - name: Package Artifacts
        run: |
          cd build && make package
          ls -la *.deb

      - name: Upload Artifacts
        uses: actions/upload-artifact@v4
        with:
          name: operator-package-${{ matrix.arch }}-${{ matrix.precision }}
          path: build/*.deb

  deploy-staging:
    needs: build-and-test
    runs-on: [self-hosted, ascend-staging]
    if: github.ref == 'refs/heads/main'
    steps:
      - name: Download Artifacts
        uses: actions/download-artifact@v4

      - name: Deploy to Staging
        run: |
          # Without a `name` input, each artifact is downloaded into a
          # directory named after the artifact
          sudo dpkg -i operator-package-*/*.deb
          sudo systemctl restart ascend-operator-service

🏗️ Part 2: Containerized deployment

2.1 Production-grade Docker image build

# Dockerfile.ascend
FROM ascendhub/cann:5.0.2-runtime

# Metadata
LABEL maintainer="ai-team@company.com"
LABEL version="1.0.0"
LABEL description="Ascend C Operator Runtime"

# Environment variables
ENV OPERATOR_HOME=/opt/ascend/operator
ENV LD_LIBRARY_PATH=$OPERATOR_HOME/lib:$LD_LIBRARY_PATH
ENV PATH=$OPERATOR_HOME/bin:$PATH

# Install system dependencies
RUN apt-get update && apt-get install -y --no-install-recommends \
        libssl-dev \
        libboost-all-dev \
        libgflags-dev \
    && rm -rf /var/lib/apt/lists/*

# Create a non-root user
# (the Kubernetes manifest in 2.2 runs as UID/GID 1000; keep the two consistent)
RUN groupadd -r ascend && useradd -r -g ascend ascend

# Copy the operator package
COPY --chown=ascend:ascend build/output/ $OPERATOR_HOME/

# Health check
HEALTHCHECK --interval=30s --timeout=10s --start-period=5s --retries=3 \
    CMD $OPERATOR_HOME/bin/health_check

# Run as the non-root user
USER ascend
WORKDIR $OPERATOR_HOME

# Expose the service and metrics ports
EXPOSE 8080 9090

# Start command
CMD ["/opt/ascend/operator/bin/operator_service"]

2.2 Kubernetes deployment configuration
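The manifest in this section configures a liveness probe (initial delay 30 s, period 10 s, timeout 5 s) and a readiness probe (initial delay 5 s, period 5 s). The sketch below estimates how long a container whose probe never succeeds keeps running before the probe is declared failed. It assumes the Kubernetes defaults of `failureThreshold: 3` and `timeoutSeconds: 1` where the manifest does not set them, and it ignores kubelet scheduling jitter, so treat the numbers as approximations. `seconds_until_probe_failure` is a hypothetical helper.

```python
def seconds_until_probe_failure(initial_delay, period, timeout=1, failure_threshold=3):
    """Approximate time from container start until the probe is declared failed.

    The first probe starts after `initial_delay`, later probes follow every
    `period` seconds, and the last of `failure_threshold` consecutive failures
    may take up to `timeout` seconds to be reported.
    """
    last_probe_start = initial_delay + (failure_threshold - 1) * period
    return last_probe_start + timeout


# Values taken from the manifest; unset fields fall back to the defaults above
liveness = seconds_until_probe_failure(initial_delay=30, period=10, timeout=5)
readiness = seconds_until_probe_failure(initial_delay=5, period=5)

print(f"liveness failure after about {liveness}s")
print(f"readiness failure after about {readiness}s")
```

A container that hangs at startup would therefore be restarted after roughly a minute, which is worth comparing against the time the operator needs to load its models.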

# k8s/operator-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ascend-operator
  namespace: ai-inference
  labels:
    app: ascend-operator
    version: v1.0.0
spec:
  replicas: 3
  selector:
    matchLabels:
      app: ascend-operator
  template:
    metadata:
      labels:
        app: ascend-operator
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9090"
        prometheus.io/path: "/metrics"
    spec:
      # Node selection: schedule only onto nodes with Ascend devices
      nodeSelector:
        ascend.ai/hardware: "910"
      tolerations:
        - key: "ascend.ai/hardware"
          operator: "Equal"
          value: "910"
          effect: "NoSchedule"
      containers:
        - name: operator-runtime
          image: registry.company.com/ascend-operator:v1.0.0
          imagePullPolicy: IfNotPresent
          # Resource limits
          # (the NPU resource name depends on the device plugin installed in the cluster)
          resources:
            limits:
              ascend.ai/npu: 1
              memory: "4Gi"
              cpu: "2"
            requests:
              ascend.ai/npu: 1
              memory: "2Gi"
              cpu: "1"
          # Environment variables
          env:
            - name: OPERATOR_CONFIG_PATH
              value: "/etc/ascend/operator/config.yaml"
            - name: LOG_LEVEL
              value: "INFO"
            - name: MODEL_CACHE_SIZE
              value: "1024"
          # Health checks
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
          readinessProbe:
            httpGet:
              path: /ready
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
          # Volume mounts
          volumeMounts:
            - name: config-volume
              mountPath: /etc/ascend/operator
            - name: model-volume
              mountPath: /var/lib/ascend/models
            - name: log-volume
              mountPath: /var/log/ascend
          # Security context
          securityContext:
            runAsUser: 1000
            runAsGroup: 1000
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            capabilities:
              drop:
                - ALL
          # Ports
          ports:
            - containerPort: 8080
              name: http
            - containerPort: 9090
              name: metrics
      volumes:
        - name: config-volume
          configMap:
            name: operator-config
        - name: model-volume
          persistentVolumeClaim:
            claimName: model-pvc
        - name: log-volume
          emptyDir: {}
      # Affinity: prefer spreading replicas across nodes
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - ascend-operator
                topologyKey: kubernetes.io/hostname

🔧 Part 3: Configuration management and service discovery

3.1 Dynamic configuration management
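A hot update is only useful if the manager can tell which settings actually changed, and that requires reading the old value before the cache is overwritten. The Python sketch below shows that ordering in isolation: snapshot the old entry and store the new one under a lock, then compute the changed fields outside the lock. `PerformanceConfig`, `ConfigCache`, the default values, and the `add_custom` key are hypothetical names chosen for this illustration.

```python
import threading
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class PerformanceConfig:
    """Hypothetical subset of the runtime configuration."""
    batch_size: int = 16
    thread_count: int = 4


class ConfigCache:
    def __init__(self):
        self._lock = threading.Lock()
        self._cache = {}

    def update(self, key, new_config):
        """Store new_config and return the names of the fields that changed."""
        with self._lock:
            old_config = self._cache.get(key)  # snapshot before overwriting
            self._cache[key] = new_config
        if old_config is None:
            # First configuration for this key: every field counts as changed
            return [f.name for f in fields(new_config)]
        return [
            f.name
            for f in fields(new_config)
            if getattr(old_config, f.name) != getattr(new_config, f.name)
        ]


cache = ConfigCache()
first = cache.update("add_custom", PerformanceConfig())
second = cache.update("add_custom", PerformanceConfig(batch_size=32))
print(first)
print(second)
```

Comparing against the cache after it has already been overwritten would always report no change, which is why the snapshot is taken inside the same critical section as the write.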

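// config_manager.h - configuration management
// Sketch: ConfigSource, ConfigUpdate and the helper functions called below
// (parse_config_update, validate_config, adjust_batch_size, ...) are assumed
// to be declared elsewhere in the project.
#include <cstddef>
#include <memory>
#include <mutex>
#include <string>
#include <unordered_map>
#include <vector>

class OperatorConfigManager {
public:
    // Public so that callers can pass a RuntimeConfig to update_runtime_config()
    struct RuntimeConfig {
        // Performance settings
        struct PerformanceConfig {
            int batch_size;
            int thread_count;
            bool enable_async;
            int stream_priority;
            size_t memory_pool_size;
        };
        // Monitoring settings
        struct MonitoringConfig {
            bool enable_metrics;
            int metrics_port;
            std::string log_level;
            std::vector<std::string> custom_metrics;
        };
        // Feature settings
        struct FeatureConfig {
            bool enable_precision_mode;
            bool enable_benchmark_mode;
            std::string fallback_strategy;
        };

        // Member objects; the original declared only the nested types
        PerformanceConfig performance;
        MonitoringConfig monitoring;
        FeatureConfig features;
    };

    // Watch the configuration source and apply updates as they arrive
    void watch_config_updates() {
        config_source_->watch([this](const ConfigUpdate& update) {
            std::lock_guard<std::mutex> lock(config_mutex_);
            auto new_config = parse_config_update(update);
            if (validate_config(new_config)) {
                apply_config_update(new_config);
                notify_config_change(new_config);
            }
        });
    }

    // Hot-update one cached configuration entry
    bool update_runtime_config(const std::string& key,
                               const RuntimeConfig& new_config) {
        if (!validate_config(new_config)) {
            return false;
        }
        RuntimeConfig old_config;
        {
            std::lock_guard<std::mutex> lock(config_mutex_);
            old_config = config_cache_[key];  // snapshot before overwriting
            config_cache_[key] = new_config;
        }
        // Apply the differences outside the lock
        apply_config_changes(key, old_config, new_config);
        return true;
    }

private:
    // Apply only the settings that differ between the old and new configuration
    void apply_config_changes(const std::string& key,
                              const RuntimeConfig& old_config,
                              const RuntimeConfig& new_config) {
        // Batch size changed
        if (new_config.performance.batch_size != old_config.performance.batch_size) {
            adjust_batch_size(new_config.performance.batch_size);
        }
        // Thread count changed
        if (new_config.performance.thread_count != old_config.performance.thread_count) {
            adjust_thread_pool(new_config.performance.thread_count);
        }
        // Record the configuration change
        log_config_change(key, new_config);
    }

    std::shared_ptr<ConfigSource> config_source_;
    std::unordered_map<std::string, RuntimeConfig> config_cache_;
    std::mutex config_mutex_;
};

3.2 Service registration and discovery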
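The registry in this section names `round_robin` as its load-balancing policy and attaches health checks to each instance. A minimal sketch of how a client might combine the two is shown below: filter out unhealthy instances, then rotate through the rest. `RoundRobinBalancer`, the `operator-2` and `operator-3` entries, and the `down` set standing in for real health-check results are assumptions made for this example.

```python
from itertools import count


class RoundRobinBalancer:
    """Rotate over the instances that currently pass the health predicate."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._counter = count()

    def pick(self, is_healthy):
        healthy = [inst for inst in self._instances if is_healthy(inst)]
        if not healthy:
            raise RuntimeError("no healthy instance available")
        return healthy[next(self._counter) % len(healthy)]


instances = [
    {"id": "operator-1", "address": "10.0.1.10", "port": 8080},
    {"id": "operator-2", "address": "10.0.1.11", "port": 8080},  # hypothetical
    {"id": "operator-3", "address": "10.0.1.12", "port": 8080},  # hypothetical
]
down = {"operator-2"}  # stand-in for failed HTTP health checks

balancer = RoundRobinBalancer(instances)
picked = [balancer.pick(lambda inst: inst["id"] not in down)["id"] for _ in range(4)]
print(picked)
```

Filtering on every call keeps the sketch short; a production client would more likely cache health state and refresh it on the configured check interval.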

# config/service-discovery.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: operator-service-discovery
  namespace: ai-inference
data:
  service-registry.json: |
    {
      "services": {
        "ascend-operator": {
          "instances": [
            {
              "id": "operator-1",
              "address": "10.0.1.10",
              "port": 8080,
              "tags": ["ascend910", "fp16", "high-memory"],
              "meta": {
                "version": "1.0.0",
                "region": "us-west-1",
                "az": "us-west-1a"
              },
              "checks": [
                {
                  "http": "http://10.0.1.10:8080/health",
                  "interval": "10s",
                  "timeout": "1s"
                }
              ]
            }
          ],
          "load_balancing": {
            "policy": "round_robin",
            "health_check": {
              "interval": "30s",
              "timeout": "5s"
            }
          }
        }
      }
    }

📊 Part 4: Monitoring and observability

4.1 Multi-level monitoring
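Latency percentiles (P50, P95, P99) are the core of the metrics in this section. The sketch below shows one common definition, the nearest-rank method, on a small sample that contains two slow outliers. The sample values are made up, and `percentile` is a hypothetical stand-in for whatever percentile helper the monitoring system actually uses, which may interpolate instead.

```python
import math


def percentile(sorted_values, q):
    """Nearest-rank percentile: the smallest sample with at least a fraction q
    of all samples at or below it. Expects the input to be sorted ascending."""
    if not sorted_values:
        raise ValueError("no samples")
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    rank = math.ceil(q * len(sorted_values))  # 1-based rank
    return sorted_values[rank - 1]


# Ten made-up latency samples in milliseconds, two of them slow outliers
latencies_ms = sorted([12, 15, 11, 14, 13, 90, 16, 12, 13, 250])

p50 = percentile(latencies_ms, 0.50)
p95 = percentile(latencies_ms, 0.95)
p99 = percentile(latencies_ms, 0.99)
print(p50, p95, p99)
```

With only ten samples, P95 and P99 both land on the slowest request, which is a reminder that tail percentiles need a reasonably large window before they are stable enough to alert on.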

// monitoring_system.h - monitoring system
// Sketch: MetricSample, AlertManager, the metrics collector and the helper
// functions called below are assumed to be declared elsewhere in the project.
// The designated initializers in setup_alert_rules() require C++20.
#include <algorithm>
#include <cstdint>
#include <vector>

class OperatorMonitoringSystem {
public:
    struct PerformanceMetrics {
        // Basic performance metrics
        double qps;                    // queries per second
        double latency_p50;            // P50 latency
        double latency_p95;            // P95 latency
        double latency_p99;            // P99 latency
        // Resource usage metrics
        double npu_utilization;        // NPU utilization
        double memory_utilization;     // memory utilization
        double power_consumption;      // power consumption
        // Business metrics
        uint64_t total_requests;       // total requests
        uint64_t successful_requests;  // successful requests
        uint64_t failed_requests;      // failed requests
    };

    // Register real-time metric collectors
    void collect_realtime_metrics() {
        auto metrics_collector = create_metrics_collector();
        // Performance metrics
        metrics_collector->add_gauge("operator.qps",
            [this]() { return calculate_current_qps(); });
        metrics_collector->add_histogram("operator.latency",
            [this]() { return collect_latency_histogram(); });
        // Resource metrics
        metrics_collector->add_gauge("npu.utilization",
            [this]() { return get_npu_utilization(); });
        metrics_collector->add_gauge("memory.usage",
            [this]() { return get_memory_usage(); });
    }

    // Alert rule management
    void setup_alert_rules() {
        // `for` is a C++ keyword and cannot be a member name, so the hold
        // duration is stored in `for_duration`
        AlertManager::add_rule({
            .name = "high_latency_alert",
            .expr = "operator_latency_p99 > 100",
            .for_duration = "2m",
            .labels = {{"severity", "warning"}},
            .annotations = {{"summary", "P99 latency too high"}}
        });
        AlertManager::add_rule({
            .name = "npu_over_utilization",
            .expr = "npu_utilization > 0.9",
            .for_duration = "1m",
            .labels = {{"severity", "critical"}},
            .annotations = {{"summary", "NPU utilization too high"}}
        });
    }

private:
    // Aggregate raw samples into performance metrics
    PerformanceMetrics aggregate_metrics(const std::vector<MetricSample>& samples) {
        PerformanceMetrics metrics{};  // zero-initialize fields not set below
        // Compute latency percentiles
        auto latencies = extract_latencies(samples);
        std::sort(latencies.begin(), latencies.end());
        metrics.latency_p50 = calculate_percentile(latencies, 0.5);
        metrics.latency_p95 = calculate_percentile(latencies, 0.95);
        metrics.latency_p99 = calculate_percentile(latencies, 0.99);
        // Compute QPS
        metrics.qps = calculate_qps(samples);
        return metrics;
    }
};

4.2 Grafana monitoring dashboard

{
  "dashboard": {
    "title": "Ascend Operator Monitoring Dashboard",
    "panels": [
      {
        "title": "QPS",
        "type": "graph",
        "targets": [
          {
            "expr": "rate(operator_requests_total[5m])",
            "legendFormat": "{{instance}} QPS"
          }
        ],
        "yaxes": {
          "format": "reqps"
        }
      },
      {
        "title": "Latency distribution",
        "type": "heatmap",
        "targets": [
          {
            "expr": "histogram_quantile(0.95, sum by (le) (rate(operator_latency_seconds_bucket[5m])))",
            "legendFormat": "P95 latency"
          }
        ]
      },
      {
        "title": "NPU utilization",
        "type": "gauge",
        "targets": [
          {
            "expr": "npu_utilization",
            "legendFormat": "Utilization"
          }
        ],
        "thresholds": {
          "steps": [
            {"value": 0, "color": "green"},
            {"value": 0.8, "color": "yellow"},
            {"value": 0.9, "color": "red"}
          ]
        }
      }
    ],
    "refresh": "10s"
  }
}

🛡️ Part 5: Security and compliance

5.1 Security hardening configuration

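// security_hardener.h - security hardening
// Sketch: the setup_* helpers, AuditConfig and the seccomp BPF program are
// assumed to be defined elsewhere in the project.
#include <linux/filter.h>   // sock_fprog
#include <linux/seccomp.h>  // SECCOMP_MODE_FILTER
#include <sys/prctl.h>
#include <sys/resource.h>
#include <stdexcept>
#include <string>
#include <vector>

class OperatorSecurityHardener {
public:
    struct SecurityConfig {
        // Network security
        bool enable_tls;
        std::string certificate_path;
        std::string private_key_path;
        // Access control
        bool enable_authentication;
        std::string jwt_secret;  // load from a secret store, never from source control
        std::vector<std::string> allowed_origins;
        // Data security
        bool enable_encryption;
        std::string encryption_key;
        bool enable_audit_log;
    };

    // Apply the security configuration
    void harden_operator_runtime(const SecurityConfig& config) {
        // 1. Network security
        if (config.enable_tls) {
            setup_tls_encryption(config.certificate_path, config.private_key_path);
        }
        // 2. Access control
        if (config.enable_authentication) {
            setup_jwt_authentication(config.jwt_secret);
            setup_cors_policy(config.allowed_origins);
        }
        // 3. Data security
        if (config.enable_encryption) {
            setup_data_encryption(config.encryption_key);
        }
        // 4. Audit logging
        if (config.enable_audit_log) {
            setup_audit_logging();
        }
        // 5. System-level hardening
        apply_system_hardening();
    }

private:
    void apply_system_hardening() {
        // Prevent this process and its children from gaining new privileges
        // (also required before an unprivileged process installs a seccomp filter)
        if (prctl(PR_SET_NO_NEW_PRIVS, 1, 0, 0, 0) != 0) {
            throw std::runtime_error("PR_SET_NO_NEW_PRIVS failed");
        }
        // Limit the number of open file descriptors
        rlimit rlim{};
        rlim.rlim_cur = 1024;
        rlim.rlim_max = 1024;
        if (setrlimit(RLIMIT_NOFILE, &rlim) != 0) {
            throw std::runtime_error("setrlimit(RLIMIT_NOFILE) failed");
        }
        // Install the seccomp filter last so that it cannot block the calls above
        if (prctl(PR_SET_SECCOMP, SECCOMP_MODE_FILTER, &seccomp_filter_) != 0) {
            throw std::runtime_error("PR_SET_SECCOMP failed");
        }
    }

    void setup_audit_logging() {
        // Configure the audit log
        AuditConfig config;
        config.enable = true;
        config.log_file = "/var/log/ascend/audit.log";
        config.max_size = 100 * 1024 * 1024;  // 100 MB
        config.retention_days = 30;
        setup_audit_system(config);
    }

    sock_fprog seccomp_filter_{};  // BPF program, assumed to be populated elsewhere
};

🔄 Part 6: Version management and rollback strategy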

6.1 Intelligent version management

# helm/operator/values.yaml
replicaCount: 3

image:
  repository: registry.company.com/ascend-operator
  tag: "v1.0.0"
  pullPolicy: IfNotPresent

# Rolling update settings
updateStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: 1
    maxUnavailable: 0

# Version management
versioning:
  autoRollback: true
  rollbackWindow: "5m"
  versionCheck: true

# Canary release settings
canary:
  enabled: false
  percentage: 10
  duration: "1h"

# Mapping from operator version to platform versions
resourceMapping:
  v1.0.0:
    cannVersion: "5.0.2"
    modelFormat: "v3"
    apiVersion: "v1"

6.2 Automated rollback mechanism
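The rollback decision in this section reduces to comparing a handful of metrics against thresholds. The sketch below isolates that comparison and returns the names of the breached checks rather than a bare boolean, which makes the reason for a rollback easy to log. The threshold values, the sample metrics, and the `breached_checks` helper are illustrative assumptions; only the four metric names come from the listing.

```python
from dataclasses import dataclass


@dataclass
class DeploymentMetrics:
    error_rate: float       # fraction of failed requests
    p95_latency: float      # milliseconds
    cpu_usage: float        # fraction of the CPU limit
    npu_utilization: float  # fraction of NPU capacity


# Illustrative thresholds; real values should come from load-test results
THRESHOLDS = {
    "error_rate": 0.05,
    "p95_latency": 100.0,
    "cpu_usage": 0.90,
    "npu_utilization": 0.95,
}


def breached_checks(metrics, thresholds=THRESHOLDS):
    """Return the names of all metrics that exceed their threshold."""
    return [name for name, limit in thresholds.items() if getattr(metrics, name) > limit]


healthy = DeploymentMetrics(error_rate=0.01, p95_latency=40.0, cpu_usage=0.55, npu_utilization=0.70)
degraded = DeploymentMetrics(error_rate=0.08, p95_latency=140.0, cpu_usage=0.60, npu_utilization=0.72)

print(breached_checks(healthy))
print(breached_checks(degraded))
```

A rollback is warranted when the returned list is non-empty, which is equivalent to combining the individual checks with `any`.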

# rollback_manager.py - rollback management
# Sketch: the Kubernetes and Prometheus client wrappers, DeploymentMetrics and
# the helper methods called below (load_rollback_thresholds,
# get_deployment_metrics, ...) are assumed to be provided elsewhere in the project.
import logging
import time


class RollbackManager:
    def __init__(self, k8s_client, prometheus_client):
        self.k8s = k8s_client
        self.prometheus = prometheus_client
        self.rollback_thresholds = self.load_rollback_thresholds()

    def monitor_deployment_health(self, deployment_name, namespace, window_seconds=300):
        """Monitor deployment health for the rollback window (5 minutes by default)."""
        deadline = time.monotonic() + window_seconds
        while time.monotonic() < deadline:
            try:
                # Fetch application metrics
                metrics = self.get_deployment_metrics(deployment_name, namespace)
                # Decide whether a rollback is needed
                if self.should_rollback(metrics):
                    self.execute_rollback(deployment_name, namespace)
                    break
                time.sleep(30)  # check every 30 seconds
            except Exception as e:
                logging.error(f"health check failed: {e}")
                # Roll back conservatively when monitoring itself fails
                self.execute_rollback(deployment_name, namespace)
                break

    def should_rollback(self, metrics: "DeploymentMetrics") -> bool:
        """Return True if any metric exceeds its rollback threshold."""
        checks = [
            # Error rate
            metrics.error_rate > self.rollback_thresholds['max_error_rate'],
            # Latency
            metrics.p95_latency > self.rollback_thresholds['max_latency'],
            # CPU usage
            metrics.cpu_usage > self.rollback_thresholds['max_cpu_usage'],
            # NPU utilization
            metrics.npu_utilization > self.rollback_thresholds['max_npu_usage'],
        ]
        return any(checks)

    def execute_rollback(self, deployment_name: str, namespace: str):
        """Roll the deployment back to the previous stable version."""
        logging.info(f"starting rollback of deployment {deployment_name}")
        try:
            # 1. Look up the previous stable version
            previous_version = self.get_previous_stable_version(deployment_name)
            # 2. Perform the rollback
            self.k8s.rollback_deployment(
                name=deployment_name,
                namespace=namespace,
                revision=previous_version,
            )
            # 3. Verify the result
            if self.verify_rollback_success(deployment_name, namespace):
                logging.info("rollback succeeded")
                self.notify_rollback_success(deployment_name, previous_version)
            else:
                logging.error("rollback verification failed")
                self.notify_rollback_failure(deployment_name)
        except Exception as e:
            logging.error(f"rollback failed: {e}")
            self.notify_rollback_failure(deployment_name)

📈 Part 7: Performance tuning and capacity planning

7.1 Production performance tuning
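The capacity-planning values in this section (1000 requests per second, 3 replicas) follow from a simple division once the throughput of a single replica is known. The sketch below makes that arithmetic explicit. The figure of 400 requests per second per replica is an assumed benchmark result chosen so that the numbers line up, and `required_replicas` and the `headroom` parameter are hypothetical.

```python
import math


def required_replicas(target_rps, per_replica_rps, headroom=0.0,
                      min_replicas=1, max_replicas=10):
    """Replicas needed to serve target_rps, with optional fractional headroom,
    clamped to the autoscaling bounds."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    needed = math.ceil(target_rps * (1 + headroom) / per_replica_rps)
    return max(min_replicas, min(max_replicas, needed))


# Assumption: one replica sustains 400 requests/s in the performance benchmark
baseline = required_replicas(target_rps=1000, per_replica_rps=400)
with_headroom = required_replicas(target_rps=1000, per_replica_rps=400, headroom=0.3)

print(baseline)
print(with_headroom)
```

Rounding up rather than to the nearest integer matters here: 2.5 replicas of demand needs 3 replicas, and adding 30% headroom for traffic spikes pushes the requirement to 4.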

# tuning/production-tuning.yaml
performance:
  # Batching
  batching:
    enabled: true
    max_batch_size: 32
    timeout_ms: 10
    preferred_batch_size: 16
  # Memory
  memory:
    pool_size: "2Gi"
    allocation_strategy: "pooled"
    enable_memory_reuse: true
  # Computation
  computation:
    stream_priority: "high"
    enable_async_execution: true
    computation_hints: "high_throughput"

# Resource planning
capacity_planning:
  # Capacity plan derived from performance test results
  requests_per_second: 1000
  required_replicas: 3
  resource_requirements:
    npu: 1
    cpu: "2"
    memory: "4Gi"
  # Autoscaling
  autoscaling:
    enabled: true
    min_replicas: 1
    max_replicas: 10
    target_cpu_utilization: 70
    target_memory_utilization: 80

With the deployment system described in this article, we have built a full-chain operator deployment solution that runs from code to production and gives Ascend C operators a dependable engineering basis for industrial use.


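🚀 Official introduction

About the Ascend training camp: the second season of the 2025 Ascend CANN Training Camp builds on CANN's open-source, all-scenario platform and offers themed courses, including a beginner series that starts from zero, an intensive coding special, and developer case studies, to help developers at every stage quickly improve their operator development skills. Earn the Ascend C operator intermediate certification to receive a certificate, and complete community tasks for a chance to win Huawei phones, tablets, development boards, and other prizes.

Registration link: https://www.hiascend.com/developer/activities/cann20252#cann-camp-2502-intro

We look forward to meeting you in the training camp!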
