Author:rab


I. Scenarios

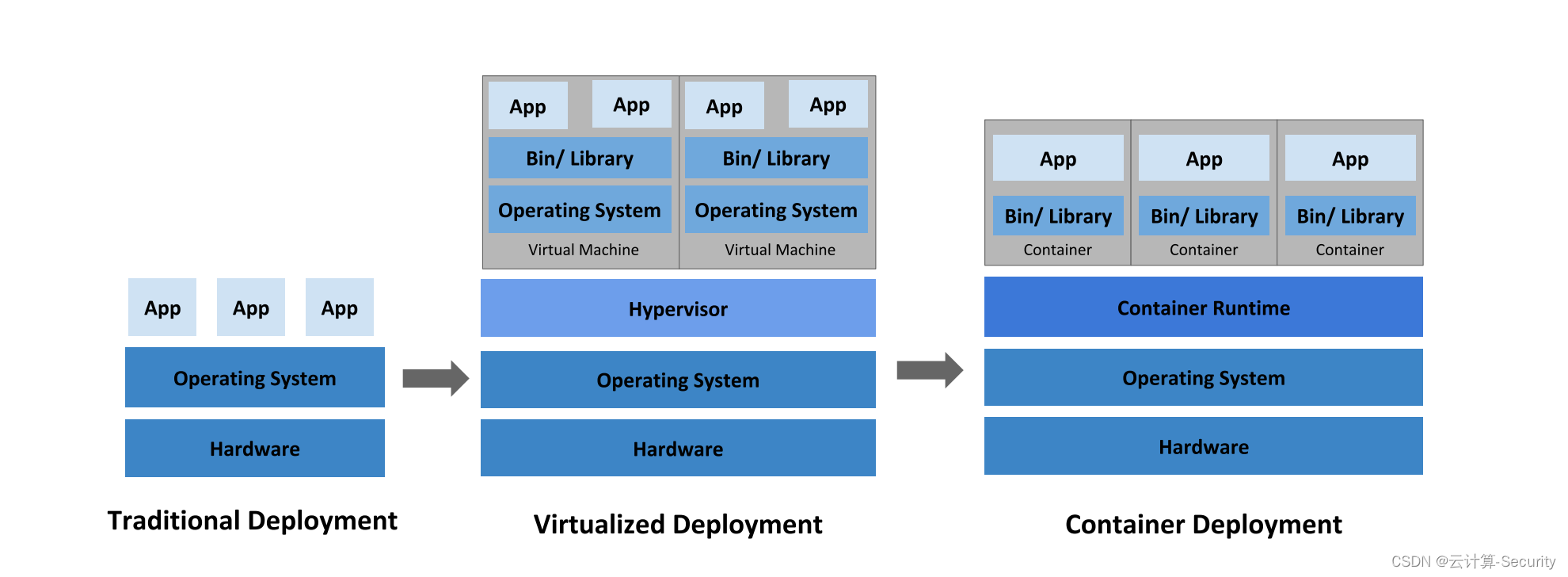

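1.1 Deployment evolution

- Traditional deployment -> virtualized deployment -> containerized deployment

- Monolithic architecture -> microservice architecture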

1.2 应用场景

-

单 K8s 节点 ——> 用于开发测试验证

-

单 K8s-master 集群 ——> 存在 master 单点故障 ——> 一般用于测试环境

-

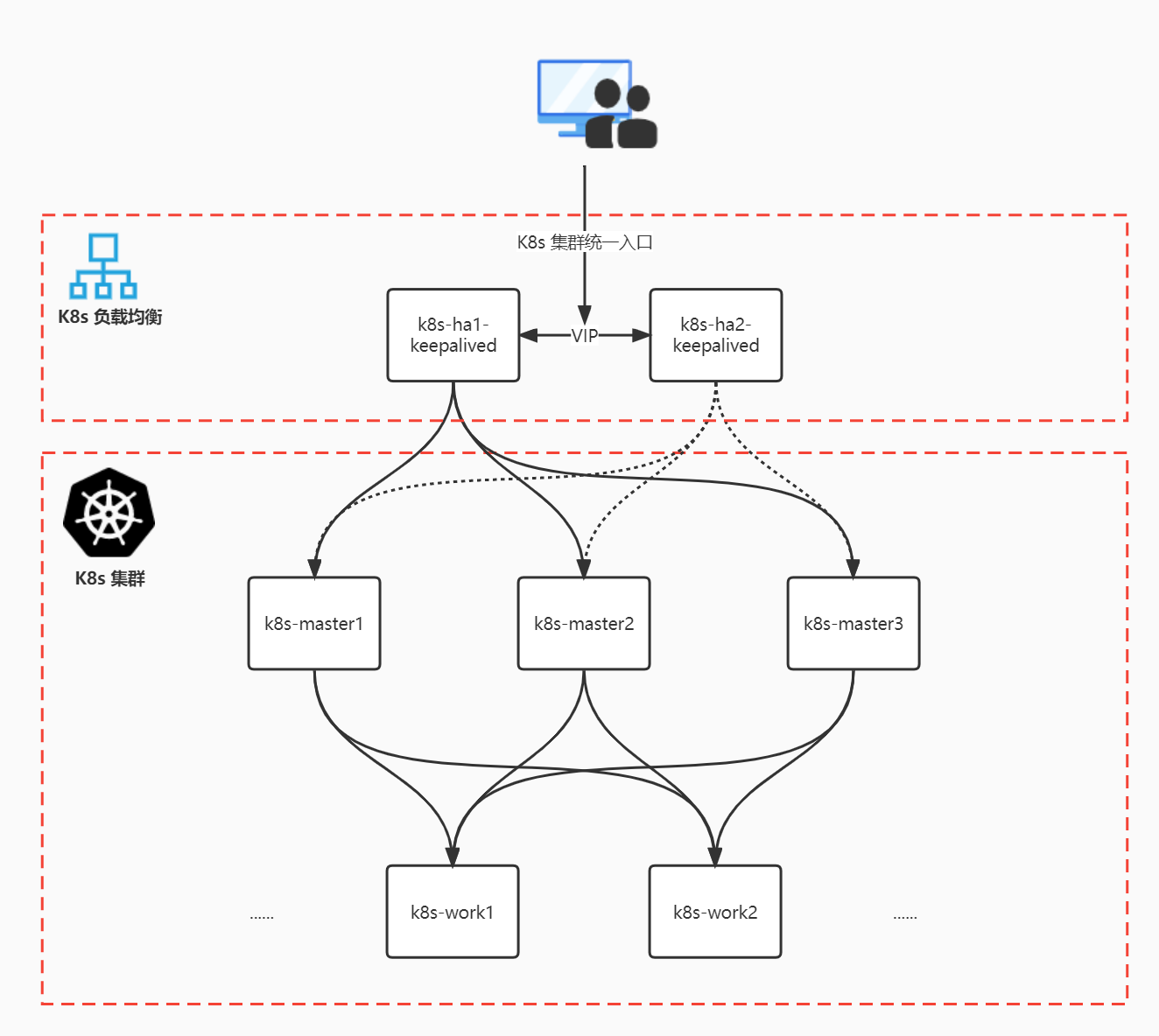

K8s-master 高可用集群架构(解决单点故障)——> keepalived 高可用 + LB 负载均衡 ——> 企业级 K8s 集群架构 ——> 用于正式环境

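1.3 What can K8s do?

In production you need to manage the containers that run your applications and make sure the services stay up. Before adopting K8s, a simple Docker Swarm cluster could already restart an application after it went down and keep it available. K8s offers a much richer feature set than Docker Swarm while giving the same availability guarantee: for example, when a container fails, K8s repairs it automatically. Its main features are:

- Service discovery and load balancing

  K8s can expose a container using a DNS name or its own IP address. If traffic to a container is high, K8s can load-balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration

  K8s lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

- Automated rollouts and rollbacks

  You describe the desired state of your deployed containers, and K8s changes the actual state to the desired state at a controlled rate. For example, you can have K8s create new containers for your deployment, remove existing containers and hand all of their resources to the new ones.

- Automatic bin packing

  You give K8s a cluster of nodes on which to run containerized tasks and tell it how much CPU and memory (RAM) each container needs. K8s schedules the containers onto your nodes to make the best use of your resources.

- Self-healing

  K8s restarts containers that fail, replaces containers, kills containers that do not respond to your user-defined health check, and does not advertise them to clients until they are ready to serve.

- Secret and configuration management

  K8s lets you store and manage sensitive information such as passwords, OAuth tokens and SSH keys. You can deploy and update secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration.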

II. Architecture

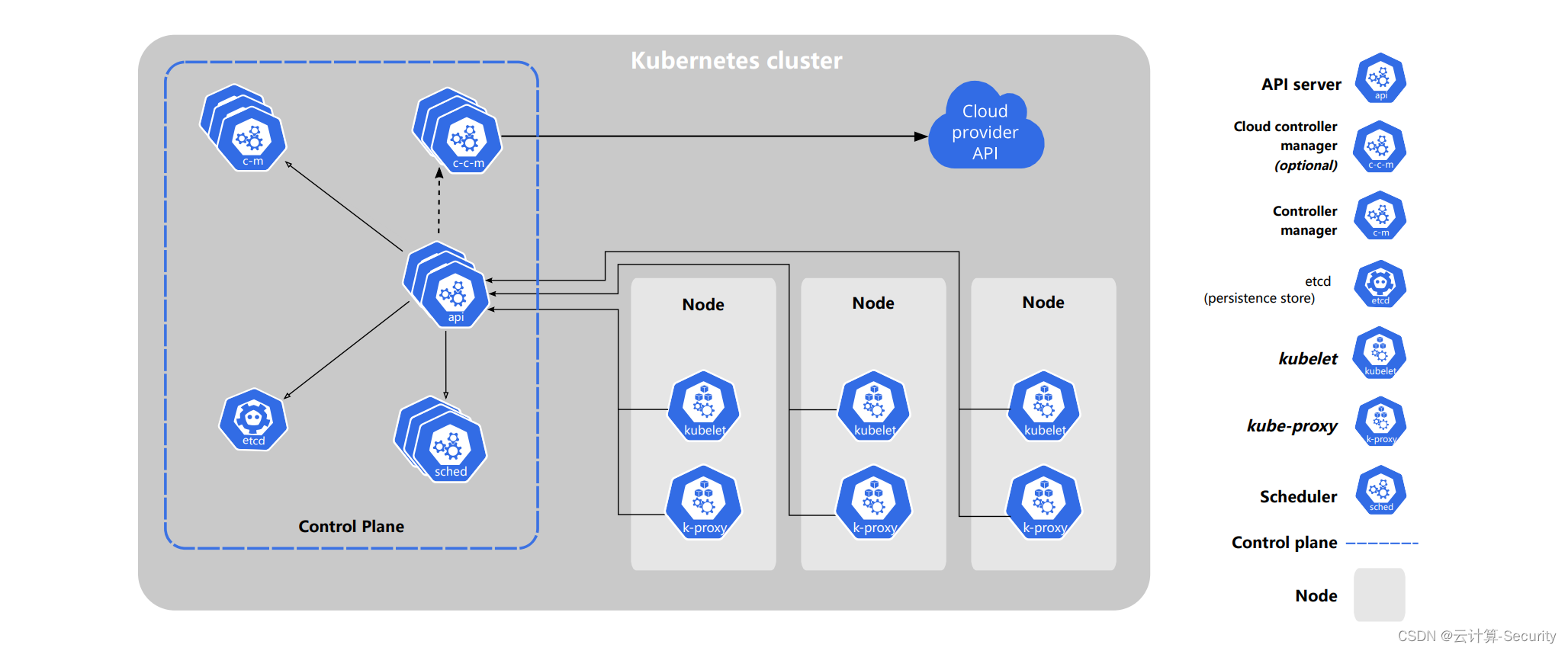

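2.1 Official architecture diagram

A K8s cluster consists of a set of servers called nodes, which run the containerized applications managed by K8s. Every cluster has at least one worker node.

Worker nodes host Pods, and Pods are the components of the application workload. The control plane (the master nodes) manages the worker nodes and the Pods in the cluster. To provide failover and high availability, the control plane usually runs across multiple hosts and the cluster runs across multiple nodes. The diagram makes this clear.

2.2 Production architecture

The diagram below is only a high-level sketch; individual components are not shown in detail.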

III. Planning

3.1 Host planning

| Host | Hostname | Node | Description |
|---|---|---|---|
| 192.168.56.171 (2C2G) | k8s-master1 | k8s-master1, ETCD | K8s master node, ETCD node |
| 192.168.56.172 (2C2G) | k8s-master2 | k8s-master2, ETCD | K8s master node, ETCD node |
| 192.168.56.173 (2C2G) | k8s-master3 | k8s-master3, ETCD | K8s master node, ETCD node |
| 192.168.56.174 (2C2G) | k8s-work1 | k8s-work1 | K8s worker node |
| 192.168.56.175 (2C2G) | k8s-work2 | k8s-work2 | K8s worker node |
| 192.168.56.176 (2C2G) | k8s-ha1 | k8s-ha1, keepalived | K8s load balancer (in front of the masters) |
| 192.168.56.177 (2C2G) | k8s-ha2 | k8s-ha2, keepalived | K8s load balancer (in front of the masters) |
| 192.168.56.178 (VIP) | - | - | Virtual IP, single entry point to the cluster |

Note: these specs are for a test demo only. Production servers should have at least 8C/16G. On a public cloud, the VIP is the IP of the provider's load balancer.

3.2 版本规划

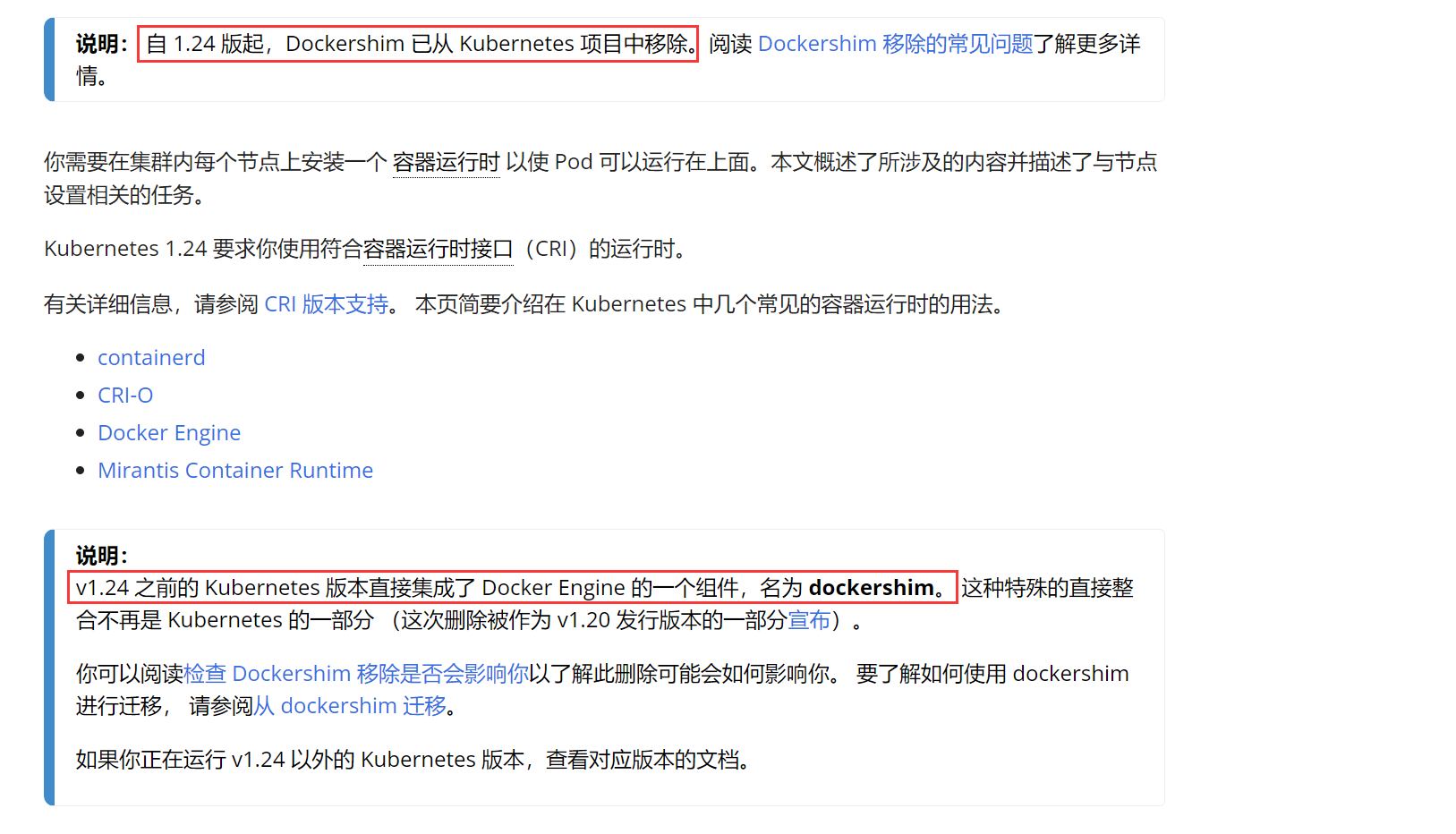

本次部署的 K8s 版本为 1.24.x ,v1.24 之前的 Kubernetes 版本直接集成了 Docker Engine 的一个组件,名为 dockershim。 自 1.24 版起,Dockershim 已从 Kubernetes 项目中正式移除。

| 软件名称 | 版本 |

|---|---|

| CentOS 7 | kernel:3.10 |

| K8s(kube-apiserver、kube-controller-manager、kube-scheduler、kubelet、kube-proxy) | v1.24.4 |

| etcd | v3.5.4 |

| calico | v3.23 |

| coredns | v1.9.3 |

| docker | v20.10.17 |

| haproxy | v5.18 |

| keepalived | v3.5 |

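3.3 Directory planning

Create these directories on any one k8s-master node; the generated files (certificates, private keys and so on) are then distributed with scp or a similar tool.

| DIR | Description |
|---|---|
| /data/k8s-work/ | Working directory on the k8s-master node |
| /data/k8s-work/cfssl | Directory where the certificate files are created |
| /data/k8s-work/etcd | ETCD binary package directory |
| /data/k8s-work/k8s | K8s binary package directory |
| /data/k8s-work/calico | calico configuration files |
| /data/k8s-work/coredns | coredns configuration files |
| /data/etcd/{conf,data,ssl} | ETCD cluster service directories (configuration, data, certificates) |
| /data/kubernetes/{conf,logs,ssl,tokenfile} | K8s cluster service directories (configuration, logs, certificates, token file) |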

3.4 Network allocation

| Network | CIDR |
|---|---|
| Node network | 192.168.56.0/24 |
| Service network | 10.96.0.0/16 |
| Pod network | 10.244.0.0/16 |
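The three ranges in this plan should not overlap, otherwise routing between nodes, Services and Pods becomes ambiguous. The following is a minimal sketch for checking that before installation. The function names (`ip_to_int`, `cidr_range`, `cidr_overlap`) are illustrative helpers, not part of any K8s tooling, and the sketch assumes IPv4 CIDRs and a shell with 64-bit arithmetic.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "start end" (inclusive integer range) for a CIDR such as 10.96.0.0/16.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  start=$(( base / size * size ))   # align to the network boundary
  echo "$start $(( start + size - 1 ))"
}

# Return 0 when the two CIDRs share at least one address.
cidr_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Check every pair from the plan above.
for pair in "192.168.56.0/24 10.96.0.0/16" \
            "192.168.56.0/24 10.244.0.0/16" \
            "10.96.0.0/16 10.244.0.0/16"; do
  set -- $pair
  if cidr_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2"
  fi
done
```

If you change any of the ranges to fit your own network, rerun the check with the new values.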

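3.5 Container engine

This deployment uses Docker as the container runtime that K8s orchestrates. Be aware, though, that Kubernetes announced the deprecation of dockershim in v1.20 and removed it completely in v1.24, so the kubelet now talks to CRI runtimes such as containerd directly. Docker can still be used through the cri-dockerd adapter, which is covered in detail later.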

IV. Deployment

4.1 Server initialization

4.1.1 Set hostnames

Run the matching command on each host:

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-master2

hostnamectl set-hostname k8s-master3

hostnamectl set-hostname k8s-work1

hostnamectl set-hostname k8s-work2

hostnamectl set-hostname k8s-ha1

hostnamectl set-hostname k8s-ha2

4.1.2 Local hostname resolution

On every host, add the following entries to /etc/hosts:

192.168.56.171 k8s-master1

192.168.56.172 k8s-master2

192.168.56.173 k8s-master3

192.168.56.174 k8s-work1

192.168.56.175 k8s-work2

192.168.56.176 k8s-ha1

192.168.56.177 k8s-ha2
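Editing the hosts file by hand on seven machines is easy to get wrong, so a small helper can append the entries without creating duplicates when it runs more than once. This is only a sketch: `cluster_hosts` and `add_hosts_entries` are illustrative names, and the host table is copied from section 3.1.

```shell
#!/bin/sh
# Host table from section 3.1: one "IP hostname" pair per line.
cluster_hosts() {
  cat <<'EOF'
192.168.56.171 k8s-master1
192.168.56.172 k8s-master2
192.168.56.173 k8s-master3
192.168.56.174 k8s-work1
192.168.56.175 k8s-work2
192.168.56.176 k8s-ha1
192.168.56.177 k8s-ha2
EOF
}

# Append each entry to the given hosts file unless the exact line is already there.
add_hosts_entries() {
  hosts_file=$1
  cluster_hosts | while IFS= read -r entry; do
    grep -qxF "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done
}

# Usage (as root, on every host):
#   add_hosts_entries /etc/hosts
```

Because the check matches whole lines, running the helper again leaves the file unchanged.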

4.1.3 Host system tuning

Run on all hosts

1. Disable firewalld

systemctl stop firewalld

systemctl disable firewalld

2. Disable SELinux

setenforce 0

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

3. Disable the swap partition

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

4. Synchronize system time across the cluster

yum install -y ntpdate && ntpdate time.nist.gov && hwclock --systohc

5. Load the br_netfilter module

# Load the module

modprobe br_netfilter

# Check that it is loaded

lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter

# Load it on every boot

cat <<EOF | tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

6. Let iptables see bridged traffic

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system
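Before moving on, it can help to confirm that the file really contains all three settings, since a typo here tends to surface much later as a Pod networking problem. The sketch below checks a sysctl file for the three keys set above; `check_bridge_sysctl` is an illustrative name, and the check only inspects the file, not the live kernel values.

```shell
#!/bin/sh
# Return 0 only if every required key is set to 1 in the given sysctl file.
check_bridge_sysctl() {
  conf=$1
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # Accept optional whitespace around "=".
    grep -Eq "^[[:space:]]*$key[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || {
      echo "missing or not 1: $key" >&2
      return 1
    }
  done
}

# Usage:
#   check_bridge_sysctl /etc/sysctl.d/k8s.conf && echo "sysctl file looks complete"
```

To see the values the kernel is actually using, run `sysctl net.ipv4.ip_forward` (and the two bridge keys) on the host.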

7. File descriptor and process limits

cat <<EOF >> /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

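8. Raise the virtual memory and file handle limits

cat <<EOF >> /etc/sysctl.conf
vm.max_map_count = 655360
fs.file-max=655360
EOF

systemd-level defaults

cat <<EOF >> /etc/systemd/system.conf
DefaultLimitNOFILE=655360
DefaultLimitNPROC=655360
DefaultLimitMEMLOCK=infinity
EOF

Apply the sysctl settings

sysctl -p

9. Unique MAC address and product_uuid

Hardware devices normally have unique addresses, but some virtual machines may share them. Kubernetes uses these values to identify the nodes in a cluster; if they are not unique on every node, the installation may fail.

Use ip link or ifconfig -a to get the MAC addresses of the network interfaces.

Use sudo cat /sys/class/dmi/id/product_uuid to check the product_uuid.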
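With seven hosts, comparing these values by eye is tedious. One way to automate it is to collect "hostname value" pairs and report any value that occurs on more than one host. The sketch below does only the comparison; `find_duplicate_ids` is an illustrative name, and how you gather the pairs (for example over SSH) is left to you.

```shell
#!/bin/sh
# Read "hostname value" pairs on stdin and print every value that
# appears on more than one host, followed by the hosts that share it.
find_duplicate_ids() {
  awk '{ count[$2]++; hosts[$2] = hosts[$2] " " $1 }
       END { for (v in count) if (count[v] > 1) print v ":" hosts[v] }'
}

# Usage sketch (assumes you can already reach each host over SSH):
#   for h in k8s-master1 k8s-master2 k8s-master3 k8s-work1 k8s-work2; do
#     echo "$h $(ssh "$h" cat /sys/class/dmi/id/product_uuid)"
#   done | find_duplicate_ids
```

An empty result means every collected value is unique. The same function works for MAC addresses if you feed it "hostname mac" pairs.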

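4.1.4 Configure passwordless SSH login

k8s-master1 logs in to the other nodes without a password. All configuration files and certificates are generated on k8s-master1 during installation, and the cluster is also managed from there. On Alibaba Cloud or AWS a separate kubectl server is needed.

# Press Enter at every prompt

ssh-keygen

# Copy the public key to each node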

ssh-copy-id k8s-master2

ssh-copy-id k8s-master3

ssh-copy-id k8s-work1

ssh-copy-id k8s-work2

4.2 K8s load balancer configuration

Run on the k8s-ha1 and k8s-ha2 servers

4.2.1 Haproxy

1. Install

yum -y install haproxy

2. Configure

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:6443

bind 127.0.0.1:6443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master1 192.168.56.171:6443 check

server k8s-master2 192.168.56.172:6443 check

server k8s-master3 192.168.56.173:6443 check

EOF

3. Start

systemctl start haproxy.service

systemctl enable haproxy.service

4.2.2 Keepalived

1. Install

yum install -y keepalived

# Configuration file path: /etc/keepalived/keepalived.conf

2. Configure

- k8s-ha1 (master)

cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived

global_defs {

router_id k8s-master

}

vrrp_script check_k8s-master {

script "/etc/keepalived/check_k8s-master_status.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.56.176

virtual_router_id 90

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass K8S_PASSWD

}

virtual_ipaddress {

192.168.56.178/24

}

track_script {

check_k8s-master

}

}

EOF

- k8s-ha2 (backup)

cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived

global_defs {

router_id k8s-master

}

vrrp_script check_k8s-master {

script "/etc/keepalived/check_k8s-master_status.sh"

interval 5

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

mcast_src_ip 192.168.56.177

virtual_router_id 90

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass K8S_PASSWD

}

virtual_ipaddress {

192.168.56.178/24

}

track_script {

check_k8s-master

}

}

EOF

3. K8s-master health check script

Create this file on both the master and the backup

cat > /etc/keepalived/check_k8s-master_status.sh <<"EOF"

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF
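The script above checks for a haproxy process up to three times and stops keepalived only when every attempt fails, which releases the VIP to the other load balancer. The retry part of that logic can be written as a small reusable function. This is a sketch, not a replacement for the script: `retry_check` is an illustrative name, and the command to run is passed in as arguments.

```shell
#!/bin/sh
# Run a check command up to $1 times, sleeping $2 seconds between failed
# attempts. Returns 0 as soon as one attempt succeeds, 1 if all attempts fail.
retry_check() {
  attempts=$1; pause=$2; shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" >/dev/null 2>&1 && return 0
    # Do not sleep after the final attempt.
    if [ "$i" -lt "$attempts" ]; then sleep "$pause"; fi
    i=$(( i + 1 ))
  done
  return 1
}

# Usage mirroring the script above:
#   retry_check 3 1 pgrep haproxy || /usr/bin/systemctl stop keepalived
```

Retrying a few times before giving up avoids moving the VIP because of a single transient failure, for example while haproxy is being restarted.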

4. Start

systemctl start keepalived.service

systemctl enable keepalived.service

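4.3 K8s cluster component deployment

4.3.1 ETCD cluster

4.3.1.1 Deploy the cfssl tools

1. Create the working directory

mkdir -p /data/k8s-work/cfssl

2. Install the cfssl tools

# This guide uses version 1.6.1 (choose the version that suits you)

cd /data/k8s-work/cfssl

# Upload the files to the current directory (three files are used here)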

[root@k8s-master1 cfssl]# ll

total 40232

-rw-r--r-- 1 root root 16659824 May 31 22:12 cfssl_1.6.1_linux_amd64

-rw-r--r-- 1 root root 13502544 May 31 21:49 cfssl-certinfo_1.6.1_linux_amd64

-rw-r--r-- 1 root root 11029744 May 31 21:50 cfssljson_1.6.1_linux_amd64

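# Notes

# cfssl: the command-line tool

# cfssljson: takes the JSON output of cfssl and writes certificates, keys, CSRs and bundles to files

# cfssl-certinfo: a tool for viewing certificate information

3. Create symlinks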

chmod +x ./cfssl*

ln -s /data/k8s-work/cfssl/cfssl_1.6.1_linux_amd64 /usr/sbin/cfssl

ln -s /data/k8s-work/cfssl/cfssl-certinfo_1.6.1_linux_amd64 /usr/sbin/cfssl-certinfo

ln -s /data/k8s-work/cfssl/cfssljson_1.6.1_linux_amd64 /usr/sbin/cfssljson

4. Verify

[root@k8s-master1 cfssl]# cfssl version

Version: 1.6.1

Runtime: go1.12.12

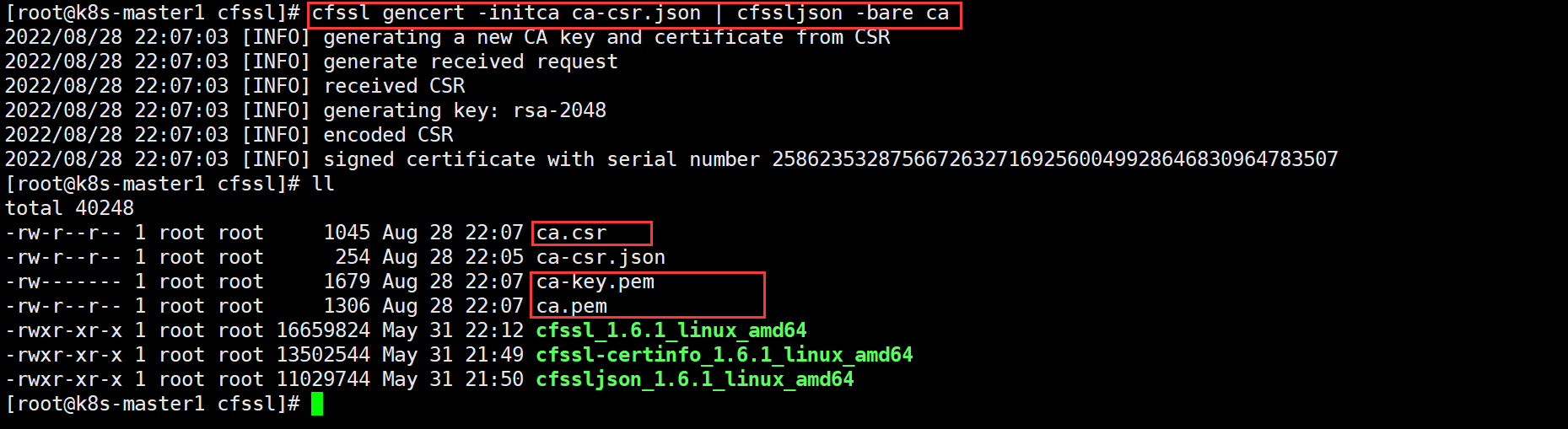

4.3.1.2 Generate the CA certificate

1. Create the CA certificate signing request file

cd /data/k8s-work/cfssl

cat > ca-csr.json <<"EOF"

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "xgxy",

"OU": "ops"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

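2. Create the CA certificate

This produces three files: ca.csr (the certificate signing request), ca-key.pem (the CA private key) and ca.pem (the CA certificate).

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

3. CA signing policy

You can generate a default policy with the cfssl command-line tool and then edit it.

cfssl print-defaults config > ca-config.json

After generating it, change it to match the example below (or simply use the example as is).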

cat > ca-config.json <<"EOF"

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

# Notes

# server auth: a client can use the CA to verify the certificate presented by a server

# client auth: a server can use the CA to verify the certificate presented by a client

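4.3.1.3 Generate the ETCD certificate

1. Create the ETCD certificate signing request file

To make later expansion easier, you can list a few spare IPs in advance.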

cat > etcd-csr.json <<"EOF"

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.56.171",

"192.168.56.172",

"192.168.56.173"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "xgxy",

"OU": "ops"

}]

}

EOF
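If you do reserve spare IPs, typing the quoted, comma-separated list by hand invites JSON syntax errors. As a small convenience, the "hosts" array can be produced from a plain list of addresses. This is a sketch; `csr_hosts_json` is an illustrative name, and its output still has to be pasted into the "hosts" field of the request file.

```shell
#!/bin/sh
# Print a JSON array of quoted host entries, one per argument.
csr_hosts_json() {
  printf '['
  sep=''
  for h in "$@"; do
    printf '%s"%s"' "$sep" "$h"
    sep=', '
  done
  printf ']\n'
}

# Usage: the three ETCD nodes from this guide plus loopback.
csr_hosts_json 127.0.0.1 192.168.56.171 192.168.56.172 192.168.56.173
```

Any address missing from this list will fail TLS verification later, so a node added at an unlisted IP requires reissuing the certificate.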

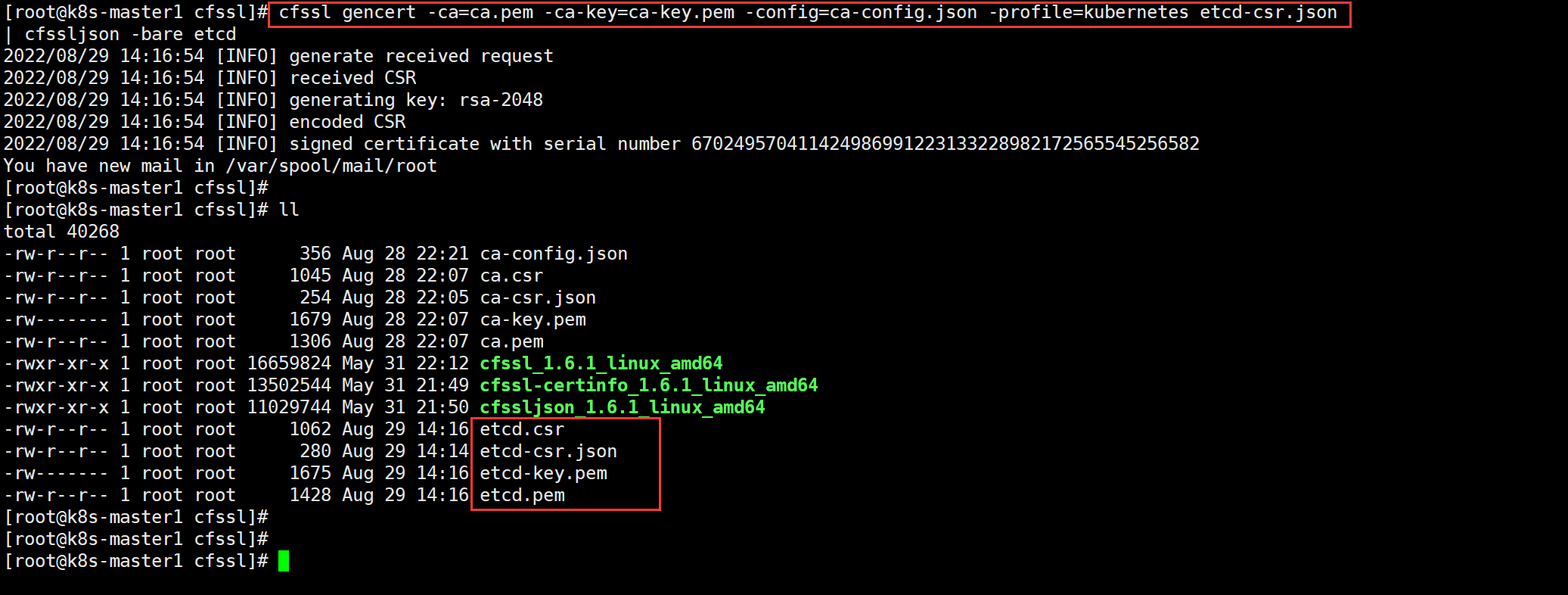

2. Generate the ETCD certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

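4.3.1.4 Deploy the ETCD cluster

1. Download the ETCD package and upload it to the server

mkdir -p /data/k8s-work/etcd

cd /data/k8s-work/etcd

# Upload etcd-v3.5.4-linux-amd64.tar.gz to this directory, then unpack it

tar xzf etcd-v3.5.4-linux-amd64.tar.gz

mv etcd-v3.5.4-linux-amd64 etcd-v3.5.4

2. Create symlinks

ln -s /data/k8s-work/etcd/etcd-v3.5.4/etcd* /usr/bin/

3. Verify the version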

[root@k8s-master1 etcd]# etcd --version

etcd Version: 3.5.4

Git SHA: 08407ff76

Go Version: go1.16.15

Go OS/Arch: linux/amd64

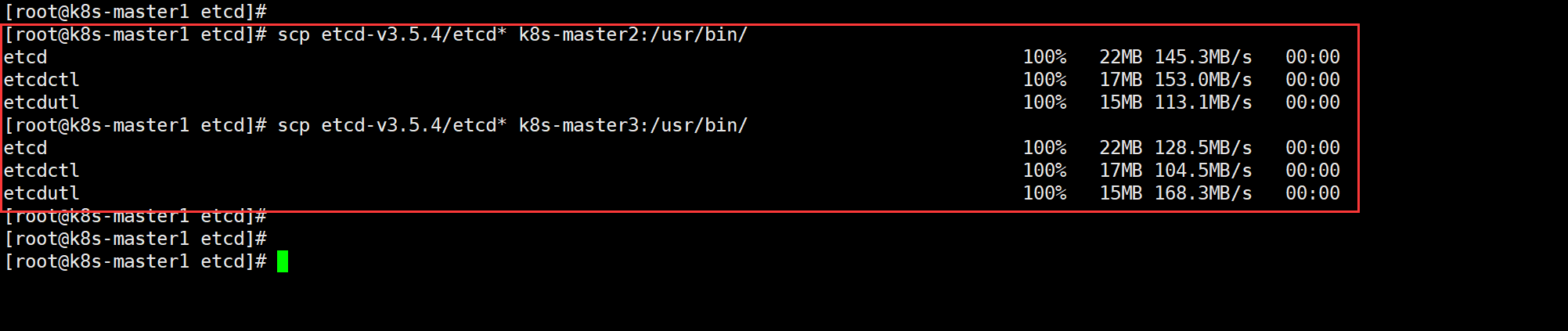

4. Distribute the ETCD binaries to the other ETCD cluster nodes

scp etcd-v3.5.4/etcd* k8s-master2:/usr/bin/

scp etcd-v3.5.4/etcd* k8s-master3:/usr/bin/

5. Create the ETCD cluster directories

Run on all ETCD cluster nodes

mkdir -p /data/etcd/{conf,data,ssl}

6. Create the ETCD configuration file

Run on all ETCD cluster nodes

- k8s-master1

cat > /data/etcd/conf/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://192.168.56.171:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.171:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.171:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.171:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.56.171:2380,etcd-2=https://192.168.56.172:2380,etcd-3=https://192.168.56.173:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF

- k8s-master2

cat > /data/etcd/conf/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd-2"
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://192.168.56.172:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.172:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.172:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.172:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.56.171:2380,etcd-2=https://192.168.56.172:2380,etcd-3=https://192.168.56.173:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF

- k8s-master3

cat > /data/etcd/conf/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd-3"
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://192.168.56.173:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.173:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.173:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.173:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.56.171:2380,etcd-2=https://192.168.56.172:2380,etcd-3=https://192.168.56.173:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF

Notes:

- ETCD_NAME: node name, unique within the cluster
- ETCD_DATA_DIR: data directory (your choice)
- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic
- ETCD_LISTEN_CLIENT_URLS: listen address for client traffic
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
- ETCD_INITIAL_CLUSTER: addresses of all ETCD nodes in the cluster
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token (shared by all ETCD nodes)
- ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster
- ETCD_CERT_FILE: the etcd.pem file
- ETCD_KEY_FILE: the etcd-key.pem file
- ETCD_TRUSTED_CA_FILE: the ca.pem file
- ETCD_CLIENT_CERT_AUTH="true": require client certificates
- ETCD_PEER_CERT_FILE: the etcd.pem file
- ETCD_PEER_KEY_FILE: the etcd-key.pem file
- ETCD_PEER_TRUSTED_CA_FILE: the ca.pem file
- ETCD_PEER_CLIENT_CERT_AUTH="true": require peer certificates
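The three files differ only in the node name and the node IP, so they can also be produced from a single template, which removes the risk of a copy-and-paste slip. The sketch below is one way to do that; `render_etcd_conf` is an illustrative function, not part of etcd, and it prints the file content to standard output so you can review it before writing it anywhere.

```shell
#!/bin/sh
# Print an etcd.conf for one member.
#   $1 = member name, $2 = member IP, $3 = full ETCD_INITIAL_CLUSTER string
render_etcd_conf() {
  name=$1; ip=$2; cluster=$3
  cat <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/data/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/data/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/data/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF
}

# Usage on k8s-master2, for example:
#   CLUSTER="etcd-1=https://192.168.56.171:2380,etcd-2=https://192.168.56.172:2380,etcd-3=https://192.168.56.173:2380"
#   render_etcd_conf etcd-2 192.168.56.172 "$CLUSTER" > /data/etcd/conf/etcd.conf
```

The rendered output for each node should match the corresponding hand-written file above line for line.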

7. Copy the ETCD certificates to the directories just created

# k8s-master1

cd /data/k8s-work/cfssl

cp ca*.pem etcd*.pem /data/etcd/ssl/

# k8s-master2

scp ca*.pem etcd*.pem k8s-master2:/data/etcd/ssl/

# k8s-master3

scp ca*.pem etcd*.pem k8s-master3:/data/etcd/ssl/

8. Configure the systemd unit

Run on all three ETCD cluster nodes

cat << EOF | tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/data/etcd/conf/etcd.conf

ExecStart=/usr/bin/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

9. Start the ETCD cluster

systemctl daemon-reload

systemctl start etcd.service

systemctl enable etcd.service

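Note: after the first ETCD node starts, it waits for the other members to join. If they do not join within the timeout, startup fails.

Start all ETCD nodes within that window to avoid a startup timeout.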

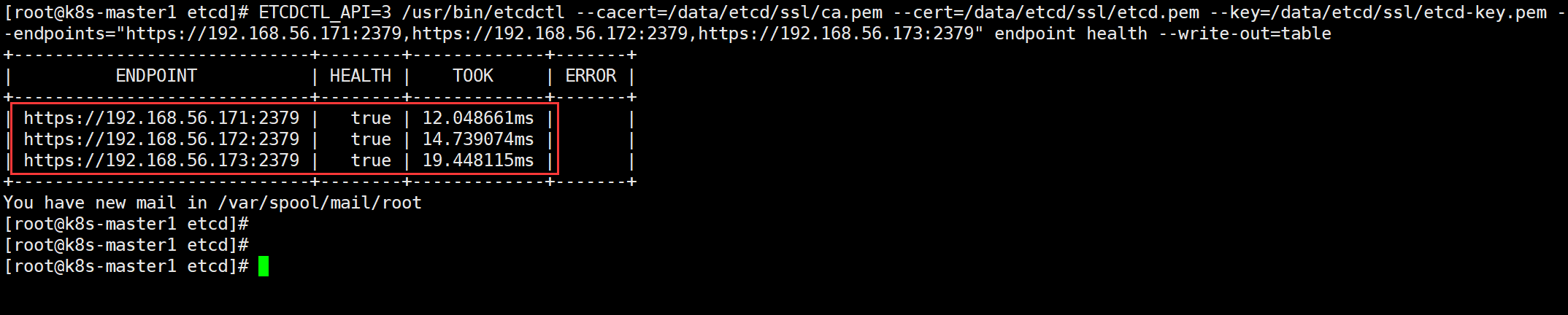

10. Verify the cluster

- Endpoint health check

ETCDCTL_API=3 /usr/bin/etcdctl --cacert=/data/etcd/ssl/ca.pem --cert=/data/etcd/ssl/etcd.pem --key=/data/etcd/ssl/etcd-key.pem --endpoints="https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379" endpoint health --write-out=table

-

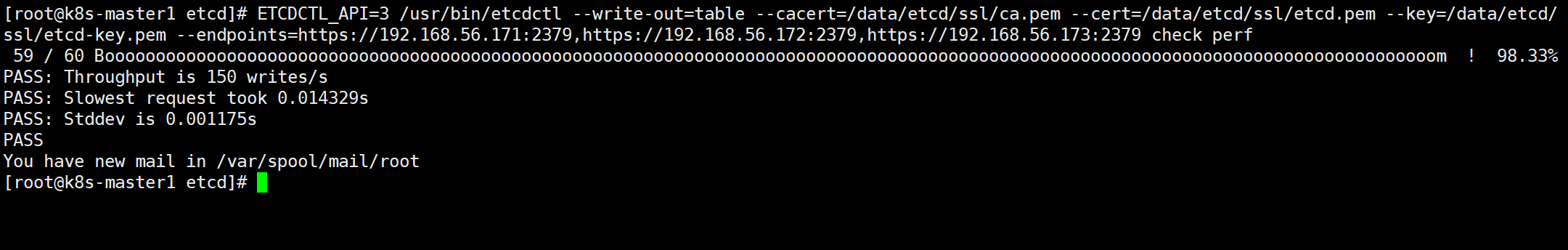

ETCD 数据库性能验证

ETCDCTL_API=3 /usr/bin/etcdctl --write-out=table --cacert=/data/etcd/ssl/ca.pem --cert=/data/etcd/ssl/etcd.pem --key=/data/etcd/ssl/etcd-key.pem --endpoints=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 check perf

-

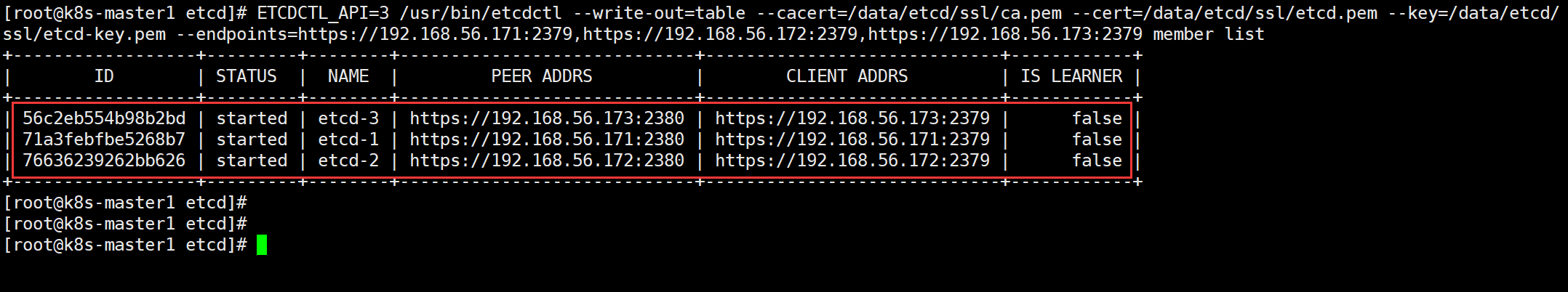

集群节点成员列表

ETCDCTL_API=3 /usr/bin/etcdctl --write-out=table --cacert=/data/etcd/ssl/ca.pem --cert=/data/etcd/ssl/etcd.pem --key=/data/etcd/ssl/etcd-key.pem --endpoints=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 member list

这里看不了谁是 Leader,继续看下一条测试命令。

-

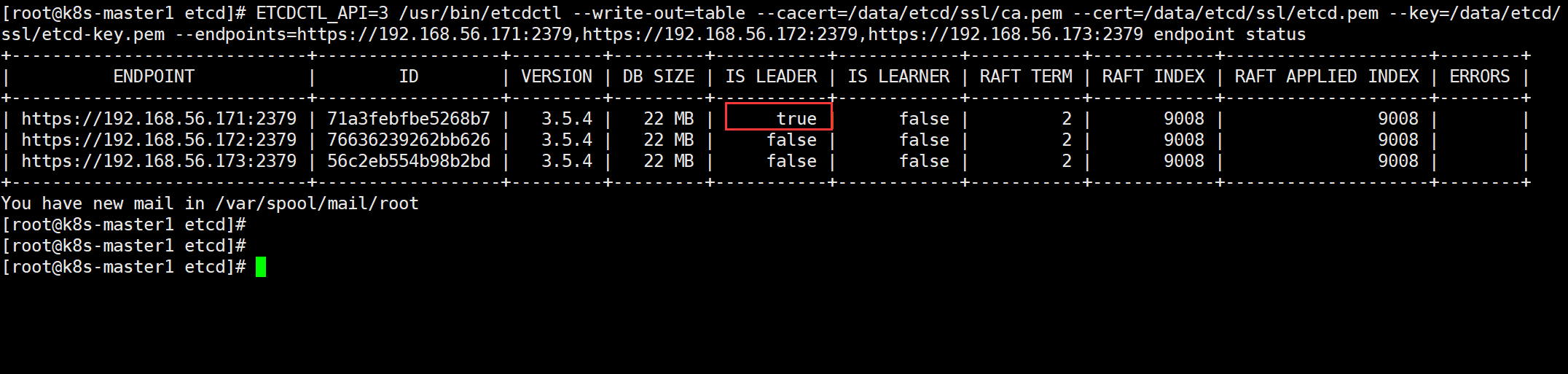

查看集群 Leader

ETCDCTL_API=3 /usr/bin/etcdctl --write-out=table --cacert=/data/etcd/ssl/ca.pem --cert=/data/etcd/ssl/etcd.pem --key=/data/etcd/ssl/etcd-key.pem --endpoints=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 endpoint status

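4.3.2 K8s cluster deployment

4.3.2.1 Master nodes

Components required on a k8s-master node: kube-apiserver, kube-controller-manager, kube-scheduler and kubectl (the client tool).

4.3.2.1.1 kubernetes

Download and distribute the K8s binary package

1. Create the working directory

mkdir -p /data/k8s-work/k8s

cd /data/k8s-work/k8s

2. Download the K8s package, upload it to this directory and unpack it

tar xzf kubernetes-server-linux-amd64.tar.gz

# This package contains every master and worker component, so one download is enough

3. Create symlinks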

ln -s /data/k8s-work/k8s/kubernetes/server/bin/kube-apiserver /usr/bin/

ln -s /data/k8s-work/k8s/kubernetes/server/bin/kube-controller-manager /usr/bin/

ln -s /data/k8s-work/k8s/kubernetes/server/bin/kube-scheduler /usr/bin/

ln -s /data/k8s-work/k8s/kubernetes/server/bin/kubectl /usr/bin/

# If you also want the k8s-master nodes to run workloads, link the following binaries too. In this deployment the master nodes do not run workloads

# ln -s /data/k8s-work/k8s/kubernetes/server/bin/kubelet /usr/bin/

# ln -s /data/k8s-work/k8s/kubernetes/server/bin/kube-proxy /usr/bin/

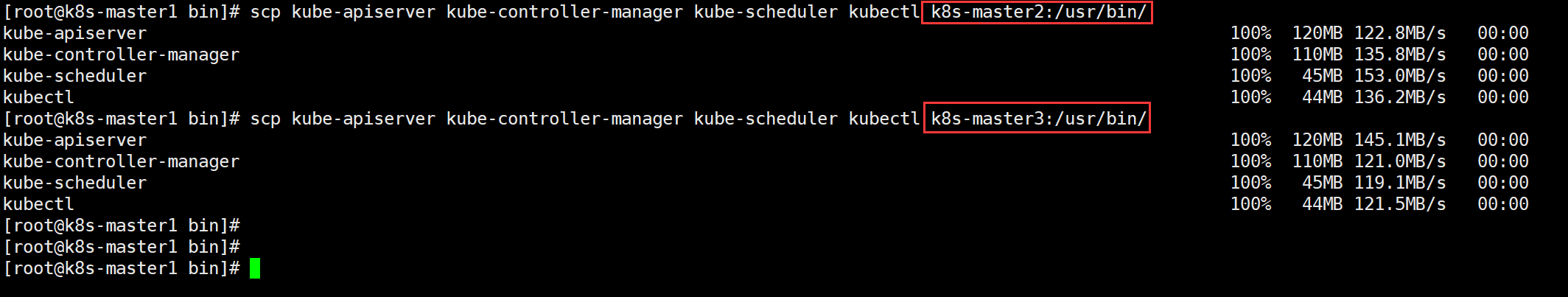

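4. Distribute the binaries

Copy the binaries to the other k8s-master nodes. These are the components every k8s-master needs.

cd /data/k8s-work/k8s/kubernetes/server/bin

scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master2:/usr/bin/

scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master3:/usr/bin/

If you also want the k8s-master nodes to run workloads, distribute these binaries as well.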

scp kubelet kube-proxy k8s-master2:/usr/bin/

scp kubelet kube-proxy k8s-master3:/usr/bin/

4.3.2.1.2 apiserver

1. Create the apiserver certificate signing request file

# As before, create it in the cfssl directory

cd /data/k8s-work/cfssl

cat > kube-apiserver-csr.json << "EOF"

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.56.171",

"192.168.56.172",

"192.168.56.173",

"192.168.56.174",

"192.168.56.175",

"192.168.56.176",

"192.168.56.177",

"192.168.56.178",

"192.168.56.179",

"192.168.56.180",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "xgxy",

"OU": "ops"

}

]

}

EOF

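The IPs above are the k8s-master node IPs, the k8s-work node IPs, the k8s-ha node IPs and the VIP. The addresses through which clients reach the apiserver (the master IPs, the VIP and the first Service IP 10.96.0.1) must be present.

To make later expansion easier, you can list a few spare IPs so that new master or worker nodes can join.

Note: the hosts field accepts domain names as well as IPs.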
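The entry 10.96.0.1 in the hosts list is the first usable address of the Service network 10.96.0.0/16 from section 3.4, which K8s assigns to the built-in `kubernetes` Service. If you pick a different Service range, this address changes with it. The sketch below derives it from the CIDR; `first_service_ip` is an illustrative helper and assumes an IPv4 range.

```shell
#!/bin/sh
# Print the first usable IP of an IPv4 CIDR (network address + 1).
first_service_ip() {
  addr=${1%/*}; bits=${1#*/}
  old_ifs=$IFS; IFS=.
  set -- $addr         # split the address on dots into $1..$4
  IFS=$old_ifs
  n=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
  size=$(( 1 << (32 - bits) ))
  first=$(( n / size * size + 1 ))   # align to the network, then add one
  echo "$(( (first >> 24) & 255 )).$(( (first >> 16) & 255 )).$(( (first >> 8) & 255 )).$(( first & 255 ))"
}

# Usage with the Service network from this guide.
first_service_ip 10.96.0.0/16
```

Whatever this prints for your Service range is the address that must appear in the apiserver certificate's hosts list.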

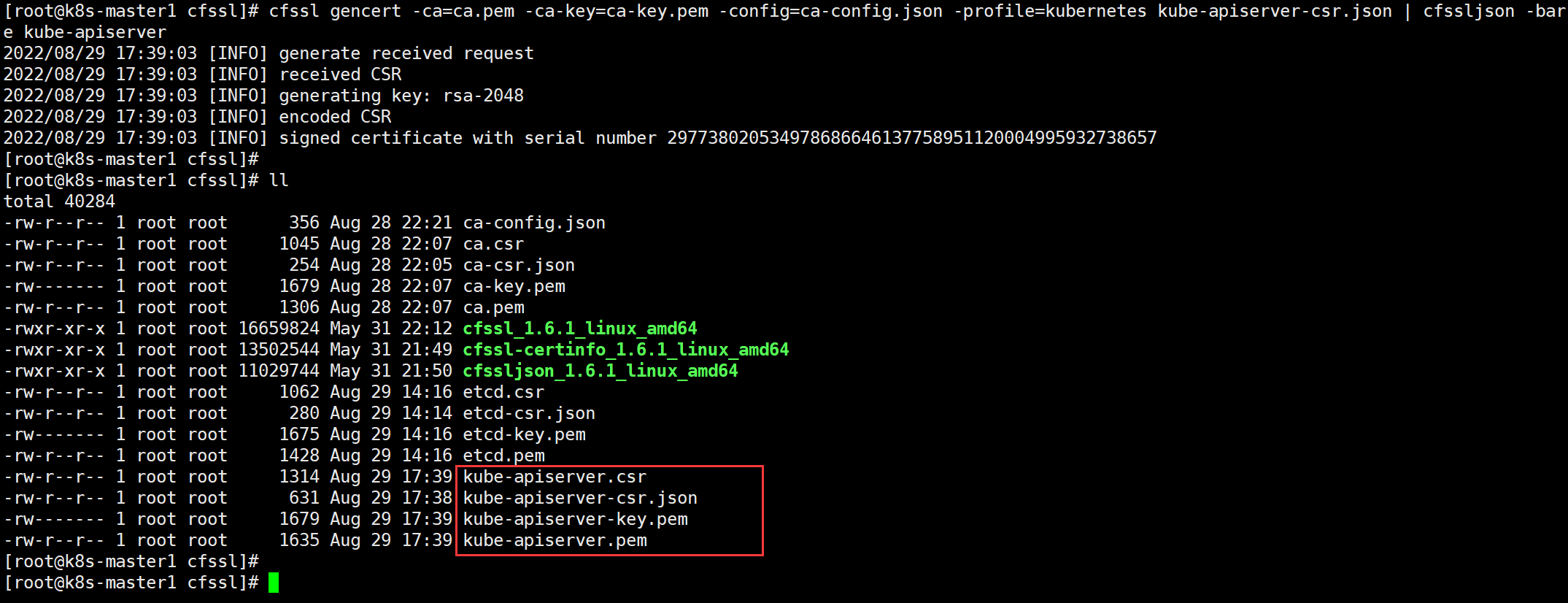

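2. Generate the apiserver certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

3. Create the token file

The token file enables automatic certificate issuance. Once TLS authentication is enabled on the master apiserver, the kubelet and kube-proxy on every worker node must present a valid CA-signed certificate to talk to kube-apiserver. With hundreds or thousands of worker nodes, issuing each certificate by hand would be a great deal of work.

To simplify this, Kubernetes introduced the TLS bootstrapping mechanism, which issues client certificates dynamically. It is currently used mainly for the kubelet; kube-proxy still uses a single certificate that we issue ourselves.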

cat > token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
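Each record in token.csv has the form token,user,uid,"group". The token here is 16 random bytes written as 32 hexadecimal characters. The sketch below wraps the same idea in two small functions so that the format can be checked before the file is distributed; `gen_bootstrap_token` and `token_csv_line` are illustrative names, and `od -tx1` is used in place of `-t x` so the bytes are printed one at a time.

```shell
#!/bin/sh
# Generate a 32-character hexadecimal token from 16 random bytes.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Print one token.csv record: token,user,uid,"group"
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$(gen_bootstrap_token)"
}

# Usage:
#   token_csv_line > token.csv
#   grep -Eq '^[0-9a-f]{32},' token.csv && echo "token format ok"
```

All masters must use the same token.csv, so generate it once and copy it rather than generating it on each node.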

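4. Create the apiserver configuration files

The procedure mirrors the ETCD cluster deployment. The directories below are needed on every k8s-master node, because files are copied into them in later steps.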

mkdir -p /data/kubernetes/{conf,tokenfile,ssl,logs/kube-apiserver}

cd /data/kubernetes/conf

- k8s-master1

cat > /data/kubernetes/conf/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=192.168.56.171 \
--secure-port=6443 \
--advertise-address=192.168.56.171 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.96.0.0/16 \
--token-auth-file=/data/kubernetes/tokenfile/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/data/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-issuer=api \
--etcd-cafile=/data/etcd/ssl/ca.pem \
--etcd-certfile=/data/etcd/ssl/etcd.pem \
--etcd-keyfile=/data/etcd/ssl/etcd-key.pem \
--etcd-servers=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/data/kubernetes/logs/kube-apiserver/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/data/kubernetes/logs/kube-apiserver \
--v=4"
EOF

- k8s-master2

cat > /data/kubernetes/conf/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=192.168.56.172 \
--secure-port=6443 \
--advertise-address=192.168.56.172 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.96.0.0/16 \
--token-auth-file=/data/kubernetes/tokenfile/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/data/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-issuer=api \
--etcd-cafile=/data/etcd/ssl/ca.pem \
--etcd-certfile=/data/etcd/ssl/etcd.pem \
--etcd-keyfile=/data/etcd/ssl/etcd-key.pem \
--etcd-servers=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/data/kubernetes/logs/kube-apiserver/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/data/kubernetes/logs/kube-apiserver \
--v=4"
EOF

- k8s-master3

cat > /data/kubernetes/conf/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=192.168.56.173 \
--secure-port=6443 \
--advertise-address=192.168.56.173 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.96.0.0/16 \
--token-auth-file=/data/kubernetes/tokenfile/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \
--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \
--client-ca-file=/data/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \
--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \
--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/data/kubernetes/ssl/ca-key.pem \
--service-account-issuer=api \
--etcd-cafile=/data/etcd/ssl/ca.pem \
--etcd-certfile=/data/etcd/ssl/etcd.pem \
--etcd-keyfile=/data/etcd/ssl/etcd-key.pem \
--etcd-servers=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/data/kubernetes/logs/kube-apiserver/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/data/kubernetes/logs/kube-apiserver \
--v=4"
EOF

5. Configure the systemd unit

cat > /usr/lib/systemd/system/kube-apiserver.service << "EOF"

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/data/kubernetes/conf/kube-apiserver.conf

ExecStart=/usr/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

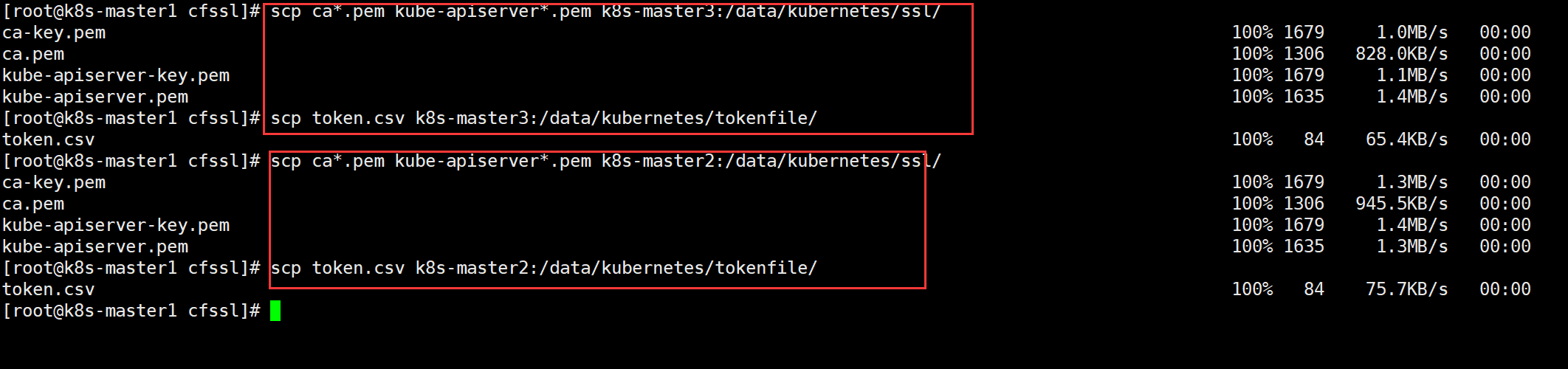

6. Copy the files referenced in the configuration to the expected directories

# k8s-master1

cd /data/k8s-work/cfssl/

cp ca*.pem kube-apiserver*.pem /data/kubernetes/ssl/

cp token.csv /data/kubernetes/tokenfile/

# Distribute to k8s-master2

cd /data/k8s-work/cfssl/

scp ca*.pem kube-apiserver*.pem k8s-master2:/data/kubernetes/ssl/

scp token.csv k8s-master2:/data/kubernetes/tokenfile/

# Distribute to k8s-master3

cd /data/k8s-work/cfssl/

scp ca*.pem kube-apiserver*.pem k8s-master3:/data/kubernetes/ssl/

scp token.csv k8s-master3:/data/kubernetes/tokenfile/

7. Start the apiserver

Start it on all three k8s-master nodes

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl enable kube-apiserver.service

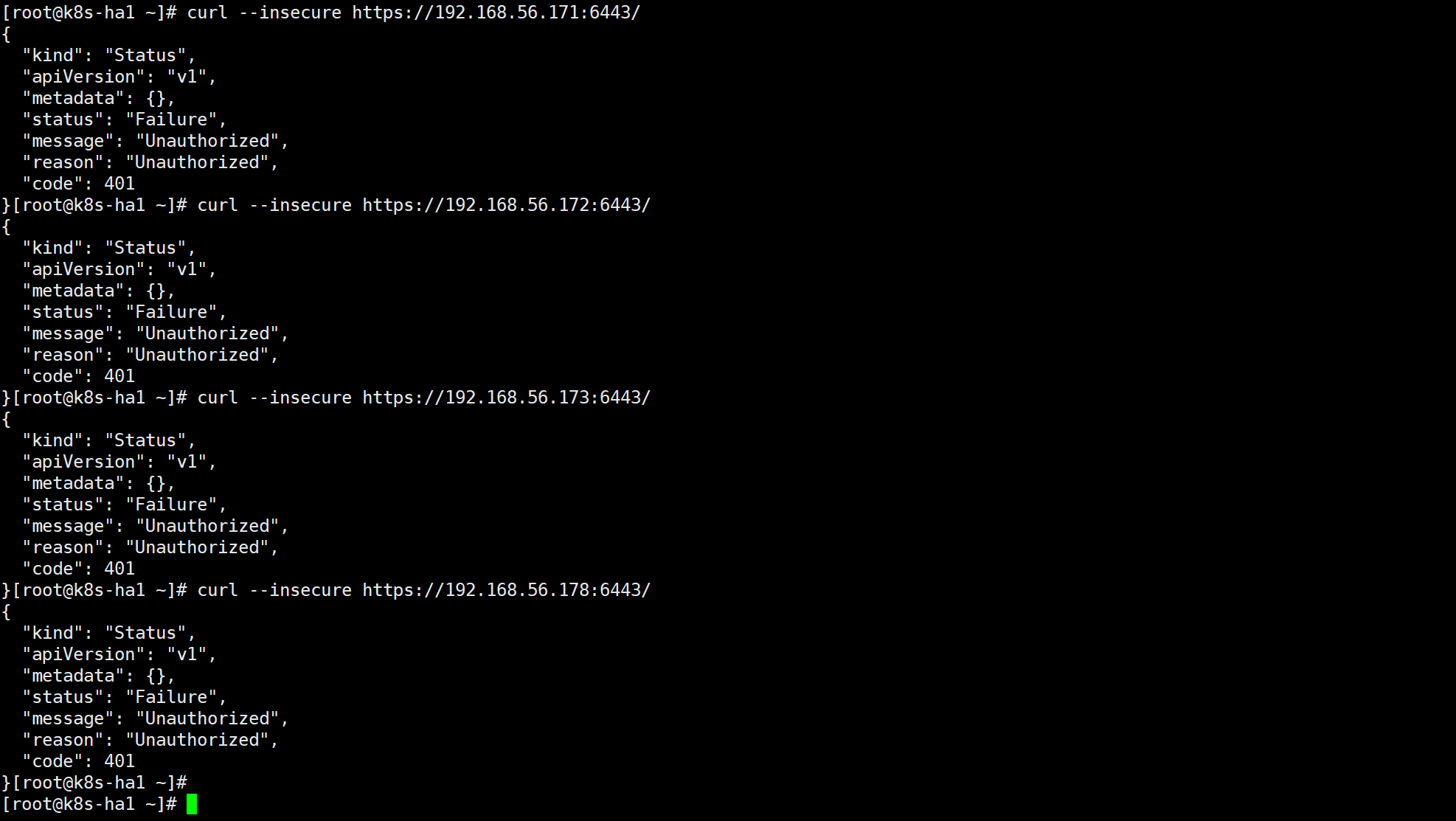

8. Verify

curl --insecure https://192.168.56.171:6443/

curl --insecure https://192.168.56.172:6443/

curl --insecure https://192.168.56.173:6443/

curl --insecure https://192.168.56.178:6443/

# Each request should return 401: curl sent no credentials, so this response is expected

4.3.2.1.3 kubectl

严格意义上来讲,kubectl 并不是 k8s-master 的组件,而是一个客户端工具,也就是说没有 kubectl,那我的 K8s 集群也是可以正常运行的。

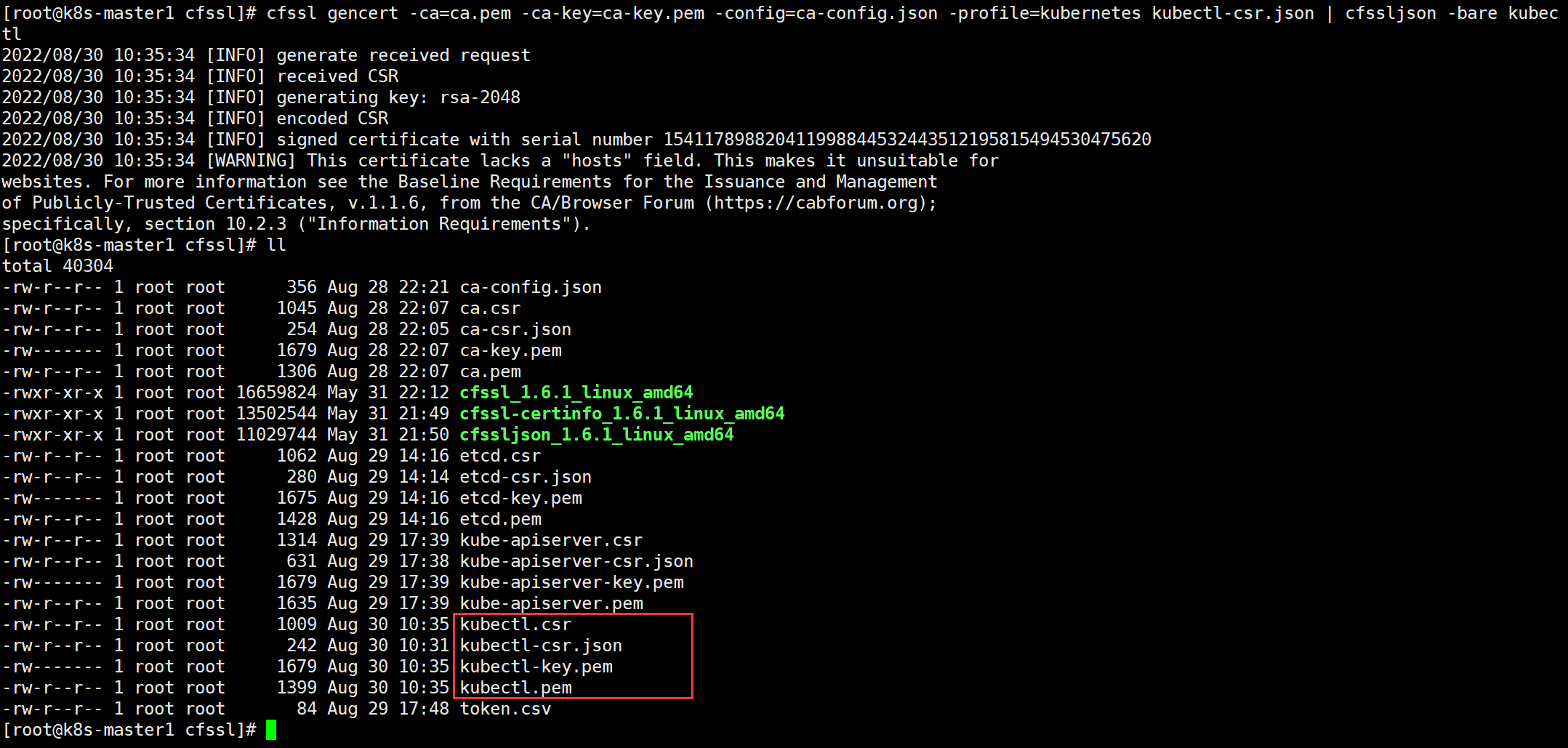

1、配置 kubectl 证书请求文件

cd /data/k8s-work/cfssl

cat > kubectl-csr.json << "EOF"

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "system"

}

]

}

EOF

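# This admin certificate is used later to generate the administrator's kubeconfig file

# RBAC is the recommended way to control roles and permissions in Kubernetes

# Kubernetes uses the certificate's CN field as the User and the O field as the Group

# "O": "system:masters" is required; otherwise the later kubectl create clusterrolebinding command fails

2. Generate the kubectl certificate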

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubectl-csr.json | cfssljson -bare kubectl

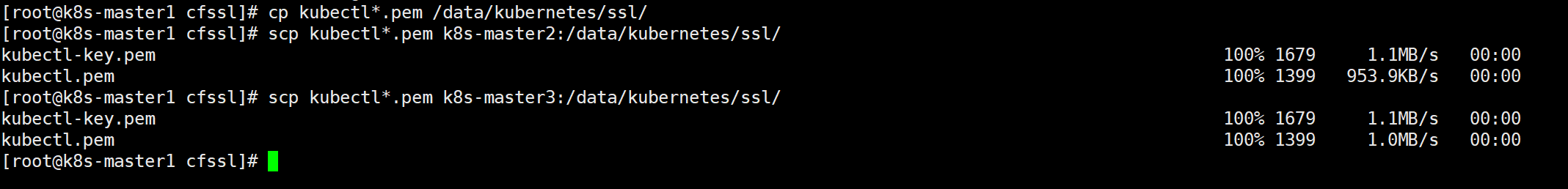

3. Copy the certificates to the k8s-master directories

# k8s-master1

cp kubectl*.pem /data/kubernetes/ssl/

# Distribute to k8s-master2

scp kubectl*.pem k8s-master2:/data/kubernetes/ssl/

# Distribute to k8s-master3

scp kubectl*.pem k8s-master3:/data/kubernetes/ssl/

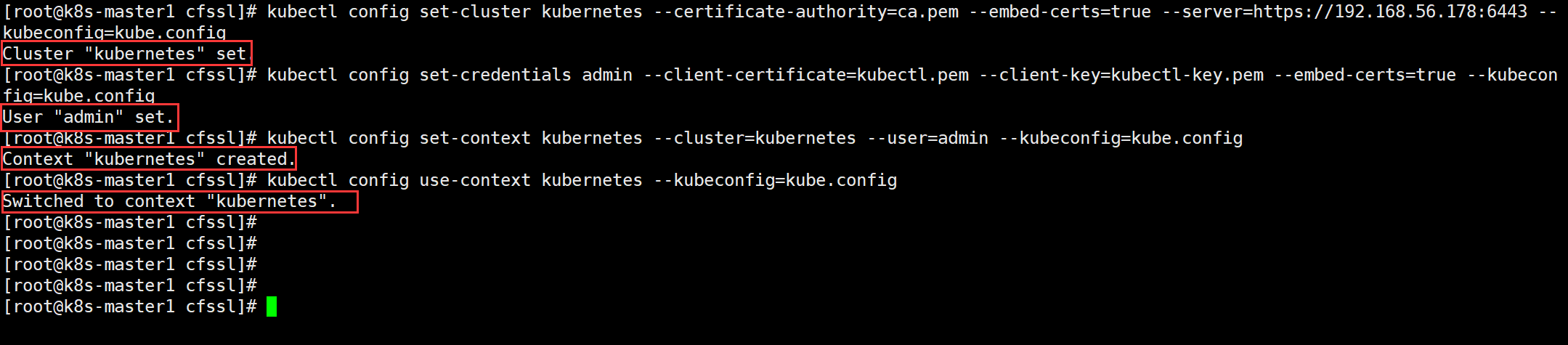

4. Generate the kubeconfig file

cd /data/k8s-work/cfssl

# 192.168.56.178 is the VIP; without master high availability, use the master node IP instead

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.56.178:6443 --kubeconfig=kube.config

kubectl config set-credentials admin --client-certificate=kubectl.pem --client-key=kubectl-key.pem --embed-certs=true --kubeconfig=kube.config

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

kubectl config use-context kubernetes --kubeconfig=kube.config

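# kube.config is the kubectl configuration file. It holds everything needed to reach the apiserver: the apiserver address, the CA certificate and the client's own certificate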

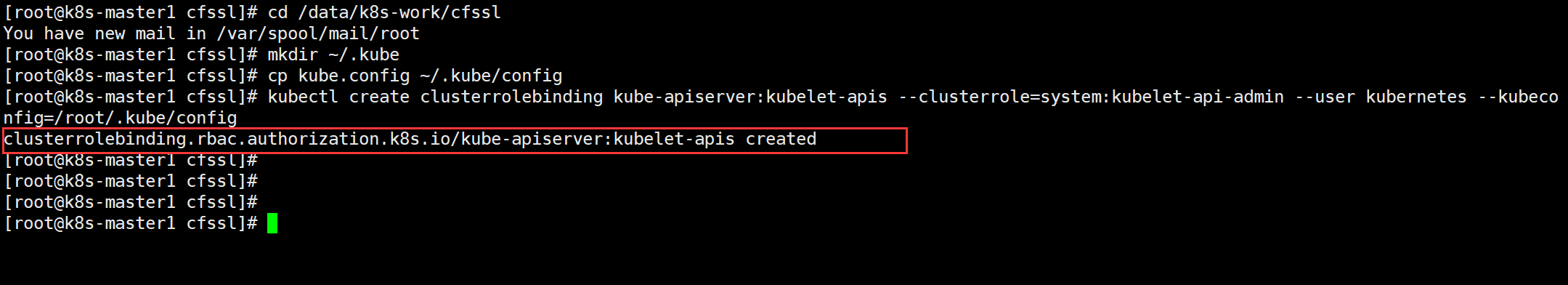

5. Install the kubeconfig file and create the role binding

The current CentOS login user is root, so we copy kube.config into that user's home directory and use it to manage the whole K8s cluster.

cd /data/k8s-work/cfssl

mkdir ~/.kube

cp kube.config ~/.kube/config

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config

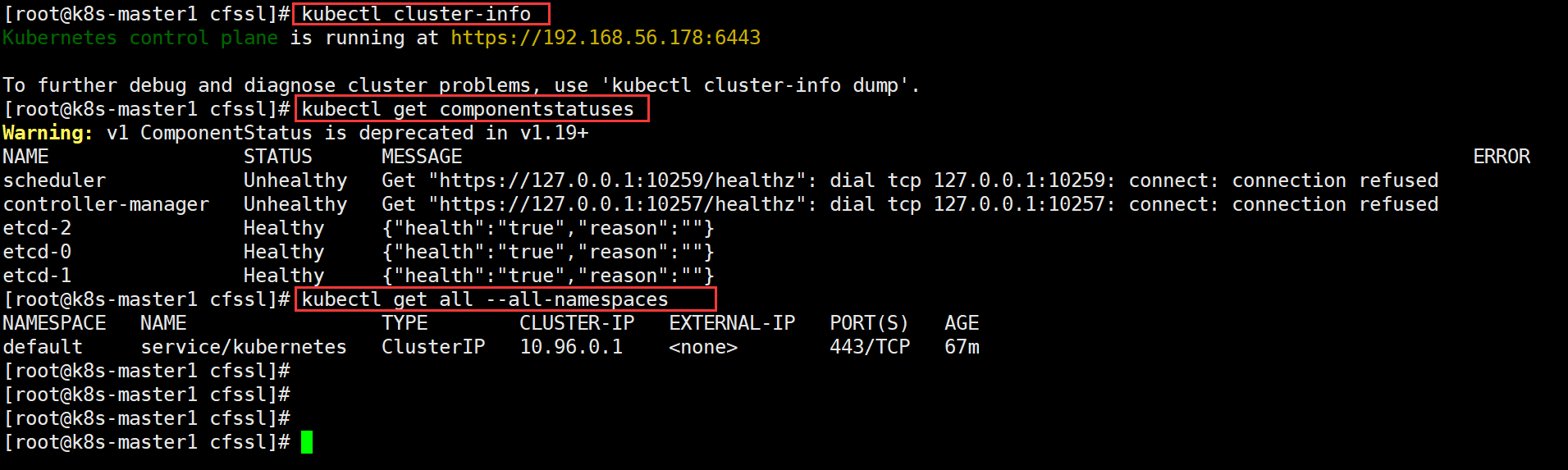

6. Verify with kubectl

View cluster information

kubectl cluster-info

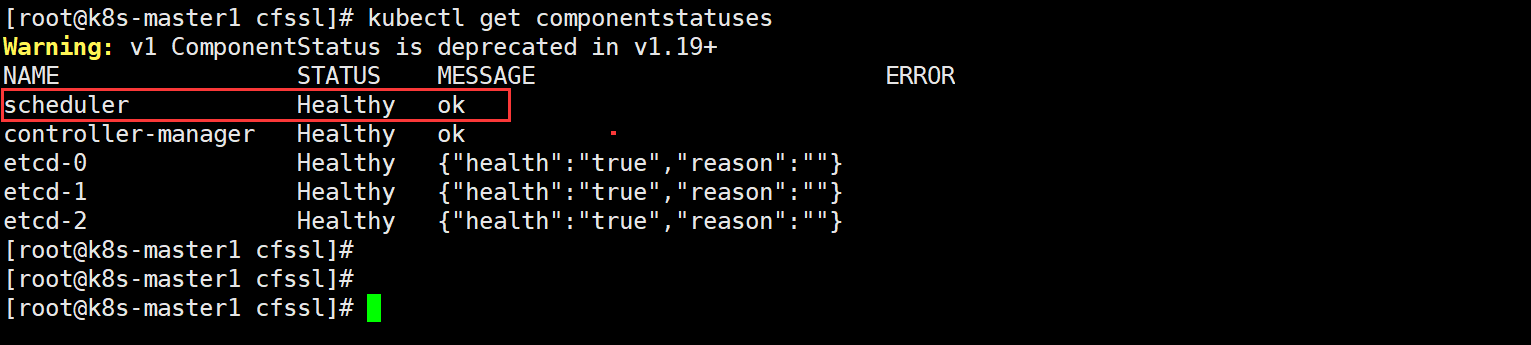

View component status. A warning notes that ComponentStatus has been deprecated since v1.19 (this cluster runs v1.24.4).

kubectl get componentstatuses

View the resource objects in all namespaces

kubectl get all --all-namespaces

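If you want the other k8s-master nodes to be able to manage the cluster as well, copy the relevant files to them.

The certificates were already distributed to the other k8s-master nodes above, so you only need to create the directory in the home directory on each of them and copy the config file prepared on k8s-master1 into it.

- First create the directory on the other k8s-master nodes

# k8s-master2:
mkdir /root/.kube

# k8s-master3:
mkdir /root/.kube

- Then distribute the config file from k8s-master1

scp /root/.kube/config k8s-master2:/root/.kube/config
scp /root/.kube/config k8s-master3:/root/.kube/config

All k8s-master nodes can now manage the K8s cluster.

kubectl command completion (run on all k8s-master nodes)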

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

source '/root/.kube/completion.bash.inc'

source $HOME/.bash_profile

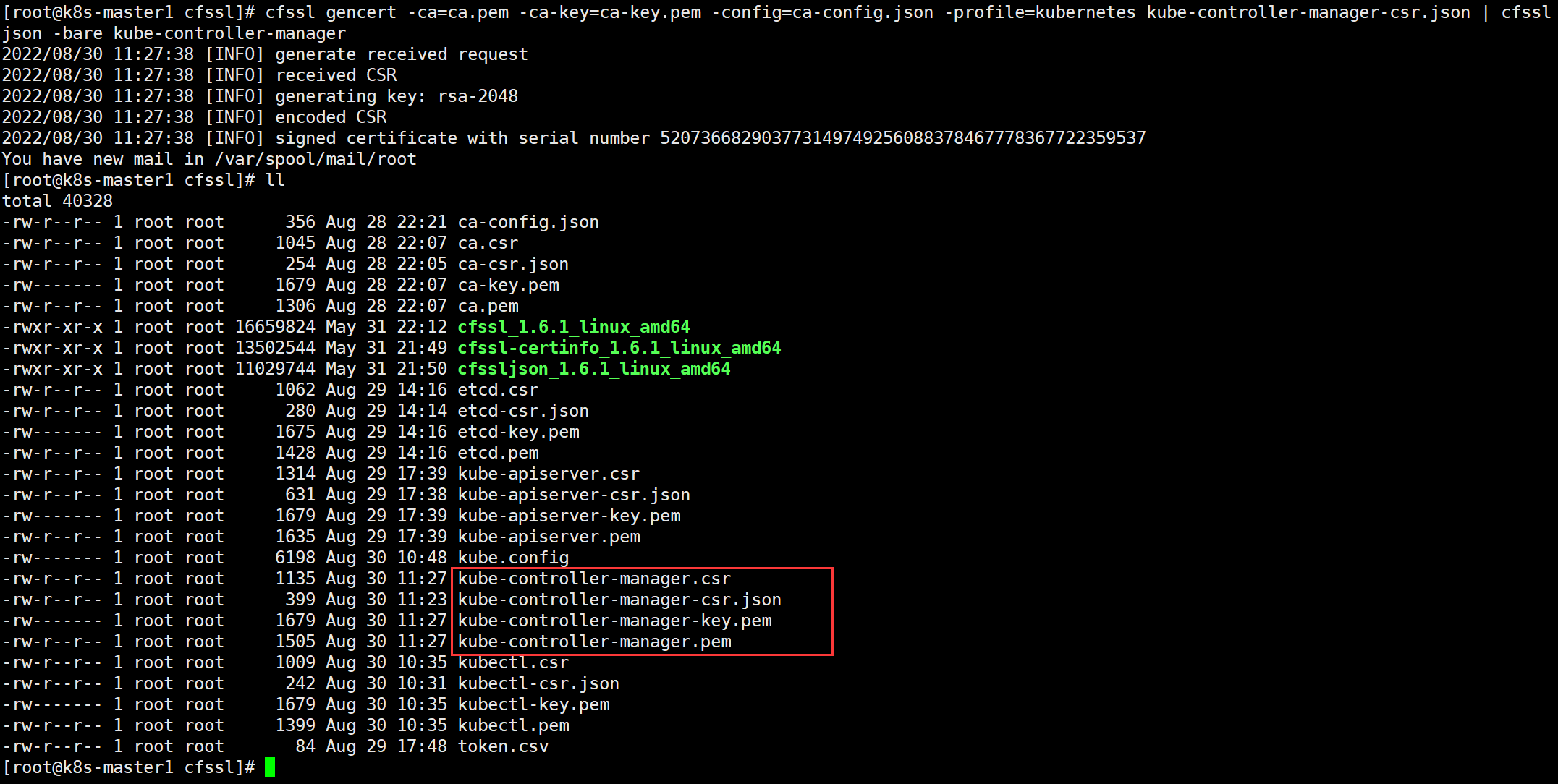

4.3.2.1.4 kube-controller-manager

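1. Create the kube-controller-manager certificate signing request file

Because the k8s-master nodes are deployed for high availability, kube-controller-manager is configured for high availability too.

As before, run everything in the cfssl directory on the k8s-master node.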

cd /data/k8s-work/cfssl

cat > kube-controller-manager-csr.json << "EOF"

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.56.171",

"192.168.56.172",

"192.168.56.173"

],

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

EOF

# The hosts list contains the IPs of all kube-controller-manager nodes

# CN is system:kube-controller-manager

# O is system:kube-controller-manager; the built-in ClusterRoleBinding of that name

# grants kube-controller-manager the permissions it needs to work

2. Generate the kube-controller-manager certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

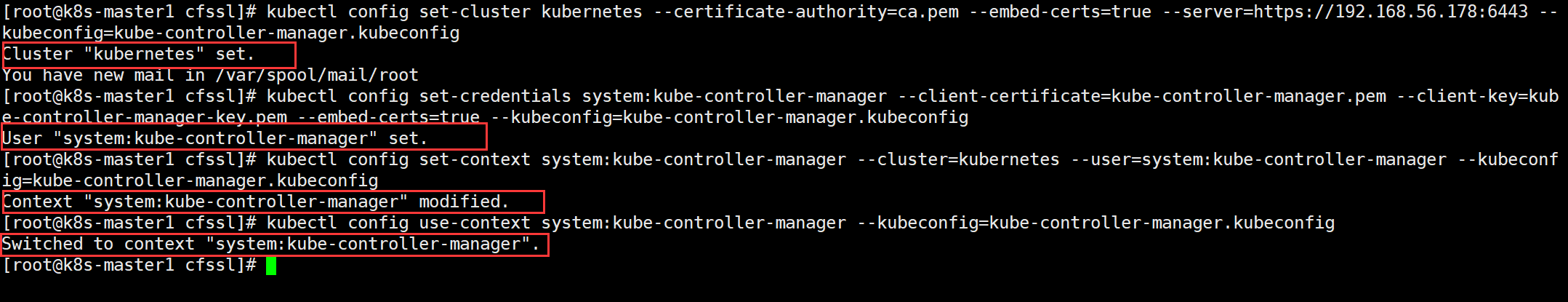

3. Generate the kube-controller-manager .kubeconfig file

As with kubectl, a component needs this configuration before it can act on the K8s cluster.

Run in the cfssl directory on k8s-master1.

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.56.178:6443 --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

4. Create the kube-controller-manager .conf file

Run in the cfssl directory on k8s-master1.

cat > kube-controller-manager.conf << "EOF"

KUBE_CONTROLLER_MANAGER_OPTS="--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/data/kubernetes/conf/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/data/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--cluster-signing-duration=87600h \

--root-ca-file=/data/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/data/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--tls-cert-file=/data/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-controller-manager \

--v=2"

EOF

# Create the kube-controller-manager log directory (run on all k8s-master nodes)

mkdir /data/kubernetes/logs/kube-controller-manager

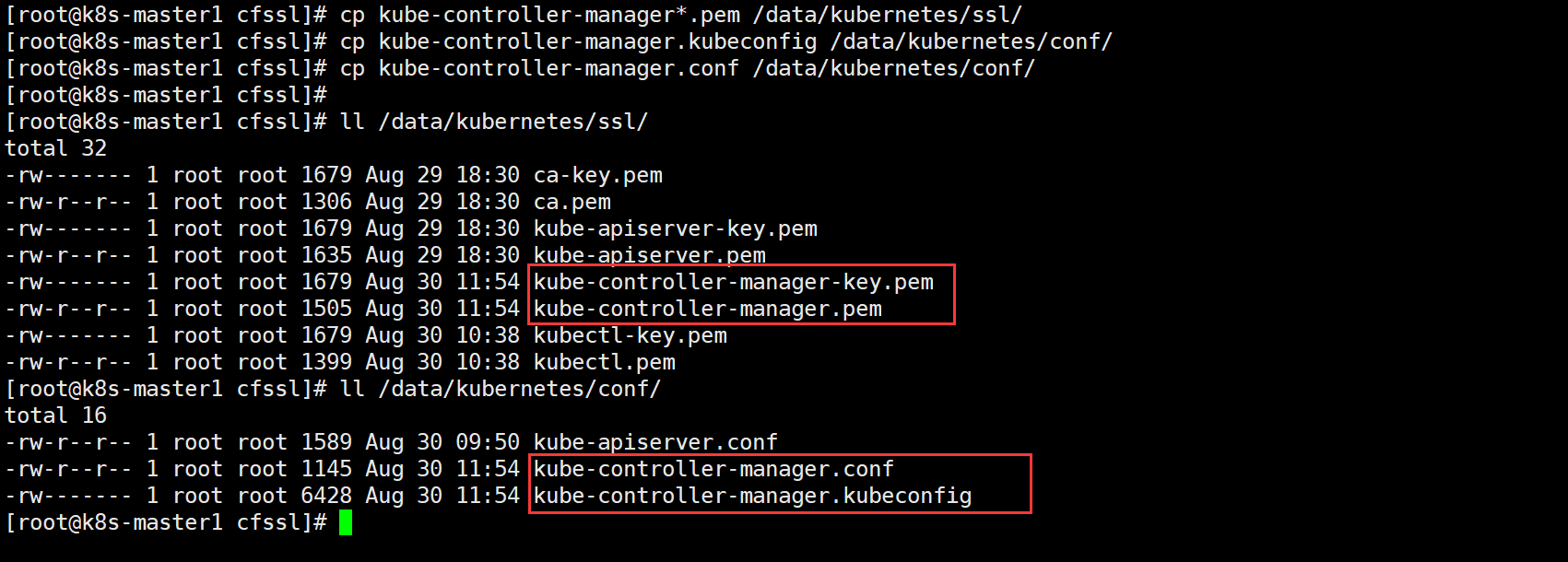

5. Copy the certificates and configuration files to the directories named in the configuration

# k8s-master1

cd /data/k8s-work/cfssl

cp kube-controller-manager*.pem /data/kubernetes/ssl/

cp kube-controller-manager.kubeconfig /data/kubernetes/conf/

cp kube-controller-manager.conf /data/kubernetes/conf/

# Distribute to k8s-master2

scp kube-controller-manager*.pem k8s-master2:/data/kubernetes/ssl/

scp kube-controller-manager.kubeconfig k8s-master2:/data/kubernetes/conf/

scp kube-controller-manager.conf k8s-master2:/data/kubernetes/conf/

# Distribute to k8s-master3

scp kube-controller-manager*.pem k8s-master3:/data/kubernetes/ssl/

scp kube-controller-manager.kubeconfig k8s-master3:/data/kubernetes/conf/

scp kube-controller-manager.conf k8s-master3:/data/kubernetes/conf/
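The per-host copy commands above follow one pattern, so they can be generated in a loop. The sketch below is a dry run under that assumption: the helper name `print_distribute_cmds` is hypothetical, and it only prints the `scp` commands (with the same paths as above) so they can be reviewed before anything is copied.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original steps): print the scp commands
# that copy one component's certificates and config files to each given host.
print_distribute_cmds() {
  component="$1"
  shift
  for host in "$@"; do
    # The glob stays inside double quotes, so it is printed literally, not expanded.
    echo "scp ${component}*.pem ${host}:/data/kubernetes/ssl/"
    echo "scp ${component}.kubeconfig ${component}.conf ${host}:/data/kubernetes/conf/"
  done
}

# Dry run for the two remaining master nodes.
print_distribute_cmds kube-controller-manager k8s-master2 k8s-master3
```

Piping the output to `sh` from /data/k8s-work/cfssl would run the copies; the same helper can be reused for kube-scheduler.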

6. Configure systemd management

Run this on every k8s-master node, or run it on k8s-master1 and then distribute the unit file to the other master nodes.

cat > /usr/lib/systemd/system/kube-controller-manager.service << "EOF"

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/data/kubernetes/conf/kube-controller-manager.conf

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

7. Start the kube-controller-manager service

systemctl daemon-reload

systemctl start kube-controller-manager.service

systemctl enable kube-controller-manager.service

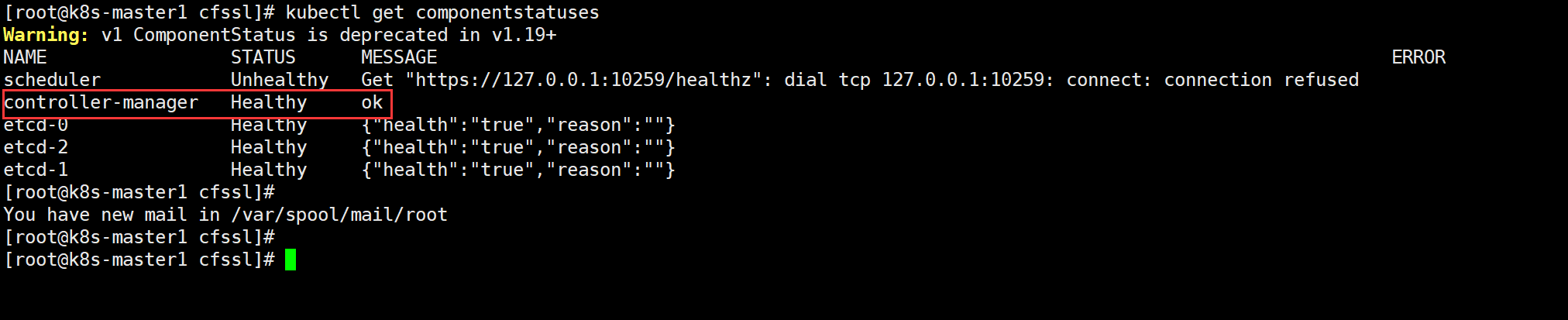

8. Verify

kubectl get componentstatuses
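`kubectl get componentstatuses` has been deprecated since Kubernetes 1.19, so it can help to probe the component's own health endpoint as a second check. A minimal sketch, assuming the default secure ports (10257 for kube-controller-manager, 10259 for kube-scheduler) and that `/healthz` is reachable without credentials, which is the default; the function names are hypothetical.

```shell
#!/bin/sh
# Map a control-plane component to its local /healthz URL.
# Assumption: default secure ports, queried on the loopback address.
healthz_url() {
  case "$1" in
    kube-controller-manager) echo "https://127.0.0.1:10257/healthz" ;;
    kube-scheduler)          echo "https://127.0.0.1:10259/healthz" ;;
    *) echo "unknown component: $1" >&2; return 1 ;;
  esac
}

# Probe the endpoint; -k skips verification of the serving certificate.
check_component() {
  url="$(healthz_url "$1")" || return 1
  curl -sk --max-time 5 "$url"
}

# Show which URL would be probed on a master node.
healthz_url kube-controller-manager
```

On a master node, `check_component kube-controller-manager` should print `ok` when the service is healthy.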

4.3.2.1.5 kube-scheduler

1. Create the kube-scheduler certificate signing request (CSR) file

cd /data/k8s-work/cfssl

cat > kube-scheduler-csr.json << "EOF"

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.56.171",

"192.168.56.172",

"192.168.56.173"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

EOF

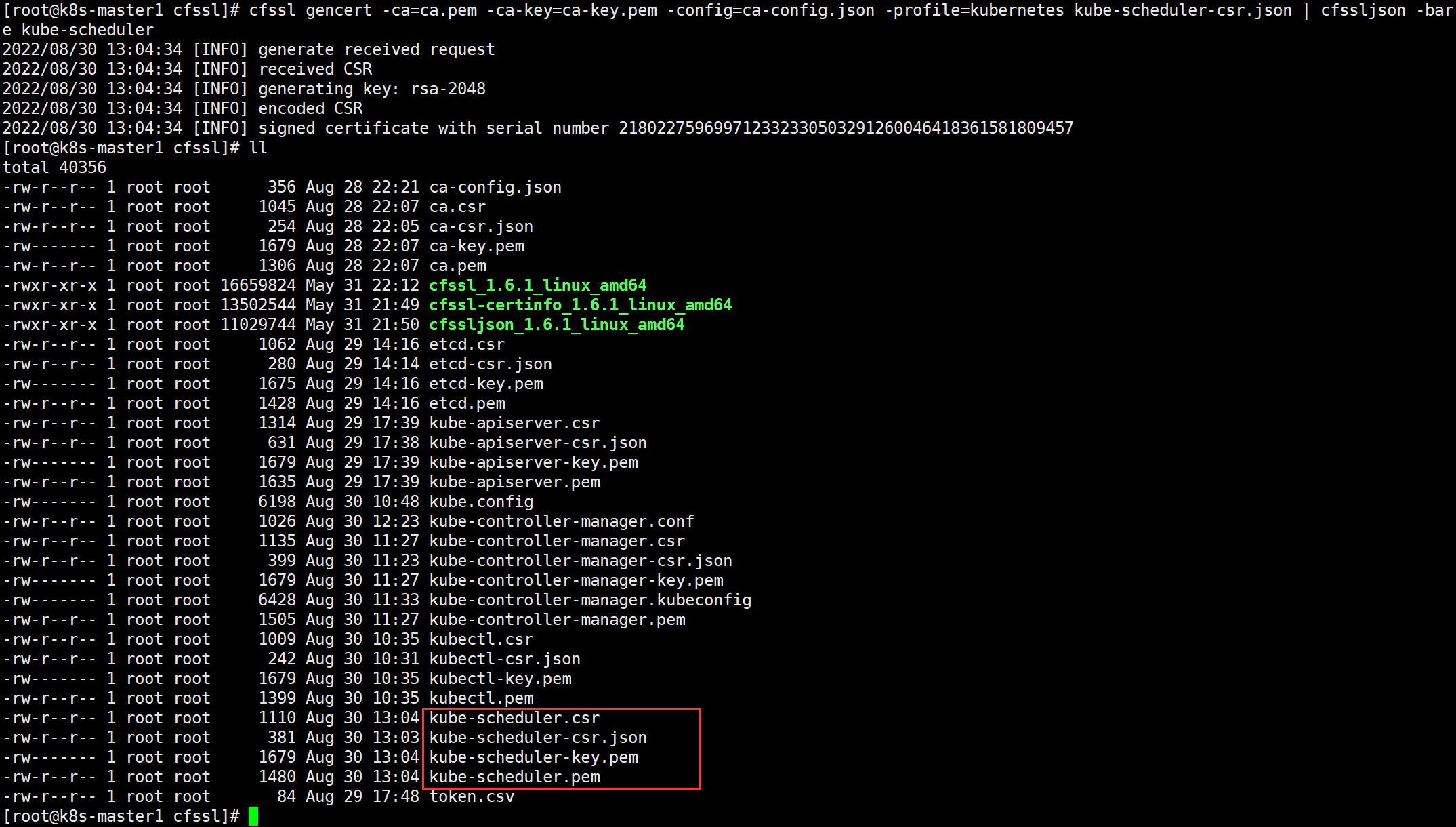

2. Generate the kube-scheduler certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

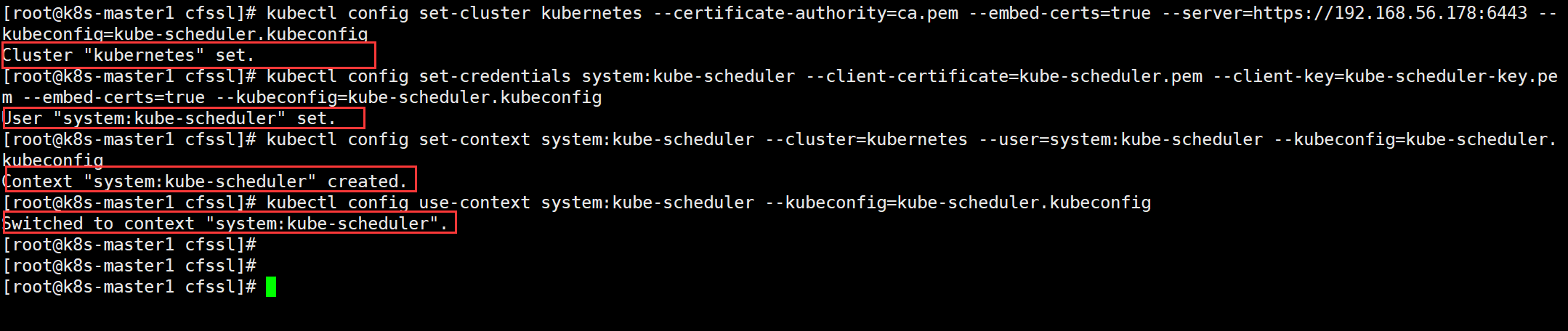

3. Generate the kube-scheduler .kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.56.178:6443 --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
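Before distributing a generated kubeconfig it is worth confirming that it points at the VIP and selects the expected context. The sketch below reads those two fields with `awk`, so it works even where `kubectl` is not installed; `kubeconfig_field` is a hypothetical helper, and the sample file only mimics the layout that `kubectl config` writes.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the first "key: value" line in a kubeconfig.
kubeconfig_field() {
  awk -v key="$2:" '$1 == key { print $2; exit }' "$1"
}

# Illustrative sample with the same layout as a generated kubeconfig.
sample="$(mktemp)"
cat > "$sample" << "EOF"
apiVersion: v1
clusters:
- cluster:
    server: https://192.168.56.178:6443
  name: kubernetes
current-context: system:kube-scheduler
kind: Config
EOF

kubeconfig_field "$sample" server
kubeconfig_field "$sample" current-context
rm -f "$sample"
```

Against the real file, `kubeconfig_field kube-scheduler.kubeconfig server` should report the VIP address used in the `set-cluster` command above.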

4. Create the kube-scheduler .conf configuration file

cd /data/k8s-work/cfssl

cat > kube-scheduler.conf << "EOF"

KUBE_SCHEDULER_OPTS="--kubeconfig=/data/kubernetes/conf/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-scheduler \

--v=2"

EOF

# Create the kube-scheduler log directory (run on every k8s-master node)

mkdir -p /data/kubernetes/logs/kube-scheduler

5. Copy the certificates and configuration files to the directories referenced in the configuration

# k8s-master1

cd /data/k8s-work/cfssl

cp kube-scheduler*.pem /data/kubernetes/ssl/

cp kube-scheduler.kubeconfig /data/kubernetes/conf/

cp kube-scheduler.conf /data/kubernetes/conf/

# Distribute to k8s-master2

scp kube-scheduler*.pem k8s-master2:/data/kubernetes/ssl/

scp kube-scheduler.kubeconfig k8s-master2:/data/kubernetes/conf/

scp kube-scheduler.conf k8s-master2:/data/kubernetes/conf/

# Distribute to k8s-master3

scp kube-scheduler*.pem k8s-master3:/data/kubernetes/ssl/

scp kube-scheduler.kubeconfig k8s-master3:/data/kubernetes/conf/

scp kube-scheduler.conf k8s-master3:/data/kubernetes/conf/

6. Configure systemd management

Run this on every k8s-master node, or run it on k8s-master1 and then distribute the unit file to the other master nodes.

cat > /usr/lib/systemd/system/kube-scheduler.service << "EOF"

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/data/kubernetes/conf/kube-scheduler.conf

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

7. Start the kube-scheduler service

systemctl daemon-reload

systemctl start kube-scheduler.service

systemctl enable kube-scheduler.service

8. Verify

kubectl get componentstatuses

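At this point, all k8s-master components are deployed and running. Next, deploy the k8s-work node components.

4.3.2.2 Work nodes

Required components on k8s-work nodes: kubelet, kube-proxy

Since 1.20, Kubernetes no longer treats Docker as the only runtime (dockershim was deprecated in that release) and containerd is supported as well. From 1.24 onward dockershim has been removed entirely; Docker can still be used, but only through another path such as cri-dockerd. Either way, a work node must have a container runtime.

4.3.2.2.1 Deployment notes

See the official explanation.

In this guide I deploy with Docker.

4.3.2.2.2 Distributing the components

The Kubernetes binary package downloaded earlier contains every component for both master and work nodes, so we only need to distribute them from a k8s-master node to the work nodes.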

cd /data/k8s-work/k8s/kubernetes/server/bin

scp kubelet kube-proxy k8s-work1:/usr/bin/

scp kubelet kube-proxy k8s-work2:/usr/bin/

4.3.2.2.3 docker

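1. Install the Docker engine

sh docker_install.sh

# Send me a private message if you need the install script

2. Change the cgroup driver

kubelet and Docker must use the same cgroup driver; the officially recommended one is systemd.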

cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://q1rw9tzz.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

Restart Docker after the change:

systemctl restart docker.service
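Because a mismatch between the Docker and kubelet cgroup drivers prevents pods from starting, a quick check of daemon.json can catch it early. A minimal sketch; `uses_systemd_cgroup` is a hypothetical helper that only inspects the file content, not the running daemon.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given daemon.json selects the systemd cgroup driver.
uses_systemd_cgroup() {
  grep -q 'native\.cgroupdriver=systemd' "$1"
}

# Check the real file when it exists; otherwise just report that it is missing.
daemon_json="/etc/docker/daemon.json"
if [ -f "$daemon_json" ]; then
  if uses_systemd_cgroup "$daemon_json"; then
    echo "docker cgroup driver: systemd"
  else
    echo "docker cgroup driver is not systemd; kubelet.json expects systemd"
  fi
else
  echo "$daemon_json not found"
fi
```

`docker info --format '{{.CgroupDriver}}'` reports the driver the running daemon actually uses.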

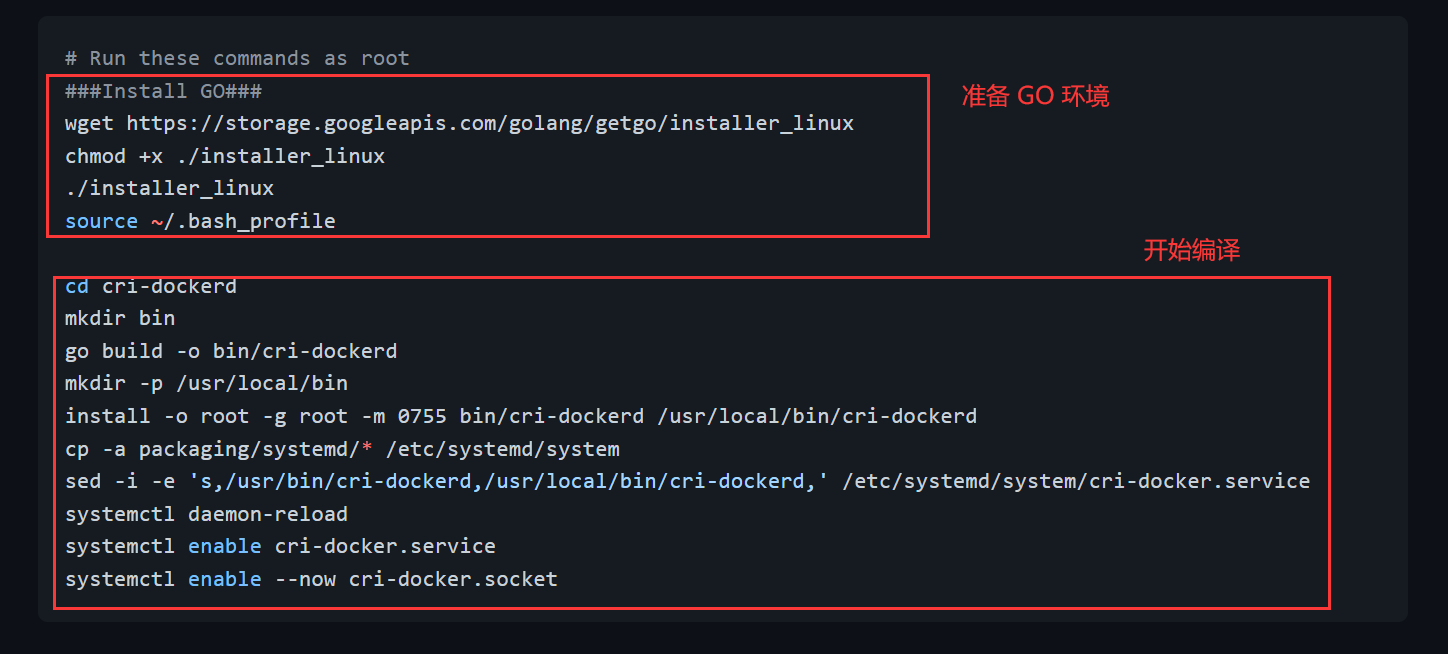

4.3.2.2.4 cri-dockerd

To have Kubernetes orchestrate Docker containers, install cri-dockerd, which takes the place of dockershim.

cri-dockerd source repository: https://github.com/Mirantis/cri-dockerd

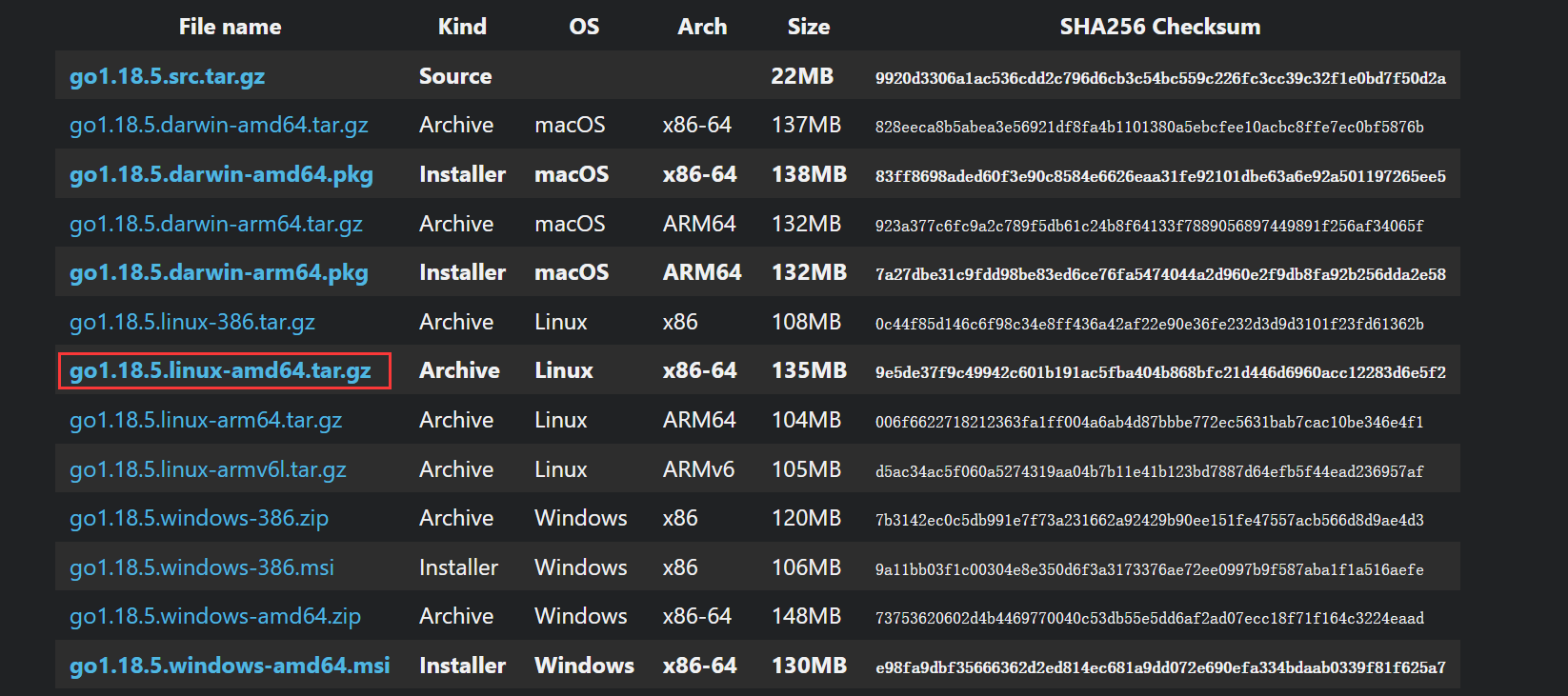

1. Install the Go environment

Run on the k8s-work nodes.

cri-dockerd is written in Go, so the k8s-work hosts need a Go toolchain to build it. Go binary downloads: https://golang.google.cn/dl/

# k8s-work1

tar xzf go1.18.5.linux-amd64.tar.gz -C /opt/

ln -s /opt/go/bin/* /usr/bin/

# k8s-work2

tar xzf go1.18.5.linux-amd64.tar.gz -C /opt/

ln -s /opt/go/bin/* /usr/bin/

2. Clone the source and build

Just follow the official documentation:

# The Go environment is already in place

git clone https://github.com/Mirantis/cri-dockerd.git

cd cri-dockerd

mkdir bin

go build -o bin/cri-dockerd

mkdir -p /usr/local/bin

install -o root -g root -m 0755 bin/cri-dockerd /usr/local/bin/cri-dockerd

cp -a packaging/systemd/* /etc/systemd/system

sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service

systemctl daemon-reload

systemctl enable cri-docker.service

systemctl enable --now cri-docker.socket

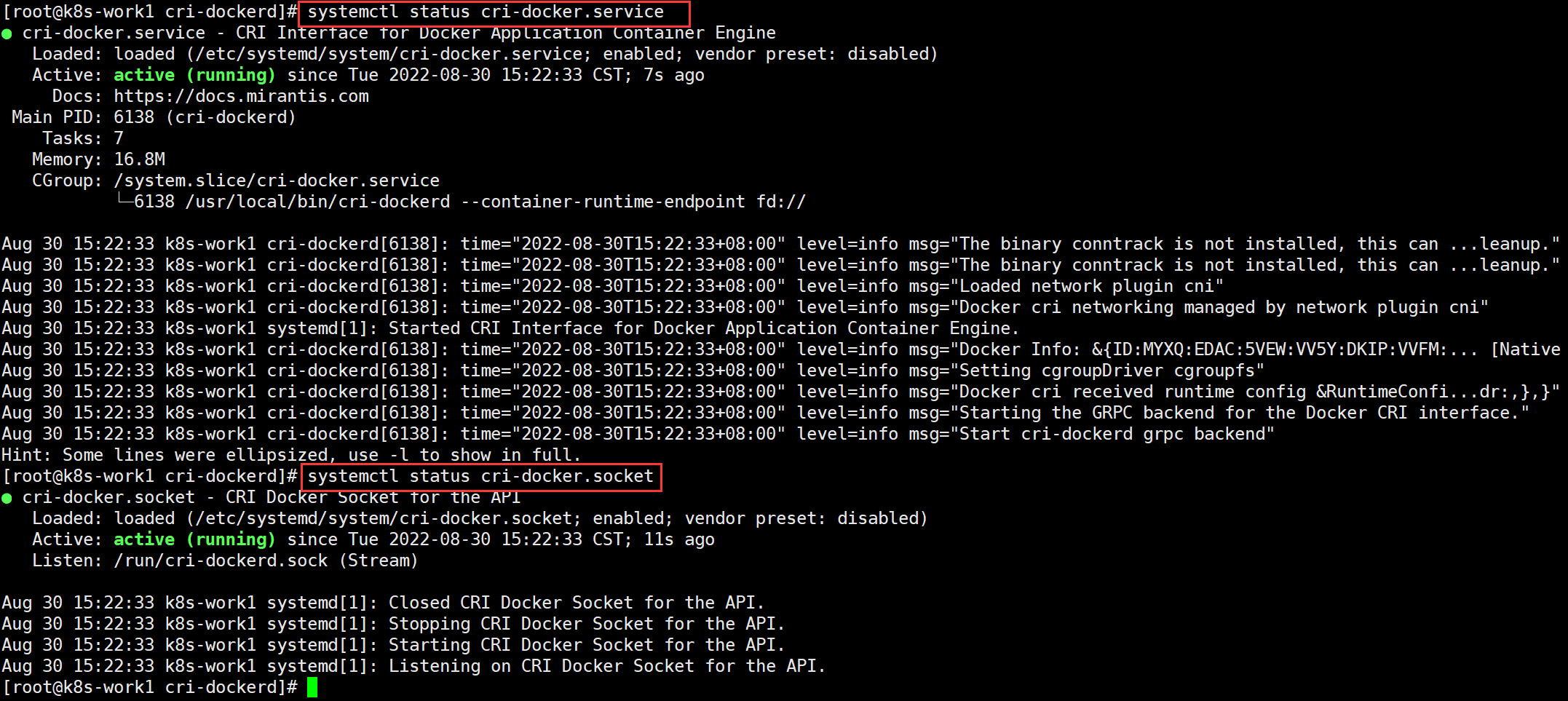

Finally, check that it started successfully (in the screenshot, the service is up and listening).

3. Modify the cri-docker systemd unit file

- Before

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --container-runtime-endpoint fd://

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

- After

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target
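The only difference between the two versions is the pair of flags appended to `ExecStart`, so the change can be scripted. A sketch under that assumption; `patch_cri_dockerd_unit` is a hypothetical helper, and the demo runs on a scratch file rather than on the installed unit.

```shell
#!/bin/sh
# Hypothetical helper: append the two extra flags to the ExecStart line of a
# cri-docker unit file, unless --network-plugin is already present.
patch_cri_dockerd_unit() {
  unit="$1"
  extra="--network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7"
  if grep -q -e '--network-plugin' "$unit"; then
    return 0
  fi
  tmp="$(mktemp)"
  # "s|$| ...|" appends text at the end of every line that starts with ExecStart=.
  sed "/^ExecStart=/ s|\$| ${extra}|" "$unit" > "$tmp" && cat "$tmp" > "$unit"
  rm -f "$tmp"
}

# Demonstrate on a scratch copy rather than on /etc/systemd/system/cri-docker.service.
demo="$(mktemp)"
echo 'ExecStart=/usr/local/bin/cri-dockerd --container-runtime-endpoint fd://' > "$demo"
patch_cri_dockerd_unit "$demo"
cat "$demo"
rm -f "$demo"
```

After patching the real unit file, run `systemctl daemon-reload` and restart cri-docker.service so the new flags take effect.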

4.3.2.2.5 kubelet

Run this on k8s-master1 as well.

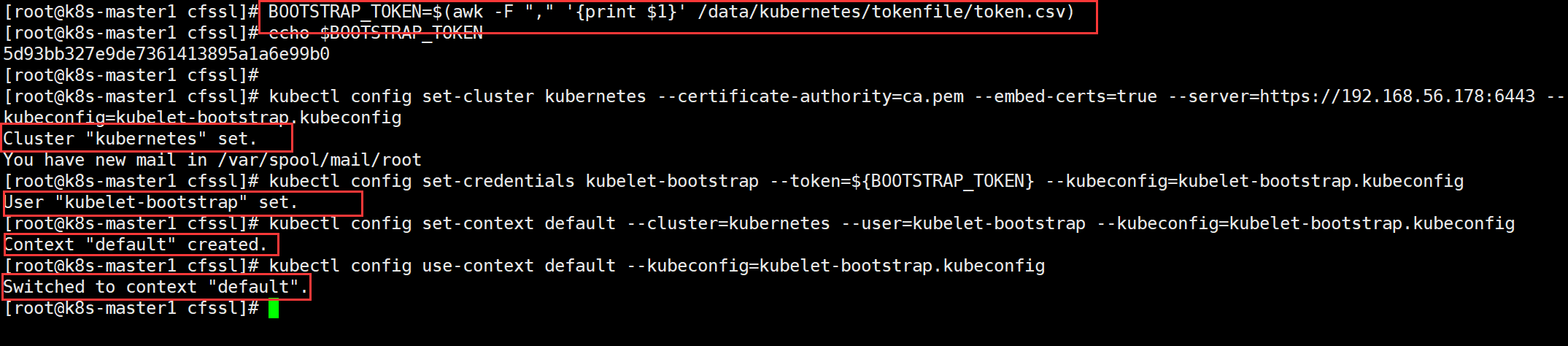

1. Create the kubelet .kubeconfig file

cd /data/k8s-work/cfssl/

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /data/kubernetes/tokenfile/token.csv)

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.56.178:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig
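The `awk` call above takes the first comma-separated field of token.csv as the bootstrap token. A small sketch of that extraction on a scratch file; the token value and the remaining columns are made up for illustration (the layout follows the static token file format: token, user, uid, groups), and `first_csv_field` is a hypothetical name. Unlike the one-liner above, it reads only the first row.

```shell
#!/bin/sh
# Hypothetical helper mirroring the awk call above: print field 1 of the first CSV row.
first_csv_field() {
  awk -F "," 'NR == 1 { print $1 }' "$1"
}

# Scratch file with a made-up token, standing in for /data/kubernetes/tokenfile/token.csv.
demo="$(mktemp)"
echo '0123456789abcdef0123456789abcdef,kubelet-bootstrap,10001,"system:kubelet-bootstrap"' > "$demo"

BOOTSTRAP_TOKEN="$(first_csv_field "$demo")"
echo "token length: ${#BOOTSTRAP_TOKEN}"
rm -f "$demo"
```

An empty `BOOTSTRAP_TOKEN` at this point usually means the path or the file format is wrong, which is easier to catch here than after the kubeconfig has been distributed.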

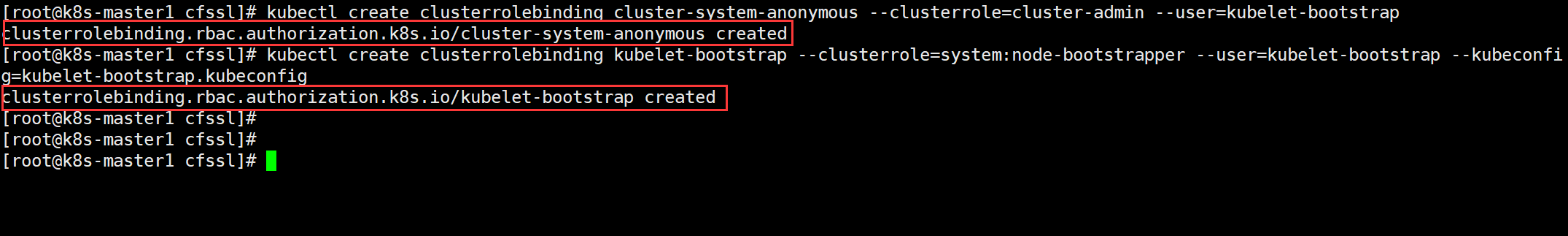

Bind roles

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

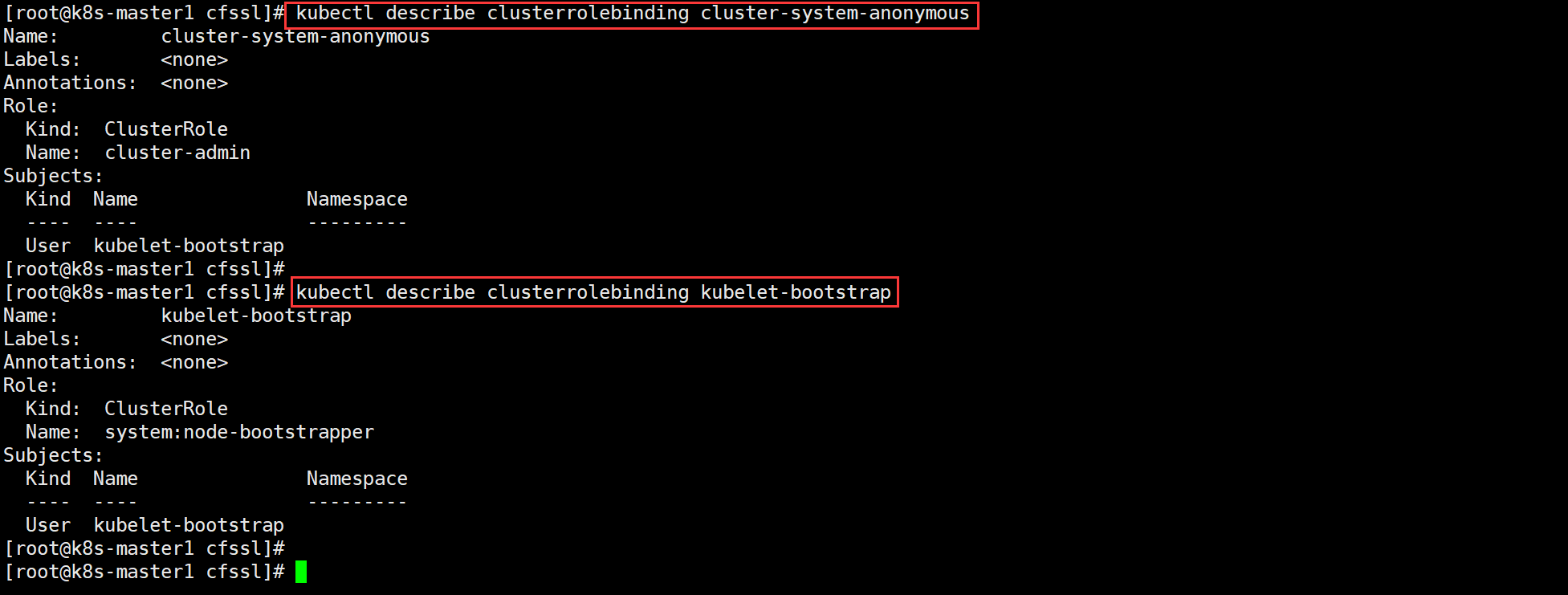

Basic verification

kubectl describe clusterrolebinding cluster-system-anonymous

kubectl describe clusterrolebinding kubelet-bootstrap

4. Create the kubelet configuration file (kubelet.json)

cat > kubelet.json << "EOF"

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/data/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.56.174",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.96.0.2"]

}

EOF

# address: the IP of the corresponding work node (remember to change it after distributing the file to the other work nodes)
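Since the file is written with the k8s-work1 address, each other node needs its own copy with the IP replaced. A minimal sketch of that substitution; `render_for_node` is a hypothetical helper and the demo uses a one-line scratch fragment instead of the real kubelet.json.

```shell
#!/bin/sh
# Hypothetical helper: print a copy of a config file with the k8s-work1 address
# (192.168.56.174, as used above) replaced by another node's IP.
render_for_node() {
  sed "s/192\.168\.56\.174/$2/g" "$1"
}

# Demonstrate on a scratch fragment instead of the real kubelet.json.
demo="$(mktemp)"
echo '"address": "192.168.56.174",' > "$demo"
render_for_node "$demo" 192.168.56.175
rm -f "$demo"
```

For example, `render_for_node kubelet.json 192.168.56.175 > kubelet-work2.json` (an illustrative file name) would produce the variant for k8s-work2.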

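5. Copy the certificate and configuration files to the corresponding directories on the work nodes

# First create the directories on each k8s-work node

mkdir -p /data/kubernetes/{conf,ssl,logs/kubelet}

# Distribute to k8s-work1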

scp kubelet-bootstrap.kubeconfig kubelet.json k8s-work1:/data/kubernetes/conf/

scp ca.pem k8s-work1:/data/kubernetes/ssl/

# Distribute to k8s-work2

scp kubelet-bootstrap.kubeconfig kubelet.json k8s-work2:/data/kubernetes/conf/

scp ca.pem k8s-work2:/data/kubernetes/ssl/

6. Configure systemd management

Run on every k8s-work node.

cat > /usr/lib/systemd/system/kubelet.service << "EOF"

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/bin/kubelet \

--bootstrap-kubeconfig=/data/kubernetes/conf/kubelet-bootstrap.kubeconfig \

--cert-dir=/data/kubernetes/ssl \

--kubeconfig=/data/kubernetes/conf/kubelet.kubeconfig \

--config=/data/kubernetes/conf/kubelet.json \

--container-runtime=remote \

--container-runtime-endpoint=unix:///var/run/cri-dockerd.sock \

--rotate-certificates \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kubelet \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

7. Start the kubelet service

systemctl daemon-reload

systemctl start kubelet.service

systemctl enable kubelet.service

8. Verify

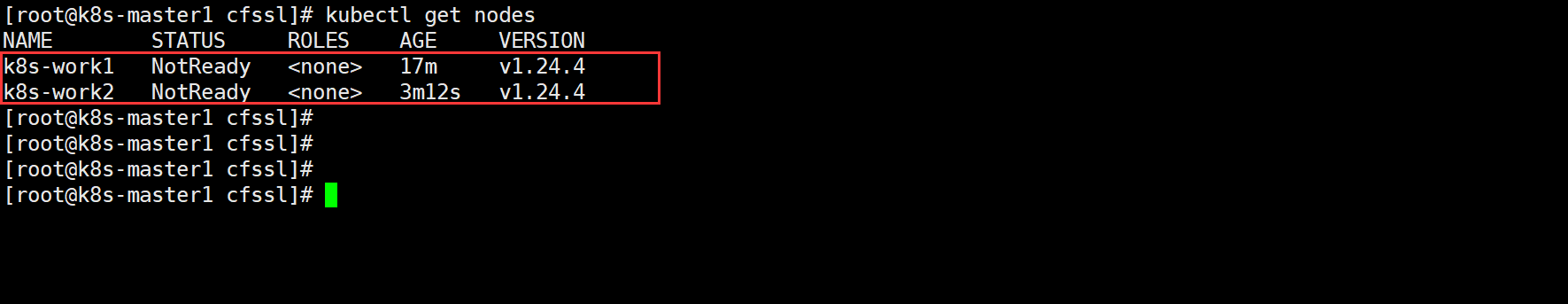

kubectl get nodes

# Note: my master nodes do not run workloads, so they do not appear in this list

4.3.2.2.6 kube-proxy

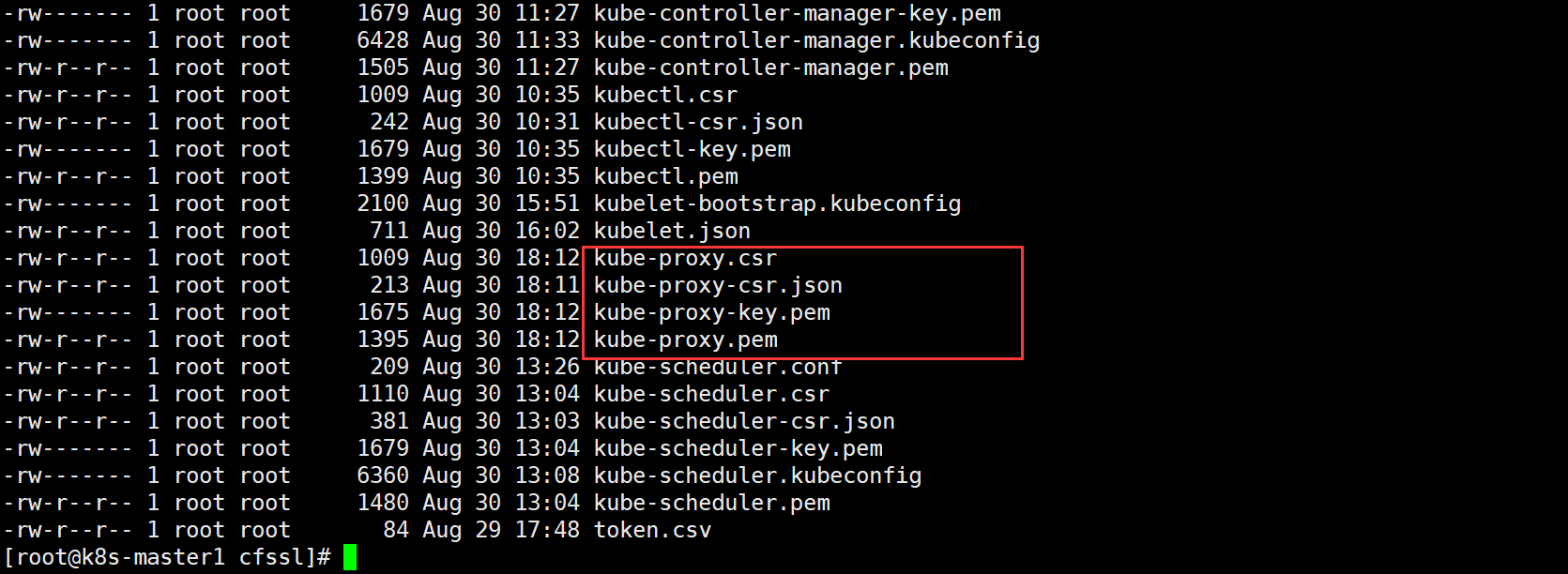

1. Create the kube-proxy certificate signing request (CSR) file

cd /data/k8s-work/cfssl

cat > kube-proxy-csr.json << "EOF"

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "xgxy",

"OU": "ops"

}

]

}

EOF

2. Generate the kube-proxy certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

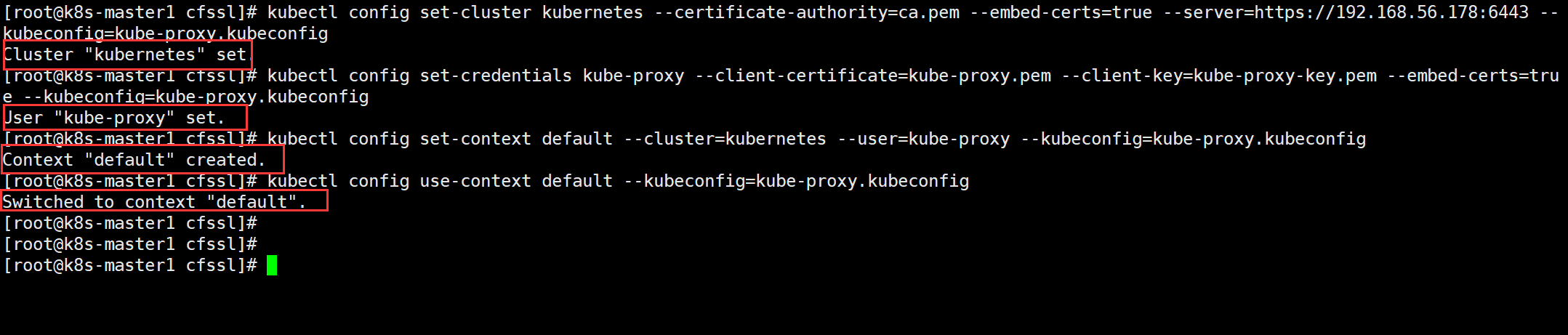

3. Generate the kube-proxy .kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.56.178:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

4. Create the kube-proxy .yaml configuration file

cd /data/k8s-work/cfssl

cat > kube-proxy.yaml << "EOF"

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 192.168.56.174

clientConnection:

  kubeconfig: /data/kubernetes/conf/kube-proxy.kubeconfig

clusterCIDR: 10.244.0.0/16

healthzBindAddress: 192.168.56.174:10256

kind: KubeProxyConfiguration

metricsBindAddress: 192.168.56.174:10249

mode: "ipvs"

EOF
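`mode: "ipvs"` relies on the IPVS kernel modules being available on the node; without them kube-proxy may fall back to iptables or fail to start, depending on the version. A sketch of a pre-flight check, assuming the usual module set (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack); `missing_ipvs_modules` is a hypothetical helper, and modules compiled into the kernel will not show up in /proc/modules.

```shell
#!/bin/sh
# Hypothetical helper: print each IPVS-related module that is absent from a
# modules listing in /proc/modules format (module name in the first column).
missing_ipvs_modules() {
  listing="${1:-/proc/modules}"
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$listing" || echo "$mod"
  done
}

# Report for the current host, if the listing is available.
if [ -r /proc/modules ]; then
  missing_ipvs_modules /proc/modules
fi
```

Any module it prints can be loaded with `modprobe` before kube-proxy is started.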

5. Copy the certificates and configuration files to the directories referenced in the configuration

# Distribute to k8s-work1

scp kube-proxy*.pem k8s-work1:/data/kubernetes/ssl/

scp kube-proxy.kubeconfig kube-proxy.yaml k8s-work1:/data/kubernetes/conf/

# Distribute to k8s-work2

scp kube-proxy*.pem k8s-work2:/data/kubernetes/ssl/

scp kube-proxy.kubeconfig kube-proxy.yaml k8s-work2:/data/kubernetes/conf/

# Remember to change the IP addresses after distribution

6. Configure systemd management

Run on every k8s-work node.

cat > /usr/lib/systemd/system/kube-proxy.service << "EOF"

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/bin/kube-proxy \

--config=/data/kubernetes/conf/kube-proxy.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-proxy \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Create the kube-proxy log directory (run on every k8s-work node)

mkdir -p /data/kubernetes/logs/kube-proxy

7. Start the kube-proxy service

systemctl daemon-reload

systemctl start kube-proxy.service

systemctl enable kube-proxy.service

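At this point, all k8s-work components are deployed and running. Next, deploy the k8s network component.

4.4 Deploying the network component (Calico)

For a highly available cluster, it is enough to run this on any one master node, because Calico is deployed as containers on the work nodes.

Calico is a pure layer-3 data center networking solution and one of the mainstream network plugins for Kubernetes. The network plan in section 3.4 defined the pod network; Calico is what allocates pod IPs from that range.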

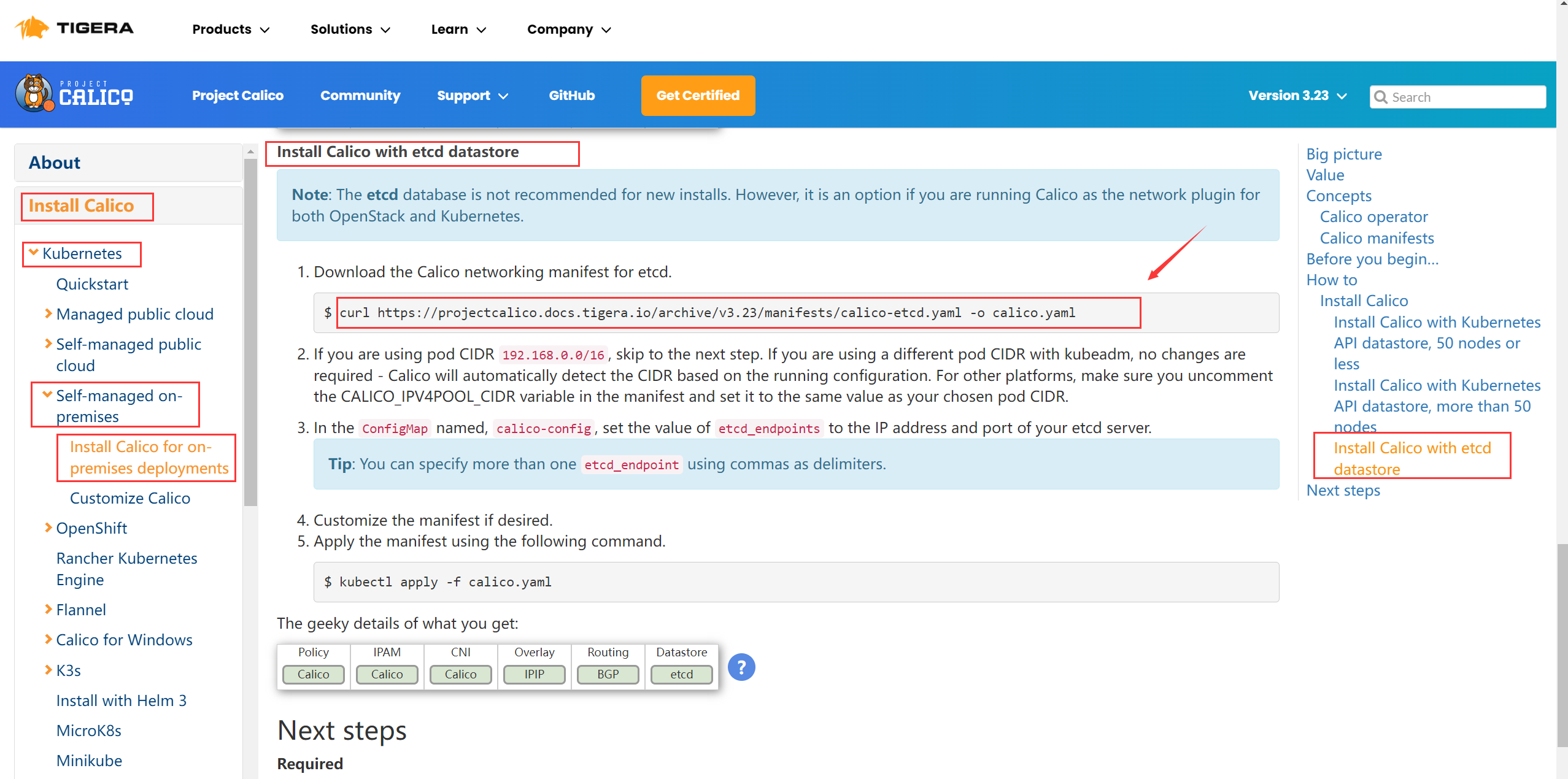

4.4.1 Download the yaml file

yaml download site: https://docs.projectcalico.org/

# Also run on k8s-master1

# First create a working directory for the yaml file

mkdir /data/k8s-work/calico && cd /data/k8s-work/calico

curl https://projectcalico.docs.tigera.io/archive/v3.23/manifests/calico-etcd.yaml -o calico.yaml

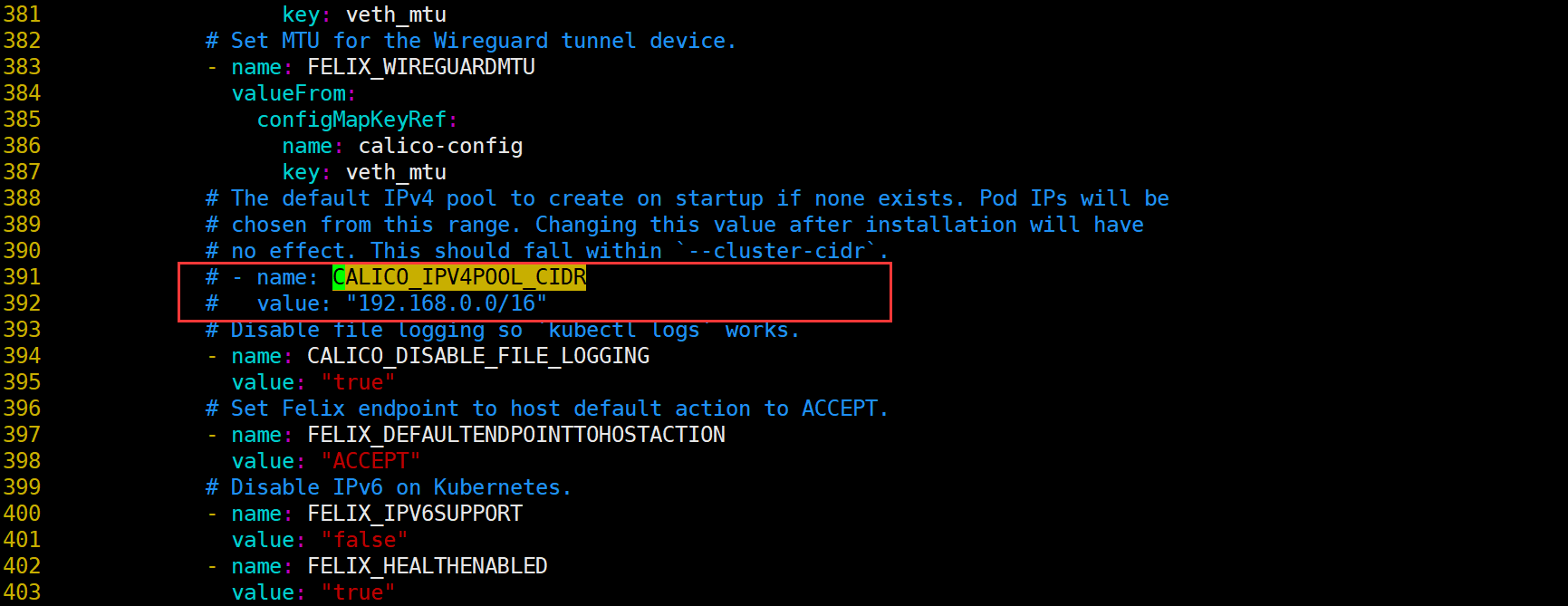

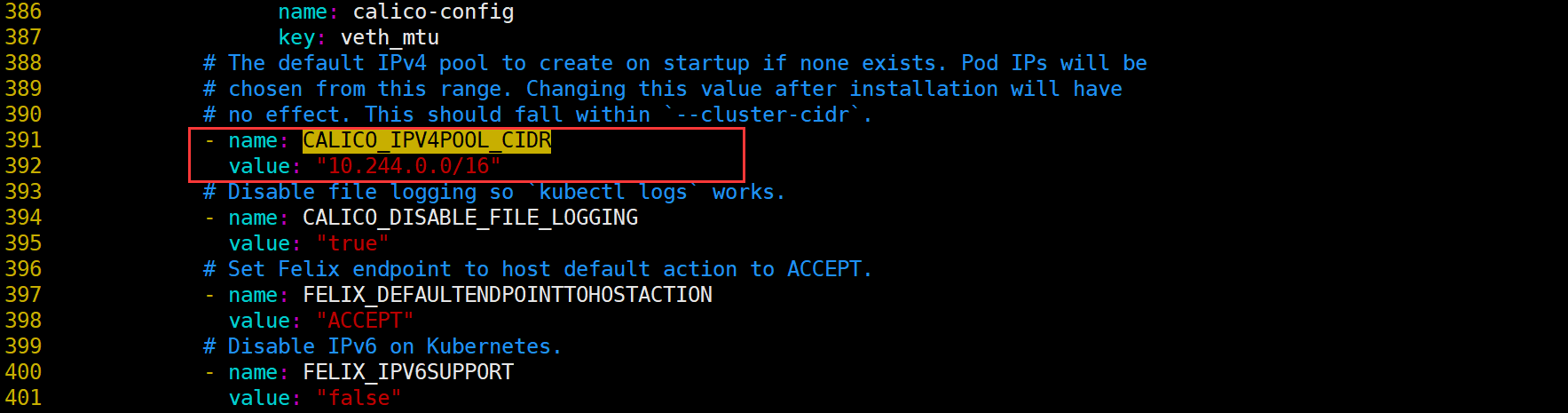

4.4.2 Modify the configuration

At line 391, change the configured value to the pod CIDR defined above, 10.244.0.0/16.

After the change
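The value at that line is presumably the `CALICO_IPV4POOL_CIDR` setting, which upstream Calico manifests typically ship commented out with a default of 192.168.0.0/16. Under that assumption the edit can be scripted instead of done by hand; `set_pool_cidr` is a hypothetical helper, and the fragment below only imitates the relevant two lines of calico.yaml.

```shell
#!/bin/sh
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR and set it to the given CIDR.
# Assumption: the manifest contains the commented-out pair shown in the demo below.
set_pool_cidr() {
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e "s|#   value: \"192.168.0.0/16\"|  value: \"$2\"|" "$1"
}

# Scratch fragment standing in for the two lines in calico.yaml.
demo="$(mktemp)"
cat > "$demo" << "EOF"
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
EOF
set_pool_cidr "$demo" 10.244.0.0/16
rm -f "$demo"
```

If the downloaded manifest differs from this layout, the substitution simply leaves the file unchanged, so compare the output with the original before applying it.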

4.4.3 Apply the manifest

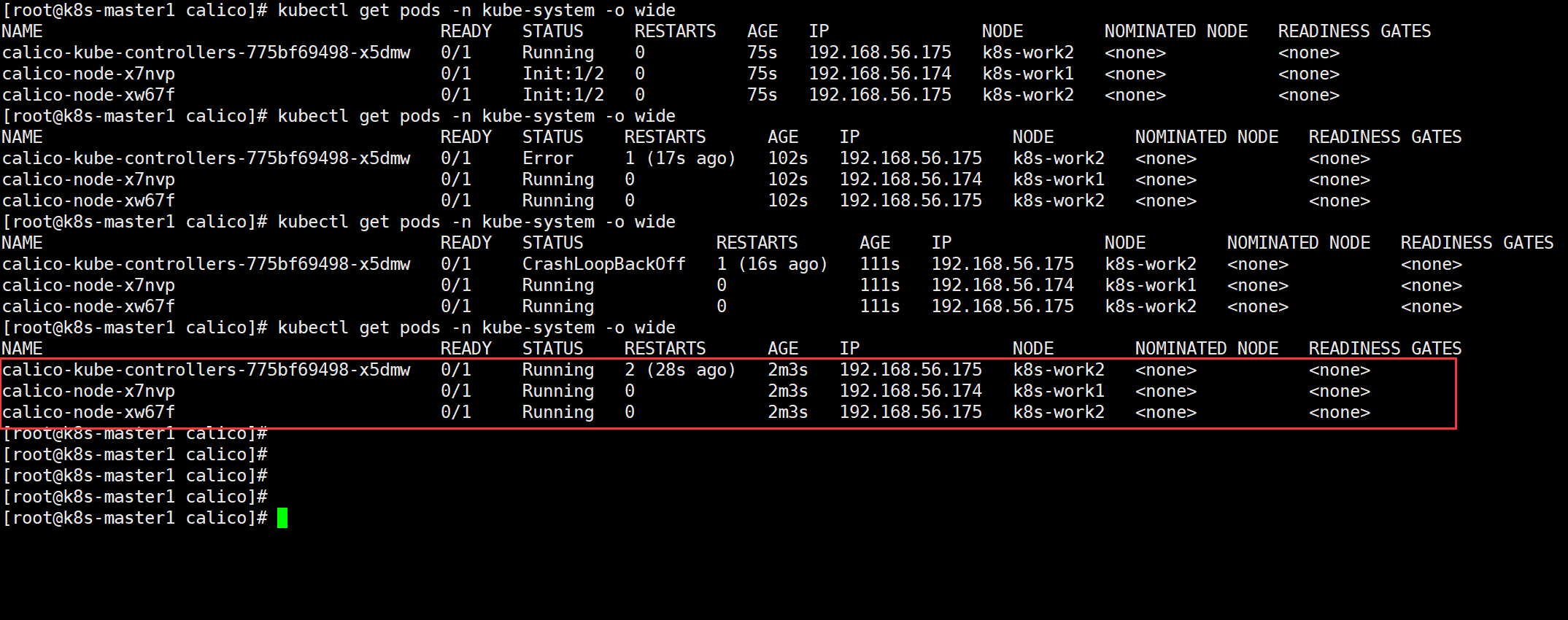

kubectl apply -f calico.yaml

4.4.4 Verify the Calico network

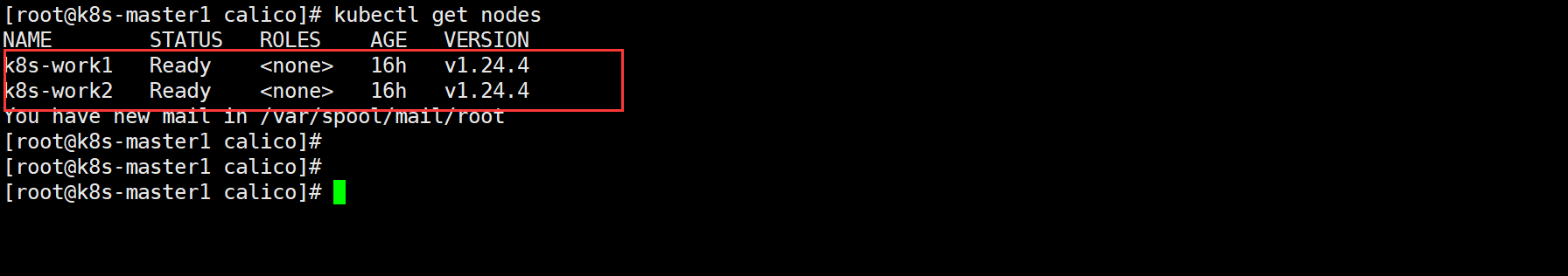

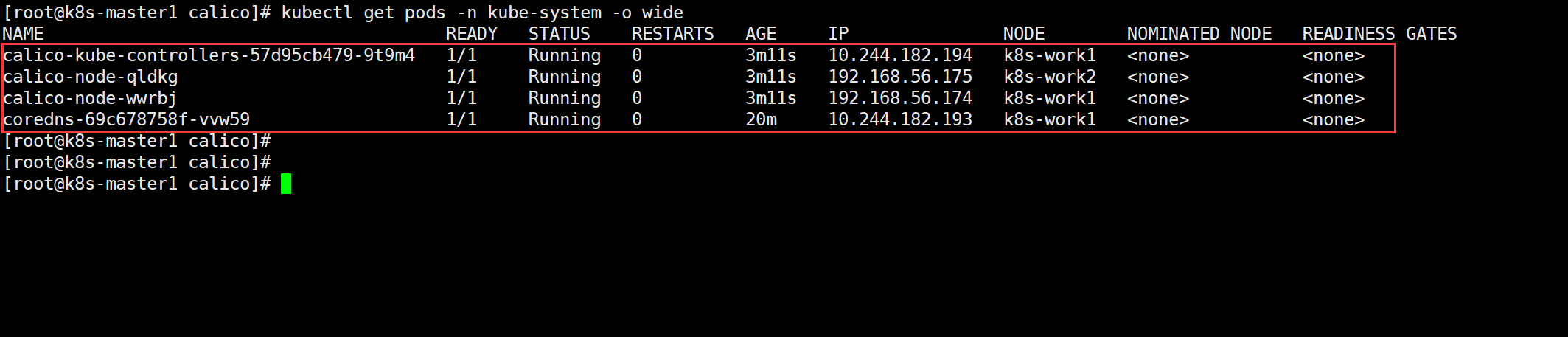

The work nodes that were previously not ready are now Ready as well.

If the Calico version above does not run successfully, try an older version such as:

https://docs.projectcalico.org/v3.19/manifests/calico.yaml

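4.5 Deploying CoreDNS

For a highly available cluster, it is enough to run this on any one master node, because CoreDNS is deployed as containers on the work nodes.

4.5.1 Download the yaml file

Reference: https://github.com/coredns/deployment/blob/master/kubernetes/coredns.yaml.sed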

apiVersion: v1

kind: ServiceAccount

metadata:

  name: coredns

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

rules:

  - apiGroups:

    - ""

    resources:

    - endpoints

    - services

    - pods

    - namespaces

    verbs:

    - list

    - watch

  - apiGroups:

    - discovery.k8s.io

    resources:

    - endpointslices

    verbs:

    - list

    - watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  annotations:

    rbac.authorization.kubernetes.io/autoupdate: "true"

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: system:coredns

subjects:

- kind: ServiceAccount

  name: coredns

  namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: coredns

  namespace: kube-system

data:

  Corefile: |

    .:53 {

        errors

        health {

          lameduck 5s

        }

        ready

        kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {

          fallthrough in-addr.arpa ip6.arpa

        }

        prometheus :9153

        forward . UPSTREAMNAMESERVER {

          max_concurrent 1000

        }

        cache 30

        loop

        reload

        loadbalance

    }STUBDOMAINS

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: coredns

  namespace: kube-system

  labels:

    k8s-app: kube-dns

    kubernetes.io/name: "CoreDNS"

spec:

  # replicas: not specified here:

  # 1. Default is 1.

  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

  strategy:

    type: RollingUpdate

    rollingUpdate:

      maxUnavailable: 1

  selector:

    matchLabels:

      k8s-app: kube-dns

  template:

    metadata:

      labels:

        k8s-app: kube-dns

    spec:

      priorityClassName: system-cluster-critical

      serviceAccountName: coredns

      tolerations:

        - key: "CriticalAddonsOnly"

          operator: "Exists"

      nodeSelector:

        kubernetes.io/os: linux

      affinity:

        podAntiAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

          - labelSelector:

              matchExpressions:

              - key: k8s-app

                operator: In

                values: ["kube-dns"]

            topologyKey: kubernetes.io/hostname

      containers:

      - name: coredns

        image: coredns/coredns:1.9.3

        imagePullPolicy: IfNotPresent

        resources:

          limits:

            memory: 170Mi

          requests:

            cpu: 100m

            memory: 70Mi

        args: [ "-conf", "/etc/coredns/Corefile" ]

        volumeMounts:

        - name: config-volume

          mountPath: /etc/coredns

          readOnly: true

        ports:

        - containerPort: 53

          name: dns

          protocol: UDP

        - containerPort: 53

          name: dns-tcp

          protocol: TCP

        - containerPort: 9153

          name: metrics

          protocol: TCP

        securityContext:

          allowPrivilegeEscalation: false

          capabilities:

            add:

            - NET_BIND_SERVICE

            drop:

            - all

          readOnlyRootFilesystem: true

        livenessProbe:

          httpGet:

            path: /health

            port: 8080

            scheme: HTTP

          initialDelaySeconds: 60

          timeoutSeconds: 5

          successThreshold: 1

          failureThreshold: 5

        readinessProbe:

          httpGet:

            path: /ready

            port: 8181

            scheme: HTTP

      dnsPolicy: Default

      volumes:

        - name: config-volume

          configMap:

            name: coredns

            items:

            - key: Corefile

              path: Corefile

---

apiVersion: v1

kind: Service

metadata:

  name: kube-dns

  namespace: kube-system

  annotations:

    prometheus.io/port: "9153"

    prometheus.io/scrape: "true"

  labels:

    k8s-app: kube-dns

    kubernetes.io/cluster-service: "true"

    kubernetes.io/name: "CoreDNS"

spec:

  selector:

    k8s-app: kube-dns

  clusterIP: CLUSTER_DNS_IP

  ports:

  - name: dns

    port: 53

    protocol: UDP

  - name: dns-tcp

    port: 53

    protocol: TCP

  - name: metrics

    port: 9153

    protocol: TCP

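4.5.2 Modify the configuration

Changes:

kubernetes CLUSTER_DOMAIN REVERSE_CIDRS -> kubernetes cluster.local in-addr.arpa ip6.arpa

forward . UPSTREAMNAMESERVER -> forward . /etc/resolv.conf

}STUBDOMAINS -> }

clusterIP: CLUSTER_DNS_IP -> clusterIP: 10.96.0.2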
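The template's upper-case tokens are plain placeholders, so the changes above can also be produced with `sed` rather than by editing the file by hand. A sketch using the values from this guide; `render_coredns` is a hypothetical helper, and the demo runs on a scratch fragment that contains only the placeholder lines.

```shell
#!/bin/sh
# Hypothetical helper: fill in the placeholders of coredns.yaml.sed with the
# values used in this guide and print the result.
render_coredns() {
  sed -e 's|CLUSTER_DNS_IP|10.96.0.2|g' \
      -e 's|CLUSTER_DOMAIN|cluster.local|g' \
      -e 's|REVERSE_CIDRS|in-addr.arpa ip6.arpa|g' \
      -e 's|UPSTREAMNAMESERVER|/etc/resolv.conf|g' \
      -e 's|STUBDOMAINS||g' "$1"
}

# Scratch fragment containing the placeholder lines from the template.
demo="$(mktemp)"
cat > "$demo" << "EOF"
kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {
forward . UPSTREAMNAMESERVER {
}STUBDOMAINS
clusterIP: CLUSTER_DNS_IP
EOF
render_coredns "$demo"
rm -f "$demo"
```

`render_coredns coredns.yaml.sed > coredns.yaml` would then write a manifest with these substitutions applied; the `clusterIP` must match the `clusterDNS` entry in kubelet.json.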

cat > coredns.yaml << "EOF"

apiVersion: v1

kind: ServiceAccount

metadata:

  name: coredns

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

rules:

  - apiGroups:

    - ""

    resources:

    - endpoints

    - services

    - pods

    - namespaces

    verbs:

    - list

    - watch

  - apiGroups:

    - discovery.k8s.io

    resources:

    - endpointslices

    verbs:

    - list

    - watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  annotations:

    rbac.authorization.kubernetes.io/autoupdate: "true"

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: system:coredns

subjects:

- kind: ServiceAccount

  name: coredns

  namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: coredns

  namespace: kube-system

data:

  Corefile: |

    .:53 {

        errors

        health {

          lameduck 5s

        }

        ready

        kubernetes cluster.local in-addr.arpa ip6.arpa {

          fallthrough in-addr.arpa ip6.arpa

        }

        prometheus :9153

        forward . /etc/resolv.conf {

          max_concurrent 1000

        }

        cache 30

        loop

        reload

        loadbalance

    }

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: coredns

  namespace: kube-system

  labels:

    k8s-app: kube-dns

    kubernetes.io/name: "CoreDNS"

spec:

  # replicas: not specified here:

  # 1. Default is 1.

  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

  strategy:

    type: RollingUpdate

    rollingUpdate:

      maxUnavailable: 1

  selector:

    matchLabels:

      k8s-app: kube-dns

  template:

    metadata:

      labels:

        k8s-app: kube-dns

    spec:

      priorityClassName: system-cluster-critical

      serviceAccountName: coredns

      tolerations:

        - key: "CriticalAddonsOnly"

          operator: "Exists"

      nodeSelector:

        kubernetes.io/os: linux

      affinity:

        podAntiAffinity:

          preferredDuringSchedulingIgnoredDuringExecution:

          - weight: 100

            podAffinityTerm:

              labelSelector:

                matchExpressions:

                - key: k8s-app

                  operator: In

                  values: ["kube-dns"]

              topologyKey: kubernetes.io/hostname

      containers:

      - name: coredns

        image: coredns/coredns:1.9.3

        imagePullPolicy: IfNotPresent

        resources:

          limits:

            memory: 170Mi

          requests:

            cpu: 100m

            memory: 70Mi

        args: [ "-conf", "/etc/coredns/Corefile" ]

        volumeMounts:

        - name: config-volume

          mountPath: /etc/coredns

          readOnly: true

        ports:

        - containerPort: 53

          name: dns

          protocol: UDP

        - containerPort: 53

          name: dns-tcp

          protocol: TCP

        - containerPort: 9153

          name: metrics

          protocol: TCP

        securityContext:

          allowPrivilegeEscalation: false

          capabilities:

            add:

            - NET_BIND_SERVICE

            drop:

            - all

          readOnlyRootFilesystem: true

        livenessProbe:

          httpGet:

            path: /health

            port: 8080

            scheme: HTTP

          initialDelaySeconds: 60

          timeoutSeconds: 5

          successThreshold: 1

          failureThreshold: 5

        readinessProbe:

          httpGet:

            path: /ready

            port: 8181

            scheme: HTTP

      dnsPolicy: Default

      volumes:

        - name: config-volume

          configMap:

            name: coredns

            items:

            - key: Corefile

              path: Corefile

---

apiVersion: v1

kind: Service

metadata:

  name: kube-dns

  namespace: kube-system

  annotations:

    prometheus.io/port: "9153"

    prometheus.io/scrape: "true"

  labels:

    k8s-app: kube-dns

    kubernetes.io/cluster-service: "true"

    kubernetes.io/name: "CoreDNS"

spec:

  selector:

    k8s-app: kube-dns

  clusterIP: 10.96.0.2

  ports:

  - name: dns

    port: 53

    protocol: UDP

  - name: dns-tcp

    port: 53

    protocol: TCP

  - name: metrics

    port: 9153

    protocol: TCP

EOF

4.5.3 Apply the manifest

kubectl apply -f coredns.yaml

4.5.4 Verify CoreDNS

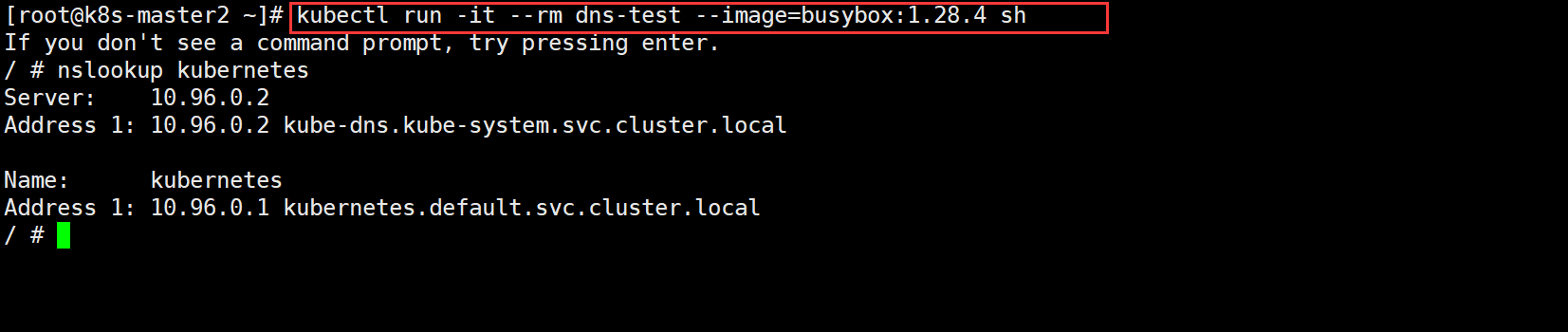

Check that names resolve correctly:

[root@k8s-master2 ~]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.2

Address 1: 10.96.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

If the image version in the CoreDNS manifest above does not run successfully, try an older version such as:

image: coredns/coredns:1.8.4

5. Verification

5.1 Deploy Nginx

cat > nginx.yaml << "EOF"

---

apiVersion: v1

kind: ReplicationController

metadata:

  name: nginx-web

spec:

  replicas: 2

  selector:

    name: nginx

  template:

    metadata:

      labels:

        name: nginx

    spec:

      containers:

        - name: nginx

          image: nginx:1.19.6

          ports:

            - containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

  name: nginx-service-nodeport

spec:

  ports:

    - port: 80

      targetPort: 80

      nodePort: 30001

      protocol: TCP

  type: NodePort

  selector:

    name: nginx

EOF

5.2 Access verification from the host
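With the manifest applied (`kubectl apply -f nginx.yaml`), the NodePort service should answer on port 30001 of every work node. A sketch of that check; `nodeport_urls` and `probe_nodeport` are hypothetical helpers, and the node IPs are the two work nodes from the host plan.

```shell
#!/bin/sh
# Hypothetical helper: print the NodePort URL for each given node IP.
nodeport_urls() {
  port="$1"
  shift
  for ip in "$@"; do
    echo "http://${ip}:${port}/"
  done
}

# Hypothetical helper: print the HTTP status code returned by each URL.
probe_nodeport() {
  nodeport_urls "$@" | while read -r url; do
    code="$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url")"
    echo "${url} -> ${code}"
  done
}

# List the URLs to test; run probe_nodeport with the same arguments on a host
# that can reach the cluster.
nodeport_urls 30001 192.168.56.174 192.168.56.175
```

A 200 status from each URL suggests that the pods, kube-proxy, and the pod network are all working together.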

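At this point, the highly available K8s cluster is fully deployed!

6. FAQ

6.1 ETCD fails to start

6.1.1 Error

After I configured systemd management for ETCD, startup failed with the following error: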

conflicting environment variable is shadowed by corresponding command-line flag

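Roughly, this is a conflict between ETCD environment variables and command-line flags. After some research: ETCD 3.4+ automatically picks up the settings from the configuration file (they reach it as ETCD_* environment variables through EnvironmentFile), so passing the same settings again as flags triggers the conflict.

The original systemd unit file: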

cat << EOF | tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/data/etcd/conf/etcd.conf

ExecStart=/usr/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS} \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=\${ETCD_INITIAL_CLUSTER_STATE} \

--cert-file=\${ETCD_CERT_FILE} \

--key-file=\${ETCD_KEY_FILE} \

--peer-cert-file=\${ETCD_PEER_CERT_FILE} \

--peer-key-file=\${ETCD_PEER_KEY_FILE} \

--trusted-ca-file=\${ETCD_TRUSTED_CA_FILE} \

--client-cert-auth=\${ETCD_CLIENT_CERT_AUTH} \

--peer-client-cert-auth=\${ETCD_PEER_CLIENT_CERT_AUTH} \

--peer-trusted-ca-file=\${ETCD_PEER_TRUSTED_CA_FILE}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
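The conflict arises because etcd maps every command-line flag to an `ETCD_`-prefixed environment variable (upper case, hyphens turned into underscores), so a setting loaded through EnvironmentFile and repeated as a flag is defined twice. A sketch of that naming rule, useful for spotting which flags in the unit file collide with entries in etcd.conf; `flag_to_env` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: convert an etcd flag name to its environment variable name.
flag_to_env() {
  name="$(printf '%s' "$1" | sed 's/^--//' | tr 'a-z-' 'A-Z_')"
  echo "ETCD_${name}"
}

# Variables that some of the flags in the unit file above would collide with.
for flag in --name --data-dir --listen-peer-urls --initial-cluster; do
  flag_to_env "$flag"
done
```

Any name printed here that also appears in /data/etcd/conf/etcd.conf is set twice when the unit file passes the matching flag.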

6.1.2 Solution

- Option 1: remove the EnvironmentFile parameter from the systemd unit file; the --xxx=xxx flags in ExecStart can then be used (with their values written out, since the variables are no longer loaded).

- Option 2: remove the --xxx=xxx flags after ExecStart, because this ETCD version reads the settings automatically.

cat << EOF | tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/data/etcd/conf/etcd.conf

ExecStart=/usr/bin/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

6.2 apiserver fails to start

6.2.1 Error

kube-apiserver[1706]: Error: unknown flag: --insecure-port

kube-apiserver[2196]: Error: unknown flag: --enable-swagger-ui

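In other words, kube-apiserver does not recognize these flags. According to the official documentation, --insecure-port and --enable-swagger-ui are no longer available in 1.24+.

6.2.2 Solution

Modify the .conf configuration file

Before: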

cat > /data/kubernetes/conf/kube-apiserver.conf << "EOF"

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.56.171 \

--secure-port=6443 \

--advertise-address=192.168.56.171 \

--insecure-port=8080 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.96.0.0/16 \

--token-auth-file=/data/kubernetes/tokenfile/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/data/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/data/etcd/ssl/ca.pem \

--etcd-certfile=/data/etcd/ssl/etcd.pem \

--etcd-keyfile=/data/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/data/kubernetes/logs/kube-apiserver/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-apiserver \

--v=4"

EOF

After: simply remove the --insecure-port and --enable-swagger-ui flags.

cat > /data/kubernetes/conf/kube-apiserver.conf << "EOF"

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.56.171 \

--secure-port=6443 \

--advertise-address=192.168.56.171 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.96.0.0/16 \

--token-auth-file=/data/kubernetes/tokenfile/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/data/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/data/etcd/ssl/ca.pem \

--etcd-certfile=/data/etcd/ssl/etcd.pem \

--etcd-keyfile=/data/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.56.171:2379,https://192.168.56.172:2379,https://192.168.56.173:2379 \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/data/kubernetes/logs/kube-apiserver/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-apiserver \

--v=4"

EOF
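Removing flags by hand from a long options string is error-prone, so the edit can be sketched as a filter. `strip_flags` below is a hypothetical helper: it drops only continuation lines (those ending in a backslash) that set one of the named flags, which keeps the opening and closing lines of the quoted string intact. Flags that sit on the first line of the string (such as --port in the controller-manager file) are deliberately left alone and still need a manual edit.

```shell
#!/bin/sh
# Hypothetical helper: print a conf file without the continuation lines that set
# the given flags. Only lines ending in "\" are dropped, so the first and last
# lines of the quoted OPTS string are never removed.
strip_flags() {
  conf="$1"
  shift
  flags="$*"
  awk -v flags="$flags" '
    BEGIN { n = split(flags, list, " ") }
    {
      drop = 0
      for (i = 1; i <= n; i++) {
        if (($1 == list[i] || index($1, list[i] "=") == 1) && $NF == "\\") drop = 1
      }
      if (!drop) print
    }' "$conf"
}

# Scratch conf with one removed flag, standing in for kube-apiserver.conf.
demo="$(mktemp)"
cat > "$demo" << "EOF"
KUBE_APISERVER_OPTS="--secure-port=6443 \
--insecure-port=8080 \
--v=4"
EOF
strip_flags "$demo" --insecure-port --enable-swagger-ui
rm -f "$demo"
```

Redirect the output to a new file and review it before replacing the original; the same filter applies to the kubelet unit file with `--network-plugin --pod-infra-container-image`.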

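6.3 controller-manager fails to start

This is similar to the apiserver error: some flags are no longer available in this k8s version, so remove them as the error messages indicate.

6.3.1 Error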

kube-controller-manager[3505]: Error: unknown flag: --port

kube-controller-manager[4159]: Error: unknown flag: --horizontal-pod-autoscaler-use-rest-clients

6.3.2 Solution

Modify the .conf configuration file

Before:

cat > /data/kubernetes/conf/kube-controller-manager.conf << "EOF"

KUBE_CONTROLLER_MANAGER_OPTS="--port=10252 \

--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/data/kubernetes/conf/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/data/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/data/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/data/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-use-rest-clients=true \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/data/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-controller-manager \

--v=2"

EOF

After:

cat > /data/kubernetes/conf/kube-controller-manager.conf << "EOF"

KUBE_CONTROLLER_MANAGER_OPTS="--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/data/kubernetes/conf/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/data/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/data/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/data/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--tls-cert-file=/data/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-controller-manager \

--v=2"

EOF

6.4 scheduler fails to start

6.4.1 Error

kube-scheduler[5036]: Error: unknown flag: --address

6.4.2 Solution

Before:

cat > /data/kubernetes/conf/kube-scheduler.conf << "EOF"

KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \

--kubeconfig=/data/kubernetes/conf/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-scheduler \

--v=2"

EOF

After:

cat > /data/kubernetes/conf/kube-scheduler.conf << "EOF"

KUBE_SCHEDULER_OPTS="--kubeconfig=/data/kubernetes/conf/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kube-scheduler \

--v=2"

EOF

6.5 kubelet fails to start

6.5.1 Error

kubelet[5036]: Error: unknown flag: --network-plugin

6.5.2 Solution

Before:

cat > /usr/lib/systemd/system/kubelet.service << "EOF"

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/bin/kubelet \

--bootstrap-kubeconfig=/data/kubernetes/conf/kubelet-bootstrap.kubeconfig \

--cert-dir=/data/kubernetes/ssl \

--kubeconfig=/data/kubernetes/conf/kubelet.kubeconfig \

--config=/data/kubernetes/conf/kubelet.json \

--container-runtime=remote \

--container-runtime-endpoint=unix:///var/run/cri-dockerd.sock \

--network-plugin=cni \

--rotate-certificates \

--pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.2 \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kubelet \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

After: remove --network-plugin and --pod-infra-container-image, because they are already set in the cri-docker systemd unit file.

cat > /usr/lib/systemd/system/kubelet.service << "EOF"

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/bin/kubelet \

--bootstrap-kubeconfig=/data/kubernetes/conf/kubelet-bootstrap.kubeconfig \

--cert-dir=/data/kubernetes/ssl \

--kubeconfig=/data/kubernetes/conf/kubelet.kubeconfig \

--config=/data/kubernetes/conf/kubelet.json \

--container-runtime=remote \

--container-runtime-endpoint=unix:///var/run/cri-dockerd.sock \

--rotate-certificates \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/data/kubernetes/logs/kubelet \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF
