
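I. Introduction

This article builds on Catlike Coding's Custom SRP 2.5.0 and, following the Zhihu article "Unity URP实现SSAO", adds screen-space ambient occlusion (SSAO) to it, listing the steps involved.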

II. Environment

Unity: 2022.3.18f1

Core RP Library: 14.0.10

Base pipeline structure: Custom SRP 2.5.0

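III. Implementation outline

This article does not go into the theory of ambient occlusion; it only outlines the steps. For every screen pixel, SSAO computes how strongly the surface point under that pixel is occluded by its surroundings. Where feasible, we approximate this by taking a few random points inside the hemisphere above the surface.

To do this we need the screen-space normals and the screen-space depth texture. With them we can recover the world-space position of the surface under each pixel, and then pick sample points around it to estimate the occlusion.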
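The hemisphere idea can be tried outside Unity. The following is a minimal Python sketch, not the shader used later: the scene (`wall`), the function names and the parameter defaults are all illustrative assumptions. It tests samples against an analytic scene instead of a depth texture.

```python
import random

def sample_hemisphere(normal, radius, rng):
    """Return a random offset inside the hemisphere of `radius` around `normal`."""
    while True:
        v = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        length_sq = sum(c * c for c in v)
        if 0.0 < length_sq <= 1.0:  # rejection-sample the unit ball
            break
    if sum(a * b for a, b in zip(v, normal)) < 0.0:
        v = [-c for c in v]  # flip into the hemisphere above the surface
    return [c * radius for c in v]

def estimate_occlusion(point, normal, is_solid, radius=0.5, samples=256, seed=0):
    """Fraction of hemisphere samples that end up inside geometry."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        offset = sample_hemisphere(normal, radius, rng)
        sample = [p + o for p, o in zip(point, offset)]
        if is_solid(sample):
            hits += 1
    return hits / samples

def wall(p):
    """Hypothetical scene: everything at x >= 1 is solid."""
    return p[0] >= 1.0

up = [0.0, 1.0, 0.0]
open_floor = estimate_occlusion([0.0, 0.0, 0.0], up, wall)
next_to_wall = estimate_occlusion([1.0, 0.0, 0.0], up, wall)
print(open_floor, next_to_wall)
```

A floor point far from the wall gets no occlusion, while a point at the foot of the wall has roughly half of its hemisphere blocked. In the real pass the `is_solid` test is replaced by a comparison against the depth texture.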

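IV. Implementation

1. Generating the screen-space normal texture

1.1 CPU side

Custom SRP 2.5.0 does not keep a screen-space normal texture, so we have to produce one ourselves.

First, add a depthNormalAttachment handle to CameraRendererTextures: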

using UnityEngine.Experimental.Rendering.RenderGraphModule;

public readonly ref struct CameraRendererTextures

{

public readonly TextureHandle

colorAttachment, depthAttachment, depthNormalAttachment,

colorCopy, depthCopy;

public CameraRendererTextures(

TextureHandle colorAttachment,

TextureHandle depthAttachment,

TextureHandle depthNormalAttachment,

TextureHandle colorCopy,

TextureHandle depthCopy)

{

this.colorAttachment = colorAttachment;

this.depthAttachment = depthAttachment;

this.depthNormalAttachment = depthNormalAttachment;

this.colorCopy = colorCopy;

this.depthCopy = depthCopy;

}

}

Then initialize depthNormalAttachment in SetupPass:

...........

if (copyDepth)

{

desc.name = "Depth Copy";

depthCopy = renderGraph.CreateTexture(desc);

}

var dnTD = new TextureDesc(attachmentSize)

{

colorFormat = GraphicsFormat.R8G8B8A8_SNorm,

name = "Depth Normal Attachment"

};

depthNormalAttachment = builder.WriteTexture(renderGraph.CreateTexture(dnTD));

}

else

{

depthNormalAttachment = colorAttachment = depthAttachment = pass.colorAttachment = pass.depthAttachment =

builder.WriteTexture(renderGraph.ImportBackbuffer(

BuiltinRenderTextureType.CameraTarget));

}

builder.AllowPassCulling(false);

builder.SetRenderFunc<SetupPass>(

static (pass, context) => pass.Render(context));

return new CameraRendererTextures(

colorAttachment, depthAttachment, depthNormalAttachment, colorCopy, depthCopy);

I hard-coded the colorFormat of dnTD to GraphicsFormat.R8G8B8A8_SNorm. Alternatively, it can follow the attachments above and choose the format depending on HDR: SystemInfo.GetGraphicsFormat(useHDR ? DefaultFormat.HDR : DefaultFormat.LDR).
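To get a feel for what an 8-bit signed-normalized channel does to a normal, here is a small Python sketch of the usual SNorm conversion rule (scale by 127, clamp to [-1, 1]). The function names are illustrative, and the rule is the common graphics-API convention rather than anything specific to this project.

```python
def encode_snorm8(x):
    """Quantize a float in [-1, 1] to a signed 8-bit integer in [-127, 127]."""
    x = max(-1.0, min(1.0, x))
    return int(round(x * 127.0))

def decode_snorm8(v):
    """Convert the stored integer back to a float in [-1, 1]."""
    return max(v / 127.0, -1.0)

def roundtrip_normal(n):
    """Store and reload a normal, as writing to and sampling the texture would."""
    return tuple(decode_snorm8(encode_snorm8(c)) for c in n)

normal = (0.267, -0.535, 0.802)
stored = roundtrip_normal(normal)
max_error = max(abs(a - b) for a, b in zip(normal, stored))
print(stored, max_error)
```

Negative components survive the round trip, with an error of at most half a quantization step. An unsigned-normalized format would clamp negative components to zero unless the shader remaps the normal to [0, 1] first, which is worth keeping in mind when switching to the LDR default format.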

Create a new file CameraDepthNormalsPass and add the following code:

using UnityEngine;

using UnityEngine.Experimental.Rendering;

using UnityEngine.Experimental.Rendering.RenderGraphModule;

using UnityEngine.Rendering;

using UnityEngine.Rendering.RendererUtils;

public class CameraDepthNormalsPass

{

static readonly ProfilingSampler sampler = new("CameraDepthNormalsPass");

static readonly ShaderTagId[] shaderTagIds = {

new("CameraDepthNormals")

};

static readonly int

CameraDepthNormalsID = Shader.PropertyToID("_CameraDepthNormalsTexture");

TextureHandle CameraDepthNormals,TempDepth;

RendererListHandle list;

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

context.renderContext.ExecuteCommandBuffer(buffer);

buffer.Clear();

}

public static void Record(RenderGraph renderGraph, Camera camera, CullingResults cullingResults,

bool useHDR, int renderingLayerMask, Vector2Int attachmentSize, in CameraRendererTextures textures)

{

using RenderGraphBuilder builder =

renderGraph.AddRenderPass(sampler.name, out CameraDepthNormalsPass pass, sampler);

// Render only the [CameraDepthNormals] pass of opaque objects

pass.list = builder.UseRendererList(renderGraph.CreateRendererList(

new RendererListDesc(shaderTagIds, cullingResults, camera)

{

sortingCriteria =SortingCriteria.CommonOpaque,

rendererConfiguration = PerObjectData.None,

renderQueueRange = RenderQueueRange.opaque ,

renderingLayerMask = (uint)renderingLayerMask

}));

// Request read/write access to the normal texture

pass.CameraDepthNormals = builder.ReadWriteTexture(textures.depthNormalAttachment);

var dnTD = new TextureDesc(attachmentSize)

{

colorFormat = SystemInfo.GetGraphicsFormat(useHDR ? DefaultFormat.HDR : DefaultFormat.LDR),

depthBufferBits = DepthBits.Depth32,

name = "TempDepth"

};

// Create a temporary depth texture (it may not be strictly necessary)

pass.TempDepth = builder.ReadWriteTexture(renderGraph.CreateTexture(dnTD));

builder.SetRenderFunc<CameraDepthNormalsPass>(

static (pass, context) => pass.Render(context));

}

}

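Now fill in Render. DrawRendererList writes the normals of all opaque objects into the normal texture. Note that this step changes the render target with SetRenderTarget. For the following passes to render correctly, the target has to be restored afterwards; you can also restore it right after binding the normal texture.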

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

// Render into the normal texture; a temporary texture stands in for the depth buffer

buffer.SetRenderTarget(

CameraDepthNormals, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.Store

, TempDepth, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.DontCare);

buffer.ClearRenderTarget(true, true, Color.clear);

buffer.DrawRendererList(list);

// Bind the normal texture globally so the AO pass can sample it later

buffer.SetGlobalTexture(CameraDepthNormalsID, CameraDepthNormals);

context.renderContext.ExecuteCommandBuffer(buffer);

buffer.Clear();

}

Adjust SkyboxPass.cs to restore the render target:

public class SkyboxPass

{

static readonly ProfilingSampler sampler = new("Skybox");

Camera camera;

TextureHandle colorAttachment, depthAttachment;

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

buffer.SetRenderTarget(

colorAttachment, RenderBufferLoadAction.Load, RenderBufferStoreAction.Store,

depthAttachment, RenderBufferLoadAction.Load, RenderBufferStoreAction.Store

);

context.renderContext.ExecuteCommandBuffer(context.cmd);

buffer.Clear();

context.renderContext.DrawSkybox(camera);

}

public static void Record(RenderGraph renderGraph, Camera camera,

in CameraRendererTextures textures)

{

if (camera.clearFlags == CameraClearFlags.Skybox)

{

using RenderGraphBuilder builder = renderGraph.AddRenderPass(

sampler.name, out SkyboxPass pass, sampler);

pass.camera = camera;

pass.colorAttachment = builder.ReadWriteTexture(textures.colorAttachment);

pass.depthAttachment = builder.ReadTexture(textures.depthAttachment);

builder.SetRenderFunc<SkyboxPass>(

static (pass, context) => pass.Render(context));

}

}

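}

Adjust CameraRenderer.cs. I record CameraDepthNormalsPass after the opaque geometry pass and before the skybox pass. If you change this order, make sure the render target is restored correctly.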

..............................

using (renderGraph.RecordAndExecute(renderGraphParameters))

{

// Add passes here.

using var _ = new RenderGraphProfilingScope(renderGraph, cameraSampler);

LightResources lightResources = LightingPass.Record(renderGraph, cullingResults, shadowSettings, useLightsPerObject, cameraSettings.maskLights ? cameraSettings.renderingLayerMask : -1);

CameraRendererTextures textures = SetupPass.Record(renderGraph, useIntermediateBuffer, useColorTexture, useDepthTexture, bufferSettings.allowHDR, bufferSize, camera);

GeometryPass.Record(renderGraph, camera, cullingResults, useLightsPerObject, cameraSettings.renderingLayerMask, true, textures, lightResources);

CameraDepthNormalsPass.Record(renderGraph, camera, cullingResults, bufferSettings.allowHDR, cameraSettings.renderingLayerMask, bufferSize, textures);

SkyboxPass.Record(renderGraph, camera, textures);

......................

1.2 Shader side

Modify Fragment.hlsl and declare the _CameraDepthNormalsTexture texture. It does not have to go in this file; any other shared include works too.

#ifndef FRAGMENT_INCLUDED

#define FRAGMENT_INCLUDED

TEXTURE2D(_CameraColorTexture);

TEXTURE2D(_CameraDepthTexture);

TEXTURE2D(_CameraDepthNormalsTexture);

float4 _CameraBufferSize;

struct Fragment {

...........

Modify the sampler used for the normal map in LitInput.hlsl. It originally used sampler_BaseMap, but the new pass never binds BaseMap, so sampler_BaseMap cannot be used directly. Switch to sampler_NormalMap:

..............

TEXTURE2D(_NormalMap);

SAMPLER(sampler_NormalMap);

...................

float3 GetNormalTS(InputConfig c) {

float4 map = SAMPLE_TEXTURE2D(_NormalMap, sampler_NormalMap, c.baseUV);

...............

}

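Create a new file CameraDepthNormals.hlsl and add the following code. The main idea is to output the mesh's own normal when the model has no normal map. The file was adapted from LitPass.hlsl by deleting and tweaking code.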

#ifndef CAMERA_DEPTH_NORMALS_INCLUDED

#define CAMERA_DEPTH_NORMALS_INCLUDED

#include "Surface.hlsl"

#include "Shadows.hlsl"

#include "Light.hlsl"

#include "BRDF.hlsl"

struct Attributes {

float3 positionOS : POSITION;

float3 normalOS : NORMAL;

float4 tangentOS : TANGENT;

float2 baseUV : TEXCOORD0;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct Varyings {

float4 positionCS_SS : SV_POSITION;

float3 positionWS : VAR_POSITION;

float3 normalWS : VAR_NORMAL;

#if defined(_NORMAL_MAP)

float4 tangentWS : VAR_TANGENT;

#endif

float2 baseUV : VAR_BASE_UV;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

Varyings CameraDepthNormalsPassVertex(Attributes input) {

Varyings output;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_TRANSFER_INSTANCE_ID(input, output);

output.positionWS = TransformObjectToWorld(input.positionOS);

output.positionCS_SS = TransformWorldToHClip(output.positionWS);

output.normalWS = TransformObjectToWorldNormal(input.normalOS);

#if defined(_NORMAL_MAP)

output.tangentWS = float4(

TransformObjectToWorldDir(input.tangentOS.xyz), input.tangentOS.w

);

#endif

output.baseUV = TransformBaseUV(input.baseUV);

return output;

}

float4 CameraDepthNormalsPassFragment(Varyings input) : SV_TARGET{

UNITY_SETUP_INSTANCE_ID(input);

InputConfig config = GetInputConfig(input.positionCS_SS, input.baseUV);

#if defined(_NORMAL_MAP)

float3 normal = NormalTangentToWorld(

GetNormalTS(config),

input.normalWS, input.tangentWS

);

#else

float3 normal = normalize(input.normalWS);

#endif

return float4(normal, 0.0);

}

#endif
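The _NORMAL_MAP branch above relies on NormalTangentToWorld. As a rough illustration of what such a helper does, here is a Python sketch of the usual tangent/bitangent/normal construction. It is an assumption about the general technique, not a copy of the Core RP implementation, and all names are illustrative.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def normal_tangent_to_world(normal_ts, normal_ws, tangent_ws, sign):
    """Rotate a tangent-space normal into world space using a TBN basis."""
    n = normalize(normal_ws)
    t = normalize(tangent_ws)
    # The bitangent is derived from normal and tangent; `sign` is tangent.w
    b = tuple(sign * c for c in cross(n, t))
    world = tuple(
        normal_ts[0] * t[i] + normal_ts[1] * b[i] + normal_ts[2] * n[i]
        for i in range(3)
    )
    return normalize(world)

flat = normal_tangent_to_world((0.0, 0.0, 1.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0), 1.0)
tilted = normal_tangent_to_world((0.0, 1.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0), 1.0)
print(flat, tilted)
```

A flat normal-map texel, (0, 0, 1) in tangent space, reproduces the interpolated mesh normal, which matches the fallback branch that simply normalizes input.normalWS.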

Modify Lit.shader to add the pass. Note: the #include path must match the location of your CameraDepthNormals.hlsl.

.....................................

#pragma vertex MetaPassVertex

#pragma fragment MetaPassFragment

#include "../ShaderLibrary/MetaPass.hlsl"

ENDHLSL

}

Pass {

Tags {

"LightMode" = "CameraDepthNormals"

}

ZWrite On

Cull[_Cull]

HLSLPROGRAM

#pragma target 4.5

#pragma shader_feature _NORMAL_MAP

#pragma vertex CameraDepthNormalsPassVertex

#pragma fragment CameraDepthNormalsPassFragment

#include "../ShaderLibrary/CameraDepthNormals.hlsl"

ENDHLSL

}

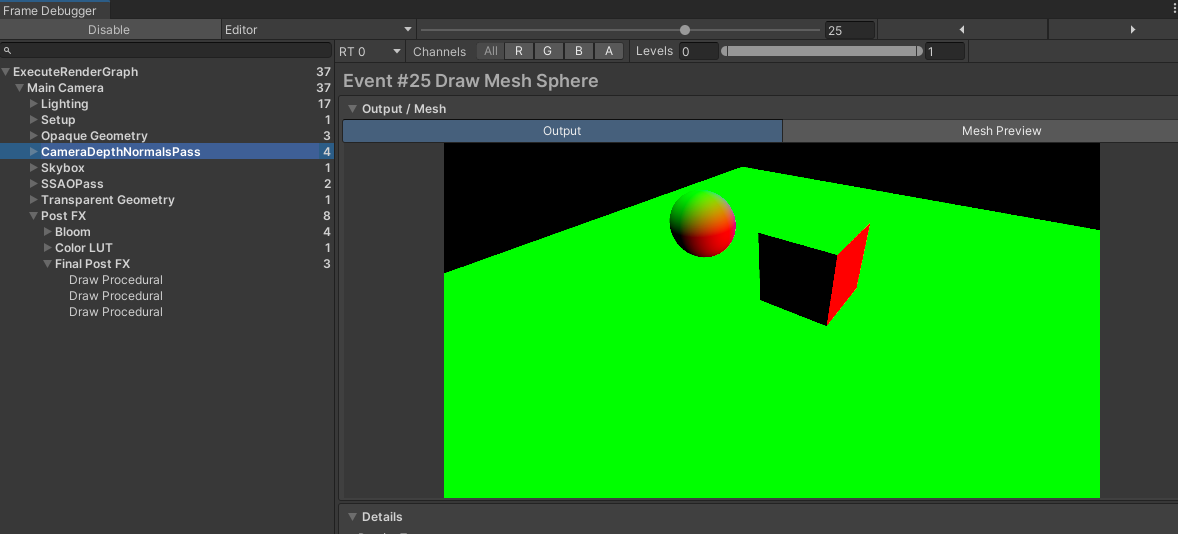

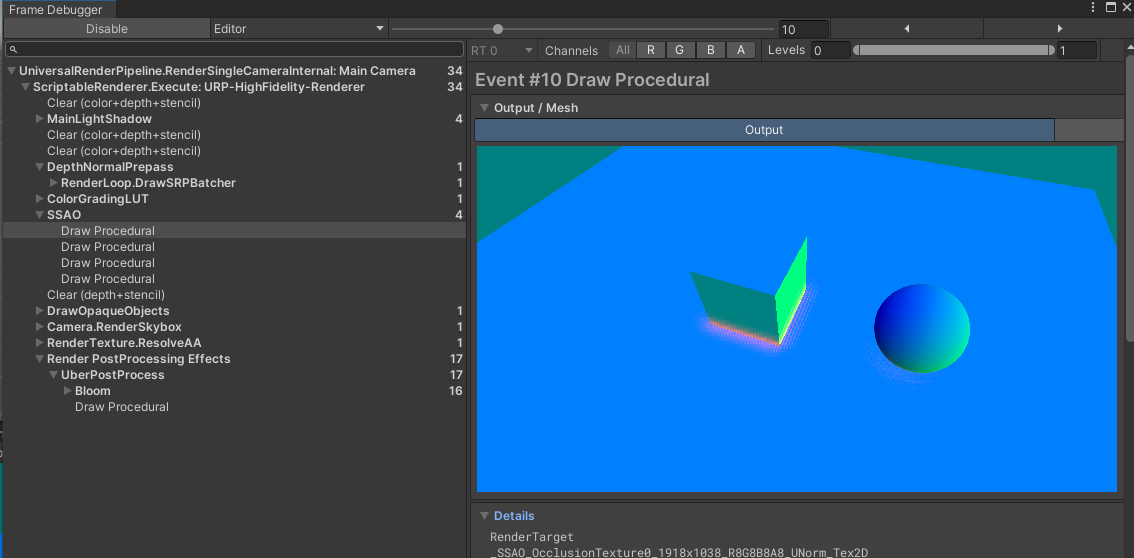

1.3 效果

2. Generating the AO texture

2.1 CPU side

Create a new class named SSAOParams. It holds the tuning parameters that SSAO needs:

using System;

using UnityEngine;

[Serializable]

public class SSAOParams

{

[SerializeField]

Shader SSAOshader = default;

[System.NonSerialized]

Material SSAOmaterial;

public Material SSAOMaterial

{

get

{

if (SSAOmaterial == null && SSAOshader != null)

{

SSAOmaterial = new Material(SSAOshader);

SSAOmaterial.hideFlags = HideFlags.HideAndDontSave;

}

return SSAOmaterial;

}

}

public bool allowSSAO;

[Range(0f, 2)]

public float RADIUS;

[Range(0f, 2)]

public float INTENSITY;

[Range(0f, 100f)]

public float DOWNSAMPLE;

[Range(0f, 1000f)]

public float FALLOFF;

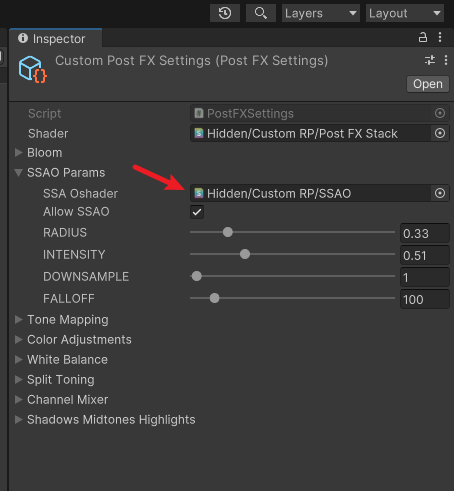

}添加一个引用到PostFXSettings.cs 中

public class PostFXSettings : ScriptableObject

{

.......

public SSAOParams sSAOParams = default;

.......

}

Create SSAOPass.cs and add the following code:

using UnityEngine;

using UnityEngine.Experimental.Rendering;

using UnityEngine.Experimental.Rendering.RenderGraphModule;

using UnityEngine.Rendering;

public class SSAOPass

{

static readonly ProfilingSampler sampler = new("SSAOPass");

Camera Camera;

SSAOParams SSAOParams;

public enum SSAOPassEnum

{

SSAO,

Final,

}

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

context.renderContext.ExecuteCommandBuffer(buffer);

buffer.Clear();

}

public static void Record(RenderGraph renderGraph, Camera camera, Vector2Int BufferSize, SSAOParams SSAOParams,

in CameraRendererTextures textures)

{

using RenderGraphBuilder builder = renderGraph.AddRenderPass(sampler.name, out SSAOPass pass, sampler);

pass.Camera = camera;

pass.SSAOParams = SSAOParams;

builder.SetRenderFunc<SSAOPass>(

static (pass, context) => pass.Render(context));

}

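}

Add the texture handles SSAOBaseText and depthAttachmentHandle (colorAttachmentHandle follows in step 3). SSAOBaseText stores the AO texture generated along the way, depthAttachmentHandle is the depth texture, and colorAttachmentHandle is used later to apply the AO texture.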

public class SSAOPass

{

........

TextureHandle SSAOBaseText, depthAttachmentHandle;

........

public static void Record(RenderGraph renderGraph, Camera camera, Vector2Int BufferSize, SSAOParams SSAOParams,

in CameraRendererTextures textures)

{

........

var desc = new TextureDesc(BufferSize)

{

colorFormat = GraphicsFormat.R8G8B8A8_UNorm,

name = "SSAOBase"

};

// Declare how the pass reads and writes each texture

pass.SSAOBaseText = builder.ReadWriteTexture(renderGraph.CreateTexture(desc));

pass.depthAttachmentHandle = builder.ReadTexture(textures.depthAttachment);

// Read the normal texture generated earlier

builder.ReadTexture(textures.depthNormalAttachment);

builder.SetRenderFunc<SSAOPass>(

static (pass, context) => pass.Render(context));

}

}

Prepare the data needed before rendering:

public class SSAOPass

{

.......

static readonly int

ProjectionParams2 = Shader.PropertyToID("_ProjectionParams2"),

CameraViewTopLeftCorner = Shader.PropertyToID("_CameraViewTopLeftCorner"),

CameraViewXExtent = Shader.PropertyToID("_CameraViewXExtent"),

CameraViewYExtent = Shader.PropertyToID("_CameraViewYExtent"),

SSAOPramasID = Shader.PropertyToID("_SSAOPramas"),

SSAODepthTexture = Shader.PropertyToID("_SSAODepthTexture"),

SSAOBaseTexture = Shader.PropertyToID("_SSAOBaseTexture");

.......

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

Matrix4x4 view = Camera.worldToCameraMatrix;

Matrix4x4 proj = Camera.projectionMatrix;

// Zero out the translation of the view matrix so the results are world-space vectors relative to the camera

Matrix4x4 cview = view;

cview.SetColumn(3, new Vector4(0.0f, 0.0f, 0.0f, 1.0f));

Matrix4x4 cviewProj = proj * cview;

// Inverse of the view-projection matrix: transforms from clip space back to world space

Matrix4x4 cviewProjInv = cviewProj.inverse;

// World-space positions of the near-plane corners

var near = Camera.nearClipPlane;

Vector4 topLeftCorner = cviewProjInv.MultiplyPoint(new Vector4(-1.0f, 1.0f, -1.0f, 1.0f));

Vector4 topRightCorner = cviewProjInv.MultiplyPoint(new Vector4(1.0f, 1.0f, -1.0f, 1.0f));

Vector4 bottomLeftCorner = cviewProjInv.MultiplyPoint(new Vector4(-1.0f, -1.0f, -1.0f, 1.0f));

// Direction vectors spanning the camera's near plane

Vector4 cameraXExtent = topRightCorner - topLeftCorner;

Vector4 cameraYExtent = bottomLeftCorner - topLeftCorner;

// Set the shader parameters and render the AO texture

buffer.SetGlobalVector(CameraViewTopLeftCorner, topLeftCorner);

buffer.SetGlobalVector(CameraViewXExtent, cameraXExtent);

buffer.SetGlobalVector(CameraViewYExtent, cameraYExtent);

buffer.SetGlobalVector(ProjectionParams2, new Vector4(1.0f / near, Camera.transform.position.x, Camera.transform.position.y, Camera.transform.position.z));

buffer.SetGlobalVector(SSAOPramasID, new Vector4(SSAOParams.RADIUS, SSAOParams.INTENSITY, SSAOParams.DOWNSAMPLE, SSAOParams.FALLOFF));

buffer.SetGlobalTexture(SSAODepthTexture, depthAttachmentHandle);

buffer.SetRenderTarget(SSAOBaseText, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.Store);

buffer.DrawProcedural(Matrix4x4.identity, SSAOParams.SSAOMaterial, (int)SSAOPassEnum.SSAO, MeshTopology.Triangles, 3);

........

}

........

}

Add the SSAOPass call in CameraRenderer.cs:

............

GeometryPass.Record(renderGraph, camera, cullingResults, useLightsPerObject, cameraSettings.renderingLayerMask, true, textures, lightResources);

CameraDepthNormalsPass.Record(renderGraph, camera, cullingResults, bufferSettings.allowHDR, cameraSettings.renderingLayerMask, bufferSize, textures);

SkyboxPass.Record(renderGraph, camera, textures);

if(postFXSettings.sSAOParams.allowSSAO)

SSAOPass.Record(renderGraph, camera, bufferSize, postFXSettings.sSAOParams, textures);

var copier = new CameraRendererCopier(material, camera, cameraSettings.finalBlendMode);

CopyAttachmentsPass.Record(renderGraph, useColorTexture, useDepthTexture, copier, textures);

GeometryPass.Record(renderGraph, camera, cullingResults, useLightsPerObject, cameraSettings.renderingLayerMask, false, textures, lightResources);

..................
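The near-plane corner and extent vectors that SSAOPass.Render uploads are what the shader later uses to turn a screen UV plus a linear depth back into a position. The following Python sketch shows that reconstruction for a simplified, unrotated camera looking down -z. The camera setup, the function names and the top-left UV origin are assumptions made for illustration.

```python
import math

def near_plane_basis(fov_y_deg, aspect, near):
    """Top-left corner and the X/Y extents of the near plane (camera at origin)."""
    half_h = near * math.tan(math.radians(fov_y_deg) * 0.5)
    half_w = half_h * aspect
    top_left = (-half_w, half_h, -near)
    x_extent = (2.0 * half_w, 0.0, 0.0)   # top-left to top-right
    y_extent = (0.0, -2.0 * half_h, 0.0)  # top-left to bottom-left
    return top_left, x_extent, y_extent

def reconstruct(uv, linear_depth, basis, near):
    """Walk across the near plane by uv, then scale the ray out to linear_depth."""
    top_left, x_extent, y_extent = basis
    z_scale = linear_depth / near
    return tuple(
        (top_left[i] + x_extent[i] * uv[0] + y_extent[i] * uv[1]) * z_scale
        for i in range(3)
    )

def project_to_uv(p, basis, near):
    """Helper for checking: perspective-project p onto the near plane."""
    top_left, x_extent, y_extent = basis
    depth = -p[2]
    x_near = p[0] * near / depth
    y_near = p[1] * near / depth
    u = (x_near - top_left[0]) / x_extent[0]
    v = (y_near - top_left[1]) / y_extent[1]
    return (u, v), depth

near = 0.3
basis = near_plane_basis(60.0, 16.0 / 9.0, near)
point = (2.0, -1.0, -8.0)
uv, depth = project_to_uv(point, basis, near)
restored = reconstruct(uv, depth, basis, near)
print(uv, depth, restored)
```

Projecting a point to (uv, depth) and reconstructing it returns the original position. Dividing by the near distance is the role of the 1 / near value packed into _ProjectionParams2.x.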

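2.2 Shader side

Create a file SSAO.hlsl and add the following code.

This shader is mainly based on the article "Unity URP实现SSAO" and on the ssao.hlsl file in URP's built-in shader library. PackAONormal packs each pixel's AO and normal into separate channels: the R channel stores the computed AO value and the GBA channels store the normal.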

#ifndef SSAO_INCLUDED

#define SSAO_INCLUDED

TEXTURE2D(_SSAODepthTexture);

SAMPLER(sampler_SSAODepthTexture);

TEXTURE2D(_SSAOBaseTexture);

SAMPLER(sampler_SSAOBaseTexture);

#define SAMPLE_NORMAL(uv) half4(SAMPLE_TEXTURE2D_LOD(_CameraDepthNormalsTexture, sampler_linear_clamp, uv, 0))

#define SAMPLE_BASE(uv) half4(SAMPLE_TEXTURE2D_LOD(_SSAOBaseTexture, sampler_SSAOBaseTexture, uv, 0))

static const int SAMPLE_COUNT = 3;

static const float SKY_DEPTH_VALUE = 0.00001;

static const half kGeometryCoeff = half(0.8);

static const half kBeta = half(0.004);

static const half kEpsilon = half(0.0001);

float4 _ProjectionParams2;

float4 _CameraViewTopLeftCorner;

float4 _CameraViewXExtent;

float4 _CameraViewYExtent;

float4 _SSAOPramas;

#define RADIUS _SSAOPramas.x

#define INTENSITY _SSAOPramas.y

#define DOWNSAMPLE _SSAOPramas.z

#define FALLOFF _SSAOPramas.w

struct Varyings {

float4 positionCS_SS : SV_POSITION;

float2 screenUV : VAR_SCREEN_UV;

};

half4 PackAONormal(half ao, half3 n)

{

n *= 0.5;

n += 0.5;

return half4(ao, n);

}

Varyings DefaultPassVertex(uint vertexID : SV_VertexID) {

Varyings output;

output.positionCS_SS = float4(

vertexID <= 1 ? -1.0 : 3.0,

vertexID == 1 ? 3.0 : -1.0,

0.0, 1.0

);

output.screenUV = float2(

vertexID <= 1 ? 0.0 : 2.0,

vertexID == 1 ? 2.0 : 0.0

);

if (_ProjectionParams.x < 0.0) {

output.screenUV.y = 1.0 - output.screenUV.y;

}

return output;

}

// Reconstruct the surface position under a screen pixel from its linear depth and screen UV

half3 ReconstructViewPos(float2 uv, float linearEyeDepth) {

// Screen is y-inverted

uv.y = 1.0 - uv.y;

float zScale = linearEyeDepth * _ProjectionParams2.x; // divide by near plane

float3 viewPos = _CameraViewTopLeftCorner.xyz + _CameraViewXExtent.xyz * uv.x + _CameraViewYExtent.xyz * uv.y;

viewPos *= zScale;

return viewPos;

}

float Random(float2 p) {

return frac(sin(dot(p, float2(12.9898, 78.233))) * 43758.5453);

}

// Pick a random point in the hemisphere

half3 PickSamplePoint(float2 uv, int sampleIndex, half rcpSampleCount, half3 normal) {

// A handful of random numbers

half gn = InterleavedGradientNoise(uv * _ScreenParams.xy, sampleIndex);

half u = frac(Random(half2(0.0, sampleIndex)) + gn) * 2.0 - 1.0;

half theta = Random(half2(1.0, sampleIndex) + gn) * TWO_PI;

half u2 = sqrt(1.0 - u * u);

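// A random point on the full sphere

half3 v = half3(u2 * cos(theta), u2 * sin(theta), u);

v *= sqrt((sampleIndex + 1.0) * rcpSampleCount); // later samples reach farther out; the +1 keeps sample 0 from collapsing to a zero offset

// Flip the point into the hemisphere around the normal

// https://thebookofshaders.com/glossary/?search=faceforward

v = faceforward(v, -normal, v); // make sure v points to the same side as normal

// Scale to [0, RADIUS]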

v *= RADIUS;

return v;

}

half4 SSAOFragment(Varyings input) : SV_TARGET{

// Sample the depth

float depth = SAMPLE_DEPTH_TEXTURE_LOD(_SSAODepthTexture, sampler_SSAODepthTexture, input.screenUV, 0);

if (depth < SKY_DEPTH_VALUE) {

return PackAONormal(0,0);

}

depth = IsOrthographicCamera() ? OrthographicDepthBufferToLinear(depth) : LinearEyeDepth(depth, _ZBufferParams);

// Sample the normal

half4 normal = SAMPLE_NORMAL(input.screenUV);

// Reconstruct the surface position under this pixel

float3 vpos = ReconstructViewPos(input.screenUV, depth);

const half rcpSampleCount = rcp(SAMPLE_COUNT);

half ao = 0.0;

UNITY_UNROLL

for (int i = 0; i < SAMPLE_COUNT; i++) {

// Pick a random point in the positive hemisphere

half3 offset = PickSamplePoint(input.screenUV, i, rcpSampleCount, normal);

half3 vpos2 = vpos + offset;

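// Transform the sample point from world space to clip space

half4 spos2 = mul(UNITY_MATRIX_VP, vpos2);

// Screen UV of the sample point

half2 uv2 = half2(spos2.x, spos2.y * _ProjectionParams.x) / spos2.w * 0.5 + 0.5;

// Depth stored in the depth texture at the sample point's UV

float rawDepth2 = SAMPLE_DEPTH_TEXTURE_LOD(_SSAODepthTexture, sampler_point_clamp, uv2, 0);

float linearDepth2 = LinearEyeDepth(rawDepth2, _ZBufferParams);

// Range check: only count the sample if the stored depth lies within RADIUS of the sample point

half IsInsideRadius = abs(spos2.w - linearDepth2) < RADIUS ? 1.0 : 0.0;

// The larger the angle between the ray and the surface normal, the smaller the contribution

half3 difference = ReconstructViewPos(uv2, linearDepth2) - vpos; // vector from the shaded point to the occluder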

half inten = max(dot(difference, normal) - kBeta * depth, 0.0) * rcp(dot(difference, difference) + kEpsilon);

ao += inten * IsInsideRadius;

}

// Strength

ao *= RADIUS;

// Calculate falloff...

half falloff = 1.0f - depth * half(rcp(FALLOFF));

falloff = falloff * falloff;

ao = PositivePow(saturate(ao * INTENSITY * falloff * rcpSampleCount), 0.6);

return PackAONormal(ao, normal);

}

#endif
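PickSamplePoint is the part of this file that is easiest to get wrong, so here is a Python sketch of the same construction: a uniform point on the sphere built from a height u and an angle theta, flipped into the hemisphere of the normal with a faceforward-style test, then scaled. The names and the explicit `scale` parameter are illustrative; the shader derives its inputs from noise instead of taking them as arguments.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def faceforward(n, i, nref):
    """Same rule as the HLSL intrinsic: return n if dot(i, nref) < 0, else -n."""
    return list(n) if dot(i, nref) < 0.0 else [-c for c in n]

def pick_sample_point(u, theta, normal, radius, scale=1.0):
    """u in [-1, 1] and theta in [0, 2*pi) select a point on the unit sphere."""
    ring = math.sqrt(max(0.0, 1.0 - u * u))
    v = [ring * math.cos(theta), ring * math.sin(theta), u]
    v = [c * scale for c in v]                       # pull some samples inward
    v = faceforward(v, [-c for c in normal], v)      # flip into the normal's hemisphere
    return [c * radius for c in v]

rng = random.Random(7)
normal = [0.0, 0.0, 1.0]
points = [
    pick_sample_point(rng.uniform(-1.0, 1.0), rng.uniform(0.0, 2.0 * math.pi),
                      normal, radius=0.5, scale=math.sqrt((i % 3 + 1) / 3.0))
    for i in range(100)
]
print(points[0])
```

Every generated offset lies on the normal's side of the surface and within the radius, which is the property the AO loop relies on.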

Create SSAO.shader and add the following code. As before, adjust the #include paths to match your project.

Shader "Hidden/Custom RP/SSAO"

{

SubShader{

HLSLINCLUDE

#include "../ShaderLibrary/Common.hlsl"

#include "../ShaderLibrary/SSAO.hlsl"

ENDHLSL

Pass{

Name "SSAO"

Cull Off

ZTest Always

ZWrite Off

HLSLPROGRAM

#pragma vertex DefaultPassVertex

#pragma fragment SSAOFragment

ENDHLSL

}

}

}

Assign SSAO.shader in the post FX settings asset:

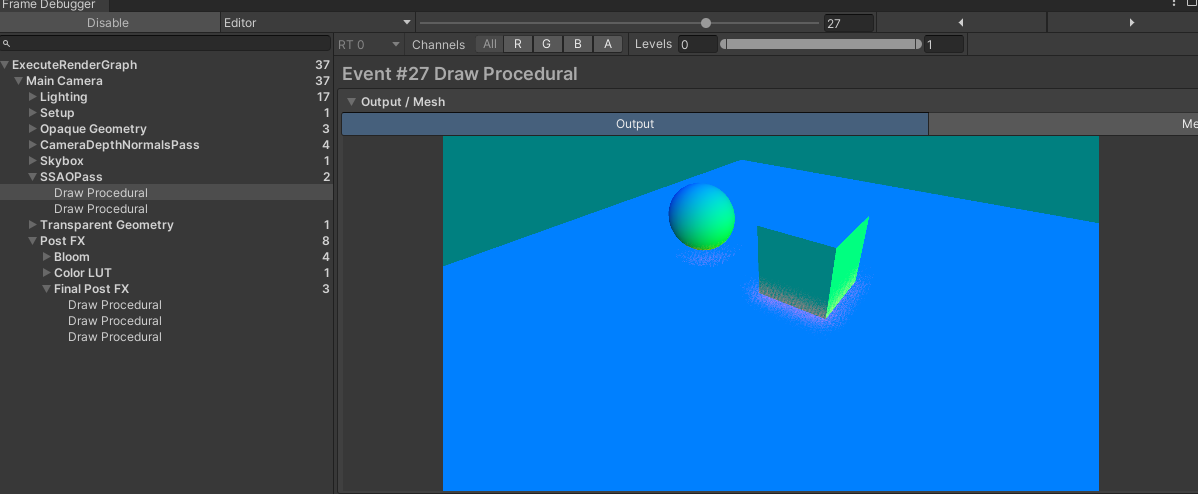

2.3 效果

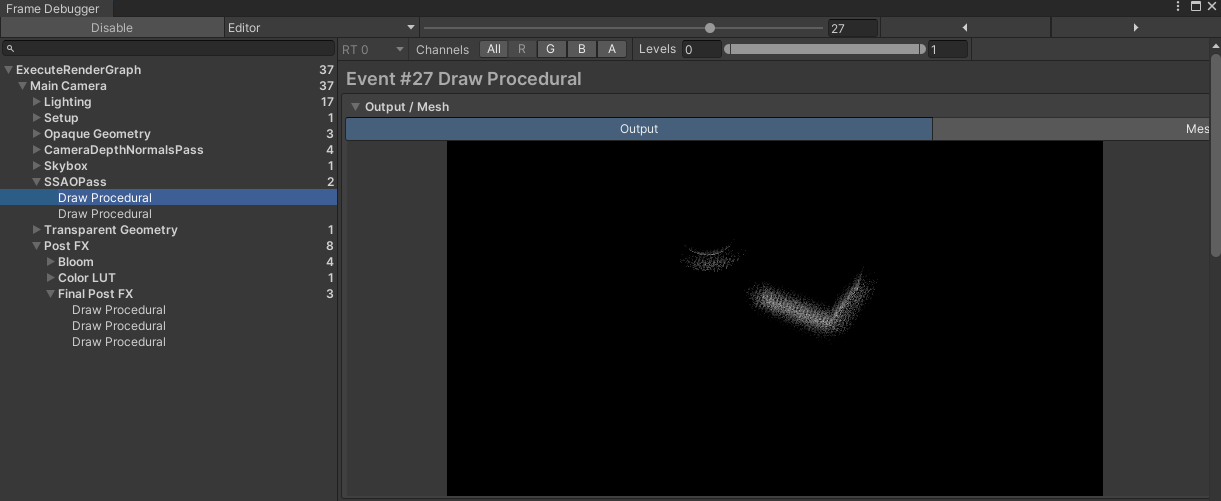

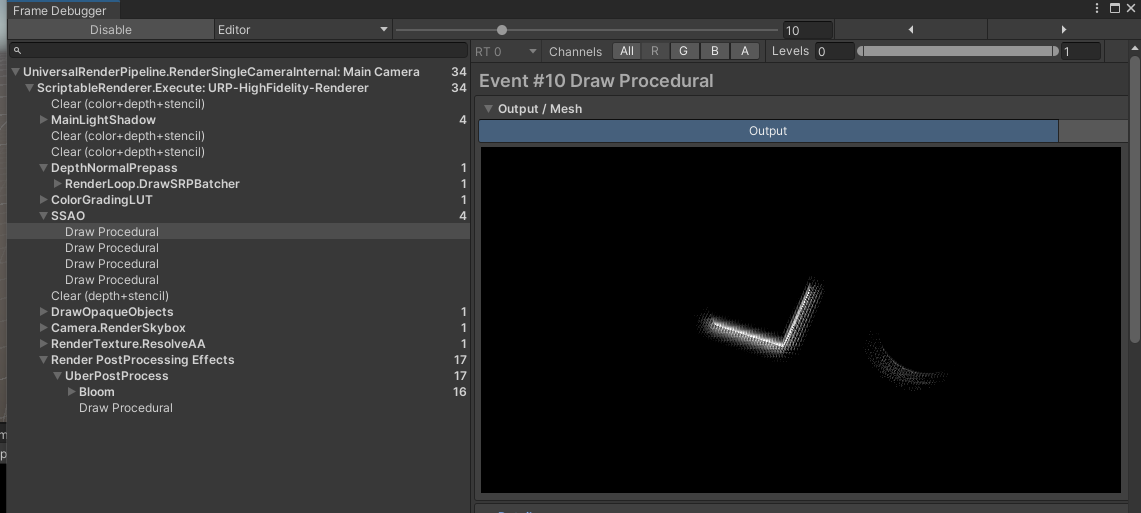

R通道对应的AO值:

GBA通道对应的每个屏幕像素点的法线值

URP自带的SSAO

R通道

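The results still differ, since this is only a simplified version. The order also differs from Unity's: URP generates the AO texture before rendering the objects, whereas here it is generated after they are rendered, mainly because Unity packs the depth value into the same texture when it renders the normals. This does not affect AO generation, though. The core of AO generation is still the same, and tuning the result mostly comes down to that part.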
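The core referred to here is the per-sample term in SSAOFragment and the final shaping of the sum. A Python sketch of that arithmetic follows, with the constants copied from SSAO.hlsl. The function names and the example inputs are illustrative assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sample_contribution(difference, normal, depth, k_beta=0.004, k_epsilon=0.0001):
    """Occlusion from one sample: cosine-weighted, falling off with squared distance."""
    numerator = max(dot(difference, normal) - k_beta * depth, 0.0)
    return numerator / (dot(difference, difference) + k_epsilon)

def finalize_ao(ao_sum, radius, intensity, falloff_distance, depth, sample_count):
    """Apply radius, intensity, distance falloff, averaging and the 0.6 power curve."""
    falloff = 1.0 - depth / falloff_distance
    falloff *= falloff
    value = ao_sum * radius * intensity * falloff / sample_count
    value = min(max(value, 0.0), 1.0)  # saturate
    return value ** 0.6

normal = (0.0, 1.0, 0.0)
near_occluder = sample_contribution((0.0, 0.1, 0.0), normal, depth=1.0)
far_occluder = sample_contribution((0.0, 0.5, 0.0), normal, depth=1.0)
behind_surface = sample_contribution((0.0, -0.1, 0.0), normal, depth=1.0)
print(near_occluder, far_occluder, behind_surface)
print(finalize_ao(near_occluder, 0.5, 1.0, 100.0, 1.0, 3))
```

Occluders close to the shaded point and in front of the surface dominate, while geometry behind the tangent plane contributes nothing. The kBeta * depth bias is what suppresses self-occlusion on flat surfaces at a distance.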

3. Blurring the AO texture and applying it

3.1 CPU side

Add the following code to SSAOPass.cs:

public class SSAOPass

{

........

TextureHandle SSAOBaseText, depthAttachmentHandle, colorAttachmentHandle;

........

void Render(RenderGraphContext context)

{

........

buffer.DrawProcedural(Matrix4x4.identity, SSAOParams.SSAOMaterial, (int)SSAOPassEnum.SSAO, MeshTopology.Triangles, 3);

//Final

buffer.SetGlobalTexture(SSAOBaseTexture, SSAOBaseText);

buffer.SetRenderTarget(colorAttachmentHandle, RenderBufferLoadAction.Load, RenderBufferStoreAction.Store);

buffer.DrawProcedural(Matrix4x4.identity, SSAOParams.SSAOMaterial, (int)SSAOPassEnum.Final, MeshTopology.Triangles, 3);

........

}

public static void Record(RenderGraph renderGraph, Camera camera, Vector2Int BufferSize, SSAOParams SSAOParams,

in CameraRendererTextures textures)

{

........

// Declare how the pass reads and writes each texture

pass.SSAOBaseText = builder.ReadWriteTexture(renderGraph.CreateTexture(desc));

pass.depthAttachmentHandle = builder.ReadTexture(textures.depthAttachment);

pass.colorAttachmentHandle = builder.ReadWriteTexture(textures.colorAttachment);

........

}

}

3.2 Shader side

Modify SSAO.hlsl and add the following code:

#ifndef SSAO_INCLUDED

#define SSAO_INCLUDED

.......

half4 PackAONormal(half ao, half3 n)

{

n *= 0.5;

n += 0.5;

return half4(ao, n);

}

half3 GetPackedNormal(half4 p)

{

return p.gba * 2.0 - 1.0;

}

half GetPackedAO(half4 p)

{

return p.r;

}

half CompareNormal(half3 d1, half3 d2)

{

return smoothstep(kGeometryCoeff, 1.0, dot(d1, d2));

}

...........

half BlurSmall(const float2 uv, const float2 delta)

{

half4 p0 = SAMPLE_BASE(uv);

half4 p1 = SAMPLE_BASE(uv + float2(-delta.x, -delta.y));

half4 p2 = SAMPLE_BASE(uv + float2(delta.x, -delta.y));

half4 p3 = SAMPLE_BASE(uv + float2(-delta.x, delta.y));

half4 p4 = SAMPLE_BASE(uv + float2(delta.x, delta.y));

half3 n0 = GetPackedNormal(p0);

half w0 = 1.0;

half w1 = CompareNormal(n0, GetPackedNormal(p1));

half w2 = CompareNormal(n0, GetPackedNormal(p2));

half w3 = CompareNormal(n0, GetPackedNormal(p3));

half w4 = CompareNormal(n0, GetPackedNormal(p4));

half s = 0.0;

s += GetPackedAO(p0) * w0;

s += GetPackedAO(p1) * w1;

s += GetPackedAO(p2) * w2;

s += GetPackedAO(p3) * w3;

s += GetPackedAO(p4) * w4;

return s *= rcp(w0 + w1 + w2 + w3 + w4);

}

half4 FinalBlur(Varyings input) : SV_Target

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

const float2 uv = input.screenUV;

const float2 delta = _CameraBufferSize.xy * rcp(DOWNSAMPLE);

return 1.0 - BlurSmall(uv, delta);

}

half4 frag_final(Varyings input) : SV_TARGET{

half ao = FinalBlur(input).r;

return half4(0.0, 0.0, 0.0, ao);

}

#endif
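BlurSmall is a normal-aware blur: neighbours only contribute when their normal is close to the centre pixel's normal, which keeps AO from bleeding across edges. Below is a minimal Python sketch of that weighting, using the same 0.8 threshold as kGeometryCoeff. The sample tuples and names are illustrative.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def smoothstep(edge0, edge1, x):
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)

def compare_normal(n1, n2, geometry_coeff=0.8):
    """Weight 1 for matching normals, 0 once the cosine drops to geometry_coeff."""
    return smoothstep(geometry_coeff, 1.0, dot(n1, n2))

def blur_small(center, neighbors):
    """Each sample is (ao, normal); the centre always has weight 1."""
    center_ao, center_normal = center
    total, weight_sum = center_ao, 1.0
    for ao, normal in neighbors:
        weight = compare_normal(center_normal, normal)
        total += ao * weight
        weight_sum += weight
    return total / weight_sum

up = (0.0, 1.0, 0.0)
side = (1.0, 0.0, 0.0)
same_surface = blur_small((1.0, up), [(0.0, up)] * 4)
across_edge = blur_small((1.0, up), [(0.0, side)] * 4)
print(same_surface, across_edge)
```

On a continuous surface the four neighbours are averaged in, while across a hard edge they are ignored and the centre value is kept.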

Modify SSAO.shader and add the following code:

Shader "Hidden/Custom RP/SSAO"

{

SubShader{

..........

Pass

{

ZTest NotEqual

ZWrite Off

Cull Off

Blend One SrcAlpha, Zero One

BlendOp Add, Add

Name "Final Blur"

HLSLPROGRAM

#pragma vertex DefaultPassVertex

#pragma fragment frag_final

ENDHLSL

}

}

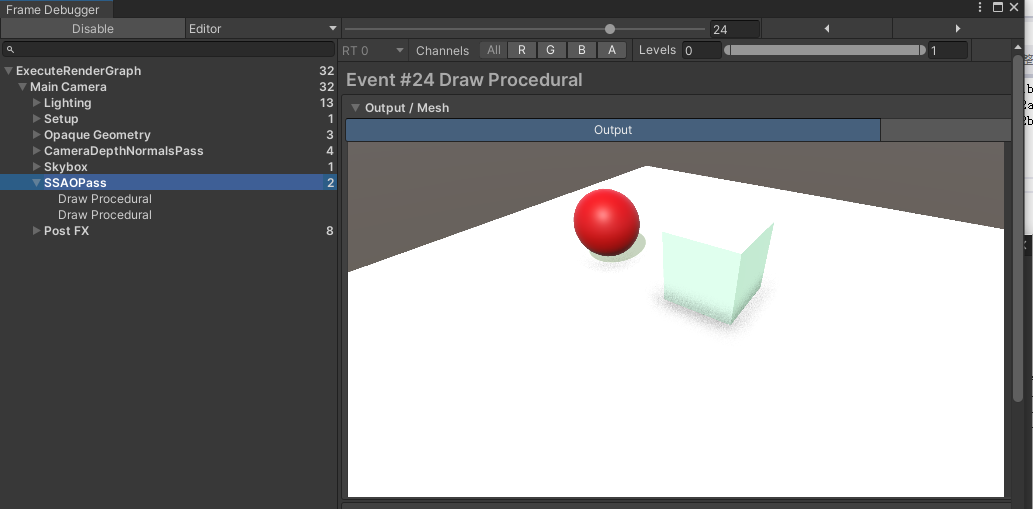

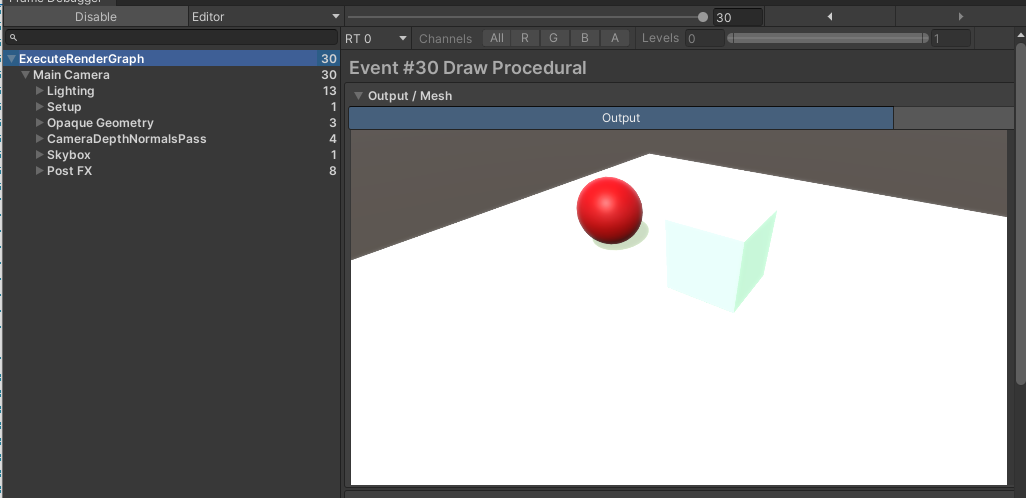

}3.3 效果

关闭SSAO
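How the Final pass darkens the image follows from its blend state, Blend One SrcAlpha, Zero One, combined with frag_final returning (0, 0, 0, 1 - blurred AO). The Python sketch below spells out that blend arithmetic; the function name and the sample colours are illustrative.

```python
def blend_final(dst, blurred_ao):
    """Apply 'Blend One SrcAlpha, Zero One' to the output of frag_final."""
    src = (0.0, 0.0, 0.0, 1.0 - blurred_ao)
    # Colour channels: src * One + dst * SrcAlpha
    rgb = tuple(src[i] * 1.0 + dst[i] * src[3] for i in range(3))
    # Alpha channel: src * Zero + dst * One
    alpha = src[3] * 0.0 + dst[3] * 1.0
    return rgb + (alpha,)

lit_pixel = (0.8, 0.6, 0.4, 1.0)
print(blend_final(lit_pixel, 0.0))
print(blend_final(lit_pixel, 0.25))
```

The colour attachment is multiplied by 1 - AO and its alpha is left untouched, so a pixel with no occlusion passes through unchanged.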

V. Complete code

SSAOPass.cs

using UnityEngine;

using UnityEngine.Experimental.Rendering;

using UnityEngine.Experimental.Rendering.RenderGraphModule;

using UnityEngine.Rendering;

public class SSAOPass

{

static readonly ProfilingSampler sampler = new("SSAOPass");

static readonly int

ProjectionParams2 = Shader.PropertyToID("_ProjectionParams2"),

CameraViewTopLeftCorner = Shader.PropertyToID("_CameraViewTopLeftCorner"),

CameraViewXExtent = Shader.PropertyToID("_CameraViewXExtent"),

CameraViewYExtent = Shader.PropertyToID("_CameraViewYExtent"),

SSAOPramasID = Shader.PropertyToID("_SSAOPramas"),

SSAODepthTexture = Shader.PropertyToID("_SSAODepthTexture"),

SSAOBaseTexture = Shader.PropertyToID("_SSAOBaseTexture");

TextureHandle SSAOBaseText, depthAttachmentHandle, colorAttachmentHandle;

Camera Camera;

SSAOParams SSAOParams;

public enum SSAOPassEnum

{

SSAO,

Final,

//SSAO_Bilateral_HorizontalBlur,

//SSAO_Bilateral_VerticalBlur,

//SSAO_Bilateral_FinalBlur,

}

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

Matrix4x4 view = Camera.worldToCameraMatrix;

Matrix4x4 proj = Camera.projectionMatrix;

// Zero out the translation of the view matrix so the results are world-space vectors relative to the camera

Matrix4x4 cview = view;

cview.SetColumn(3, new Vector4(0.0f, 0.0f, 0.0f, 1.0f));

Matrix4x4 cviewProj = proj * cview;

// Inverse of the view-projection matrix: transforms from clip space back to world space

Matrix4x4 cviewProjInv = cviewProj.inverse;

// World-space positions of the near-plane corners

var near = Camera.nearClipPlane;

Vector4 topLeftCorner = cviewProjInv.MultiplyPoint(new Vector4(-1.0f, 1.0f, -1.0f, 1.0f));

Vector4 topRightCorner = cviewProjInv.MultiplyPoint(new Vector4(1.0f, 1.0f, -1.0f, 1.0f));

Vector4 bottomLeftCorner = cviewProjInv.MultiplyPoint(new Vector4(-1.0f, -1.0f, -1.0f, 1.0f));

// Direction vectors spanning the camera's near plane

Vector4 cameraXExtent = topRightCorner - topLeftCorner;

Vector4 cameraYExtent = bottomLeftCorner - topLeftCorner;

// Set the shader parameters and render the AO texture

buffer.SetGlobalVector(CameraViewTopLeftCorner, topLeftCorner);

buffer.SetGlobalVector(CameraViewXExtent, cameraXExtent);

buffer.SetGlobalVector(CameraViewYExtent, cameraYExtent);

buffer.SetGlobalVector(ProjectionParams2, new Vector4(1.0f / near, Camera.transform.position.x, Camera.transform.position.y, Camera.transform.position.z));

buffer.SetGlobalVector(SSAOPramasID, new Vector4(SSAOParams.RADIUS, SSAOParams.INTENSITY, SSAOParams.DOWNSAMPLE, SSAOParams.FALLOFF));

buffer.SetGlobalTexture(SSAODepthTexture, depthAttachmentHandle);

buffer.SetRenderTarget(SSAOBaseText, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.Store);

buffer.DrawProcedural(Matrix4x4.identity, SSAOParams.SSAOMaterial, (int)SSAOPassEnum.SSAO, MeshTopology.Triangles, 3);

//Final

buffer.SetGlobalTexture(SSAOBaseTexture, SSAOBaseText);

buffer.SetRenderTarget(colorAttachmentHandle, RenderBufferLoadAction.Load, RenderBufferStoreAction.Store);

buffer.DrawProcedural(Matrix4x4.identity, SSAOParams.SSAOMaterial, (int)SSAOPassEnum.Final, MeshTopology.Triangles, 3);

context.renderContext.ExecuteCommandBuffer(buffer);

buffer.Clear();

}

public static void Record(RenderGraph renderGraph, Camera camera, Vector2Int BufferSize, SSAOParams SSAOParams,

in CameraRendererTextures textures)

{

using RenderGraphBuilder builder = renderGraph.AddRenderPass(sampler.name, out SSAOPass pass, sampler);

pass.Camera = camera;

pass.SSAOParams = SSAOParams;

var desc = new TextureDesc(BufferSize)

{

colorFormat = GraphicsFormat.R8G8B8A8_UNorm,

name = "SSAOBase"

};

// Declare how the pass reads and writes each texture

pass.SSAOBaseText = builder.ReadWriteTexture(renderGraph.CreateTexture(desc));

pass.depthAttachmentHandle = builder.ReadTexture(textures.depthAttachment);

pass.colorAttachmentHandle = builder.ReadWriteTexture(textures.colorAttachment);

// Read the normal texture generated earlier

builder.ReadTexture(textures.depthNormalAttachment);

builder.SetRenderFunc<SSAOPass>(

static (pass, context) => pass.Render(context));

}

}

SSAO.hlsl

#ifndef SSAO_INCLUDED

#define SSAO_INCLUDED

TEXTURE2D(_SSAODepthTexture);

SAMPLER(sampler_SSAODepthTexture);

TEXTURE2D(_SSAOBaseTexture);

SAMPLER(sampler_SSAOBaseTexture);

#define SAMPLE_NORMAL(uv) half4(SAMPLE_TEXTURE2D_LOD(_CameraDepthNormalsTexture, sampler_linear_clamp, uv, 0))

#define SAMPLE_BASE(uv) half4(SAMPLE_TEXTURE2D_LOD(_SSAOBaseTexture, sampler_SSAOBaseTexture, uv, 0))

static const int SAMPLE_COUNT = 3;

static const float SKY_DEPTH_VALUE = 0.00001;

static const half kGeometryCoeff = half(0.8);

static const half kBeta = half(0.004);

static const half kEpsilon = half(0.0001);

float4 _ProjectionParams2;

float4 _CameraViewTopLeftCorner;

float4 _CameraViewXExtent;

float4 _CameraViewYExtent;

float4 _SSAOPramas;

#define RADIUS _SSAOPramas.x

#define INTENSITY _SSAOPramas.y

#define DOWNSAMPLE _SSAOPramas.z

#define FALLOFF _SSAOPramas.w

struct Varyings {

float4 positionCS_SS : SV_POSITION;

float2 screenUV : VAR_SCREEN_UV;

};

half4 PackAONormal(half ao, half3 n)

{

n *= 0.5;

n += 0.5;

return half4(ao, n);

}

half3 GetPackedNormal(half4 p)

{

return p.gba * 2.0 - 1.0;

}

half GetPackedAO(half4 p)

{

return p.r;

}

half CompareNormal(half3 d1, half3 d2)

{

return smoothstep(kGeometryCoeff, 1.0, dot(d1, d2));

}

Varyings DefaultPassVertex(uint vertexID : SV_VertexID) {

Varyings output;

output.positionCS_SS = float4(

vertexID <= 1 ? -1.0 : 3.0,

vertexID == 1 ? 3.0 : -1.0,

0.0, 1.0

);

output.screenUV = float2(

vertexID <= 1 ? 0.0 : 2.0,

vertexID == 1 ? 2.0 : 0.0

);

if (_ProjectionParams.x < 0.0) {

output.screenUV.y = 1.0 - output.screenUV.y;

}

return output;

}

// From the linear depth and screen UV, reconstruct the world-space offset vector from the camera to the surface point

half3 ReconstructViewPos(float2 uv, float linearEyeDepth) {

// Screen is y-inverted

uv.y = 1.0 - uv.y;

float zScale = linearEyeDepth * _ProjectionParams2.x; // divide by near plane

float3 viewPos = _CameraViewTopLeftCorner.xyz + _CameraViewXExtent.xyz * uv.x + _CameraViewYExtent.xyz * uv.y;

viewPos *= zScale;

return viewPos;

}

float Random(float2 p) {

return frac(sin(dot(p, float2(12.9898, 78.233))) * 43758.5453);

}

// Pick a random point in the hemisphere

half3 PickSamplePoint(float2 uv, int sampleIndex, half rcpSampleCount, half3 normal) {

// A handful of random numbers

half gn = InterleavedGradientNoise(uv * _ScreenParams.xy, sampleIndex);

half u = frac(Random(half2(0.0, sampleIndex)) + gn) * 2.0 - 1.0;

half theta = Random(half2(1.0, sampleIndex) + gn) * TWO_PI;

half u2 = sqrt(1.0 - u * u);

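// A random point on the full sphere

half3 v = half3(u2 * cos(theta), u2 * sin(theta), u);

v *= sqrt((sampleIndex + 1.0) * rcpSampleCount); // later samples reach farther out; the +1 keeps sample 0 from collapsing to a zero offset

// Flip the point into the hemisphere around the normal

// https://thebookofshaders.com/glossary/?search=faceforward

v = faceforward(v, -normal, v); // make sure v points to the same side as normal

// Scale to [0, RADIUS]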

v *= RADIUS;

return v;

}

half4 SSAOFragment(Varyings input) : SV_TARGET{

// Sample the depth

float depth = SAMPLE_DEPTH_TEXTURE_LOD(_SSAODepthTexture, sampler_SSAODepthTexture, input.screenUV, 0);

if (depth < SKY_DEPTH_VALUE) {

return PackAONormal(0,0);

}

depth = IsOrthographicCamera() ? OrthographicDepthBufferToLinear(depth) : LinearEyeDepth(depth, _ZBufferParams);

// Sample the normal

half4 normal = SAMPLE_NORMAL(input.screenUV);

float3 vpos = ReconstructViewPos(input.screenUV, depth);

const half rcpSampleCount = rcp(SAMPLE_COUNT);

half ao = 0.0;

UNITY_UNROLL

for (int i = 0; i < SAMPLE_COUNT; i++) {

// Pick a random point in the positive hemisphere

half3 offset = PickSamplePoint(input.screenUV, i, rcpSampleCount, normal);

half3 vpos2 = vpos + offset;

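// Transform the sample point from world space to clip space

half4 spos2 = mul(UNITY_MATRIX_VP, vpos2);

// Screen UV of the sample point

half2 uv2 = half2(spos2.x, spos2.y * _ProjectionParams.x) / spos2.w * 0.5 + 0.5;

// Depth stored in the depth texture at the sample point's UV

float rawDepth2 = SAMPLE_DEPTH_TEXTURE_LOD(_SSAODepthTexture, sampler_point_clamp, uv2, 0);

float linearDepth2 = LinearEyeDepth(rawDepth2, _ZBufferParams);

// Range check: only count the sample if the stored depth lies within RADIUS of the sample point

half IsInsideRadius = abs(spos2.w - linearDepth2) < RADIUS ? 1.0 : 0.0;

// The larger the angle between the ray and the surface normal, the smaller the contribution

half3 difference = ReconstructViewPos(uv2, linearDepth2) - vpos; // vector from the shaded point to the occluder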

half inten = max(dot(difference, normal) - kBeta * depth, 0.0) * rcp(dot(difference, difference) + kEpsilon);

ao += inten * IsInsideRadius;

}

// Strength

ao *= RADIUS;

// Calculate falloff...

half falloff = 1.0f - depth * half(rcp(FALLOFF));

falloff = falloff * falloff;

ao = PositivePow(saturate(ao * INTENSITY * falloff * rcpSampleCount), 0.6);

return PackAONormal(ao, normal);

}

half4 Blur(const float2 uv, const float2 delta) : SV_Target

{

half4 p0 = SAMPLE_BASE(uv);

half4 p1a = SAMPLE_BASE(uv - delta * 1.3846153846);

half4 p1b = SAMPLE_BASE(uv + delta * 1.3846153846);

half4 p2a = SAMPLE_BASE(uv - delta * 3.2307692308);

half4 p2b = SAMPLE_BASE(uv + delta * 3.2307692308);

half3 n0 = GetPackedNormal(p0);

half w0 = half(0.2270270270);

half w1a = CompareNormal(n0, GetPackedNormal(p1a)) * half(0.3162162162);

half w1b = CompareNormal(n0, GetPackedNormal(p1b)) * half(0.3162162162);

half w2a = CompareNormal(n0, GetPackedNormal(p2a)) * half(0.0702702703);

half w2b = CompareNormal(n0, GetPackedNormal(p2b)) * half(0.0702702703);

half s = half(0.0);

s += GetPackedAO(p0) * w0;

s += GetPackedAO(p1a) * w1a;

s += GetPackedAO(p1b) * w1b;

s += GetPackedAO(p2a) * w2a;

s += GetPackedAO(p2b) * w2b;

s *= rcp(w0 + w1a + w1b + w2a + w2b);

return PackAONormal(s, n0);

}

half BlurSmall(const float2 uv, const float2 delta)

{

half4 p0 = SAMPLE_BASE(uv);

half4 p1 = SAMPLE_BASE(uv + float2(-delta.x, -delta.y));

half4 p2 = SAMPLE_BASE(uv + float2(delta.x, -delta.y));

half4 p3 = SAMPLE_BASE(uv + float2(-delta.x, delta.y));

half4 p4 = SAMPLE_BASE(uv + float2(delta.x, delta.y));

half3 n0 = GetPackedNormal(p0);

half w0 = 1.0;

half w1 = CompareNormal(n0, GetPackedNormal(p1));

half w2 = CompareNormal(n0, GetPackedNormal(p2));

half w3 = CompareNormal(n0, GetPackedNormal(p3));

half w4 = CompareNormal(n0, GetPackedNormal(p4));

half s = 0.0;

s += GetPackedAO(p0) * w0;

s += GetPackedAO(p1) * w1;

s += GetPackedAO(p2) * w2;

s += GetPackedAO(p3) * w3;

s += GetPackedAO(p4) * w4;

return s *= rcp(w0 + w1 + w2 + w3 + w4);

}

half4 HorizontalBlur(Varyings input) : SV_TARGET{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

const float2 uv = input.screenUV;

const float2 delta = float2(_CameraBufferSize.x * rcp(DOWNSAMPLE), 0.0);

return Blur(uv, delta);

}

half4 VerticalBlur(Varyings input) : SV_Target

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

const float2 uv = input.screenUV;

const float2 delta = float2(0.0, _CameraBufferSize.y * rcp(DOWNSAMPLE));

return Blur(uv, delta);

}

half4 FinalBlur(Varyings input) : SV_Target

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

const float2 uv = input.screenUV;

const float2 delta = _CameraBufferSize.xy * rcp(DOWNSAMPLE);

return 1.0 - BlurSmall(uv, delta);

}

half4 frag_final(Varyings input) : SV_TARGET{

half ao = FinalBlur(input).r;

return half4(0.0, 0.0, 0.0, ao);

}

#endif

CameraDepthNormalsPass.cs

using UnityEngine;

using UnityEngine.Experimental.Rendering;

using UnityEngine.Experimental.Rendering.RenderGraphModule;

using UnityEngine.Rendering;

using UnityEngine.Rendering.RendererUtils;

public class CameraDepthNormalsPass

{

static readonly ProfilingSampler sampler = new("CameraDepthNormalsPass");

static readonly ShaderTagId[] shaderTagIds = {

new("CameraDepthNormals")

};

static readonly int

CameraDepthNormalsID = Shader.PropertyToID("_CameraDepthNormalsTexture");

TextureHandle CameraDepthNormals,TempDepth;

RendererListHandle list;

void Render(RenderGraphContext context)

{

CommandBuffer buffer = context.cmd;

// Render into the normal texture; a temporary texture stands in for the depth buffer

buffer.SetRenderTarget(

CameraDepthNormals, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.Store

, TempDepth, RenderBufferLoadAction.DontCare, RenderBufferStoreAction.DontCare);

buffer.ClearRenderTarget(true, true, Color.clear);

buffer.DrawRendererList(list);

// Bind the normal texture globally so the later AO pass can sample it

buffer.SetGlobalTexture(CameraDepthNormalsID, CameraDepthNormals);

context.renderContext.ExecuteCommandBuffer(buffer);

buffer.Clear();

}

public static void Record(RenderGraph renderGraph, Camera camera, CullingResults cullingResults,

bool useHDR, int renderingLayerMask, Vector2Int attachmentSize, in CameraRendererTextures textures)

{

using RenderGraphBuilder builder =

renderGraph.AddRenderPass(sampler.name, out CameraDepthNormalsPass pass, sampler);

// Draw opaque objects using their [CameraDepthNormals] shader pass

pass.list = builder.UseRendererList(renderGraph.CreateRendererList(

new RendererListDesc(shaderTagIds, cullingResults, camera)

{

sortingCriteria = SortingCriteria.CommonOpaque,

rendererConfiguration = PerObjectData.None,

renderQueueRange = RenderQueueRange.opaque,

renderingLayerMask = (uint)renderingLayerMask

}));

pass.CameraDepthNormals = builder.ReadWriteTexture(textures.depthNormalAttachment);

var dnTD = new TextureDesc(attachmentSize)

{

colorFormat = SystemInfo.GetGraphicsFormat(useHDR ? DefaultFormat.HDR : DefaultFormat.LDR),

depthBufferBits = DepthBits.Depth32,

name = "TempDepth"

};

pass.TempDepth = builder.ReadWriteTexture(renderGraph.CreateTexture(dnTD));

builder.SetRenderFunc<CameraDepthNormalsPass>(

static (pass, context) => pass.Render(context));

}

}

CameraDepthNormals.hlsl

#ifndef CAMERA_DEPTH_NORMALS_INCLUDED

#define CAMERA_DEPTH_NORMALS_INCLUDED

#include "Surface.hlsl"

#include "Shadows.hlsl"

#include "Light.hlsl"

#include "BRDF.hlsl"

struct Attributes {

float3 positionOS : POSITION;

float3 normalOS : NORMAL;

float4 tangentOS : TANGENT;

float2 baseUV : TEXCOORD0;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct Varyings {

float4 positionCS_SS : SV_POSITION;

float3 positionWS : VAR_POSITION;

float3 normalWS : VAR_NORMAL;

#if defined(_NORMAL_MAP)

float4 tangentWS : VAR_TANGENT;

#endif

float2 baseUV : VAR_BASE_UV;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

Varyings CameraDepthNormalsPassVertex(Attributes input) {

Varyings output;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_TRANSFER_INSTANCE_ID(input, output);

output.positionWS = TransformObjectToWorld(input.positionOS);

output.positionCS_SS = TransformWorldToHClip(output.positionWS);

output.normalWS = TransformObjectToWorldNormal(input.normalOS);

#if defined(_NORMAL_MAP)

output.tangentWS = float4(

TransformObjectToWorldDir(input.tangentOS.xyz), input.tangentOS.w

);

#endif

output.baseUV = TransformBaseUV(input.baseUV);

return output;

}

float4 CameraDepthNormalsPassFragment(Varyings input) : SV_TARGET{

UNITY_SETUP_INSTANCE_ID(input);

InputConfig config = GetInputConfig(input.positionCS_SS, input.baseUV);

#if defined(_NORMAL_MAP)

float3 normal = NormalTangentToWorld(

GetNormalTS(config),

input.normalWS, input.tangentWS

);

#else

float3 normal = normalize(input.normalWS);

#endif

return float4(normal, 0.0);

}

#endif
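
A note on the constants in the Blur function of the SSAO shader above: the offsets 1.3846153846 and 3.2307692308 and the weights 0.2270270270, 0.3162162162 and 0.0702702703 match the common trick of collapsing a 9-tap Gaussian into 5 bilinear fetches. The Python sketch below is not part of the project. It assumes the underlying kernel is the order-12 binomial row with the two outermost coefficients on each side dropped (an assumption, not something the source states), and the function name `linear_sampled_gaussian` is made up for illustration. It only checks that this assumption reproduces the shader's numbers.

```python
from math import comb


def linear_sampled_gaussian(order=12, dropped=2):
    """Merge neighbouring taps of a binomial kernel into bilinear fetches.

    Returns (offsets, weights) for the centre tap and each merged pair,
    measured in texels from the centre. Assumed kernel: binomial row
    `order` with `dropped` coefficients removed from each end.
    """
    row = [comb(order, k) for k in range(order + 1)]
    kept = row[dropped:len(row) - dropped]      # 9 coefficients for 12 / 2
    total = sum(kept)
    center = len(kept) // 2
    # Normalised one-sided weights: index 0 is the centre tap.
    discrete = [kept[center + i] / total for i in range(center + 1)]

    offsets, weights = [0.0], [discrete[0]]
    # Pair taps (1, 2) and (3, 4): one bilinear fetch placed at the
    # weight-averaged position returns the same weighted sum as two fetches.
    for i in range(1, center, 2):
        w = discrete[i] + discrete[i + 1]
        weights.append(w)
        offsets.append((i * discrete[i] + (i + 1) * discrete[i + 1]) / w)
    return offsets, weights


if __name__ == "__main__":
    offsets, weights = linear_sampled_gaussian()
    for o, w in zip(offsets, weights):
        print(f"offset {o:.10f}  weight {w:.10f}")
```

The unmodified weights sum to 1 (centre plus both sides). In the shader each side weight is additionally multiplied by CompareNormal, so the sum can drop below 1 near geometric edges, which is why Blur divides by w0 + w1a + w1b + w2a + w2b at the end.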
