Contents

2.3.5.4 sentinelRedisInstance structure analysis

2.3.5.5 Sentinel Redis Instance

2.3.5.6 createSentinelRedisInstance#loadServerConfig

2.3.5.7 sentinelGetMasterByName#loadServerConfig

2.4.1 sentinelCheckTiltCondition-TILT mode check

2.4.2 sentinelHandleDictOfRedisInstances-running periodic tasks

2.4.2.1 sentinelHandleRedisInstance-handling a Redis instance

2.4.2.1.1 sentinelReconnectInstance-re-establishing connections

2.4.2.1.1.2 The redisAsyncContext struct

2.4.2.1.1.4 Attaching the Redis async connection context to the event loop

2.4.2.1.2 sentinelSendPeriodicCommands-sending monitoring commands

2.4.2.1.3 sentinelCheckSubjectivelyDown-checking whether an instance is subjectively down

2.4.2.1.4 sentinelCheckObjectivelyDown-checking whether a master is objectively down

2.4.2.1.5 sentinelFailoverStateMachine-running the failover of a master

2.4.2.1.5.1 sentinelFailoverWaitStart-failover start

2.4.2.1.5.2 sentinelFailoverSelectSlave-selecting the replica to promote

2.4.2.1.5.3 sentinelFailoverSendSlaveOfNoOne-turning the replica into a master

2.4.2.1.5.4 sentinelFailoverReconfNextSlave-reconfiguring replicas to sync with the new master

2.4.2.1.6 sentinelAskMasterStateToOtherSentinels-updating the master's state

2.4.2.2 sentinelHandleDictOfRedisInstances-handling the master/replica switch

2.4.3 sentinelRunPendingScripts-running scripts

2.4.4 sentinelCollectTerminatedScripts-cleaning up scripts

2.4.4 sentinelKillTimedoutScripts-killing timed-out scripts

0. References

1. Quick review

1.1 Configuration file

# Monitor a Redis master named mymaster at 127.0.0.1:6379 with a quorum of 2

sentinel monitor mymaster 127.0.0.1 6379 2

# If this sentinel gets no valid PING reply from mymaster within 60 s, it considers mymaster down

sentinel down-after-milliseconds mymaster 60000

# Failover timeout: 180 s

sentinel failover-timeout mymaster 180000

# After a failover, the number of replicas that resync with the new master at the same time is 1,
# i.e. the replicas resync one by one: the next one starts only after the previous one has finished

sentinel parallel-syncs mymaster 1

# Monitor a Redis master named resque at 192.168.1.3:6380 with a quorum of 4

sentinel monitor resque 192.168.1.3 6380 4

sentinel down-after-milliseconds resque 10000

sentinel failover-timeout resque 180000

sentinel parallel-syncs resque 5

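In Sentinel, quorum has two meanings.

First meaning: when a sentinel believes that a master it monitors is down, Redis marks this state as S_DOWN, i.e. subjectively down. If quorum is set to 2, then once two sentinels agree that a master is down, the master is marked O_DOWN, i.e. objectively down. A failover can start only after a master has been marked objectively down.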

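Second meaning: suppose there are 5 sentinels and quorum is 4. First, 4 sentinels must agree before the master is considered objectively down. Second, when the failover starts, one of the 5 sentinels must be elected leader to carry out the failover. A candidate needs 4 votes to win, not 3 (a simple majority of the sentinels).

1.2 Sentinel startup modes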

A sentinel can be started directly with the redis-server command:

./redis-server ../sentinel.conf --sentinel

or

./redis-sentinel ../sentinel.conf

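Note: the sentinel configuration file must be writable (Sentinel rewrites it at runtime to persist its state).

Question: what if I want to customize the persistence strategy and similar settings? How is that done in sentinel mode? The startup commands above don't seem to specify anything like that.

It actually doesn't matter. A typical deployment is simply three ordinary redis-server processes plus three processes running in sentinel mode (or three redis-server processes plus three redis-sentinel processes). Persistence is configured in each data node's own redis.conf, and the sentinel processes hold no data. Silly me.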

2. Source code analysis

2.1 Relevant source files

E:\004-代码\007-redis\redis-6.0.8.tar\redis-6.0.8\redis-6.0.8\src\server.h

E:\004-代码\007-redis\redis-6.0.8.tar\redis-6.0.8\redis-6.0.8\src\server.c

E:\004-代码\007-redis\redis-6.0.8.tar\redis-6.0.8\redis-6.0.8\src\sentinel.c

2.2 sentinelcmds

struct redisCommand sentinelcmds[] = {

{"ping",pingCommand,1,"",0,NULL,0,0,0,0,0},

{"sentinel",sentinelCommand,-2,"",0,NULL,0,0,0,0,0},

{"subscribe",subscribeCommand,-2,"",0,NULL,0,0,0,0,0},

{"unsubscribe",unsubscribeCommand,-1,"",0,NULL,0,0,0,0,0},

{"psubscribe",psubscribeCommand,-2,"",0,NULL,0,0,0,0,0},

{"punsubscribe",punsubscribeCommand,-1,"",0,NULL,0,0,0,0,0},

{"publish",sentinelPublishCommand,3,"",0,NULL,0,0,0,0,0},

{"info",sentinelInfoCommand,-1,"",0,NULL,0,0,0,0,0},

{"role",sentinelRoleCommand,1,"ok-loading",0,NULL,0,0,0,0,0},

{"client",clientCommand,-2,"read-only no-script",0,NULL,0,0,0,0,0},

{"shutdown",shutdownCommand,-1,"",0,NULL,0,0,0,0,0},

{"auth",authCommand,2,"no-auth no-script ok-loading ok-stale fast",0,NULL,0,0,0,0,0},

{"hello",helloCommand,-2,"no-auth no-script fast",0,NULL,0,0,0,0,0}

};

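As the table shows, only a handful of commands can be executed in a sentinel. Here we focus on the command that only sentinels have: SENTINEL. As with any other command, executing it calls its handler, sentinelCommand. Its most important forms are described below.

1) SENTINEL MASTERS: returns information about all masters monitored by this sentinel.

2) SENTINEL MASTER <name>: returns information about the named master.

3) SENTINEL SLAVES <master-name>: returns information about all replicas of the named master.

4) SENTINEL SENTINELS <master-name>: returns information about the other sentinels monitoring the named master.

5) SENTINEL IS-MASTER-DOWN-BY-ADDR <ip> <port> <current-epoch> <runid>: if runid is *, returns whether this sentinel considers the master at the given IP and port subjectively down. If runid is the ID of a sentinel, this sentinel also casts its leader-election vote for that runid.

6) SENTINEL RESET <pattern>: resets every master monitored by this sentinel whose name matches pattern. Its state is cleared and all connections are re-established.

7) SENTINEL GET-MASTER-ADDR-BY-NAME <master-name>: returns the IP and port of the named master.

8) SENTINEL FAILOVER <master-name>: forces a manual failover of the named master.

9) SENTINEL MONITOR <name> <ip> <port> <quorum>: makes this sentinel start monitoring a master.

10) SENTINEL FLUSHCONFIG: rewrites the configuration file to disk.

11) SENTINEL REMOVE <name>: stops monitoring the named master.

12) SENTINEL CKQUORUM <name>: checks whether the currently reachable sentinels are enough to reach two thresholds: the configured quorum (the number needed to declare O_DOWN) and the majority needed to authorize a failover.

13) SENTINEL SET <mastername> [<option> <value> ...]: sets parameters of the named master (for example timeouts).

14) SENTINEL SIMULATE-FAILURE <flag> <flag> ... <flag>: simulates a crash. flag can be crash-after-election or crash-after-promotion. The first makes the sentinel crash right after it is elected leader for a failover; the second makes it crash right after it promotes the selected replica to master.

This section introduced the main features of Sentinel through a common deployment. It then described how Sentinel is implemented in Redis by tracing the sentinel startup process and the failover process, and finally introduced the commonly used SENTINEL commands.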

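2.3 Main program startup flow

2.3.1 Outline of the main flow

When the service is started with

./redis-server ../sentinel.conf --sentinel

it runs as a redis-server in sentinel mode.

[Redis 6.0.8]

// In main(), the only sentinel-specific work is initialization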

int main(int argc, char **argv) {

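/* Note: this check actually lives in serverLogRaw(), not main(): in sentinel

 * mode, log lines are tagged with the role character 'X'.

 * if (server.sentinel_mode) role_char = 'X'; */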

...

/* Check whether we start in sentinel mode; there are two ways to do so */

server.sentinel_mode = checkForSentinelMode(argc,argv); // line 5141

....

if (server.sentinel_mode) {

initSentinelConfig(); // initialize the sentinel config: listening port and protected mode

initSentinel(); // initialize the sentinel state, line 5160

}

....

...

// sentinelHandleConfiguration (reached through loadServerConfig) parses the config file and initializes the sentinel state

loadServerConfig(configfile,options);// line 5233 main->loadServerConfig->loadServerConfigFromString->sentinelHandleConfiguration

...

if (!server.sentinel_mode) {

// Outside sentinel mode: load the AOF and RDB files, print memory warnings, load cluster data, and so on.

} else {

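InitServerLast(); // needed in sentinel mode too: starts the background (bio) threads and I/O threads

sentinelIsRunning(); // line 5295: generates a random 40-character sentinel ID if none is configured, and logs it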

}

...

}

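The process above can be roughly divided into four steps:

- check whether sentinel mode is enabled;

- initialize the sentinel configuration and the sentinel state;

- parse the configuration file and initialize from it;

- perform the final server initialization and get ready to start the sentinel's work.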

2.3.2 checkForSentinelMode

/* Returns 1 if there is --sentinel among the arguments or if

* argv[0] contains "redis-sentinel". */

int checkForSentinelMode(int argc, char **argv) {

int j;

if (strstr(argv[0],"redis-sentinel") != NULL) return 1;

for (j = 1; j < argc; j++)

if (!strcmp(argv[j],"--sentinel")) return 1;

return 0;

}

2.3.3 initSentinelConfig

Explanation of protected-mode in the configuration file:

# Protected mode is a layer of security protection, in order to avoid that

# Redis instances left open on the internet are accessed and exploited.

#

# When protected mode is on and if:

#

# 1) The server is not binding explicitly to a set of addresses using the

# "bind" directive.

# 2) No password is configured.

#

# The server only accepts connections from clients connecting from the

# IPv4 and IPv6 loopback addresses 127.0.0.1 and ::1, and from Unix domain

# sockets.

#

# By default protected mode is enabled. You should disable it only if

# you are sure you want clients from other hosts to connect to Redis

# even if no authentication is configured, nor a specific set of interfaces

# are explicitly listed using the "bind" directive.

protected-mode yes

Definition of REDIS_SENTINEL_PORT in sentinel.c:

#define REDIS_SENTINEL_PORT 26379

struct redisServer {

...

int protected_mode; /* Don't accept external connections. */

...

}

/* This function overwrites a few normal Redis config default with Sentinel

* specific defaults. */

void initSentinelConfig(void) {

server.port = REDIS_SENTINEL_PORT; /* Default Sentinel port, overriding the normal server default */

server.protected_mode = 0; /* Sentinel must be exposed. */

}

2.3.4 initSentinel

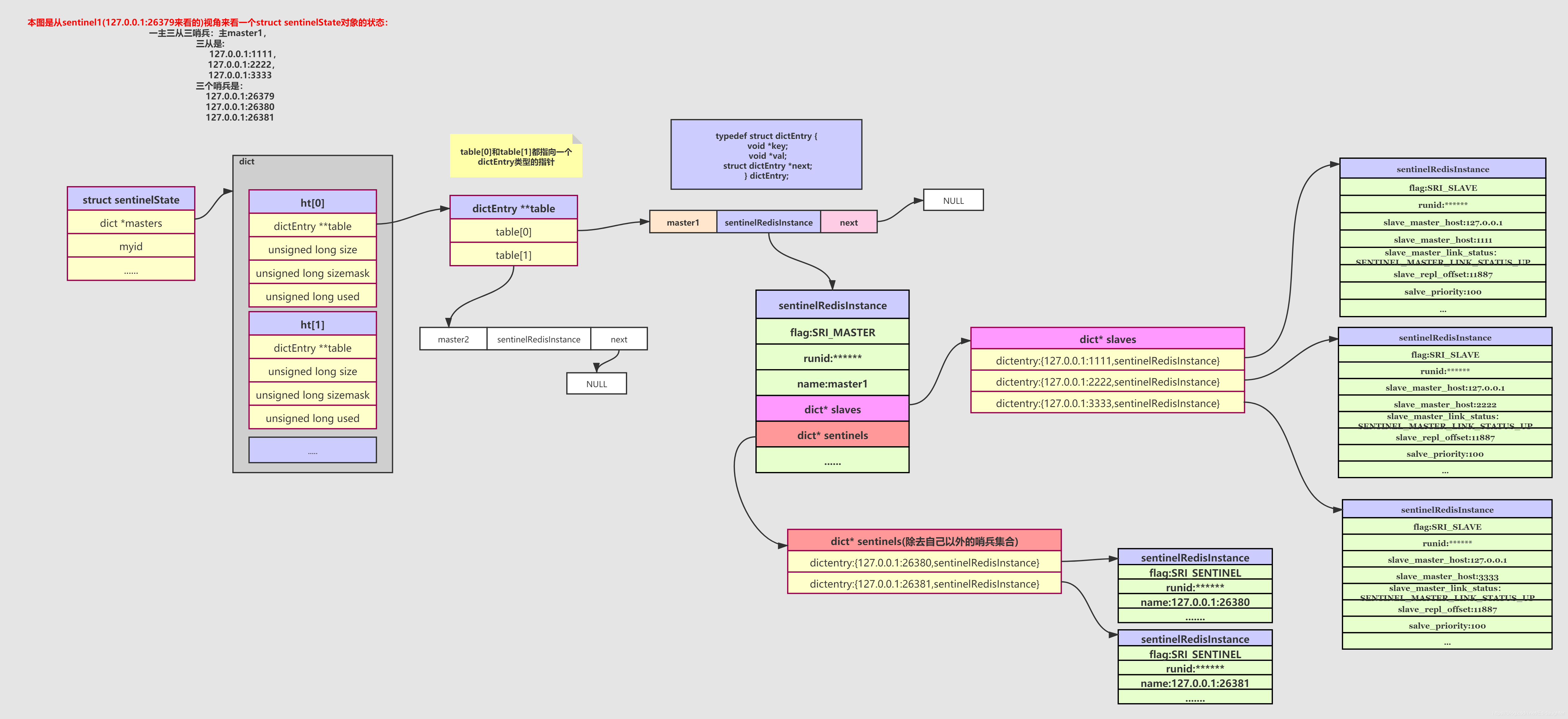

1. First, the definition of the sentinel state

/* Main state. */

struct sentinelState {

/* ID of this sentinel */

char myid[CONFIG_RUN_ID_SIZE+1]; /* This sentinel ID. */

/* Current epoch */

uint64_t current_epoch; /* Current epoch. */

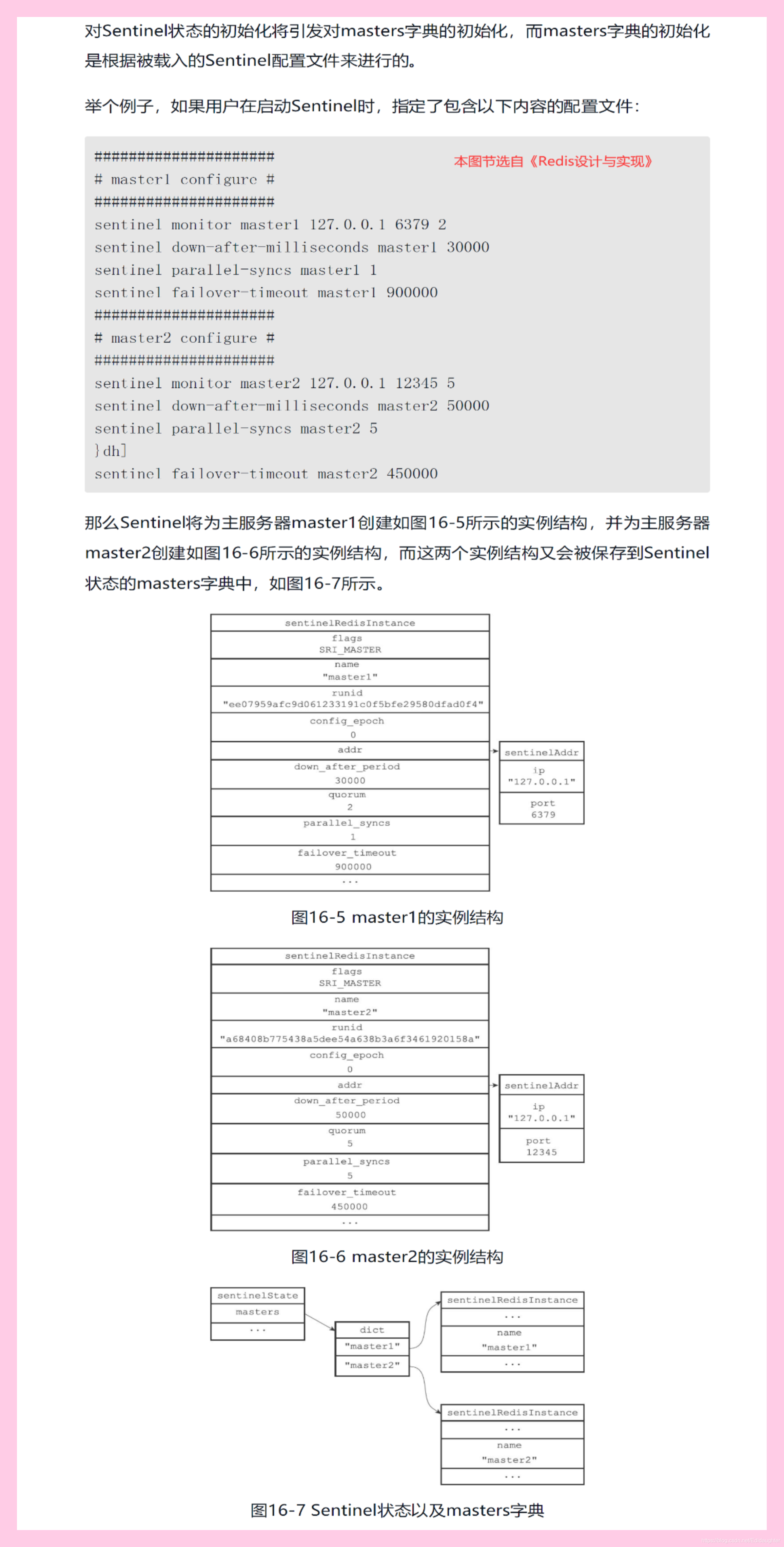

/*

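Dictionary of masters monitored by this sentinel. The key is the master instance name and the value is a pointer

to a sentinelRedisInstance structure, so several masters can be monitored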

*/

dict *masters; /* Dictionary of master sentinelRedisInstances.

Key is the instance name, value is the

sentinelRedisInstance structure pointer. */

int tilt; /* Are we in TILT mode? */

/* Number of scripts currently running */

int running_scripts; /* Number of scripts in execution right now. */

mstime_t tilt_start_time; /* When TITL started. */

mstime_t previous_time; /* Last time we ran the time handler. */

/* Queue of user scripts waiting to be executed */

list *scripts_queue; /* Queue of user scripts to execute. */

char *announce_ip; /* IP addr that is gossiped to other sentinels if

not NULL. */

int announce_port; /* Port that is gossiped to other sentinels if

non zero. */

unsigned long simfailure_flags; /* Failures simulation. */

int deny_scripts_reconfig; /* Allow SENTINEL SET ... to change script

paths at runtime? */

} sentinel;

2. The implementation of initSentinel

/* Perform the Sentinel mode initialization. */

void initSentinel(void) {

unsigned int j;

/* Clear the command table, then add only the sentinel-mode commands */

/* Remove usual Redis commands from the command table, then just add

* the SENTINEL command. */

dictEmpty(server.commands,NULL);

for (j = 0; j < sizeof(sentinelcmds)/sizeof(sentinelcmds[0]); j++) {

int retval;

struct redisCommand *cmd = sentinelcmds+j;

retval = dictAdd(server.commands, sdsnew(cmd->name), cmd);

serverAssert(retval == DICT_OK);

/* Translate the command string flags description into an actual

* set of flags. */

if (populateCommandTableParseFlags(cmd,cmd->sflags) == C_ERR)

serverPanic("Unsupported command flag");

}

/* Initialize various data structures. */

/* Initialize the sentinel-mode data structures */

/* Current epoch */

sentinel.current_epoch = 0;

/* Dict of monitored masters */

sentinel.masters = dictCreate(&instancesDictType,NULL);

/* TILT mode flag */

sentinel.tilt = 0;

/* TILT mode start time */

sentinel.tilt_start_time = 0;

/* Last time the timer handler ran */

sentinel.previous_time = mstime();

/* Number of running scripts */

sentinel.running_scripts = 0;

/* Queue of user scripts */

sentinel.scripts_queue = listCreate();

/* IP/port this sentinel announces to other sentinels in its hello messages (unset by default) */

sentinel.announce_ip = NULL;

sentinel.announce_port = 0;

/* Failure simulation flags */

sentinel.simfailure_flags = SENTINEL_SIMFAILURE_NONE;

/* Whether changing script paths at runtime (SENTINEL SET) is denied */

sentinel.deny_scripts_reconfig = SENTINEL_DEFAULT_DENY_SCRIPTS_RECONFIG;

/* myid: all zeros means "not set yet" */

memset(sentinel.myid,0,sizeof(sentinel.myid));

memset(sentinel.myid,0,sizeof(sentinel.myid));

}

2.3.5 loadServerConfig

2.3.5.1 Implementation of loadServerConfig

/* Load the server configuration from the specified filename.

* The function appends the additional configuration directives stored

* in the 'options' string to the config file before loading.

*

* Both filename and options can be NULL, in such a case are considered

* empty. This way loadServerConfig can be used to just load a file or

* just load a string. */

void loadServerConfig(char *filename, char *options) {

sds config = sdsempty();

char buf[CONFIG_MAX_LINE+1];

/* Load the file content */

if (filename) {

FILE *fp;

if (filename[0] == '-' && filename[1] == '\0') {

fp = stdin;

} else {

if ((fp = fopen(filename,"r")) == NULL) {

serverLog(LL_WARNING,

"Fatal error, can't open config file '%s': %s",

filename, strerror(errno));

exit(1);

}

}

while(fgets(buf,CONFIG_MAX_LINE+1,fp) != NULL)

config = sdscat(config,buf);

if (fp != stdin) fclose(fp);

}

/* Append the additional options */

if (options) {

config = sdscat(config,"\n");

config = sdscat(config,options);

}

loadServerConfigFromString(config); // the key step

sdsfree(config);

}

2.3.5.2 Implementation of loadServerConfigFromString

void loadServerConfigFromString(char *config) {

...

for (i = 0; i < totlines; i++) {

...

else if (!strcasecmp(argv[0],"sentinel")) {

/* argc == 1 is handled by main() as we need to enter the sentinel

* mode ASAP. */

if (argc != 1) {

if (!server.sentinel_mode) {

err = "sentinel directive while not in sentinel mode";

goto loaderr;

}

err = sentinelHandleConfiguration(argv+1,argc-1);

if (err) goto loaderr;

}

}

}

...

}

2.3.5.3 Implementation of sentinelHandleConfiguration

/* ============================ Config handling ============================= */

char *sentinelHandleConfiguration(char **argv, int argc) {

sentinelRedisInstance *ri;

if (!strcasecmp(argv[0],"monitor") && argc == 5) {

/* monitor <name> <host> <port> <quorum> */

int quorum = atoi(argv[4]);

if (quorum <= 0) return "Quorum must be 1 or greater.";

if (createSentinelRedisInstance(argv[1],SRI_MASTER,argv[2],

atoi(argv[3]),quorum,NULL) == NULL)

{

switch(errno) {

case EBUSY: return "Duplicated master name.";

case ENOENT: return "Can't resolve master instance hostname.";

case EINVAL: return "Invalid port number";

}

}

} else if (!strcasecmp(argv[0],"down-after-milliseconds") && argc == 3) {

/* down-after-milliseconds <name> <milliseconds> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->down_after_period = atoi(argv[2]);

if (ri->down_after_period <= 0)

return "negative or zero time parameter.";

sentinelPropagateDownAfterPeriod(ri);

} else if (!strcasecmp(argv[0],"failover-timeout") && argc == 3) {

/* failover-timeout <name> <milliseconds> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->failover_timeout = atoi(argv[2]);

if (ri->failover_timeout <= 0)

return "negative or zero time parameter.";

} else if (!strcasecmp(argv[0],"parallel-syncs") && argc == 3) {

/* parallel-syncs <name> <milliseconds> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->parallel_syncs = atoi(argv[2]);

} else if (!strcasecmp(argv[0],"notification-script") && argc == 3) {

/* notification-script <name> <path> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

if (access(argv[2],X_OK) == -1)

return "Notification script seems non existing or non executable.";

ri->notification_script = sdsnew(argv[2]);

} else if (!strcasecmp(argv[0],"client-reconfig-script") && argc == 3) {

/* client-reconfig-script <name> <path> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

if (access(argv[2],X_OK) == -1)

return "Client reconfiguration script seems non existing or "

"non executable.";

ri->client_reconfig_script = sdsnew(argv[2]);

} else if (!strcasecmp(argv[0],"auth-pass") && argc == 3) {

/* auth-pass <name> <password> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->auth_pass = sdsnew(argv[2]);

} else if (!strcasecmp(argv[0],"auth-user") && argc == 3) {

/* auth-user <name> <username> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->auth_user = sdsnew(argv[2]);

} else if (!strcasecmp(argv[0],"current-epoch") && argc == 2) {

/* current-epoch <epoch> */

unsigned long long current_epoch = strtoull(argv[1],NULL,10);

if (current_epoch > sentinel.current_epoch)

sentinel.current_epoch = current_epoch;

} else if (!strcasecmp(argv[0],"myid") && argc == 2) {

if (strlen(argv[1]) != CONFIG_RUN_ID_SIZE)

return "Malformed Sentinel id in myid option.";

memcpy(sentinel.myid,argv[1],CONFIG_RUN_ID_SIZE);

} else if (!strcasecmp(argv[0],"config-epoch") && argc == 3) {

/* config-epoch <name> <epoch> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->config_epoch = strtoull(argv[2],NULL,10);

/* The following update of current_epoch is not really useful as

* now the current epoch is persisted on the config file, but

* we leave this check here for redundancy. */

if (ri->config_epoch > sentinel.current_epoch)

sentinel.current_epoch = ri->config_epoch;

} else if (!strcasecmp(argv[0],"leader-epoch") && argc == 3) {

/* leader-epoch <name> <epoch> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->leader_epoch = strtoull(argv[2],NULL,10);

} else if ((!strcasecmp(argv[0],"known-slave") ||

!strcasecmp(argv[0],"known-replica")) && argc == 4)

{

sentinelRedisInstance *slave;

/* known-replica <name> <ip> <port> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

if ((slave = createSentinelRedisInstance(NULL,SRI_SLAVE,argv[2],

atoi(argv[3]), ri->quorum, ri)) == NULL)

{

return "Wrong hostname or port for replica.";

}

} else if (!strcasecmp(argv[0],"known-sentinel") &&

(argc == 4 || argc == 5)) {

sentinelRedisInstance *si;

if (argc == 5) { /* Ignore the old form without runid. */

/* known-sentinel <name> <ip> <port> [runid] */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

if ((si = createSentinelRedisInstance(argv[4],SRI_SENTINEL,argv[2],

atoi(argv[3]), ri->quorum, ri)) == NULL)

{

return "Wrong hostname or port for sentinel.";

}

si->runid = sdsnew(argv[4]);

sentinelTryConnectionSharing(si);

}

} else if (!strcasecmp(argv[0],"rename-command") && argc == 4) {

/* rename-command <name> <command> <renamed-command> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

sds oldcmd = sdsnew(argv[2]);

sds newcmd = sdsnew(argv[3]);

if (dictAdd(ri->renamed_commands,oldcmd,newcmd) != DICT_OK) {

sdsfree(oldcmd);

sdsfree(newcmd);

return "Same command renamed multiple times with rename-command.";

}

} else if (!strcasecmp(argv[0],"announce-ip") && argc == 2) {

/* announce-ip <ip-address> */

if (strlen(argv[1]))

sentinel.announce_ip = sdsnew(argv[1]);

} else if (!strcasecmp(argv[0],"announce-port") && argc == 2) {

/* announce-port <port> */

sentinel.announce_port = atoi(argv[1]);

} else if (!strcasecmp(argv[0],"deny-scripts-reconfig") && argc == 2) {

/* deny-scripts-reconfig <yes|no> */

if ((sentinel.deny_scripts_reconfig = yesnotoi(argv[1])) == -1) {

return "Please specify yes or no for the "

"deny-scripts-reconfig options.";

}

} else {

return "Unrecognized sentinel configuration statement.";

}

return NULL;

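}

In essence, sentinelHandleConfiguration takes the parameters parsed from the configuration file and stores them in the sentinelRedisInstance * variable ri. Global settings such as current-epoch, myid, announce-ip and announce-port go into the global sentinel state instead. So next we need to look closely at how the sentinelRedisInstance structure is defined.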

2.3.5.4 sentinelRedisInstance structure analysis

typedef struct sentinelRedisInstance {

/* Flags recording the type and state of this instance */

int flags; /* See SRI_... defines */

/* Instance name; a master's name is set by the user in the config file */

char *name; /* Master name from the point of view of this sentinel. */

/* Run ID of this instance, or the unique ID if it is a sentinel */

char *runid; /* Run ID of this instance, or unique ID if is a Sentinel.*/

/* Configuration epoch, used to implement failover */

uint64_t config_epoch; /* Configuration epoch. */

/* Instance address: IP and port */

sentinelAddr *addr; /* Master host. */

/* Link to the instance; may be shared among Sentinels */

instanceLink *link; /* Link to the instance, may be shared for Sentinels. */

/* Last time a hello was sent via Pub/Sub */

mstime_t last_pub_time; /* Last time we sent hello via Pub/Sub. */

/* Last time a hello was received from this Sentinel; only meaningful when SRI_SENTINEL is set */

mstime_t last_hello_time; /* Only used if SRI_SENTINEL is set. Last time

we received a hello from this Sentinel

via Pub/Sub. */

/* Time of the last reply to SENTINEL is-master-down */

mstime_t last_master_down_reply_time; /* Time of last reply to

SENTINEL is-master-down command. */

/* Time since which the instance has been subjectively down */

mstime_t s_down_since_time; /* Subjectively down since time. */

/* Time since which the instance has been objectively down */

mstime_t o_down_since_time; /* Objectively down since time. */

/* Milliseconds without a valid reply after which the instance is considered subjectively down; set by SENTINEL down-after-milliseconds */

mstime_t down_after_period; /* Consider it down after that period. */

/* Time at which the INFO reply was received from the instance */

mstime_t info_refresh; /* Time at which we received INFO output from it. */

/* Commands renamed on this instance */

dict *renamed_commands; /* Commands renamed in this instance:

Sentinel will use the alternative commands

mapped on this table to send things like

SLAVEOF, CONFING, INFO, ... */

/* Role and the first time we observed it.

* This is useful in order to delay replacing what the instance reports

* with our own configuration. We need to always wait some time in order

* to give a chance to the leader to report the new configuration before

* we do silly things. */

/* Role reported by the instance */

int role_reported;

/* Time at which the reported role was observed */

mstime_t role_reported_time;

/* Last time the replica's master address changed */

mstime_t slave_conf_change_time; /* Last time slave master addr changed. */

/* Master specific. */

/*---------------------------------- Master-specific fields ----------------------------------*/

/* Other Sentinels monitoring the same master */

dict *sentinels; /* Other sentinels monitoring the same master. */

/*

If this instance is a master, slaves holds all of its replica instances;

the key is the replica's name (ip:port), the value is the replica's sentinelRedisInstance

*/

dict *slaves; /* Slaves for this master instance. */

/*

Number of sentinels that must agree to mark this master objectively down:

the <quorum> in SENTINEL monitor <master-name> <ip> <port> <quorum>

*/

unsigned int quorum;/* Number of sentinels that need to agree on failure. */

/*

Number of replicas that may sync with the new master at the same time during a failover:

the <number> in sentinel parallel-syncs <master-name> <number>

*/

int parallel_syncs; /* How many slaves to reconfigure at same time. */

/* Password used to AUTH against the master and its replicas */

char *auth_pass; /* Password to use for AUTH against master & replica. */

/* Username for ACL authentication against the master and its replicas */

char *auth_user; /* Username for ACLs AUTH against master & replica. */

/* Slave specific. */

/*---------------------------------- Replica-specific fields ----------------------------------*/

/* Time at which the replica's replication link went down */

mstime_t master_link_down_time; /* Slave replication link down time. */

/* Replica priority as reported by INFO */

int slave_priority; /* Slave priority according to its INFO output. */

/* During failover, time at which SLAVEOF <new> was sent to the replica */

mstime_t slave_reconf_sent_time; /* Time at which we sent SLAVE OF <new> */

/* If this instance is a replica, master points to the master instance it belongs to */

struct sentinelRedisInstance *master; /* Master instance if it's slave. */

/* Master IP as reported in the INFO reply */

char *slave_master_host; /* Master host as reported by INFO */

/* Master port as reported in the INFO reply */

int slave_master_port; /* Master port as reported by INFO */

/* Master link status as reported in the INFO reply */

int slave_master_link_status; /* Master link status as reported by INFO */

/* Replica replication offset */

unsigned long long slave_repl_offset; /* Slave replication offset. */

/* Failover */

/*---------------------------------- Failover fields ----------------------------------*/

/*

For a master instance, leader is the runid of the Sentinel that should perform the failover;

for a Sentinel instance, leader is the runid of the Sentinel that this Sentinel voted for as leader

*/

char *leader; /* If this is a master instance, this is the runid of

the Sentinel that should perform the failover. If

this is a Sentinel, this is the runid of the Sentinel

that this Sentinel voted as leader. */

/* Epoch of the leader field */

uint64_t leader_epoch; /* Epoch of the 'leader' field. */

/* Epoch of the currently started failover */

uint64_t failover_epoch; /* Epoch of the currently started failover. */

/* Failover state */

int failover_state; /* See SENTINEL_FAILOVER_STATE_* defines. */

/* Time of the last failover state change */

mstime_t failover_state_change_time;

/* Start time of the last failover attempt */

mstime_t failover_start_time; /* Last failover attempt start time. */

/* Maximum time to refresh the failover state */

mstime_t failover_timeout; /* Max time to refresh failover state. */

/* failover_start_time value for which the failover delay has already been logged */

mstime_t failover_delay_logged; /* For what failover_start_time value we

logged the failover delay. */

/* Replica selected for promotion to new master */

struct sentinelRedisInstance *promoted_slave; /* Promoted slave instance. */

/* Scripts executed to notify admin or reconfigure clients: when they

* are set to NULL no script is executed. */

/* Path of the script that notifies the admin; NULL means no script is executed */

char *notification_script;

/* Path of the client reconfiguration script; NULL means no script is executed */

char *client_reconfig_script;

/* Cached INFO output */

sds info; /* cached INFO output */

} sentinelRedisInstance;

2.3.5.5 Sentinel Redis Instance

/* A Sentinel Redis Instance object is monitoring. */

#define SRI_MASTER (1<<0)

#define SRI_SLAVE (1<<1)

#define SRI_SENTINEL (1<<2)

#define SRI_S_DOWN (1<<3) /* Subjectively down (no quorum). */

#define SRI_O_DOWN (1<<4) /* Objectively down (confirmed by others). */

#define SRI_MASTER_DOWN (1<<5) /* A Sentinel with this flag set thinks that

its master is down. */

#define SRI_FAILOVER_IN_PROGRESS (1<<6) /* Failover is in progress for

this master. */

#define SRI_PROMOTED (1<<7) /* Slave selected for promotion. */

#define SRI_RECONF_SENT (1<<8) /* SLAVEOF <newmaster> sent. */

#define SRI_RECONF_INPROG (1<<9) /* Slave synchronization in progress. */

#define SRI_RECONF_DONE (1<<10) /* Slave synchronized with new master. */

#define SRI_FORCE_FAILOVER (1<<11) /* Force failover with master up. */

#define SRI_SCRIPT_KILL_SENT (1<<12) /* SCRIPT KILL already sent on -BUSY */

2.3.5.6 createSentinelRedisInstance#loadServerConfig

/* ========================== sentinelRedisInstance ========================= */

/* Create a redis instance, the following fields must be populated by the

* caller if needed:

* runid: set to NULL but will be populated once INFO output is received.

* info_refresh: is set to 0 to mean that we never received INFO so far.

*

* If SRI_MASTER is set into initial flags the instance is added to

* sentinel.masters table.

*

* if SRI_SLAVE or SRI_SENTINEL is set then 'master' must be not NULL and the

* instance is added into master->slaves or master->sentinels table.

*

* If the instance is a slave or sentinel, the name parameter is ignored and

* is created automatically as hostname:port.

*

* The function fails if hostname can't be resolved or port is out of range.

* When this happens NULL is returned and errno is set accordingly to the

* createSentinelAddr() function.

*

* The function may also fail and return NULL with errno set to EBUSY if

* a master with the same name, a slave with the same address, or a sentinel

* with the same ID already exists. */

sentinelRedisInstance *createSentinelRedisInstance(char *name, int flags, char *hostname, int port, int quorum, sentinelRedisInstance *master) {

sentinelRedisInstance *ri;

sentinelAddr *addr;

dict *table = NULL;

char slavename[NET_PEER_ID_LEN], *sdsname;

serverAssert(flags & (SRI_MASTER|SRI_SLAVE|SRI_SENTINEL));

serverAssert((flags & SRI_MASTER) || master != NULL);

/* Check address validity. */

addr = createSentinelAddr(hostname,port);

if (addr == NULL) return NULL;

/* For slaves use ip:port as name. */

if (flags & SRI_SLAVE) {

anetFormatAddr(slavename, sizeof(slavename), hostname, port);

name = slavename;

}

/* Make sure the entry is not duplicated. This may happen when the same

* name for a master is used multiple times inside the configuration or

* if we try to add multiple times a slave or sentinel with same ip/port

* to a master. */

if (flags & SRI_MASTER) table = sentinel.masters;

else if (flags & SRI_SLAVE) table = master->slaves;

else if (flags & SRI_SENTINEL) table = master->sentinels;

sdsname = sdsnew(name);

if (dictFind(table,sdsname)) {

releaseSentinelAddr(addr);

sdsfree(sdsname);

errno = EBUSY;

return NULL;

}

/* Create the instance object. */

ri = zmalloc(sizeof(*ri));

/* Note that all the instances are started in the disconnected state,

* the event loop will take care of connecting them. */

ri->flags = flags;

ri->name = sdsname;

ri->runid = NULL;

ri->config_epoch = 0;

ri->addr = addr;

ri->link = createInstanceLink();

ri->last_pub_time = mstime();

ri->last_hello_time = mstime();

ri->last_master_down_reply_time = mstime();

ri->s_down_since_time = 0;

ri->o_down_since_time = 0;

ri->down_after_period = master ? master->down_after_period :

SENTINEL_DEFAULT_DOWN_AFTER;

ri->master_link_down_time = 0;

ri->auth_pass = NULL;

ri->auth_user = NULL;

ri->slave_priority = SENTINEL_DEFAULT_SLAVE_PRIORITY;

ri->slave_reconf_sent_time = 0;

ri->slave_master_host = NULL;

ri->slave_master_port = 0;

ri->slave_master_link_status = SENTINEL_MASTER_LINK_STATUS_DOWN;

ri->slave_repl_offset = 0;

ri->sentinels = dictCreate(&instancesDictType,NULL);

ri->quorum = quorum;

ri->parallel_syncs = SENTINEL_DEFAULT_PARALLEL_SYNCS;

ri->master = master;

ri->slaves = dictCreate(&instancesDictType,NULL);

ri->info_refresh = 0;

ri->renamed_commands = dictCreate(&renamedCommandsDictType,NULL);

/* Failover state. */

ri->leader = NULL;

ri->leader_epoch = 0;

ri->failover_epoch = 0;

ri->failover_state = SENTINEL_FAILOVER_STATE_NONE;

ri->failover_state_change_time = 0;

ri->failover_start_time = 0;

ri->failover_timeout = SENTINEL_DEFAULT_FAILOVER_TIMEOUT;

ri->failover_delay_logged = 0;

ri->promoted_slave = NULL;

ri->notification_script = NULL;

ri->client_reconfig_script = NULL;

ri->info = NULL;

/* Role */

ri->role_reported = ri->flags & (SRI_MASTER|SRI_SLAVE);

ri->role_reported_time = mstime();

ri->slave_conf_change_time = mstime();

/* Add into the right table. */

dictAdd(table, ri->name, ri);

return ri;

}

2.3.5.7 sentinelGetMasterByName#loadServerConfig

/* Master lookup by name */

/* Look up a master by name */

sentinelRedisInstance *sentinelGetMasterByName(char *name) {

sentinelRedisInstance *ri;

sds sdsname = sdsnew(name);

ri = dictFetchValue(sentinel.masters,sdsname);

sdsfree(sdsname);

return ri;

}

2.3.6 InitServerLast

2.3.7 sentinelIsRunning

/* This function gets called when the server is in Sentinel mode, started,

* loaded the configuration, and is ready for normal operations. */

void sentinelIsRunning(void) {

int j;

if (server.configfile == NULL) {

serverLog(LL_WARNING,

"Sentinel started without a config file. Exiting...");

exit(1);

} else if (access(server.configfile,W_OK) == -1) {

serverLog(LL_WARNING,

"Sentinel config file %s is not writable: %s. Exiting...",

server.configfile,strerror(errno));

exit(1);

}

/* If this Sentinel has yet no ID set in the configuration file, we

* pick a random one and persist the config on disk. From now on this

* will be this Sentinel ID across restarts. */

for (j = 0; j < CONFIG_RUN_ID_SIZE; j++)

if (sentinel.myid[j] != 0) break;

if (j == CONFIG_RUN_ID_SIZE) {

/* Pick ID and persist the config. */

getRandomHexChars(sentinel.myid,CONFIG_RUN_ID_SIZE);

sentinelFlushConfig();

}

/* Log its ID to make debugging of issues simpler. */

serverLog(LL_WARNING,"Sentinel ID is %s", sentinel.myid);

/* We want to generate a +monitor event for every configured master

* at startup. */

sentinelGenerateInitialMonitorEvents();

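}

sentinelIsRunning is simple. It mainly does the following:

- checks that the config file is writable (later steps may need to rewrite it);

- if this sentinel has no ID yet, generates a random one and persists it to the config file;

- logs the Sentinel ID;

- generates a +monitor event for every configured master.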

2.4 Sentinel work in the periodic task

// The sentinel work done in the periodic task is the core of Sentinel's logic

int serverCron(struct aeEventLoop *eventLoop, long long id, void *clientData) {

...

/* Run the Sentinel timer if we are in sentinel mode. */

if (server.sentinel_mode) sentinelTimer();

...

}

void sentinelTimer(void) {

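/* Check whether Sentinel must enter TILT mode, and record the time of this timer run */

sentinelCheckTiltCondition();

/* Recursively run the periodic operations for every master monitored by this Sentinel */

sentinelHandleDictOfRedisInstances(sentinel.masters);

/* Run the scripts waiting in the queue */

sentinelRunPendingScripts();

/* Clean up terminated scripts; scripts that failed with a retryable status are rescheduled */

sentinelCollectTerminatedScripts();

/* Kill scripts that timed out; they are collected, and possibly retried, by sentinelCollectTerminatedScripts() in a later cycle */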

sentinelKillTimedoutScripts();

/* We continuously change the frequency of the Redis "timer interrupt"

* in order to desynchronize every Sentinel from every other.

* This non-determinism avoids that Sentinels started at the same time

* exactly continue to stay synchronized asking to be voted at the

* same time again and again (resulting in nobody likely winning the

* election because of split brain voting). */

/*

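We keep changing the frequency of the Redis periodic task so that the Sentinels stay out of sync with each other. This randomness prevents

Sentinels started at the same time from staying synchronized and asking for votes at the same moment again and again,

which could leave no winner at all because the votes are split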

*/

server.hz = CONFIG_DEFAULT_HZ + rand() % CONFIG_DEFAULT_HZ;

}

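Every time serverCron runs in a sentinel, it calls sentinelTimer(). This function establishes connections, periodically sends heartbeats and collects information. The subsections below explain its work step by step.

2.4.1 sentinelCheckTiltCondition-TILT mode check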

```c
/* This function checks if we need to enter the TILT mode.
 *
 * The TILT mode is entered if we detect that between two invocations of the
 * timer interrupt, a negative amount of time, or too much time has passed.
 * Note that we expect that more or less just 100 milliseconds will pass
 * if everything is fine. However we'll see a negative number or a
 * difference bigger than SENTINEL_TILT_TRIGGER milliseconds if one of the
 * following conditions happen:
 *
 * 1) The Sentinel process for some time is blocked, for every kind of
 * random reason: the load is huge, the computer was frozen for some time
 * in I/O or alike, the process was stopped by a signal. Everything.
 * 2) The system clock was altered significantly.
 *
 * Under both these conditions we'll see everything as timed out and failing
 * without good reasons. Instead we enter the TILT mode and wait
 * for SENTINEL_TILT_PERIOD to elapse before starting to act again.
 *
 * During TILT time we still collect information, we just do not act. */
void sentinelCheckTiltCondition(void) {
    mstime_t now = mstime();
    mstime_t delta = now - sentinel.previous_time;

    if (delta < 0 || delta > SENTINEL_TILT_TRIGGER) {
        sentinel.tilt = 1;
        sentinel.tilt_start_time = mstime();
        sentinelEvent(LL_WARNING,"+tilt",NULL,"#tilt mode entered");
    }
    sentinel.previous_time = mstime();
}
```
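TILT handling is split between two places. sentinelCheckTiltCondition() decides when to enter TILT mode, and the check at the start of the acting half of sentinelHandleRedisInstance() decides when to leave it. The small state machine below models both. It assumes SENTINEL_TILT_TRIGGER = 2000 ms and SENTINEL_TILT_PERIOD = 30000 ms, which are the values in the Redis versions I know of. `tilt_state`, `tilt_tick` and `tilt_can_act` are hypothetical names.

```c
#include <stdint.h>

typedef int64_t mstime_t;

/* Assumed values; verify against sentinel.c of your Redis version. */
#define SENTINEL_TILT_TRIGGER 2000
#define SENTINEL_TILT_PERIOD  30000

typedef struct tilt_state {
    int tilt;                 /* 1 while in TILT mode */
    mstime_t tilt_start_time; /* when TILT mode was (last) entered */
    mstime_t previous_time;   /* time of the previous timer tick */
} tilt_state;

/* Mirrors sentinelCheckTiltCondition(): enter (or restart) TILT mode when
 * the clock went backwards or too much time passed since the last tick. */
static void tilt_tick(tilt_state *s, mstime_t now) {
    mstime_t delta = now - s->previous_time;
    if (delta < 0 || delta > SENTINEL_TILT_TRIGGER) {
        s->tilt = 1;
        s->tilt_start_time = now;
    }
    s->previous_time = now;
}

/* Mirrors the TILT check in sentinelHandleRedisInstance(): the acting half
 * is skipped until SENTINEL_TILT_PERIOD has elapsed, then TILT is cleared. */
static int tilt_can_act(tilt_state *s, mstime_t now) {
    if (s->tilt) {
        if (now - s->tilt_start_time < SENTINEL_TILT_PERIOD) return 0;
        s->tilt = 0;
    }
    return 1;
}
```

Any new anomaly during TILT restarts the 30 s window, because tilt_start_time is overwritten. Information keeps being collected in the meantime, as the comment above says.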

2.4.2 sentinelHandleDictOfRedisInstances-running the periodic tasks

```c
/* Perform scheduled operations for all the instances in the dictionary.
 * Recursively call the function against dictionaries of slaves. */
/* The top-level call is sentinelHandleDictOfRedisInstances(sentinel.masters):
 * every master is handled, and then the slaves and the Sentinels of each
 * master are handled recursively. */
void sentinelHandleDictOfRedisInstances(dict *instances) {
    dictIterator *di;
    dictEntry *de;
    sentinelRedisInstance *switch_to_promoted = NULL;

    /* There are a number of things we need to perform against every master. */
    /* Iterate over all the instances in the dictionary. */
    di = dictGetIterator(instances);
    while((de = dictNext(di)) != NULL) {
        sentinelRedisInstance *ri = dictGetVal(de);

        /* Run the periodic operations for instance ri. */
        sentinelHandleRedisInstance(ri);
        /* If ri is a master... */
        if (ri->flags & SRI_MASTER) {
            /* ...recursively handle the slaves of this master... */
            sentinelHandleDictOfRedisInstances(ri->slaves);
            /* ...and the Sentinels that monitor this master. */
            sentinelHandleDictOfRedisInstances(ri->sentinels);
            /* The failover of ri is complete: the slaves have been
             * reconfigured to replicate from the new master. */
            if (ri->failover_state == SENTINEL_FAILOVER_STATE_UPDATE_CONFIG) {
                /* Remember the master whose promoted slave must take over. */
                switch_to_promoted = ri;
            }
        }
    }
    /* If a master/slave switch is pending... */
    if (switch_to_promoted)
        /* ...replace the old master with the promoted slave: from now on the
         * promoted slave is the master, and the remaining slaves as well as
         * the old master are registered as its slaves. */
        sentinelFailoverSwitchToPromotedSlave(switch_to_promoted);
    dictReleaseIterator(di);
}
```

2.4.2.1 sentinelHandleRedisInstance-handling a Redis instance

```c
/* Perform scheduled operations for the specified Redis instance. */
void sentinelHandleRedisInstance(sentinelRedisInstance *ri) {
    /* ========== MONITORING HALF ============ */
    /* Every kind of instance */
    /* Create the missing network links between this Sentinel and ri:
     * the command link (cc) and the Pub/Sub link (pc). */
    sentinelReconnectInstance(ri);
    /* Periodically send PING, INFO and PUBLISH to ri. */
    sentinelSendPeriodicCommands(ri);

    /* ============== ACTING HALF ============= */
    /* We don't proceed with the acting half if we are in TILT mode.
     * TILT happens when we find something odd with the time, like a
     * sudden change in the clock. */
    if (sentinel.tilt) {
        /* The TILT period has not elapsed yet: skip the acting half. */
        if (mstime()-sentinel.tilt_start_time < SENTINEL_TILT_PERIOD) return;
        /* The TILT period is over: leave TILT mode. */
        sentinel.tilt = 0;
        sentinelEvent(LL_WARNING,"-tilt",NULL,"#tilt mode exited");
    }

    /* Every kind of instance */
    /* Check whether ri is subjectively down (SDOWN). */
    sentinelCheckSubjectivelyDown(ri);

    /* Masters and slaves */
    if (ri->flags & (SRI_MASTER|SRI_SLAVE)) {
        /* Nothing so far. */
    }

    /* Only masters */
    if (ri->flags & SRI_MASTER) {
        /* Check whether the master is objectively down (ODOWN). */
        sentinelCheckObjectivelyDown(ri);
        /* If the master is ODOWN, set up the failover state; the function
         * returns true when a failover was started. */
        if (sentinelStartFailoverIfNeeded(ri))
            /* A failover was just started: immediately (forced) send
             * SENTINEL IS-MASTER-DOWN-BY-ADDR to all the other Sentinels,
             * this time asking for their vote. */
            sentinelAskMasterStateToOtherSentinels(ri,SENTINEL_ASK_FORCED);
        /* Advance the failover state machine. */
        sentinelFailoverStateMachine(ri);
        /* In every cycle, also ask the other Sentinels about the master
         * (not forced: only when we consider it SDOWN and the last reply
         * is older than SENTINEL_ASK_PERIOD), so that their opinion stays
         * fresh for the ODOWN check. */
        sentinelAskMasterStateToOtherSentinels(ri,SENTINEL_NO_FLAGS);
    }
}
```

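This function splits the periodic work into two halves. The monitoring half runs for every instance. The acting half performs failure detection and failover, and it is skipped while the Sentinel is in TILT mode.

2.4.2.1.1 sentinelReconnectInstance-re-establishing the connection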

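The first function executed in each periodic cycle is sentinelReconnectInstance(). When the configuration is loaded, each master instance is created and added to the sentinel.masters dictionary with its connection still closed. The first task is therefore to establish network connections to the masters and to the Sentinels.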

```c
/* Create the async connections for the instance link if the link
 * is disconnected. Note that link->disconnected is true even if just
 * one of the two links (commands and pub/sub) is missing. */
void sentinelReconnectInstance(sentinelRedisInstance *ri) {
    /* The link is not disconnected: nothing to do. */
    if (ri->link->disconnected == 0) return;
    /* Invalid address: nothing to do. */
    if (ri->addr->port == 0) return; /* port == 0 means invalid address. */

    instanceLink *link = ri->link;
    mstime_t now = mstime();

    /* Attempt at most one reconnection per SENTINEL_PING_PERIOD
     * (#define SENTINEL_PING_PERIOD 1000, i.e. 1 second). */
    if (now - ri->link->last_reconn_time < SENTINEL_PING_PERIOD) return;
    /* Record the time of this reconnection attempt. */
    ri->link->last_reconn_time = now;

    /* Commands connection (cc). */
    if (link->cc == NULL) {
        /* Connect to ri's address, using NET_FIRST_BIND_ADDR as the
         * local source address. */
        link->cc = redisAsyncConnectBind(ri->addr->ip,ri->addr->port,NET_FIRST_BIND_ADDR);
        /* link->cc->err == 0 means the connect call itself succeeded.
         * If TLS is enabled for replication (server.tls_replication is
         * non-zero) and instanceLinkNegotiateTLS() returns C_ERR, TLS
         * initialization failed and the connection is closed. */
        if (!link->cc->err && server.tls_replication &&
                (instanceLinkNegotiateTLS(link->cc) == C_ERR)) {
            sentinelEvent(LL_DEBUG,"-cmd-link-reconnection",ri,"%@ #Failed to initialize TLS");
            instanceLinkCloseConnection(link,link->cc);
        } else if (link->cc->err) {
            /* A non-zero link->cc->err means the connect call failed. */
            sentinelEvent(LL_DEBUG,"-cmd-link-reconnection",ri,"%@ #%s",
                link->cc->errstr);
            instanceLinkCloseConnection(link,link->cc);
        } else {
            /* Reset the number of commands waiting for a reply. */
            link->pending_commands = 0;
            /* Record when the command connection was created. */
            link->cc_conn_time = mstime();
            /* Let the hiredis context point back to our link. */
            link->cc->data = link;
            /* Attach the connection to the server's event loop. */
            redisAeAttach(server.el,link->cc);
            /* Callback invoked when the connection is established. */
            redisAsyncSetConnectCallback(link->cc,
                    sentinelLinkEstablishedCallback);
            /* Callback invoked when the connection is closed. */
            redisAsyncSetDisconnectCallback(link->cc,
                    sentinelDisconnectCallback);
            /* Send AUTH if this Sentinel must authenticate. */
            sentinelSendAuthIfNeeded(ri,link->cc);
            /* Name the connection (CLIENT SETNAME). */
            sentinelSetClientName(ri,link->cc,"cmd");

            /* Send a PING ASAP when reconnecting. */
            sentinelSendPing(ri);
        }
    }
    /* Pub / Sub */
    /* Pub/Sub connection (pc): only for masters and slaves, and only if it
     * does not exist yet. */
    if ((ri->flags & (SRI_MASTER|SRI_SLAVE)) && link->pc == NULL) {
        /* Connect to ri's address for the Pub/Sub connection. */
        link->pc = redisAsyncConnectBind(ri->addr->ip,ri->addr->port,NET_FIRST_BIND_ADDR);
        if (!link->pc->err && server.tls_replication &&
                (instanceLinkNegotiateTLS(link->pc) == C_ERR)) {
            /* TLS initialization failed. */
            sentinelEvent(LL_DEBUG,"-pubsub-link-reconnection",ri,"%@ #Failed to initialize TLS");
        } else if (link->pc->err) {
            /* The connect call failed. */
            sentinelEvent(LL_DEBUG,"-pubsub-link-reconnection",ri,"%@ #%s",
                link->pc->errstr);
            instanceLinkCloseConnection(link,link->pc);
        } else {
            int retval;

            link->pc_conn_time = mstime();
            link->pc->data = link;
            redisAeAttach(server.el,link->pc);
            redisAsyncSetConnectCallback(link->pc,
                    sentinelLinkEstablishedCallback);
            redisAsyncSetDisconnectCallback(link->pc,
                    sentinelDisconnectCallback);
            sentinelSendAuthIfNeeded(ri,link->pc);
            sentinelSetClientName(ri,link->pc,"pubsub");
            /* Now we subscribe to the Sentinels "Hello" channel. */
            /* sentinelReceiveHelloMessages() handles the messages received
             * on this channel; this is how Sentinels monitoring the same
             * master discover each other. */
            retval = redisAsyncCommand(link->pc,
                sentinelReceiveHelloMessages, ri, "%s %s",
                sentinelInstanceMapCommand(ri,"SUBSCRIBE"),
                SENTINEL_HELLO_CHANNEL);
            if (retval != C_OK) {
                /* If we can't subscribe, the Pub/Sub connection is useless
                 * and we can simply disconnect it and try again. */
                instanceLinkCloseConnection(link,link->pc);
                return;
            }
        }
    }
    /* Clear the disconnected status only if we have both the connections
     * (or just the commands connection if this is a sentinel instance). */
    if (link->cc && (ri->flags & SRI_SENTINEL || link->pc))
        link->disconnected = 0;
}
```

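- About redisAsyncConnectBind

redisAsyncConnectBind(), which opens the connection, is the asynchronous connect function of hiredis, the official C client for Redis. Once the context has been created without error, redisAeAttach() must be called to associate the server's event loop (ae) with the connection context. This step is needed because hiredis supports several event-library adapters, including ae, libev, libevent and libuv. Attaching installs the handlers that enable and disable the readable and writable events of the connection. Next, the callbacks for connection establishment and disconnection are registered with redisAsyncSetConnectCallback() and redisAsyncSetDisconnectCallback(). This is necessary because the connection is asynchronous: redisAsyncConnectBind() returns before the TCP connection is actually established, so success or failure can only be reported later, through these callbacks.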

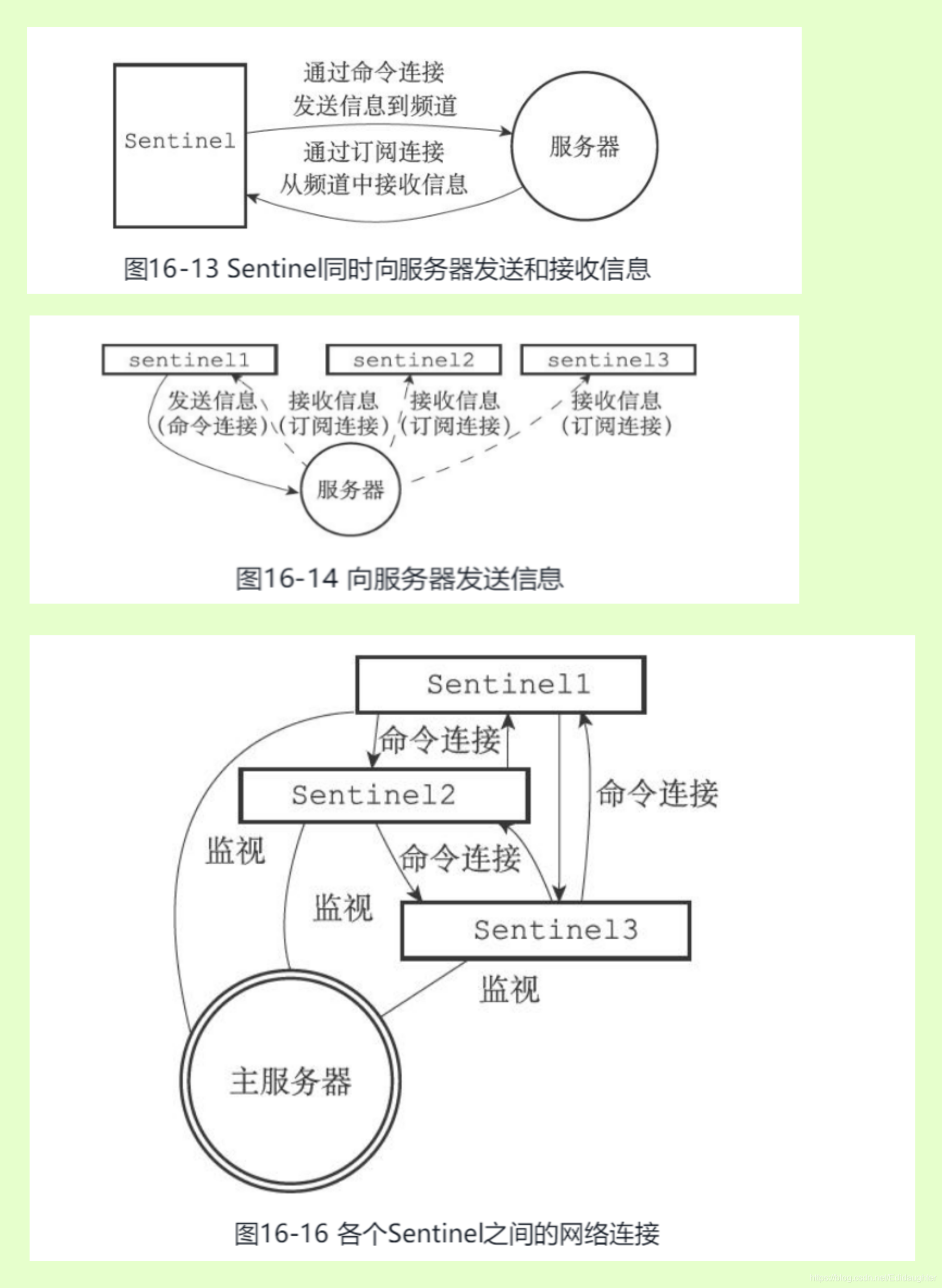

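- About the connections between roles

When a Sentinel connects to a master or a slave, it creates both a command connection and a subscription (Pub/Sub) connection. When it connects to another Sentinel, it creates only a command connection. The subscription connection is needed because a Sentinel discovers previously unknown Sentinels through the channel messages it receives from masters and slaves. Sentinels that already know each other can communicate over the command connection alone.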
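Two rules from sentinelReconnectInstance() can be condensed into two predicates: the per-role connection rule above, and the limit of one reconnection attempt per SENTINEL_PING_PERIOD. The sketch below uses the SENTINEL_PING_PERIOD value of 1000 ms given in the comment of the source above. The role enum is a simplified stand-in for the SRI_* flags, and `link_connected` and `may_try_reconnect` are hypothetical names.

```c
#include <stdint.h>

typedef int64_t mstime_t;

#define SENTINEL_PING_PERIOD 1000 /* from the comment in sentinelReconnectInstance() */

/* Simplified stand-in for the SRI_MASTER / SRI_SLAVE / SRI_SENTINEL flags. */
typedef enum { ROLE_MASTER, ROLE_SLAVE, ROLE_SENTINEL } role_t;

/* Same test as the last statement of sentinelReconnectInstance(): the
 * command link is always required; the Pub/Sub link only for masters and
 * slaves, because Sentinels are never subscribed to. */
static int link_connected(role_t role, int has_cc, int has_pc) {
    return has_cc && (role == ROLE_SENTINEL || has_pc);
}

/* At most one reconnection attempt per SENTINEL_PING_PERIOD. */
static int may_try_reconnect(mstime_t now, mstime_t last_reconn_time) {
    return now - last_reconn_time >= SENTINEL_PING_PERIOD;
}
```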

- After the command connection is established, three actions are performed:
  - sentinelSendAuthIfNeeded sends the AUTH command to authenticate. The reply callback it registers is sentinelDiscardReplyCallback, which discards the reply and decrements link->pending_commands.
  - sentinelSetClientName sends CLIENT SETNAME to name the connection. Its reply callback is also sentinelDiscardReplyCallback, which discards the reply and decrements link->pending_commands.
  - sentinelSendPing sends PING to check the state of the connection. Its reply callback is sentinelPingReplyCallback, which updates the link's interaction timestamps according to the reply.

- After the Pub/Sub connection is established, three actions are performed:
  - sentinelSendAuthIfNeeded sends AUTH to authenticate, with sentinelDiscardReplyCallback as the reply callback.
  - sentinelSetClientName sends CLIENT SETNAME to name the connection, again with sentinelDiscardReplyCallback as the reply callback.
  - redisAsyncCommand sends SUBSCRIBE to subscribe to the `__sentinel__:hello` channel. Its reply callback is sentinelReceiveHelloMessages, which uses the hello messages relayed by the instance (master or slave) to discover the other Sentinels monitoring the same master and the master configuration they announce.

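- About whether the connection was established

Once all the required connections exist, the disconnected flag is cleared to mark the link as connected. On later cycles the function first checks this flag. If the link is disconnected, the missing connections are re-created; otherwise the function returns immediately without doing anything.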
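Every command sent on a link increments link->pending_commands, and reply callbacks such as sentinelDiscardReplyCallback decrement it. sentinelSendPeriodicCommands() (2.4.2.1.2) then refuses to queue more periodic commands once the counter reaches SENTINEL_MAX_PENDING_COMMANDS * refcount. The sketch below models that bookkeeping. It assumes SENTINEL_MAX_PENDING_COMMANDS = 100, and the `mini_link` type and helper names are hypothetical.

```c
/* Assumed value; check sentinel.c of your Redis version. */
#define SENTINEL_MAX_PENDING_COMMANDS 100

typedef struct mini_link {
    int refcount;         /* number of sentinelRedisInstance owners */
    int pending_commands; /* commands sent whose reply has not arrived */
} mini_link;

/* Periodic commands are skipped while too many replies are outstanding.
 * The limit scales with refcount because a shared link serves several
 * instances. */
static int can_send_periodic(const mini_link *l) {
    return l->pending_commands < SENTINEL_MAX_PENDING_COMMANDS * l->refcount;
}

/* Called after redisAsyncCommand() returned C_OK. */
static void on_command_queued(mini_link *l) { l->pending_commands++; }

/* Called from a reply callback, e.g. one that discards the reply. */
static void on_reply(mini_link *l) { l->pending_commands--; }
```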

2.4.2.1.1.1 instanceLink struct

```c
/* The link to a sentinelRedisInstance. When we have the same set of Sentinels
 * monitoring many masters, we have different instances representing the
 * same Sentinels, one per master, and we need to share the hiredis connections
 * among them. Otherwise if 5 Sentinels are monitoring 100 masters we create
 * 500 outgoing connections instead of 5.
 *
 * So this structure represents a reference counted link in terms of the two
 * hiredis connections for commands and Pub/Sub, and the fields needed for
 * failure detection, since the ping/pong time are now local to the link: if
 * the link is available, the instance is available. This way we don't just
 * have 5 connections instead of 500, we also send 5 pings instead of 500.
 *
 * Links are shared only for Sentinels: master and slave instances have
 * a link with refcount = 1, always. */
typedef struct instanceLink {
    int refcount;          /* Number of sentinelRedisInstance owners. */
    int disconnected;      /* Non-zero if we need to reconnect cc or pc. */
    int pending_commands;  /* Number of commands sent waiting for a reply. */
    redisAsyncContext *cc; /* Hiredis context for commands. */
    redisAsyncContext *pc; /* Hiredis context for Pub / Sub. */
    mstime_t cc_conn_time; /* cc connection time. */
    mstime_t pc_conn_time; /* pc connection time. */
    mstime_t pc_last_activity; /* Last time we received any message. */
    mstime_t last_avail_time; /* Last time the instance replied to ping with
                                 a reply we consider valid. */
    mstime_t act_ping_time;   /* Time at which the last pending ping (no pong
                                 received after it) was sent. This field is
                                 set to 0 when a pong is received, and set again
                                 to the current time if the value is 0 and a new
                                 ping is sent. */
    mstime_t last_ping_time;  /* Time at which we sent the last ping. This is
                                 only used to avoid sending too many pings
                                 during failure. Idle time is computed using
                                 the act_ping_time field. */
    mstime_t last_pong_time;  /* Last time the instance replied to ping,
                                 whatever the reply was. That's used to check
                                 if the link is idle and must be reconnected. */
    mstime_t last_reconn_time;  /* Last reconnection attempt performed when
                                   the link was down. */
} instanceLink;
```

2.4.2.1.1.2 redisAsyncContext struct

```c
/* Context for an async connection to Redis */
typedef struct redisAsyncContext {
    /* Hold the regular context, so it can be realloc'ed. */
    redisContext c;

    /* Setup error flags so they can be used directly. */
    int err;
    char *errstr;

    /* Not used by hiredis */
    void *data;

    /* Event library data and hooks */
    struct {
        void *data;

        /* Hooks that are called when the library expects to start
         * reading/writing. These functions should be idempotent. */
        void (*addRead)(void *privdata);
        void (*delRead)(void *privdata);
        void (*addWrite)(void *privdata);
        void (*delWrite)(void *privdata);
        void (*cleanup)(void *privdata);
        void (*scheduleTimer)(void *privdata, struct timeval tv);
    } ev;

    /* Called when either the connection is terminated due to an error or per
     * user request. The status is set accordingly (REDIS_OK, REDIS_ERR). */
    redisDisconnectCallback *onDisconnect;

    /* Called when the first write event was received. */
    redisConnectCallback *onConnect;

    /* Regular command callbacks */
    redisCallbackList replies;

    /* Address used for connect() */
    struct sockaddr *saddr;
    size_t addrlen;

    /* Subscription callbacks */
    struct {
        redisCallbackList invalid;
        struct dict *channels;
        struct dict *patterns;
    } sub;
} redisAsyncContext;
```

2.4.2.1.1.3 redisContext struct

```c
/* Context for a connection to Redis */
typedef struct redisContext {
    const redisContextFuncs *funcs;   /* Function table */

    int err; /* Error flags, 0 when there is no error */
    char errstr[128]; /* String representation of error when applicable */
    redisFD fd;
    int flags;
    char *obuf; /* Write buffer */
    redisReader *reader; /* Protocol reader */

    enum redisConnectionType connection_type;
    struct timeval *timeout;

    struct {
        char *host;
        char *source_addr;
        int port;
    } tcp;

    struct {
        char *path;
    } unix_sock;

    /* For non-blocking connect */
    struct sockaddr *saddr;
    size_t addrlen;

    /* Additional private data for hiredis addons such as SSL */
    void *privdata;
} redisContext;
```
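Two details of these structures matter for the next step. First, redisAsyncContext embeds a redisContext by value as its first member c, so redisAeAttach() can reach the file descriptor through &(ac->c). Second, the ev member is a table of function pointers that an event-loop adapter fills in. The sketch below illustrates this adapter pattern with hypothetical mini types; these are not the hiredis definitions.

```c
#include <stddef.h>

/* Hypothetical, reduced versions of redisContext / redisAsyncContext. */
typedef struct mini_context { int fd; int err; } mini_context;

typedef struct mini_async_context {
    mini_context c;              /* embedded by value, like redisAsyncContext.c */
    struct {
        void *data;              /* adapter state, NULL while detached */
        void (*addRead)(void *privdata);
        void (*addWrite)(void *privdata);
    } ev;
} mini_async_context;

/* Hypothetical adapter state and hooks. */
typedef struct mini_events { int fd; int reading, writing; } mini_events;
static void mini_add_read(void *p)  { ((mini_events *)p)->reading = 1; }
static void mini_add_write(void *p) { ((mini_events *)p)->writing = 1; }

/* Same shape as redisAeAttach(): refuse a second attach, then install the
 * hooks and remember the adapter state in ev.data. */
static int mini_attach(mini_async_context *ac, mini_events *e) {
    if (ac->ev.data != NULL) return -1;
    e->fd = ac->c.fd;            /* fd taken from the embedded sync context */
    e->reading = e->writing = 0;
    ac->ev.addRead = mini_add_read;
    ac->ev.addWrite = mini_add_write;
    ac->ev.data = e;
    return 0;
}
```

The library only calls the hooks through ac->ev with ac->ev.data as argument, so it never needs to know which event loop sits behind them.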

2.4.2.1.1.4 Attaching the event loop to the Redis async connection context

```c
static int redisAeAttach(aeEventLoop *loop, redisAsyncContext *ac) {
    redisContext *c = &(ac->c);
    redisAeEvents *e;

    /* Nothing should be attached when something is already attached */
    if (ac->ev.data != NULL)
        return C_ERR;

    /* Create container for context and r/w events */
    e = (redisAeEvents*)zmalloc(sizeof(*e));
    e->context = ac;
    e->loop = loop;
    e->fd = c->fd;
    e->reading = e->writing = 0;

    /* Register functions to start/stop listening for events */
    ac->ev.addRead = redisAeAddRead;
    ac->ev.delRead = redisAeDelRead;
    ac->ev.addWrite = redisAeAddWrite;
    ac->ev.delWrite = redisAeDelWrite;
    ac->ev.cleanup = redisAeCleanup;
    ac->ev.data = e;

    return C_OK;
}
```

2.4.2.1.2 sentinelSendPeriodicCommands-sending the monitoring commands
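The scheduling rules in the function below come down to a few timers. The sketch assumes SENTINEL_INFO_PERIOD = 10000 ms and SENTINEL_PING_PERIOD = 1000 ms, which are the values in the Redis versions I know of. The function names are hypothetical.

```c
#include <stdint.h>

typedef int64_t mstime_t;

/* Assumed values; verify against sentinel.c of your Redis version. */
#define SENTINEL_INFO_PERIOD 10000
#define SENTINEL_PING_PERIOD 1000

/* INFO every second for a slave whose master is ODOWN or failing over, or
 * that reports a broken link to its master; otherwise every
 * SENTINEL_INFO_PERIOD. */
static mstime_t info_period(int is_slave, int master_odown_or_failover,
                            mstime_t master_link_down_time) {
    if (is_slave && (master_odown_or_failover || master_link_down_time != 0))
        return 1000;
    return SENTINEL_INFO_PERIOD;
}

/* PING period: down-after-milliseconds, capped at SENTINEL_PING_PERIOD. */
static mstime_t ping_period(mstime_t down_after_period) {
    return down_after_period > SENTINEL_PING_PERIOD ? SENTINEL_PING_PERIOD
                                                    : down_after_period;
}

/* PING when the last PONG is older than the period and no PING was sent
 * during the last half period. */
static int should_ping(mstime_t now, mstime_t last_pong, mstime_t last_ping,
                       mstime_t period) {
    return (now - last_pong) > period && (now - last_ping) > period / 2;
}
```

With `sentinel down-after-milliseconds mymaster 60000` from section 1.1, the master is still pinged every second, because the period is capped at SENTINEL_PING_PERIOD.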

```c
/* Send periodic PING, INFO, and PUBLISH to the Hello channel to
 * the specified master or slave instance. */
void sentinelSendPeriodicCommands(sentinelRedisInstance *ri) {
    mstime_t now = mstime();
    mstime_t info_period, ping_period;
    int retval;

    /* Return ASAP if we have already a PING or INFO already pending, or
     * in the case the instance is not properly connected. */
    if (ri->link->disconnected) return;

    /* For INFO, PING, PUBLISH that are not critical commands to send we
     * also have a limit of SENTINEL_MAX_PENDING_COMMANDS. We don't
     * want to use a lot of memory just because a link is not working
     * properly (note that anyway there is a redundant protection about this,
     * that is, the link will be disconnected and reconnected if a long
     * timeout condition is detected. */
    if (ri->link->pending_commands >=
        SENTINEL_MAX_PENDING_COMMANDS * ri->link->refcount) return;

    /* If this is a slave of a master in O_DOWN condition we start sending
     * it INFO every second, instead of the usual SENTINEL_INFO_PERIOD
     * period. In this state we want to closely monitor slaves in case they
     * are turned into masters by another Sentinel, or by the sysadmin.
     *
     * Similarly we monitor the INFO output more often if the slave reports
     * to be disconnected from the master, so that we can have a fresh
     * disconnection time figure. */
    if ((ri->flags & SRI_SLAVE) &&
        ((ri->master->flags & (SRI_O_DOWN|SRI_FAILOVER_IN_PROGRESS)) ||
         (ri->master_link_down_time != 0)))
    {
        info_period = 1000;
    } else {
        info_period = SENTINEL_INFO_PERIOD;
    }

    /* We ping instances every time the last received pong is older than
     * the configured 'down-after-milliseconds' time, but every second
     * anyway if 'down-after-milliseconds' is greater than 1 second. */
    ping_period = ri->down_after_period;
    if (ping_period > SENTINEL_PING_PERIOD) ping_period = SENTINEL_PING_PERIOD;

    /* Send INFO to masters and slaves, not sentinels. */
    if ((ri->flags & SRI_SENTINEL) == 0 &&
        (ri->info_refresh == 0 ||
        (now - ri->info_refresh) > info_period))
    {
        retval = redisAsyncCommand(ri->link->cc,
            sentinelInfoReplyCallback, ri, "%s",
            sentinelInstanceMapCommand(ri,"INFO"));
        if (retval == C_OK) ri->link->pending_commands++;
    }

    /* Send PING to all the three kinds of instances. */
    if ((now - ri->link->last_pong_time) > ping_period &&
               (now - ri->link->last_ping_time) > ping_period/2) {
        sentinelSendPing(ri);
    }

    /* PUBLISH hello messages to all the three kinds of instances. */
    if ((now - ri->last_pub_time) > SENTINEL_PUBLISH_PERIOD) {
        sentinelSendHello(ri);
    }
}
```

2.4.2.1.3 sentinelCheckSubjectivelyDown-determining whether an instance is subjectively down
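If we set aside the reconnection logic, the SDOWN decision in the function below depends on two inputs. The first is how long the pending PING has gone unanswered, or, when the link is disconnected, how long ago the last valid reply arrived. The second is whether an instance we consider a master keeps reporting itself as a slave. The sketch assumes SENTINEL_INFO_PERIOD = 10000 ms, and the function names are hypothetical.

```c
#include <stdint.h>

typedef int64_t mstime_t;

#define SENTINEL_INFO_PERIOD 10000 /* assumed value */

/* elapsed, as computed at the top of sentinelCheckSubjectivelyDown(). */
static mstime_t sdown_elapsed(mstime_t now, mstime_t act_ping_time,
                              int disconnected, mstime_t last_avail_time) {
    if (act_ping_time) return now - act_ping_time;
    if (disconnected) return now - last_avail_time;
    return 0;
}

/* SDOWN if no reply for longer than down-after-milliseconds, or if a
 * supposed master has reported the slave role for down-after-milliseconds
 * plus two INFO periods. */
static int is_sdown(mstime_t now, mstime_t elapsed, mstime_t down_after,
                    int we_think_master, int reports_slave,
                    mstime_t role_reported_time) {
    return elapsed > down_after ||
           (we_think_master && reports_slave &&
            now - role_reported_time > down_after + SENTINEL_INFO_PERIOD * 2);
}
```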

```c
/* ===================== SENTINEL availability checks ======================= */

/* Is this instance down from our point of view? */
void sentinelCheckSubjectivelyDown(sentinelRedisInstance *ri) {
    mstime_t elapsed = 0;

    if (ri->link->act_ping_time)
        elapsed = mstime() - ri->link->act_ping_time;
    else if (ri->link->disconnected)
        elapsed = mstime() - ri->link->last_avail_time;

    /* Check if we are in need for a reconnection of one of the
     * links, because we are detecting low activity.
     *
     * 1) Check if the command link seems connected, was connected not less
     *    than SENTINEL_MIN_LINK_RECONNECT_PERIOD, but still we have a
     *    pending ping for more than half the timeout. */
    if (ri->link->cc &&
        (mstime() - ri->link->cc_conn_time) >
        SENTINEL_MIN_LINK_RECONNECT_PERIOD &&
        ri->link->act_ping_time != 0 && /* There is a pending ping... */
        /* The pending ping is delayed, and we did not receive
         * error replies as well. */
        (mstime() - ri->link->act_ping_time) > (ri->down_after_period/2) &&
        (mstime() - ri->link->last_pong_time) > (ri->down_after_period/2))
    {
        instanceLinkCloseConnection(ri->link,ri->link->cc);
    }

    /* 2) Check if the pubsub link seems connected, was connected not less
     *    than SENTINEL_MIN_LINK_RECONNECT_PERIOD, but still we have no
     *    activity in the Pub/Sub channel for more than
     *    SENTINEL_PUBLISH_PERIOD * 3.
     */
    if (ri->link->pc &&
        (mstime() - ri->link->pc_conn_time) >
         SENTINEL_MIN_LINK_RECONNECT_PERIOD &&
        (mstime() - ri->link->pc_last_activity) > (SENTINEL_PUBLISH_PERIOD*3))
    {
        instanceLinkCloseConnection(ri->link,ri->link->pc);
    }

    /* Update the SDOWN flag. We believe the instance is SDOWN if:
     *
     * 1) It is not replying.
     * 2) We believe it is a master, it reports to be a slave for enough time
     *    to meet the down_after_period, plus enough time to get two times
     *    INFO report from the instance. */
    if (elapsed > ri->down_after_period ||
        (ri->flags & SRI_MASTER &&
         ri->role_reported == SRI_SLAVE &&
         mstime() - ri->role_reported_time >
          (ri->down_after_period+SENTINEL_INFO_PERIOD*2)))
    {
        /* Is subjectively down */
        if ((ri->flags & SRI_S_DOWN) == 0) {
            sentinelEvent(LL_WARNING,"+sdown",ri,"%@");
            ri->s_down_since_time = mstime();
            ri->flags |= SRI_S_DOWN;
        }
    } else {
        /* Is subjectively up */
        if (ri->flags & SRI_S_DOWN) {
            sentinelEvent(LL_WARNING,"-sdown",ri,"%@");
            ri->flags &= ~(SRI_S_DOWN|SRI_SCRIPT_KILL_SENT);
        }
    }
}
```

2.4.2.1.4 sentinelCheckObjectivelyDown-determining whether a master is objectively down
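The vote counting in the function below works as follows. It starts at 1 for this Sentinel, adds one for every other Sentinel whose last reply said the master is down (SRI_MASTER_DOWN), and compares the total with the configured quorum. The sketch replaces the master->sentinels dictionary with a plain array of flags. The function name is hypothetical.

```c
/* Returns 1 if the master should be flagged ODOWN and stores the vote count
 * in *votes_out. peer_says_down[i] is non-zero when peer i has
 * SRI_MASTER_DOWN set. Votes are only counted if we see the master SDOWN. */
static int is_odown(int self_sdown, const int *peer_says_down, int npeers,
                    unsigned int quorum, unsigned int *votes_out) {
    unsigned int votes = 0;
    if (self_sdown) {
        votes = 1; /* this Sentinel */
        for (int i = 0; i < npeers; i++)
            if (peer_says_down[i]) votes++;
    }
    if (votes_out) *votes_out = votes;
    return self_sdown && votes >= quorum;
}
```

With the configuration from section 1.1, two agreeing Sentinels are enough to reach ODOWN for mymaster (quorum 2). For resque (quorum 4), the same two votes are not.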

```c
/* Is this instance down according to the configured quorum?
 *
 * Note that ODOWN is a weak quorum, it only means that enough Sentinels
 * reported in a given time range that the instance was not reachable.
 * However messages can be delayed so there are no strong guarantees about
 * N instances agreeing at the same time about the down state. */
void sentinelCheckObjectivelyDown(sentinelRedisInstance *master) {
    dictIterator *di;
    dictEntry *de;
    unsigned int quorum = 0, odown = 0;

    if (master->flags & SRI_S_DOWN) {
        /* Is down for enough sentinels? */
        quorum = 1; /* the current sentinel. */
        /* Count all the other sentinels. */
        di = dictGetIterator(master->sentinels);
        while((de = dictNext(di)) != NULL) {
            sentinelRedisInstance *ri = dictGetVal(de);

            if (ri->flags & SRI_MASTER_DOWN) quorum++;
        }
        dictReleaseIterator(di);
        if (quorum >= master->quorum) odown = 1;
    }

    /* Set the flag accordingly to the outcome. */
    if (odown) {
        if ((master->flags & SRI_O_DOWN) == 0) {
            sentinelEvent(LL_WARNING,"+odown",master,"%@ #quorum %d/%d",
                quorum, master->quorum);
            master->flags |= SRI_O_DOWN;
            master->o_down_since_time = mstime();
        }
    } else {
        if (master->flags & SRI_O_DOWN) {
            sentinelEvent(LL_WARNING,"-odown",master,"%@");
            master->flags &= ~SRI_O_DOWN;
        }
    }
}
```

2.4.2.1.5 sentinelFailoverStateMachine-running the failover of a master
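The function below only dispatches on ri->failover_state; each handler advances the state when its step succeeds. The sketch models the success path as a transition function. The enum mirrors the SENTINEL_FAILOVER_STATE_* names but is a hypothetical stand-in, and aborts (sentinelAbortFailover) and timeouts are left out.

```c
/* Hypothetical mirror of the SENTINEL_FAILOVER_STATE_* constants. */
typedef enum {
    FO_NONE, FO_WAIT_START, FO_SELECT_SLAVE, FO_SEND_SLAVEOF_NOONE,
    FO_WAIT_PROMOTION, FO_RECONF_SLAVES, FO_UPDATE_CONFIG
} failover_state;

/* Next state on success; the terminal state maps to itself. */
static failover_state failover_next(failover_state s) {
    switch (s) {
    case FO_NONE:               return FO_WAIT_START;         /* master is ODOWN */
    case FO_WAIT_START:         return FO_SELECT_SLAVE;       /* elected leader */
    case FO_SELECT_SLAVE:       return FO_SEND_SLAVEOF_NOONE; /* slave chosen */
    case FO_SEND_SLAVEOF_NOONE: return FO_WAIT_PROMOTION;     /* command sent */
    case FO_WAIT_PROMOTION:     return FO_RECONF_SLAVES;      /* INFO shows master */
    case FO_RECONF_SLAVES:      return FO_UPDATE_CONFIG;      /* slaves reconfigured */
    case FO_UPDATE_CONFIG:      return FO_UPDATE_CONFIG;
    }
    return s;
}
```

Once a master reaches the UPDATE_CONFIG state, sentinelHandleDictOfRedisInstances() performs the switch to the promoted slave (2.4.2).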

```c
void sentinelFailoverStateMachine(sentinelRedisInstance *ri) {
    serverAssert(ri->flags & SRI_MASTER);

    if (!(ri->flags & SRI_FAILOVER_IN_PROGRESS)) return;

    switch(ri->failover_state) {
        case SENTINEL_FAILOVER_STATE_WAIT_START:
            sentinelFailoverWaitStart(ri);
            break;
        case SENTINEL_FAILOVER_STATE_SELECT_SLAVE:
            sentinelFailoverSelectSlave(ri);
            break;
        case SENTINEL_FAILOVER_STATE_SEND_SLAVEOF_NOONE:
            sentinelFailoverSendSlaveOfNoOne(ri);
            break;
        case SENTINEL_FAILOVER_STATE_WAIT_PROMOTION:
            sentinelFailoverWaitPromotion(ri);
            break;
        case SENTINEL_FAILOVER_STATE_RECONF_SLAVES:
            sentinelFailoverReconfNextSlave(ri);
            break;
    }
}
```

2.4.2.1.5.1 sentinelFailoverWaitStart-starting the failover

```c
/* ---------------- Failover state machine implementation ------------------- */
void sentinelFailoverWaitStart(sentinelRedisInstance *ri) {
    char *leader;
    int isleader;

    /* Check if we are the leader for the failover epoch. */
    leader = sentinelGetLeader(ri, ri->failover_epoch);
    isleader = leader && strcasecmp(leader,sentinel.myid) == 0;
    sdsfree(leader);

    /* If I'm not the leader, and it is not a forced failover via
     * SENTINEL FAILOVER, then I can't continue with the failover. */
    if (!isleader && !(ri->flags & SRI_FORCE_FAILOVER)) {
        int election_timeout = SENTINEL_ELECTION_TIMEOUT;

        /* The election timeout is the MIN between SENTINEL_ELECTION_TIMEOUT
         * and the configured failover timeout. */
        if (election_timeout > ri->failover_timeout)
            election_timeout = ri->failover_timeout;
        /* Abort the failover if I'm not the leader after some time. */
        if (mstime() - ri->failover_start_time > election_timeout) {
            sentinelEvent(LL_WARNING,"-failover-abort-not-elected",ri,"%@");
            sentinelAbortFailover(ri);
        }
        return;
    }
    sentinelEvent(LL_WARNING,"+elected-leader",ri,"%@");
    if (sentinel.simfailure_flags & SENTINEL_SIMFAILURE_CRASH_AFTER_ELECTION)
        sentinelSimFailureCrash();
    ri->failover_state = SENTINEL_FAILOVER_STATE_SELECT_SLAVE;
    ri->failover_state_change_time = mstime();
    sentinelEvent(LL_WARNING,"+failover-state-select-slave",ri,"%@");
}
```
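A Sentinel that is not the leader stops waiting after min(SENTINEL_ELECTION_TIMEOUT, failover-timeout). The sketch below reproduces that check. It assumes SENTINEL_ELECTION_TIMEOUT = 10000 ms, so with the failover-timeout of 180000 ms from section 1.1 the effective wait is 10 s. `should_abort_wait_start` is a hypothetical name.

```c
#include <stdint.h>

typedef int64_t mstime_t;

#define SENTINEL_ELECTION_TIMEOUT 10000 /* assumed value */

/* Returns 1 if a Sentinel that is not the leader (and is not running a
 * forced SENTINEL FAILOVER) should abort the failover now. */
static int should_abort_wait_start(int is_leader, int forced, mstime_t now,
                                   mstime_t failover_start_time,
                                   mstime_t failover_timeout) {
    if (is_leader || forced) return 0;
    mstime_t election_timeout = SENTINEL_ELECTION_TIMEOUT;
    if (election_timeout > failover_timeout)
        election_timeout = failover_timeout;   /* take the smaller timeout */
    return now - failover_start_time > election_timeout;
}
```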

2.4.2.1.5.2 sentinelFailoverSelectSlave-choosing the slave to promote

```c
void sentinelFailoverSelectSlave(sentinelRedisInstance *ri) {
    sentinelRedisInstance *slave = sentinelSelectSlave(ri);

    /* We don't handle the timeout in this state as the function aborts
     * the failover or go forward in the next state. */
    if (slave == NULL) {
        sentinelEvent(LL_WARNING,"-failover-abort-no-good-slave",ri,"%@");
        sentinelAbortFailover(ri);
    } else {
        sentinelEvent(LL_WARNING,"+selected-slave",slave,"%@");
        slave->flags |= SRI_PROMOTED;
        ri->promoted_slave = slave;
        ri->failover_state = SENTINEL_FAILOVER_STATE_SEND_SLAVEOF_NOONE;
        ri->failover_state_change_time = mstime();
        sentinelEvent(LL_NOTICE,"+failover-state-send-slaveof-noone",
            slave, "%@");
    }
}
```

2.4.2.1.5.3 sentinelFailoverSendSlaveOfNoOne-turning the slave into a master

```c
void sentinelFailoverSendSlaveOfNoOne(sentinelRedisInstance *ri) {
    int retval;

    /* We can't send the command to the promoted slave if it is now
     * disconnected. Retry again and again with this state until the timeout
     * is reached, then abort the failover. */
    if (ri->promoted_slave->link->disconnected) {
        if (mstime() - ri->failover_state_change_time > ri->failover_timeout) {
            sentinelEvent(LL_WARNING,"-failover-abort-slave-timeout",ri,"%@");
            sentinelAbortFailover(ri);
        }
        return;
    }

    /* Send SLAVEOF NO ONE command to turn the slave into a master.
     * We actually register a generic callback for this command as we don't
     * really care about the reply. We check if it worked indirectly observing
     * if INFO returns a different role (master instead of slave). */
    retval = sentinelSendSlaveOf(ri->promoted_slave,NULL,0);
    if (retval != C_OK) return;
    sentinelEvent(LL_NOTICE, "+failover-state-wait-promotion",
        ri->promoted_slave,"%@");
    ri->failover_state = SENTINEL_FAILOVER_STATE_WAIT_PROMOTION;
    ri->failover_state_change_time = mstime();
}
```

2.4.2.1.5.4 sentinelFailoverReconfNextSlave-pointing the slaves at the new master
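The function below keeps at most parallel_syncs slaves resynchronizing at the same time; this is the limit that `sentinel parallel-syncs mymaster 1` in section 1.1 sets. The simplified sketch works on a hypothetical array of slave states. It merges RECONF_SENT and RECONF_INPROG into one state and ignores the promoted slave, disconnected slaves and SENTINEL_SLAVE_RECONF_TIMEOUT.

```c
/* Hypothetical per-slave states standing in for the SRI_RECONF_* flags. */
typedef enum { SLAVE_PENDING, SLAVE_RECONF_SENT, SLAVE_RECONF_DONE } slave_state;

/* One call models one timer tick: send SLAVEOF to pending slaves until
 * parallel_syncs reconfigurations are in flight. Returns how many SLAVEOF
 * commands were "sent" during this tick. */
static int reconf_tick(slave_state *slaves, int n, int parallel_syncs) {
    int in_progress = 0, sent = 0;

    for (int i = 0; i < n; i++)
        if (slaves[i] == SLAVE_RECONF_SENT) in_progress++;

    for (int i = 0; i < n && in_progress < parallel_syncs; i++) {
        if (slaves[i] != SLAVE_PENDING) continue;
        slaves[i] = SLAVE_RECONF_SENT;
        in_progress++;
        sent++;
    }
    return sent;
}
```

With parallel_syncs set to 1, a slave is pointed at the new master only after the previous one has finished, as described in section 1.1. A larger value reconfigures more slaves at once, at the cost of having more slaves busy with the resync simultaneously.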

```c
/* Send SLAVE OF <new master address> to all the remaining slaves that
 * still don't appear to have the configuration updated. */
void sentinelFailoverReconfNextSlave(sentinelRedisInstance *master) {
    dictIterator *di;
    dictEntry *de;
    int in_progress = 0;

    di = dictGetIterator(master->slaves);
    while((de = dictNext(di)) != NULL) {
        sentinelRedisInstance *slave = dictGetVal(de);

        if (slave->flags & (SRI_RECONF_SENT|SRI_RECONF_INPROG))
            in_progress++;
    }
    dictReleaseIterator(di);

    di = dictGetIterator(master->slaves);
    while(in_progress < master->parallel_syncs &&
          (de = dictNext(di)) != NULL)
    {
        sentinelRedisInstance *slave = dictGetVal(de);
        int retval;

        /* Skip the promoted slave, and already configured slaves. */
        if (slave->flags & (SRI_PROMOTED|SRI_RECONF_DONE)) continue;

        /* If too much time elapsed without the slave moving forward to
         * the next state, consider it reconfigured even if it is not.
         * Sentinels will detect the slave as misconfigured and fix its
         * configuration later. */
        if ((slave->flags & SRI_RECONF_SENT) &&
            (mstime() - slave->slave_reconf_sent_time) >
            SENTINEL_SLAVE_RECONF_TIMEOUT)
        {
            sentinelEvent(LL_NOTICE,"-slave-reconf-sent-timeout",slave,"%@");
            slave->flags &= ~SRI_RECONF_SENT;
            slave->flags |= SRI_RECONF_DONE;
        }

        /* Nothing to do for instances that are disconnected or already
         * in RECONF_SENT state. */
        if (slave->flags & (SRI_RECONF_SENT|SRI_RECONF_INPROG)) continue;
        if (slave->link->disconnected) continue;

        /* Send SLAVEOF <new master>. */
        retval = sentinelSendSlaveOf(slave,
                master->promoted_slave->addr->ip,
                master->promoted_slave->addr->port);
        if (retval == C_OK) {
            slave->flags |= SRI_RECONF_SENT;
            slave->slave_reconf_sent_time = mstime();
            sentinelEvent(LL_NOTICE,"+slave-reconf-sent",slave,"%@");
            in_progress++;
        }
    }
    dictReleaseIterator(di);

    /* Check if all the slaves are reconfigured and handle timeout. */
    sentinelFailoverDetectEnd(master);
}
```

2.4.2.1.6 sentinelAskMasterStateToOtherSentinels-updating the master's state
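The request sent by the function below has the form `SENTINEL is-master-down-by-addr <ip> <port> <current_epoch> <runid>`. The runid field is this Sentinel's own ID once a failover has started, which asks the peer for its vote, and `*` otherwise, which only asks about the master's state. The sketch formats the request with snprintf. The function name and the sample ID in the usage are hypothetical, and the command renaming done by sentinelInstanceMapCommand() is ignored.

```c
#include <stdio.h>
#include <string.h>

/* Writes the request into buf (truncated if buf is too small) and returns
 * snprintf()'s result. */
static int format_is_master_down(char *buf, size_t len, const char *ip,
                                 int port, unsigned long long current_epoch,
                                 int failover_started, const char *myid) {
    return snprintf(buf, len, "SENTINEL is-master-down-by-addr %s %d %llu %s",
                    ip, port, current_epoch, failover_started ? myid : "*");
}
```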

```c
/* If we think the master is down, we start sending
 * SENTINEL IS-MASTER-DOWN-BY-ADDR requests to other sentinels
 * in order to get the replies that allow to reach the quorum
 * needed to mark the master in ODOWN state and trigger a failover. */
#define SENTINEL_ASK_FORCED (1<<0)
void sentinelAskMasterStateToOtherSentinels(sentinelRedisInstance *master, int flags) {
    dictIterator *di;
    dictEntry *de;

    di = dictGetIterator(master->sentinels);
    while((de = dictNext(di)) != NULL) {
        sentinelRedisInstance *ri = dictGetVal(de);
        mstime_t elapsed = mstime() - ri->last_master_down_reply_time;
        char port[32];
        int retval;

        /* If the master state from other sentinel is too old, we clear it. */
        if (elapsed > SENTINEL_ASK_PERIOD*5) {
            ri->flags &= ~SRI_MASTER_DOWN;
            sdsfree(ri->leader);
            ri->leader = NULL;
        }

        /* Only ask if master is down to other sentinels if:
         *
         * 1) We believe it is down, or there is a failover in progress.
         * 2) Sentinel is connected.
         * 3) We did not receive the info within SENTINEL_ASK_PERIOD ms. */
        if ((master->flags & SRI_S_DOWN) == 0) continue;
        if (ri->link->disconnected) continue;
        if (!(flags & SENTINEL_ASK_FORCED) &&
            mstime() - ri->last_master_down_reply_time < SENTINEL_ASK_PERIOD)
            continue;

        /* Ask */
        ll2string(port,sizeof(port),master->addr->port);
        retval = redisAsyncCommand(ri->link->cc,
                    sentinelReceiveIsMasterDownReply, ri,
                    "%s is-master-down-by-addr %s %s %llu %s",
                    sentinelInstanceMapCommand(ri,"SENTINEL"),
                    master->addr->ip, port,
                    sentinel.current_epoch,
                    (master->failover_state > SENTINEL_FAILOVER_STATE_NONE) ?
                    sentinel.myid : "*");
        if (retval == C_OK) ri->link->pending_commands++;
    }
    dictReleaseIterator(di);
}
```

2.4.2.2 sentinelHandleDictOfRedisInstances-handling the master/slave switch
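This section shows the same function as 2.4.2, this time without the added comments. The part that matters here is the last step: the switch to the promoted slave is applied only after the loop has finished, to a master whose failover reached SENTINEL_FAILOVER_STATE_UPDATE_CONFIG. If several masters reach that state in the same pass, only the last one is remembered in switch_to_promoted. The sketch below reproduces the traversal order and the deferred choice with a hypothetical node type.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for sentinelRedisInstance. */
typedef struct node {
    const char *name;
    int is_master;
    int update_config;              /* failover_state == UPDATE_CONFIG */
    struct node **slaves;    int nslaves;
    struct node **sentinels; int nsentinels;
} node;

/* Visits each instance, then (for masters) its slaves and its Sentinels,
 * appending names to out[]. Returns the master to switch after the loop,
 * i.e. the last one found in UPDATE_CONFIG, or NULL. */
static node *handle_dict(node **inst, int n, const char **out, int *nout) {
    node *switch_to_promoted = NULL;
    for (int i = 0; i < n; i++) {
        node *ri = inst[i];
        out[(*nout)++] = ri->name;  /* stands for sentinelHandleRedisInstance(ri) */
        if (ri->is_master) {
            handle_dict(ri->slaves, ri->nslaves, out, nout);
            handle_dict(ri->sentinels, ri->nsentinels, out, nout);
            if (ri->update_config) switch_to_promoted = ri;
        }
    }
    return switch_to_promoted;      /* the real code switches it here */
}
```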

```c
/* Perform scheduled operations for all the instances in the dictionary.
 * Recursively call the function against dictionaries of slaves. */
void sentinelHandleDictOfRedisInstances(dict *instances) {
    dictIterator *di;
    dictEntry *de;
    sentinelRedisInstance *switch_to_promoted = NULL;

    /* There are a number of things we need to perform against every master. */
    di = dictGetIterator(instances);
    while((de = dictNext(di)) != NULL) {
        sentinelRedisInstance *ri = dictGetVal(de);

        sentinelHandleRedisInstance(ri);
        if (ri->flags & SRI_MASTER) {
            sentinelHandleDictOfRedisInstances(ri->slaves);
            sentinelHandleDictOfRedisInstances(ri->sentinels);
            if (ri->failover_state == SENTINEL_FAILOVER_STATE_UPDATE_CONFIG) {
                switch_to_promoted = ri;
            }
        }
    }
    if (switch_to_promoted)
        sentinelFailoverSwitchToPromotedSlave(switch_to_promoted);
    dictReleaseIterator(di);
}
```

2.4.3 sentinelRunPendingScripts-running script jobs

```c
/* Run pending scripts if we are not already at max number of running
 * scripts. */
void sentinelRunPendingScripts(void) {
    listNode *ln;
    listIter li;
    mstime_t now = mstime();

    /* Find jobs that are not running and run them, from the top to the
     * tail of the queue, so we run older jobs first. */
    listRewind(sentinel.scripts_queue,&li);
    /* Stop once SENTINEL_SCRIPT_MAX_RUNNING scripts are running. */
    while (sentinel.running_scripts < SENTINEL_SCRIPT_MAX_RUNNING &&
           (ln = listNext(&li)) != NULL)
    {
        sentinelScriptJob *sj = ln->value;
        pid_t pid;

        /* Skip if already running. */
        if (sj->flags & SENTINEL_SCRIPT_RUNNING) continue;

        /* Skip if it's a retry, but not enough time has elapsed. */
        if (sj->start_time && sj->start_time > now) continue;

        /* Mark the job as running and record its start time. */
        sj->flags |= SENTINEL_SCRIPT_RUNNING;
        sj->start_time = mstime();
        /* One more execution attempt. */
        sj->retry_num++;
        /* Run the script in a child process. */
        pid = fork();

        if (pid == -1) {
            /* Parent (fork error).
             * We report fork errors as signal 99, in order to unify the
             * reporting with other kind of errors. */
            sentinelEvent(LL_WARNING,"-script-error",NULL,
                          "%s %d %d", sj->argv[0], 99, 0);
            sj->flags &= ~SENTINEL_SCRIPT_RUNNING;
            sj->pid = 0;
        } else if (pid == 0) {
            /* Child */
            execve(sj->argv[0],sj->argv,environ);
            /* If we are here an error occurred. */
            _exit(2); /* Don't retry execution. */
        } else {
            /* Parent: record the child's pid and count the running script. */
            sentinel.running_scripts++;
            sj->pid = pid;
            /* Emit a +script-child event. */
            sentinelEvent(LL_DEBUG,"+script-child",NULL,"%ld",(long)pid);
        }
    }
}
```

Each eligible job (not already running, and whose retry time, if any, has come) is run in a child process created with fork():

- Child process: replaces itself with the script through execve(); if execve() fails, it exits with code 2, so the script is not retried;

- Parent process: updates the job's bookkeeping, such as the number of running scripts and the pid of the child running the script. After starting all eligible jobs, the parent returns, and sentinelTimer() goes on to call sentinelCollectTerminatedScripts().
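The selection rule can be separated from the fork()/execve() part. The queue is walked from the oldest job to the newest, jobs that are already running or whose retry time has not come yet are skipped, and the walk stops once SENTINEL_SCRIPT_MAX_RUNNING jobs are running. The sketch below assumes SENTINEL_SCRIPT_MAX_RUNNING = 16 and uses a hypothetical array in place of sentinel.scripts_queue.

```c
#include <stdint.h>

typedef int64_t mstime_t;

#define SENTINEL_SCRIPT_MAX_RUNNING 16 /* assumed value */

/* Hypothetical reduced version of sentinelScriptJob. */
typedef struct job { int running; mstime_t start_time; int retry_num; } job;

/* Marks eligible jobs as running (the real code forks at this point) and
 * returns the new number of running scripts. */
static int start_pending(job *q, int n, int running, mstime_t now) {
    for (int i = 0; i < n && running < SENTINEL_SCRIPT_MAX_RUNNING; i++) {
        if (q[i].running) continue;                             /* already running */
        if (q[i].start_time && q[i].start_time > now) continue; /* retry not due */
        q[i].running = 1;
        q[i].start_time = now;
        q[i].retry_num++;
        running++;
    }
    return running;
}
```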

2.4.4 sentinelCollectTerminatedScripts-cleaning up scripts

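If the script run by a child process completed successfully, it is removed from the script queue.

If the script failed in a retryable way (it was terminated by a signal or exited with code 1) and the maximum number of retries has not been reached, its next start time is set and it runs again in a later cycle.

If the script failed and cannot be run again, it is also removed from the script queue.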
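The three outcomes above can be reproduced with real child processes. The sketch below forks a child that simply exits with a given code (instead of running a script with execve()), waits for it, and classifies the status the way sentinelCollectTerminatedScripts() does. Unlike the original, it checks WIFEXITED before reading the exit code. The retry delay assumes that sentinelScriptRetryDelay() doubles an assumed base delay of 30000 ms on every retry after the first, and it assumes SENTINEL_SCRIPT_MAX_RETRY = 10. All names other than the POSIX calls are hypothetical.

```c
#include <stdint.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

typedef int64_t mstime_t;

#define SCRIPT_MAX_RETRY   10     /* assumed SENTINEL_SCRIPT_MAX_RETRY */
#define SCRIPT_RETRY_DELAY 30000  /* assumed base delay in ms */

typedef enum { SCRIPT_REMOVE_OK, SCRIPT_RETRY, SCRIPT_REMOVE_ERROR } outcome;

/* Exponential backoff: base delay for the first retry, doubled afterwards. */
static mstime_t retry_delay(int retry_num) {
    mstime_t delay = SCRIPT_RETRY_DELAY;
    while (retry_num-- > 1) delay *= 2;
    return delay;
}

/* Retry on a signal or exit code 1 unless the retry limit is reached;
 * otherwise remove the job, reporting an error for any non-zero result. */
static outcome classify(int status, int retry_num) {
    int bysignal = WIFSIGNALED(status) ? WTERMSIG(status) : 0;
    int exitcode = WIFEXITED(status) ? WEXITSTATUS(status) : 0;
    if ((bysignal || exitcode == 1) && retry_num != SCRIPT_MAX_RETRY)
        return SCRIPT_RETRY;
    return (bysignal || exitcode != 0) ? SCRIPT_REMOVE_ERROR : SCRIPT_REMOVE_OK;
}

/* Forks a child that exits with `code` and blocks until it terminates;
 * returns the raw wait status, or -1 on error. Sentinel instead polls with
 * wait3(WNOHANG) so that the timer never blocks. */
static int run_child(int code) {
    pid_t pid = fork();
    if (pid == -1) return -1;
    if (pid == 0) _exit(code);
    int status;
    if (waitpid(pid, &status, 0) != pid) return -1;
    return status;
}
```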

```c
/* Check for scripts that terminated, and remove them from the queue if the
 * script terminated successfully. If instead the script was terminated by
 * a signal, or returned exit code "1", it is scheduled to run again if
 * the max number of retries did not already elapsed. */
void sentinelCollectTerminatedScripts(void) {
    int statloc;
    pid_t pid;

    /* Reap terminated children. With WNOHANG, wait3() returns immediately
     * when no child has exited. */
    while ((pid = wait3(&statloc,WNOHANG,NULL)) > 0) {
        int exitcode = WEXITSTATUS(statloc);
        int bysignal = 0;
        listNode *ln;
        sentinelScriptJob *sj;

        /* If the script was terminated by a signal, get the signal number. */
        if (WIFSIGNALED(statloc)) bysignal = WTERMSIG(statloc);
        sentinelEvent(LL_DEBUG,"-script-child",NULL,"%ld %d %d",
            (long)pid, exitcode, bysignal);

        /* Find the queue node of the running script with this pid. */
        ln = sentinelGetScriptListNodeByPid(pid);
        if (ln == NULL) {
            serverLog(LL_WARNING,"wait3() returned a pid (%ld) we can't find in our scripts execution queue!", (long)pid);
            continue;
        }
        sj = ln->value;

        /* If the script was terminated by a signal or returns an
         * exit code of "1" (that means: please retry), we reschedule it
         * if the max number of retries is not already reached. */
        if ((bysignal || exitcode == 1) &&
            sj->retry_num != SENTINEL_SCRIPT_MAX_RETRY)
        {
            /* Clear the running flag and schedule the next attempt. */
            sj->flags &= ~SENTINEL_SCRIPT_RUNNING;
            sj->pid = 0;
            sj->start_time = mstime() +
                             sentinelScriptRetryDelay(sj->retry_num);
        } else {
            /* Otherwise let's remove the script, but log the event if the
             * execution did not terminated in the best of the ways. */
            if (bysignal || exitcode != 0) {
                /* Emit a -script-error event. */
                sentinelEvent(LL_WARNING,"-script-error",NULL,
                              "%s %d %d", sj->argv[0], bysignal, exitcode);
            }
            /* Remove the job from the script queue, then free the job
             * structure and all its associated data. */
            listDelNode(sentinel.scripts_queue,ln);
            sentinelReleaseScriptJob(sj);
        }
        /* One fewer script is running. */
        sentinel.running_scripts--;
    }
}
```

2.4.5 sentinelKillTimedoutScripts-killing timed-out scripts

/* Kill scripts in timeout, they'll be collected by the
 * sentinelCollectTerminatedScripts() function. */
/* Sentinel lets a script run for at most SENTINEL_SCRIPT_MAX_RUNTIME
 * (60 s); a script that runs longer is killed. */
void sentinelKillTimedoutScripts(void) {
    listNode *ln;
    listIter li;
    mstime_t now = mstime();

    /* Iterate over the script queue */
    listRewind(sentinel.scripts_queue,&li);
    while ((ln = listNext(&li)) != NULL) {
        sentinelScriptJob *sj = ln->value;

        /* The script is running and has been running for more than 60 s */
        if (sj->flags & SENTINEL_SCRIPT_RUNNING &&
            (now - sj->start_time) > SENTINEL_SCRIPT_MAX_RUNTIME)
        {
            /* Emit a script-timeout event */
            sentinelEvent(LL_WARNING,"-script-timeout",NULL,"%s %ld",
                sj->argv[0], (long)sj->pid);
            /* Kill the child process running the script */
            kill(sj->pid,SIGKILL);
        }
    }
}
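The interaction between the timeout and the collection step can be tried in a small standalone POSIX sketch. kill_if_timed_out() and spawn_hung_child() are hypothetical helpers. The first applies the same test as sentinelKillTimedoutScripts(): running time strictly greater than the limit. A script killed this way is later reaped with bysignal = SIGKILL. sentinelCollectTerminatedScripts() therefore treats it as retryable, provided the retry limit has not been reached.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <signal.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: SIGKILL the child if it has run longer than max_ms.
 * Returns 1 if the signal was sent, 0 otherwise. */
int kill_if_timed_out(pid_t pid, long long start_ms, long long now_ms,
                      long long max_ms) {
    assert(pid > 0);
    if ((now_ms - start_ms) > max_ms) {
        kill(pid, SIGKILL);
        return 1;
    }
    return 0;
}

/* Hypothetical helper: fork a child that never finishes on its own,
 * standing in for a hung script. Returns its pid, or -1 on fork error. */
pid_t spawn_hung_child(void) {
    pid_t pid = fork();
    if (pid == 0) {
        for (;;) pause(); /* wait forever; only a signal ends it */
    }
    return pid;
}
```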

2.4.5 server.hz adjustment against split-brain

+----+         +----+
| M1 |---------| R1 |
| S1 |         | S2 |
+----+         +----+

Configuration: quorum = 1

// M1 is the master
// R1 is a replica
// S1 and S2 are Sentinel nodes

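In this setup, if master M1 fails, R1 is promoted to master. With quorum = 1, the two Sentinels can agree that M1 is down and carry out the failover. The risky case is a network partition between the two boxes. Assuming S2 could fail over on its own while M1 keeps running, R1 would be promoted on S2's side, as shown below (the "//" marks the broken link):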

+----+           +------+
| M1 |----//-----| [M1] |
| S1 |           | S2   |
+----+           +------+

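After such a failover, two masters exist in a perfectly symmetrical way. Clients may keep writing to both of them, and once the partition heals there is no way to tell which configuration is the right one. This can have serious consequences, such as contention for server resources and application services, or data corruption.

Therefore, this kind of deployment is best avoided.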
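The leader-election rule explains why a two-Sentinel deployment is fragile. In Redis, a Sentinel that wants to lead a failover needs votes from a majority of the known Sentinels (voters / 2 + 1), and also at least quorum votes. The function below is a hypothetical restatement of that rule, not Redis code.

```c
#include <assert.h>

/* Hypothetical restatement of the leader-election check: the candidate
 * needs a majority of all known Sentinels AND at least `quorum` votes. */
int can_lead_failover(int votes, int voters, int quorum) {
    assert(voters >= 1 && quorum >= 1);
    int majority = voters / 2 + 1;
    return votes >= majority && votes >= quorum;
}
```

With only two Sentinels the majority is 2. If either box is lost, or the link between them is cut, the surviving Sentinel cannot authorize a failover on its own. This is why the Redis documentation recommends at least three Sentinels on separate boxes.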

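To limit the consequences of split-brain situations like this, sentinelTimer(), the main function of Sentinel mode, changes the frequency of the server's periodic tasks at the end of every run: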

server.hz = CONFIG_DEFAULT_HZ + rand() % CONFIG_DEFAULT_HZ;

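Continuously changing the frequency of Redis's periodic tasks desynchronizes each Sentinel from every other. This non-determinism prevents Sentinels started at the same time from staying exactly in sync. Without it, they would ask to be voted for at the same moment again and again, and because of split-brain voting the election would likely end without a winner.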
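A toy simulation illustrates the effect of the randomization. In Redis, CONFIG_DEFAULT_HZ is 10, so the formula yields an hz between 10 and 19. That corresponds to a timer period between 100 ms and about 53 ms. The sketch below is hypothetical. DEFAULT_HZ, randomized_hz() and tick_coincidences() are illustrative names. It advances two simulated Sentinels by 1000 / hz ms per tick and counts how often their k-th ticks fire at the same millisecond, with and without re-drawing hz on every tick.

```c
#include <assert.h>
#include <stdlib.h>

#define DEFAULT_HZ 10 /* same value as Redis' CONFIG_DEFAULT_HZ */

/* The formula from sentinelTimer(): base + r % base, i.e. [base, 2*base-1]. */
int randomized_hz(int base, int r) {
    assert(base > 0 && r >= 0);
    return base + r % base;
}

/* Toy simulation: two Sentinels start at t = 0; each tick advances a
 * Sentinel's clock by 1000 / hz ms. Returns how many of the first n ticks
 * fire at the same millisecond on both. If randomize is 0, hz stays fixed. */
int tick_coincidences(int n, int randomize, unsigned seed) {
    long t1 = 0, t2 = 0;
    int same = 0;
    srand(seed);
    for (int k = 0; k < n; k++) {
        int hz1 = randomize ? randomized_hz(DEFAULT_HZ, rand()) : DEFAULT_HZ;
        int hz2 = randomize ? randomized_hz(DEFAULT_HZ, rand()) : DEFAULT_HZ;
        t1 += 1000 / hz1;
        t2 += 1000 / hz2;
        if (t1 == t2) same++;
    }
    return same;
}
```

With a fixed hz, both clocks tick in lockstep on every tick. Once hz is re-drawn on each tick, the two timelines drift apart almost immediately, which is the desynchronization described above.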

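This article analyzes the Redis Sentinel mechanism in depth. It covers Sentinel startup and configuration, the main flow, and the handling of periodic tasks. It explains in detail how Sentinel monitors the state of master and replica nodes, carries out failover, and keeps the deployment healthy through periodic tasks.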
