BLEU是一种机器翻译(machine translation) 评价指标。

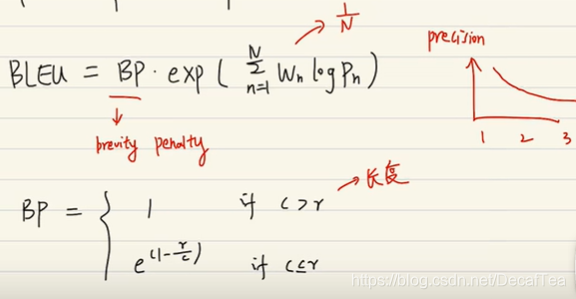

公式解释:

Pn: modified precision score for n-gram。n = 1时是“匹配”单词,n>1时是“匹配”短语。值域[0, 1]。

wnlogPn: weighted log(modified precision score)。

- why log?

我们发现precision随n的增加呈指数递减,n越小precision越高。为了同等对待不同n的precision score,我们用logPn拉回差距,不让n较小(大)时的precision score拉高(低)了precision。 - why wn?

如果不偏好某种n-gram,wn都取1/N, 直接求logPn的average即可。

**BP(brievity penalty):**惩罚比参考翻译结果(reference)长度短的翻译结果。denote 参考翻译结果长度 = r,模型翻译结果 = c。如果c>=r, 不惩罚,如果c<r, 则c越短,BP越小,惩罚越重。

Pn如何计算?

Pn = sum of count clip for all n-grams/sum of count for all n-grams.

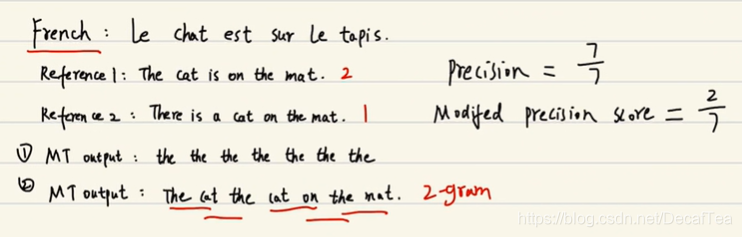

For example,

1-gram:

1-gram: the

count = 7

max-ref-count = 2 (because there are 2 the in reference sentence 1 and 1 the in reference sentence 2)

count clip = min{count, max-ref-count} = 2

Hence, Pn = 2/7

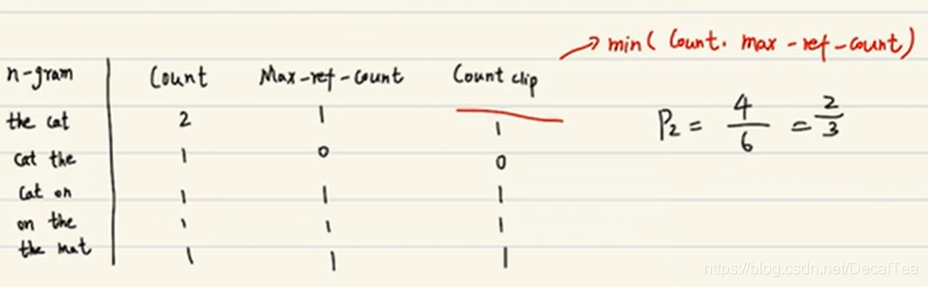

2-gram:

2-grams: the cat, cat the, cat on, on the, the mat

Pn = count clip/count = (1+0+1+1+1)/(2+1+1+1+1) = 2/3

如何解释Pn呢?

count反映了待评估的翻译结果(Machine translation output)的n-gram的种类和频次,而max-ref-count则是标杆翻译结果(reference)的与MT output相符的n-gram的种类和频次。max-ref-count总和越接近count总和,则说明翻译结果越好,越接近reference sentences。当二者相等时,Pn = 1。

每个max-ref-count一般不会超过其对应的count的值。

reference:

[1] https://www.bilibili.com/video/BV1Jb411W7ah

[2] https://en.wikipedia.org/wiki/BLEU

BLEU是一种衡量机器翻译质量的指标,通过计算n-gram的精确度并应用简洁性惩罚来评估。精确度使用log进行调整,使得不同n值的影响平等。简洁性惩罚(BP)用于惩罚翻译结果比参考翻译短的情况。Pn是计算n-gram精确度的关键,表示翻译结果中匹配参考翻译的n-gram比例。当翻译结果与参考翻译接近时,BLEU分数更高。

BLEU是一种衡量机器翻译质量的指标,通过计算n-gram的精确度并应用简洁性惩罚来评估。精确度使用log进行调整,使得不同n值的影响平等。简洁性惩罚(BP)用于惩罚翻译结果比参考翻译短的情况。Pn是计算n-gram精确度的关键,表示翻译结果中匹配参考翻译的n-gram比例。当翻译结果与参考翻译接近时,BLEU分数更高。

1637

1637

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?