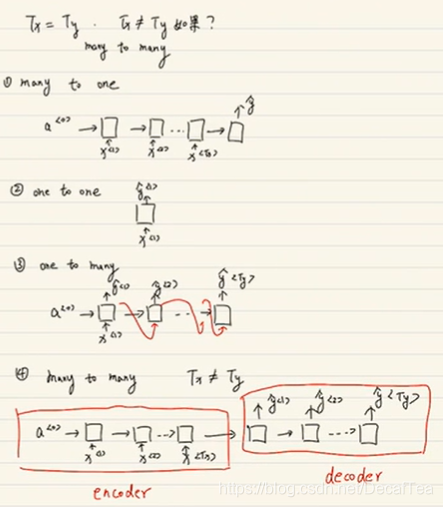

1. many-to-many (Tx = Ty: input and output sequences have the same length)

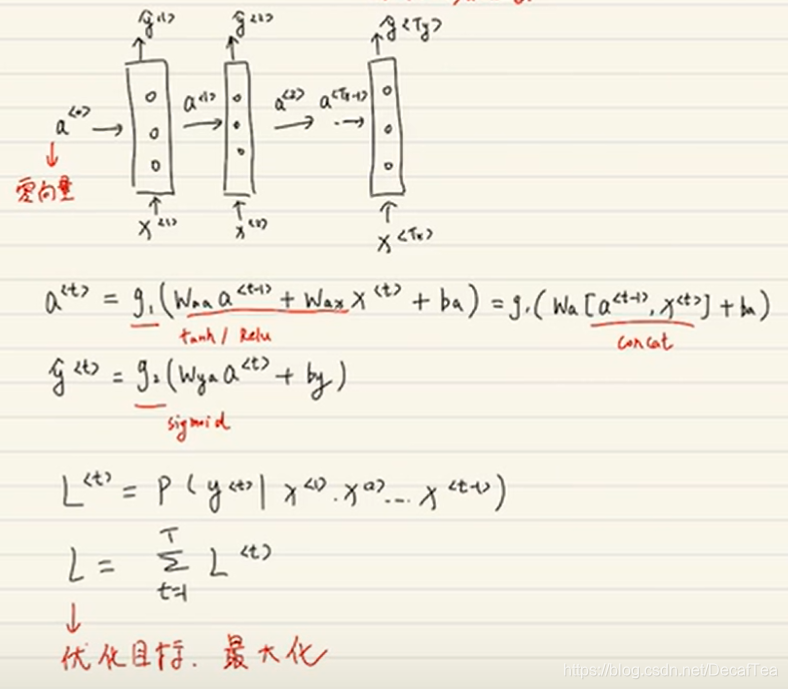

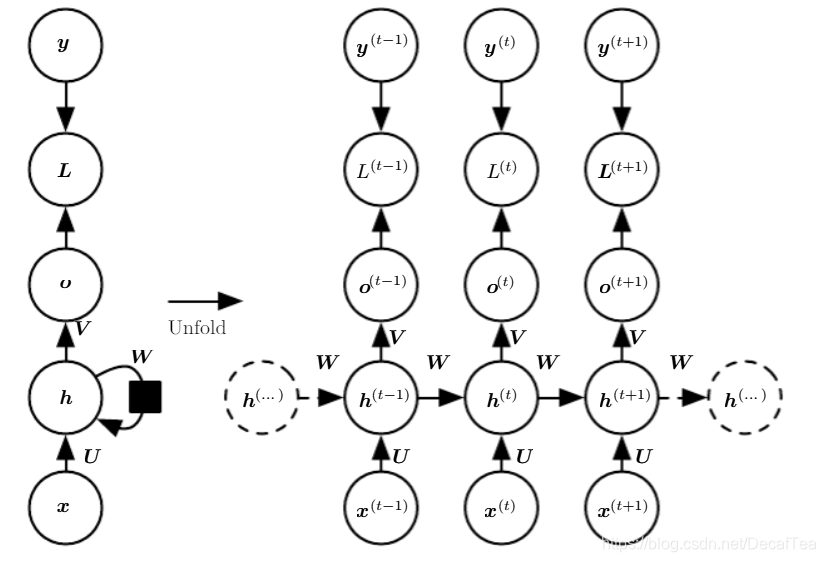

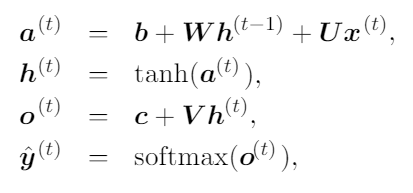

RNN update equations for the hidden state and the output, and the cost function:
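The same equations can be written out in one common notation. This notation is an assumption, and the figures above may use different symbols. Here $a^{\langle t\rangle}$ is the hidden state, $g_1$ and $g_2$ are the hidden-layer and output-layer activations, $W_{aa}, W_{ax}, W_{ya}$ are weight matrices shared across all time steps, and $b_a, b_y$ are biases. The initial state $a^{\langle 0\rangle}$ is commonly set to zero:

$$
\begin{aligned}
a^{\langle t \rangle} &= g_1\left(W_{aa}\, a^{\langle t-1 \rangle} + W_{ax}\, x^{\langle t \rangle} + b_a\right), \quad a^{\langle 0 \rangle} = \mathbf{0} \\
\hat{y}^{\langle t \rangle} &= g_2\left(W_{ya}\, a^{\langle t \rangle} + b_y\right) \\
\mathcal{L}(\hat{y}, y) &= \sum_{t=1}^{T_y} H\left(\hat{y}^{\langle t \rangle}, y^{\langle t \rangle}\right), \quad H(\hat{y}, y) = -\sum_{k} y_k \log \hat{y}_k
\end{aligned}
$$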

Hidden-layer activation: usually tanh or ReLU.

Output-layer activation: Softmax (multi-class classification), Sigmoid (binary classification), or a linear function (regression).
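The following is a minimal pure-Python sketch of a many-to-many forward pass (Tx = Ty). It assumes tanh in the hidden layer and Softmax at the output. The helper names (`matvec`, `vadd`, `rnn_forward`) and all weight values are made up for illustration. The point is that the same weights are reused at every step, and every input step produces one prediction.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product: m has shape (rows, len(v))."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def vadd(*vs: Vector) -> Vector:
    """Element-wise sum of equally sized vectors."""
    return [sum(xs) for xs in zip(*vs)]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(xs, a0, W_aa, W_ax, W_ya, b_a, b_y):
    """Many-to-many forward pass with Tx == Ty: one prediction per input step."""
    a = a0
    hidden, outputs = [], []
    for x in xs:
        # hidden state: a<t> = tanh(W_aa a<t-1> + W_ax x<t> + b_a)
        a = [math.tanh(v) for v in vadd(matvec(W_aa, a), matvec(W_ax, x), b_a)]
        hidden.append(a)
        # output: y_hat<t> = softmax(W_ya a<t> + b_y)
        outputs.append(softmax(vadd(matvec(W_ya, a), b_y)))
    return hidden, outputs


# Toy example (made-up weights): input dim 2, hidden dim 3, 2 classes, Tx = 4.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
W_aa = [[0.1, 0.0, -0.1], [0.0, 0.2, 0.0], [0.05, 0.0, 0.1]]
W_ax = [[0.5, -0.3], [0.2, 0.4], [-0.1, 0.1]]
W_ya = [[1.0, -1.0, 0.5], [-0.5, 0.5, 1.0]]
b_a = [0.0, 0.1, -0.1]
b_y = [0.0, 0.0]
hidden, outputs = rnn_forward(xs, [0.0, 0.0, 0.0], W_aa, W_ax, W_ya, b_a, b_y)
print(len(outputs))  # 4 (one softmax distribution per time step)
```

Swapping `math.tanh` for ReLU, or Softmax for Sigmoid or the identity, changes only the two activation lines. The recurrence itself stays the same.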

Cross-entropy loss: the per-step loss $H(\hat{y}^{\langle t \rangle}, y^{\langle t \rangle})$ summed over all time steps $t$.
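Here is a minimal sketch of this summed loss. It assumes one-hot targets and Softmax outputs. `cross_entropy` and `sequence_loss` are hypothetical helper names, and the small `eps` is an added guard against `log(0)`.

```python
import math
from typing import List

Vector = List[float]


def cross_entropy(y_hat: Vector, y: Vector) -> float:
    """Per-step loss H(y_hat, y) = -sum_k y_k * log(y_hat_k)."""
    eps = 1e-12  # guard against log(0)
    return -sum(t * math.log(p + eps) for p, t in zip(y_hat, y))


def sequence_loss(y_hats: List[Vector], ys: List[Vector]) -> float:
    """Total cost: per-step cross-entropy summed over all time steps."""
    return sum(cross_entropy(p, t) for p, t in zip(y_hats, ys))


# Three time steps, two classes; targets are one-hot.
y_hats = [[0.5, 0.5], [0.9, 0.1], [0.2, 0.8]]
ys = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
loss = sequence_loss(y_hats, ys)
print(round(loss, 4))  # 1.0217, i.e. -(ln 0.5 + ln 0.9 + ln 0.8)
```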

2. other cases

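many-to-many (Tx ≠ Ty): encoder-decoder. The encoder reads the entire input sequence into a hidden state. The decoder then generates from that state an output sequence whose length can differ from the input's (e.g., machine translation).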

one-to-one: an ordinary deep neural network. With a single input and a single output, there is no sequence to recur over.

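one-to-many: each step takes the previous output and the previous hidden state as input to compute the current state (e.g., generating music or text from a single seed input).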

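many-to-one: each step combines the current input with the previous hidden state to compute the current state. A single output is produced after the last step (e.g., sentiment classification of a sentence).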
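The cases above differ only in how the same recurrent step is wired together. The sketch below is a minimal pure-Python illustration built on several assumptions:

* `cell`, `many_to_one`, `one_to_many` and `encoder_decoder` are hypothetical names.
* Biases and output activations are omitted.
* The encoder and decoder have separate weights.
* In `one_to_many`, the raw output vector is fed straight back as the next input. This requires output and input dimensions to match. Practical generators usually sample a token and embed it instead.

One-to-one would be a single step with no recurrence, so it is not shown.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def cell(a_prev: Vector, x: Vector, W_aa: Matrix, W_ax: Matrix) -> Vector:
    """One recurrent step, a<t> = tanh(W_aa a<t-1> + W_ax x<t>); biases omitted."""
    return [math.tanh(p + q) for p, q in zip(matvec(W_aa, a_prev), matvec(W_ax, x))]


def many_to_one(xs, a0, W_aa, W_ax, W_ya) -> Vector:
    """Read every input; emit one output from the final hidden state."""
    a = a0
    for x in xs:
        a = cell(a, x, W_aa, W_ax)  # current input + last hidden state
    return matvec(W_ya, a)


def one_to_many(x0, a0, W_aa, W_ax, W_ya, steps: int) -> List[Vector]:
    """Start from one input, then feed each output back in as the next input."""
    a, x, ys = a0, x0, []
    for _ in range(steps):
        a = cell(a, x, W_aa, W_ax)  # last output + last hidden state
        y = matvec(W_ya, a)
        ys.append(y)
        x = y  # this output becomes the next step's input
    return ys


def encoder_decoder(xs, a0, enc: Tuple[Matrix, Matrix], dec: Tuple[Matrix, Matrix],
                    W_ya: Matrix, y_start: Vector, Ty: int) -> List[Vector]:
    """Tx != Ty: encode all Tx inputs into one state, then decode Ty outputs."""
    a = a0
    for x in xs:
        a = cell(a, x, *enc)  # encoder: consume the whole input sequence
    dec_W_aa, dec_W_ax = dec
    return one_to_many(y_start, a, dec_W_aa, dec_W_ax, W_ya, Ty)  # decoder


# Toy weights (made up): input, hidden and output dims are all 2.
W_aa = [[0.5, 0.0], [0.0, 0.5]]
W_ax = [[1.0, 0.0], [0.0, 1.0]]
W_ya = [[1.0, 0.0], [0.0, 1.0]]
a0 = [0.0, 0.0]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # Tx = 3

y_single = many_to_one(xs, a0, W_aa, W_ax, W_ya)                 # a single output vector
ys_generated = one_to_many([1.0, 0.0], a0, W_aa, W_ax, W_ya, 4)  # 4 generated outputs
ys_seq2seq = encoder_decoder(xs, a0, (W_aa, W_ax), (W_aa, W_ax), W_ya, [0.0, 0.0], 5)
print(len(ys_generated), len(ys_seq2seq))  # 4 5
```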
