Enhanced LSTM for Natural Language Inference

https://arxiv.org/pdf/1609.06038v3.pdf

Related Work

- Enhancing sequential inference models based on chain networks

- Further, considering recursive architectures to encode syntactic parsing information

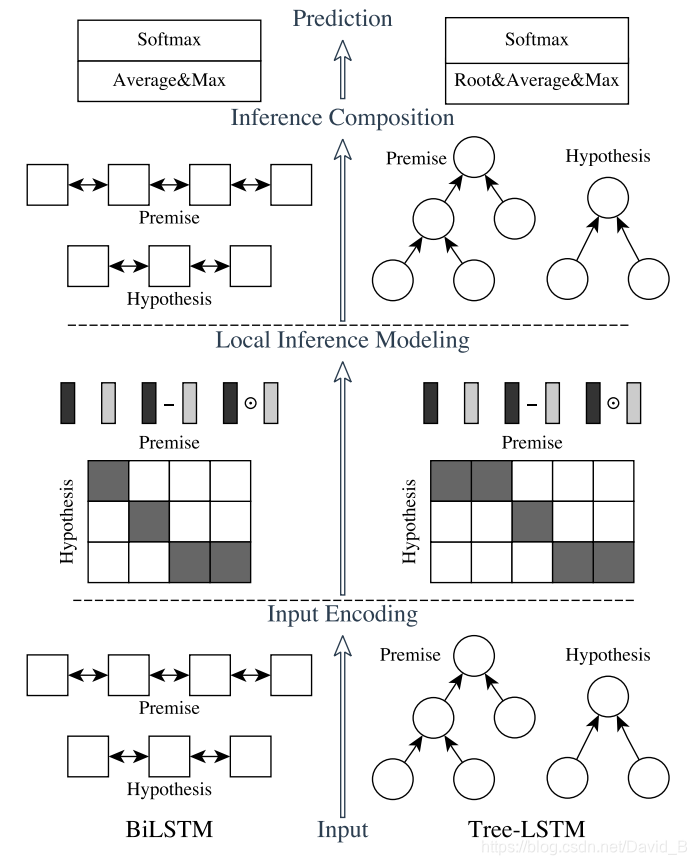

Hybrid Neural Inference Models

Major components

- Input encoding, local inference modeling, inference composition

- ESIM (sequential NLI model) and tree-LSTM (incorporates syntactic parsing information)

Notation

- Two sentences:

- $a = (a_1, ..., a_{l_a})$

- $b = (b_1, ..., b_{l_b})$

- Embeddings: $a_i, b_j \in \mathbb{R}^l$ are $l$-dimensional vectors

- $\bar{a}_i$: hidden state generated by the BiLSTM at time $i$ over the input sequence $a$
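To make the notation concrete, the following is a minimal sketch of how $\bar{a}_i$ could be produced: a forward and a backward LSTM read the embeddings $a_1, ..., a_{l_a}$, and their hidden states at position $i$ are concatenated. It assumes a standard LSTM cell with biases omitted and randomly initialized weights; the helper names (`make_lstm_params`, `bilstm_encode`) and the sizes `l`, `d`, `l_a` are illustrative choices, not taken from the paper.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def make_lstm_params(l, d, rng):
    # Hypothetical parameters: one weight matrix per gate, applied to [x_t; h_{t-1}].
    return {g: [[rng.uniform(-0.1, 0.1) for _ in range(l + d)] for _ in range(d)]
            for g in ("i", "f", "o", "u")}

def lstm_step(params, x, h, c):
    z = x + h  # list concatenation: [x_t; h_{t-1}]
    i = [sigmoid(v) for v in matvec(params["i"], z)]    # input gate
    f = [sigmoid(v) for v in matvec(params["f"], z)]    # forget gate
    o = [sigmoid(v) for v in matvec(params["o"], z)]    # output gate
    u = [math.tanh(v) for v in matvec(params["u"], z)]  # candidate cell value
    c_new = [fk * ck + ik * uk for fk, ck, ik, uk in zip(f, c, i, u)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new

def run_lstm(params, seq, d):
    # Run one LSTM over a sequence and collect the hidden state at every step.
    h, c = [0.0] * d, [0.0] * d
    states = []
    for x in seq:
        h, c = lstm_step(params, x, h, c)
        states.append(h)
    return states

def bilstm_encode(seq, fwd, bwd, d):
    forward = run_lstm(fwd, seq, d)
    # The backward LSTM reads the reversed sequence; re-reverse to align positions.
    backward = run_lstm(bwd, seq[::-1], d)[::-1]
    # Concatenate both directions at each position.
    return [hf + hb for hf, hb in zip(forward, backward)]

rng = random.Random(0)
l, d, l_a = 4, 3, 5  # embedding size, hidden size, sentence length (toy values)
a = [[rng.uniform(-1, 1) for _ in range(l)] for _ in range(l_a)]
a_bar = bilstm_encode(a, make_lstm_params(l, d, rng), make_lstm_params(l, d, rng), d)
print(len(a_bar), len(a_bar[0]))  # one vector of size 2*d per token
```

Each $\bar{a}_i$ has dimension $2d$ because it stacks the forward and backward states; the same encoder would be applied to $b$ to obtain $\bar{b}_j$.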

Goal

- Predict a label $y$ that indicates the logical relationship between $a$ and $b$

Input Encoding

- Use a BiLSTM to encode the input premise and hypothesis

- The hidden states of the two LSTMs (forward and backward) at each time step are concatenated to represent that time step and its context

- Encode the syntactic parse trees of the premise and hypothesis with a tree-LSTM

-

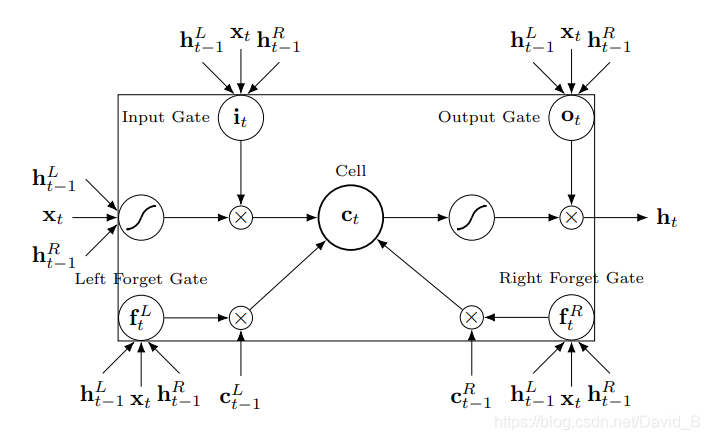

A tree node is deployed with a tree-LSTM memory block depicted

- At each node, an input vector x t x_t xt and hidden vectors of it( h t − 1 L h^L_{t-1} ht−1L and h t − 1 R h^R_{t-1} ht−1R)are taken in as the input to calculate the current node’s hidden vector h t h_t ht


- Detailed computation:

- $h_t = \mathrm{TrLSTM}(x_t, h^L_{t-1}, h^R_{t-1})$

- $h_t = o_t \odot \tanh(c_t)$

- $o_t = \sigma(W_o x_t + U^L_o h^L_{t-1} + U^R_o h^R_{t-1})$
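The output gate and hidden-state equations of the tree-LSTM node can be sketched as follows. This is only a partial illustration: the notes do not list the cell-state update, so `c_t` is passed in as a given value rather than computed. The function name `tree_lstm_hidden` and all weight values are hypothetical, chosen for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def tree_lstm_hidden(x_t, h_left, h_right, c_t, W_o, U_o_L, U_o_R):
    """Compute o_t and h_t for one tree node.

    o_t = sigmoid(W_o x_t + U_o^L h^L_{t-1} + U_o^R h^R_{t-1})
    h_t = o_t * tanh(c_t)   (element-wise)
    c_t is assumed to be already available (its update is not covered here).
    """
    pre = [wx + ul + ur for wx, ul, ur in zip(matvec(W_o, x_t),
                                              matvec(U_o_L, h_left),
                                              matvec(U_o_R, h_right))]
    o_t = [sigmoid(p) for p in pre]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return o_t, h_t

# Toy example: input size 3, hidden size 2 (illustrative values only).
W_o = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]
U_o_L = [[0.2, 0.1], [-0.3, 0.4]]
U_o_R = [[-0.1, 0.2], [0.3, 0.0]]
o_t, h_t = tree_lstm_hidden(
    x_t=[1.0, 0.0, -1.0],
    h_left=[0.5, -0.5],
    h_right=[0.0, 0.25],
    c_t=[0.3, -0.2],
    W_o=W_o, U_o_L=U_o_L, U_o_R=U_o_R,
)
print(o_t, h_t)
```

Unlike a chain LSTM, the node has a separate recurrent matrix for each child ($U^L_o$ and $U^R_o$), so the left and right subtrees can influence the gate differently.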

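This article introduces the Enhanced LSTM (ESIM) model for natural language inference. The model combines a bidirectional LSTM and a tree-LSTM to encode the input sequences and their syntactic parse information. Through local inference modeling and inference composition, it uses an attention mechanism to capture the correlation between the two sentences, converts the result into a fixed-length vector by pooling, and finally feeds that vector to a multilayer perceptron classifier to predict the logical relationship.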
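The attention and pooling steps mentioned in the summary can be illustrated with a small sketch. It assumes dot-product scores between encoded tokens, a softmax over the other sentence, and average plus max pooling; it deliberately skips the enhancement and composition layers and pools the aligned vectors directly, so it is a simplification for illustration rather than the full model. The function names and toy vectors are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def soft_align(a_bar, b_bar):
    # For each token in a_bar, build a weighted sum of the tokens in b_bar,
    # with weights given by a softmax over dot-product scores.
    aligned = []
    for a_i in a_bar:
        weights = softmax([dot(a_i, b_j) for b_j in b_bar])
        aligned.append([sum(w * b_j[k] for w, b_j in zip(weights, b_bar))
                        for k in range(len(b_bar[0]))])
    return aligned

def pool(seq):
    # Average and max pooling over the sequence dimension, concatenated.
    avg = [sum(col) / len(seq) for col in zip(*seq)]
    mx = [max(col) for col in zip(*seq)]
    return avg + mx

# Toy encoded sentences (illustrative values, hidden size 2).
a_bar = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_bar = [[2.0, 0.0], [0.0, 2.0]]

a_tilde = soft_align(a_bar, b_bar)  # what b "says" about each token of a
b_tilde = soft_align(b_bar, a_bar)  # what a "says" about each token of b
v = pool(a_tilde) + pool(b_tilde)   # fixed-length vector for the classifier
print(len(v))
```

The pooled vector has a fixed size regardless of the sentence lengths, which is what allows it to be passed to a multilayer perceptron classifier.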
