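CompHub aggregates, in real time, data-science competitions (Kaggle, Tianchi, …) and online-judge (OJ) contests (LeetCode, Nowcoder, …) from multiple platforms. This account publishes the latest competition news; follow us!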

For more competitions, see the CompHub homepage.

The following is excerpted from the competition homepage.

Part 1: Competition Overview

Title

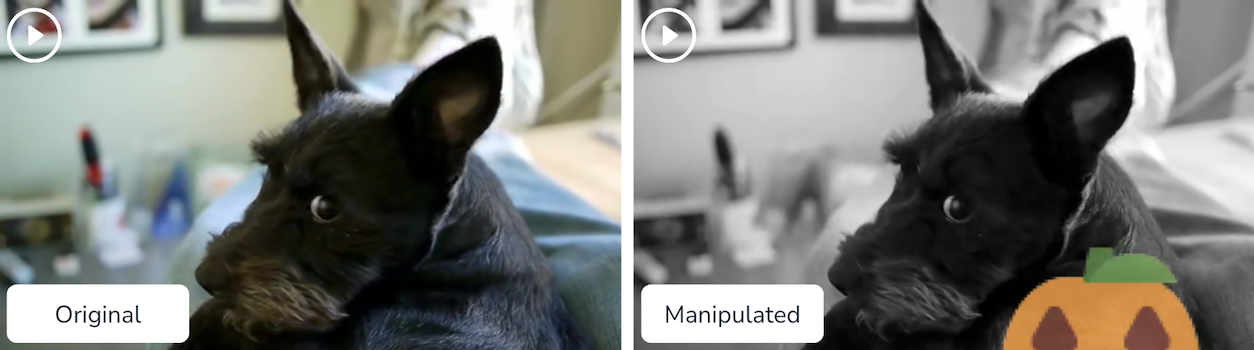

Meta AI Video Similarity Challenge

- Descriptor Track: generate useful vector representations of videos.
- Matching Track: detect which specific clips of a query video correspond to which specific clips in one or more videos in a large corpus of reference videos.

Hosting platform

DrivenData

Organizer

Meta AI

Background

Manual content moderation struggles to scale to the volume of content on platforms like Instagram and Facebook, where tens of thousands of hours of video are uploaded each day. Accurate and performant algorithms are critical for flagging and removing inappropriate content. This competition lets you test your skills in building a key part of such a content-tracing system, and in doing so help make social media more trustworthy and safe for the people who use it.

Part 2: Timeline

Phase 1: Model Development (December 2, 2022 00:00 UTC - March 24, 2023 23:59 UTC): Participants have access to the research dataset to develop and refine their models. Submissions may be made to the public leaderboard and are scored on the Phase 1 leaderboard. These scores do not determine the final leaderboard or prize rankings.

Phase 2: Final Scoring (April 2, 2023 00:00 UTC to April 9, 2023 23:59 UTC): Participants will have the opportunity to make up to three submissions against a new, unseen test set. Performance against this new test set will be used to determine rankings for prizes.

Part 3: Prizes

| Place | Descriptor Track | Matching Track |
|---|---|---|
| 1st | $25,000 | $25,000 |
| 2nd | $15,000 | $15,000 |
| 3rd | $10,000 | $10,000 |

Part 4: Task Description

- For the Descriptor Track, your goal is to generate useful vector representations of videos for this video similarity task. You will submit descriptors for both query and reference set videos. A standardized similarity search using pairwise inner-product similarity will be used to generate ranked video match predictions.
- For the Matching Track, your goal is to create a model that directly detects which specific clips of a query video correspond to which specific clips in one or more videos in a large corpus of reference videos. You will submit predictions indicating which portions of a query video are derived from a reference video.
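The Descriptor Track description says only that query and reference descriptors are compared by pairwise inner product to produce ranked video match predictions. The following is a minimal sketch of that idea, not the organizers' evaluation code. It assumes exactly one descriptor vector per video and uses plain Python lists instead of an array library. The video IDs and vector values are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumption: exactly one descriptor vector per video, keyed by a hypothetical video ID.
Descriptors = Dict[str, List[float]]


def inner_product(a: List[float], b: List[float]) -> float:
    """Return the dot product of two descriptors of equal dimension."""
    if len(a) != len(b):
        raise ValueError("descriptor dimensions differ")
    return sum(x * y for x, y in zip(a, b))


def rank_matches(
    queries: Descriptors, references: Descriptors
) -> List[Tuple[str, str, float]]:
    """Score every (query, reference) pair by inner product, most similar first."""
    scored = [
        (q_id, r_id, inner_product(q_vec, r_vec))
        for q_id, q_vec in queries.items()
        for r_id, r_vec in references.items()
    ]
    # Sort by descending score; ties are broken by IDs so the output is deterministic.
    scored.sort(key=lambda row: (-row[2], row[0], row[1]))
    return scored


if __name__ == "__main__":
    # Toy 2-D descriptors, invented for illustration only.
    queries = {"Q1": [1.0, 0.0], "Q2": [0.0, 1.0]}
    references = {"R1": [0.9, 0.1], "R2": [0.2, 0.8]}
    for q_id, r_id, score in rank_matches(queries, references):
        print(q_id, r_id, round(score, 3))
```

If descriptors are L2-normalized, the inner product equals cosine similarity. Exhaustive scoring like this costs one comparison per (query, reference) pair, so at corpus scale an approximate nearest-neighbor index would normally replace the double loop.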

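This competition, hosted on DrivenData, aims to develop accurate and efficient algorithms for large-scale video content moderation, in particular for the huge volume of video uploaded every day to platforms such as Instagram and Facebook. It has two tracks: the Descriptor Track, which focuses on generating video feature vectors, and the Matching Track, which focuses on matching video segments.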
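The Matching Track asks for predictions indicating which portions of a query video derive from a reference video. The following is one naive way to produce such segment predictions, offered purely as a hypothetical baseline rather than the organizers' method or submission format. It compares per-frame descriptors, keeps query frames whose best reference frame clears a similarity threshold, and merges consecutive frames that advance through the reference in step. Several pieces are illustrative assumptions: the `SegmentMatch` record, the `threshold` and `min_len` parameters, the toy one-hot descriptors, and the premise that frames are sampled at a fixed rate (so indices map to time).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vector = List[float]


@dataclass
class SegmentMatch:
    """Hypothetical prediction record; times are frame indices, end-exclusive."""
    query_start: int
    query_end: int
    ref_start: int
    ref_end: int
    score: float  # mean frame similarity over the matched run


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two frame descriptors."""
    return sum(x * y for x, y in zip(a, b))


def best_ref_frame(
    q: Vector, ref_frames: List[Vector], threshold: float
) -> Optional[Tuple[int, float]]:
    """Return (index, score) of the most similar reference frame, or None below threshold."""
    best = max(
        ((j, dot(q, r)) for j, r in enumerate(ref_frames)),
        key=lambda pair: pair[1],
        default=None,
    )
    if best is None or best[1] < threshold:
        return None
    return best


def match_segments(
    query_frames: List[Vector],
    ref_frames: List[Vector],
    threshold: float = 0.8,
    min_len: int = 2,
) -> List[SegmentMatch]:
    """Greedily merge thresholded frame matches that keep a constant time offset."""
    matches = [best_ref_frame(q, ref_frames, threshold) for q in query_frames]
    segments: List[SegmentMatch] = []
    i = 0
    while i < len(matches):
        current = matches[i]
        if current is None:
            i += 1
            continue
        start, ref_start, total = i, current[0], current[1]
        # Extend while the next query frame maps to the next reference frame.
        while (
            i + 1 < len(matches)
            and matches[i + 1] is not None
            and matches[i + 1][0] == matches[i][0] + 1
        ):
            i += 1
            total += matches[i][1]
        length = i - start + 1
        if length >= min_len:
            segments.append(
                SegmentMatch(start, i + 1, ref_start, ref_start + length, total / length)
            )
        i += 1
    return segments


def one_hot(index: int, size: int) -> Vector:
    """Toy descriptor helper used only for the demo below."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


if __name__ == "__main__":
    reference = [one_hot(k, 5) for k in range(5)]
    # Query frame 0 is noise; query frames 1-3 copy reference frames 2-4.
    query = [[0.5, 0.5, 0.0, 0.0, 0.0]] + [one_hot(k, 5) for k in (2, 3, 4)]
    for seg in match_segments(query, reference):
        print(seg)
```

This greedy grouping breaks on dropped, repeated, or speed-changed frames, so it only illustrates what a segment-level prediction looks like. It does not solve the track.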

