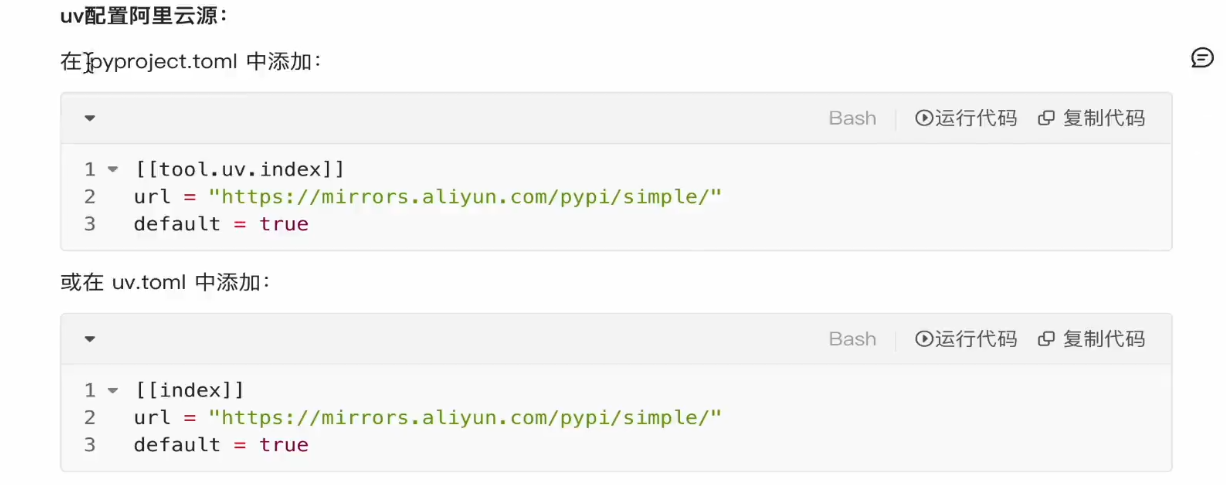

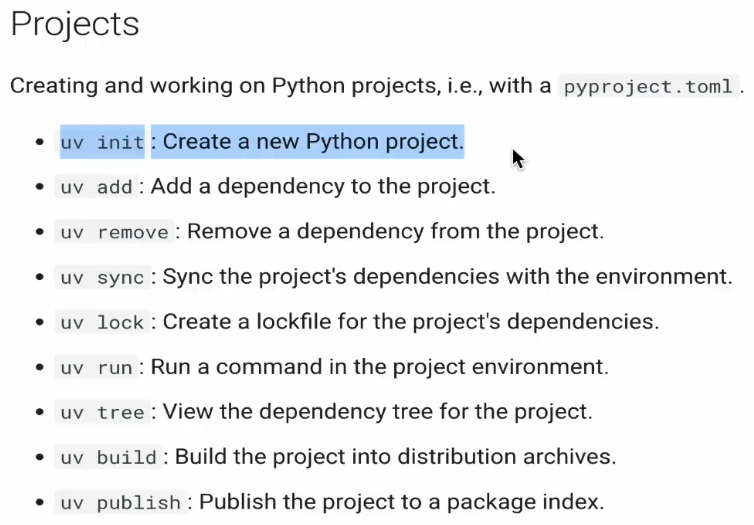

1. Python package manager and environment manager

    # Initialize the project
    uv init ai-agent-test
    cd ai-agent-test

    # Install a dependency into the current project
    uv add pyyaml
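To confirm that a dependency added with `uv add` is visible to the project's interpreter, a quick check along these lines could be run with `uv run python check_deps.py`. This is a minimal sketch using only the standard library; the helper name `is_installed` and the file name `check_deps.py` are illustrative assumptions, not part of uv.

```python
from importlib.util import find_spec


def is_installed(module_name: str) -> bool:
    """Return True if the current interpreter can locate the module."""
    return find_spec(module_name) is not None


# PyYAML is installed as the package "pyyaml" but imported as the module "yaml".
for name in ("yaml", "json"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If `yaml` is reported as missing, the script is probably not running inside the environment that uv created for the project.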

2. Agent development workflow

3. Ways to call a large language model

Method 1: Calling Ollama locally

Start the service:

    ollama serve

List the models installed locally:

    ollama ls

Download (if needed) and run a model:

    ollama run deepseek-r1:70b
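The same model list that `ollama ls` prints can also be read programmatically from the Ollama server's `/api/tags` endpoint. The sketch below assumes the server is listening on the default address `http://localhost:11434`; the function names and the sample payload are illustrative, and the network call is only defined here, not executed.

```python
import json
from urllib.request import urlopen


def model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in payload.get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434") -> list[str]:
    """Ask a running Ollama server which models are installed locally."""
    with urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


# Offline illustration with a hand-written payload of the same shape.
sample = {"models": [{"name": "deepseek-r1:70b"}, {"name": "deepseek-r1:7b"}]}
print(model_names(sample))
```

With `ollama serve` running, calling `list_local_models()` should return the names that can be passed as `model=` in the examples that follow.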

3.2 Calling the model

Use the langchain-ollama library: https://docs.langchain.com/oss/python/integrations/providers/ollama

    uv add langchain-ollama

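    from langchain_ollama.chat_models import ChatOllama

    if __name__ == "__main__":
        # Use a model that has already been pulled locally (check with `ollama ls`).
        llm = ChatOllama(model="llama4:latest")

        # Single-prompt call
        resp = llm.invoke("Who are you?")
        print(resp)

        # Call with context: a system message followed by the user message
        messages = [
            (
                "system",
                "You are a helpful assistant that translates English to French. Translate the user sentence.",
            ),
            ("human", "I love programming."),
        ]
        ai_msg = llm.invoke(messages)
        # A bare `ai_msg` only displays in a notebook; print the text in a script.
        print(ai_msg.content)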

3.3 Streaming calls to Ollama models

    from langchain_ollama.chat_models import ChatOllama

    if __name__ == "__main__":
        llm = ChatOllama(model="deepseek-r1:7b")
        messages = [
            (
                "system",
                "You are a helpful assistant that translates English to French",
            ),
            ("human", "I love programming."),
        ]

        # stream() yields message chunks as the model generates them
        resp = llm.stream(messages)
        for chunk in resp:
            print(chunk.content, end="", flush=True)
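Streaming prints text as it arrives, but the full reply is often needed afterwards as well (for logging or for appending to the conversation history). A minimal sketch of collecting the chunks while printing them is shown here; `fake_stream` is a hypothetical stand-in for `llm.stream(messages)` so the example runs without a model, and it only assumes that each chunk has a string `.content` attribute.

```python
from types import SimpleNamespace
from typing import Iterable


def print_and_collect(chunks: Iterable) -> str:
    """Print each chunk's text as it arrives and return the full reply."""
    parts = []
    for chunk in chunks:
        print(chunk.content, end="", flush=True)
        parts.append(chunk.content)
    print()  # finish the line once the stream ends
    return "".join(parts)


# Hypothetical stand-in for llm.stream(messages).
fake_stream = (
    SimpleNamespace(content=text)
    for text in ["J'adore ", "la ", "programmation."]
)
full_text = print_and_collect(fake_stream)
```

Joining a list of parts at the end avoids repeated string concatenation inside the loop.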

Method 2: Calling the Alibaba Cloud Bailian API

https://bailian.console.aliyun.com/

    import os

    from openai import OpenAI

    client = OpenAI(
        # If the environment variable is not set, replace the next line with your Bailian API key: api_key="sk-xxx",
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
    )

    completion = client.chat.completions.create(
        # Model list: https://help.aliyun.com/zh/model-studio/getting-started/models
        model="qwen-plus",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Who are you?"},
        ],
        # Qwen3 models use the enable_thinking parameter to control the thinking process
        # (open-source versions default to True, commercial versions to False).
        # When using an open-source Qwen3 model without streaming output,
        # uncomment the next line; otherwise the call fails.
        # extra_body={"enable_thinking": False},
    )

    print(completion.model_dump_json())
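`model_dump_json()` prints the whole response, including metadata. When only the reply text is wanted, it sits under `choices[0].message.content`. The sketch below shows that path on a hand-written JSON string shaped like a chat completion; the sample values are made up for illustration, not real model output.

```python
import json


def reply_text(completion_json: str) -> str:
    """Return the assistant's reply from a chat-completion JSON string."""
    data = json.loads(completion_json)
    return data["choices"][0]["message"]["content"]


# Hand-written sample with the same overall shape as a chat completion.
sample_json = json.dumps(
    {
        "model": "qwen-plus",
        "choices": [
            {
                "index": 0,
                "finish_reason": "stop",
                "message": {"role": "assistant", "content": "I am Qwen."},
            }
        ],
        "usage": {"prompt_tokens": 10, "completion_tokens": 4, "total_tokens": 14},
    }
)
print(reply_text(sample_json))
```

On the live object the same value is available without going through JSON, as `completion.choices[0].message.content`.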

Using a reasoning model

    import os

    from openai import OpenAI

    # Initialize the OpenAI client
    client = OpenAI(
        # If the environment variable is not set, replace the next line with your Bailian API key: api_key="sk-xxx"
        # API keys differ between the Singapore and Beijing regions.
        # Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        # The base_url below is for the Beijing region. For models in the Singapore region, use
        # https://dashscope-intl.aliyuncs.com/compatible-mode/v1 instead.
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
    )

    messages = [{"role": "user", "content": "Who are you?"}]

    completion = client.chat.completions.create(
        model="qwen-plus",  # Replace with another deep-thinking model as needed
        messages=messages,
        extra_body={"enable_thinking": True},
        stream=True,
        stream_options={"include_usage": True},
    )

    reasoning_content = ""  # Full reasoning text
    answer_content = ""  # Full reply
    is_answering = False  # Whether the reply phase has started

    print("\n" + "=" * 20 + "Reasoning" + "=" * 20 + "\n")

    for chunk in completion:
        # The final chunk carries no choices, only the token usage
        if not chunk.choices:
            print("\nUsage:")
            print(chunk.usage)
            continue

        delta = chunk.choices[0].delta

        # Collect the reasoning text only
        if hasattr(delta, "reasoning_content") and delta.reasoning_content is not None:
            if not is_answering:
                print(delta.reasoning_content, end="", flush=True)
            reasoning_content += delta.reasoning_content

        # Once content arrives, the reply has started
        if hasattr(delta, "content") and delta.content:
            if not is_answering:
                print("\n" + "=" * 20 + "Full reply" + "=" * 20 + "\n")
                is_answering = True
            print(delta.content, end="", flush=True)
            answer_content += delta.content
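The loop above interleaves printing with bookkeeping. The core of it, separating reasoning text from answer text, can be sketched as a small function. `fake_deltas` below is a hypothetical stand-in for the `delta` objects of a real stream; it only assumes each delta may carry a `reasoning_content` attribute, a `content` attribute, or both.

```python
from types import SimpleNamespace


def split_stream(deltas):
    """Separate reasoning text from answer text in a sequence of deltas."""
    reasoning, answer = [], []
    for delta in deltas:
        # getattr with a default covers deltas that lack the attribute entirely
        thought = getattr(delta, "reasoning_content", None)
        if thought is not None:
            reasoning.append(thought)
        text = getattr(delta, "content", None)
        if text:
            answer.append(text)
    return "".join(reasoning), "".join(answer)


# Hypothetical deltas: reasoning arrives first, then the reply.
fake_deltas = [
    SimpleNamespace(reasoning_content="The user asks who I am. ", content=None),
    SimpleNamespace(reasoning_content="I should introduce myself.", content=None),
    SimpleNamespace(reasoning_content=None, content="I am "),
    SimpleNamespace(content="Qwen."),  # no reasoning_content attribute at all
]
reasoning_text, answer_text = split_stream(fake_deltas)
print(reasoning_text)
print(answer_text)
```

Keeping the two texts apart makes it easy to show the reasoning to the user while storing only the answer in the conversation history.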

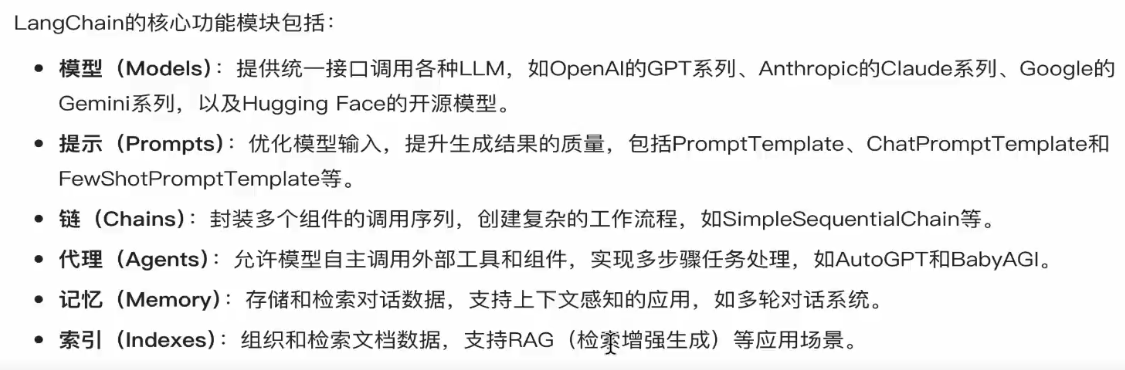

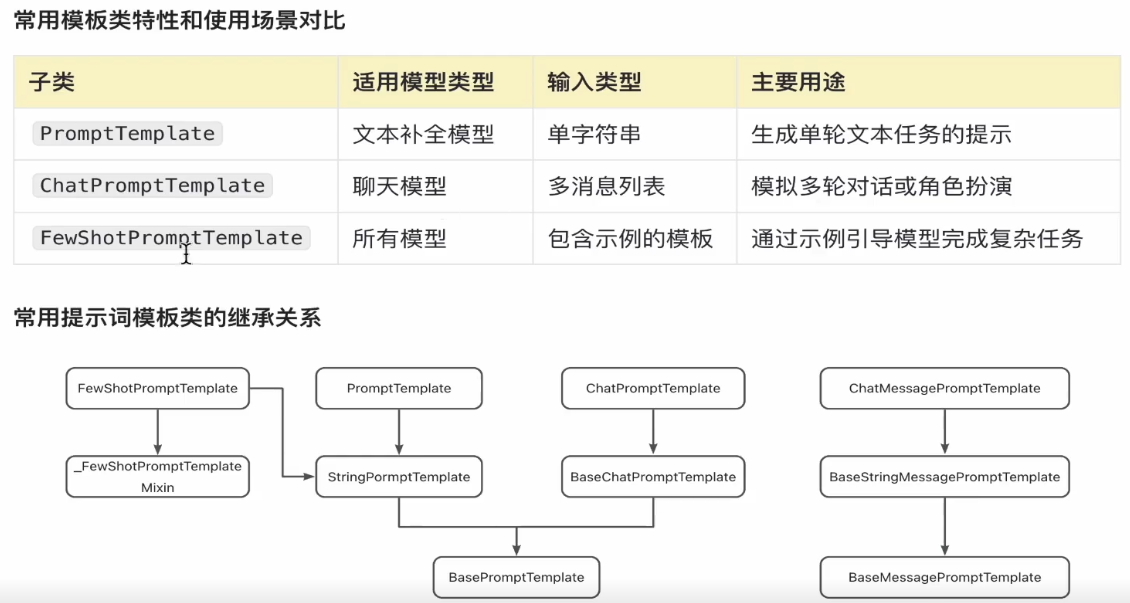

Calling Tongyi Qianwen (Qwen) with LangChain

    """https://www.yuque.com/xiaxiaoshuai/xsc8y4/glv267gwfc5nwxy9"""
    import os

    from langchain_core.prompts import (
        ChatMessagePromptTemplate,
        ChatPromptTemplate,
        FewShotPromptTemplate,
        PromptTemplate,
    )
    from langchain_openai import ChatOpenAI
    from pydantic import SecretStr

    # Instantiate the model. The API key is read from the environment rather than hard-coded.
    llm = ChatOpenAI(
        model="qwen-max-latest",
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
        api_key=SecretStr(os.getenv("DASHSCOPE_API_KEY", "")),
        streaming=True,
    )

    # Abstract the message bodies so they can be reused
    system_message_template = ChatMessagePromptTemplate.from_template(
        template="You are a {role} expert who specializes in answering questions in the {domain} domain",
        role="system",
    )
    human_message_template = ChatMessagePromptTemplate.from_template(
        template="User question: {question}",
        role="user",
    )

    # 1. Create the prompt template
    # prompt_template = PromptTemplate.from_template("The {something} is really nice today")
    # prompt = prompt_template.format(something="weather")
    # print(prompt)
    chat_prompt_template = ChatPromptTemplate.from_messages([
        # ("system", "You are a {role} expert who specializes in answering questions in the {domain} domain"),
        # ("user", "User question: {question}")
        system_message_template,
        human_message_template,
    ])

    # 2. Template + variables => prompt
    # resp = llm.stream(prompt)
    prompt = chat_prompt_template.format_messages(
        role="programming",
        domain="web development",
        question="How do I build a front-end application with Vue?",
    )

    example_template = "Input: {input}\nOutput: {output}"
    examples = [
        {"input": "Translate 'Hello' into Chinese", "output": "你好"},
        {"input": "Translate 'Goodbye' into Chinese", "output": "再见"},
        {"input": "Translate 'Pen' into Chinese", "output": "钢笔"},
    ]

    # FewShotPromptTemplate gives the model few-shot examples through the prompt
    few_shot_prompt_template = FewShotPromptTemplate(
        examples=examples,
        example_prompt=PromptTemplate.from_template(example_template),
        prefix="Translate the following English into Chinese:",
        suffix="Input: {text}\nOutput:",
        input_variables=["text"],
    )
    prompt = few_shot_prompt_template.format(text="Thank you!")
    print(prompt)

    resp = llm.stream(prompt)
    for chunk in resp:
        print(chunk.content, end="")

    # Chain the template and the model
    chain = few_shot_prompt_template | llm
    resp = chain.stream(input={"text": "Thank you!"})
    for chunk in resp:
        print(chunk.content, end="")
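To make the few-shot step less opaque, the sketch below assembles a comparable prompt with plain string formatting: a prefix, the rendered examples, and a suffix, joined by a blank line. It approximates what `FewShotPromptTemplate.format` produces under its default separator and is not LangChain's implementation; the function name `build_few_shot_prompt` and the example strings are illustrative.

```python
def build_few_shot_prompt(prefix, examples, example_template, suffix,
                          separator="\n\n", **variables):
    """Join prefix, rendered examples, and the filled-in suffix into one prompt."""
    rendered = [example_template.format(**example) for example in examples]
    pieces = [prefix, *rendered, suffix.format(**variables)]
    return separator.join(pieces)


prompt_text = build_few_shot_prompt(
    prefix="Translate the following English into Chinese:",
    examples=[
        {"input": "Hello", "output": "你好"},
        {"input": "Goodbye", "output": "再见"},
    ],
    example_template="Input: {input}\nOutput: {output}",
    suffix="Input: {text}\nOutput:",
    text="Thank you!",
)
print(prompt_text)
```

The prompt ends with an open `Output:` line, which is what nudges the model to continue in the same pattern as the examples.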

Binding custom tools

    from pydantic import BaseModel, Field
    from langchain_core.tools import tool

    # chat_prompt_template and llm are the objects defined in the previous section,
    # imported here from the project's own module.
    from app.bailian.common import chat_prompt_template, llm


    class AddInputArgs(BaseModel):
        a: int = Field(description="first number")
        b: int = Field(description="second number")


    @tool(
        description="add two numbers",
        args_schema=AddInputArgs,
    )
    def add(a, b):
        """add two numbers"""
        return a + b


    # add_tools = Tool.from_function(
    #     func=add,
    #     name="add",
    #     description="add two numbers",
    # )
    # llm_with_tools = llm.bind_tools([add_tools])

    llm_with_tools = llm.bind_tools([add])
    chain = chat_prompt_template | llm_with_tools

    # invoke() returns a single message that exposes the parsed tool_calls list;
    # stream() returns an iterator of chunks, which has no tool_calls attribute.
    resp = chain.invoke(
        input={
            "role": "calculation",
            "domain": "arithmetic",
            "question": "Use the tool to calculate: 100+100=?",
        }
    )

    # Map tool names to the tool objects so each call can be dispatched by name
    tool_dict = {
        "add": add,
    }

    for tool_call in resp.tool_calls:
        print(tool_call)
        args = tool_call["args"]
        func_name = tool_call["name"]
        print(func_name)
        tool_func = tool_dict[func_name]
        # tool_content = tool_func(int(args["__arg1"]), int(args["__arg2"]))
        tool_content = tool_func.invoke(args)
        print(tool_content)
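The dispatch loop can be tried without a model. The sketch below runs the same name-to-function lookup over a hand-written list of tool calls; `fake_tool_calls` imitates the `name` and `args` keys used above, the plain `add` function stands in for the decorated tool, and the handling of unknown tool names is an added assumption rather than something the original code does.

```python
def add(a: int, b: int) -> int:
    """Plain-function stand-in for the decorated tool."""
    return a + b


TOOLS = {"add": add}


def run_tool_calls(tool_calls, tools):
    """Look up each requested tool by name and call it with its arguments."""
    results = []
    for call in tool_calls:
        func = tools.get(call["name"])
        if func is None:
            # The model may ask for a tool that was never registered.
            results.append(f"unknown tool: {call['name']}")
            continue
        results.append(func(**call["args"]))
    return results


# Hand-written stand-in for resp.tool_calls.
fake_tool_calls = [
    {"name": "add", "args": {"a": 100, "b": 100}, "id": "call_1", "type": "tool_call"},
]
results = run_tool_calls(fake_tool_calls, TOOLS)
print(results)
```

In a full agent loop, each result would be sent back to the model as a tool message so that it can compose the final answer.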
