https://github.com/sofiathefirst/AIcode/tree/master/03minstDemo

from __future__ import print_function

import numpy as np

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation, Flatten

from keras.optimizers import RMSprop

from keras.utils import np_utils

from keras.layers.convolutional import Conv2D, MaxPooling2D

import matplotlib.pyplot as plt

np.random.seed(1671) # for reproducibility

# network and training

NB_EPOCH = 20

BATCH_SIZE = 128

VERBOSE = 1

NB_CLASSES = 10 # number of outputs = number of digits

OPTIMIZER = RMSprop() # optimizer, explained in this chapter

N_HIDDEN = 128

VALIDATION_SPLIT=0.2 # how much TRAIN is reserved for VALIDATION

DROPOUT = 0.3

# data: shuffled and split between train and test sets

(X_train, y_train), (X_test, y_test) = mnist.load_data()

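# X_train is 60000 images of 28x28 pixels --> reshaped to 60000 x 28 x 28 x 1,
# adding the single grayscale channel that Conv2D expects

RESHAPED = 784 # 28 * 28 pixels per image; not used in this convolutional version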

X_train = X_train.reshape(60000, 28,28,1)

X_test = X_test.reshape(10000, 28,28,1)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

# normalize

X_train /= 255

X_test /= 255

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

# convert class vectors to binary class matrices

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)

# two convolution + pooling blocks, then one fully connected hidden layer

# 10 outputs

# final stage is softmax

model = Sequential()

model.add(Conv2D(32, kernel_size=5, padding='same',
                 input_shape=(28, 28, 1))) # 32 convolution kernels

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2))) # pooling layer

model.add(Dropout(0.25)) # dropout layer

model.add(Conv2D(64, kernel_size=5, padding='same')) # 64 convolution kernels

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2))) # pooling layer

model.add(Dropout(0.25)) # dropout layer

model.add(Flatten())

model.add(Dense(1024))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(NB_CLASSES))

model.add(Activation('softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',
              optimizer=OPTIMIZER,
              metrics=['accuracy'])

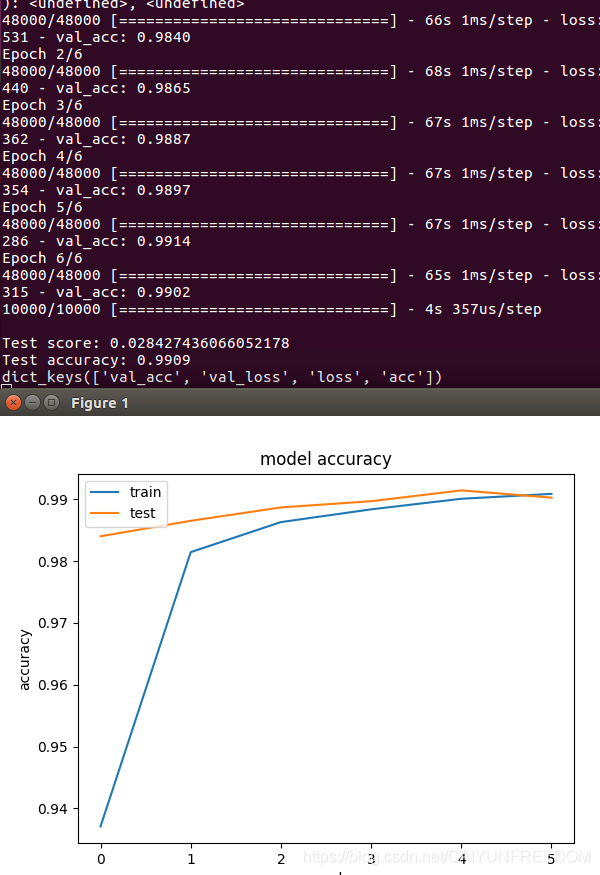

history = model.fit(X_train, Y_train,
                    batch_size=BATCH_SIZE, epochs=6, # trains for 6 epochs; NB_EPOCH above is not used
                    verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

# list all data in history

print(history.history.keys())

# summarize history for accuracy

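# older Keras releases store these under 'acc' / 'val_acc';
# from Keras 2.3 on the keys are 'accuracy' / 'val_accuracy'

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])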

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left') # second curve comes from validation_split, not the test set

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left') # second curve comes from validation_split, not the test set

plt.show()

Model summary

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 28, 28, 32)        832
_________________________________________________________________
activation_1 (Activation)    (None, 28, 28, 32)        0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 14, 14, 32)        0
_________________________________________________________________
dropout_1 (Dropout)          (None, 14, 14, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 14, 14, 64)        51264
_________________________________________________________________
activation_2 (Activation)    (None, 14, 14, 64)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
dropout_2 (Dropout)          (None, 7, 7, 64)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_1 (Dense)              (None, 1024)              3212288
_________________________________________________________________
activation_3 (Activation)    (None, 1024)              0
_________________________________________________________________
dropout_3 (Dropout)          (None, 1024)              0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                10250
_________________________________________________________________
activation_4 (Activation)    (None, 10)                0
=================================================================
Total params: 3,274,634
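The shapes and parameter counts in the summary can be checked by hand. The sketch below is plain Python and does not call Keras; the helper names `conv2d_same`, `max_pool` and `dense` are made up for this illustration. It assumes stride 1 with `padding='same'` for the convolutions and a pooling stride equal to the pool size, which are the Keras defaults used in the script.

```python
def conv2d_same(shape, filters, kernel):
    """Output shape and parameter count of a Conv2D layer (padding='same', stride 1)."""
    height, width, channels = shape
    # each filter has kernel*kernel*channels weights plus one bias
    params = (kernel * kernel * channels + 1) * filters
    return (height, width, filters), params


def max_pool(shape, pool):
    """Output shape of a MaxPooling2D layer whose stride equals the pool size."""
    height, width, channels = shape
    return (height // pool, width // pool, channels)


def dense(inputs, units):
    """Parameter count of a fully connected layer: weight matrix plus biases."""
    return inputs * units + units


shape = (28, 28, 1)
shape, conv1_params = conv2d_same(shape, filters=32, kernel=5)  # (28, 28, 32)
shape = max_pool(shape, 2)                                      # (14, 14, 32)
shape, conv2_params = conv2d_same(shape, filters=64, kernel=5)  # (14, 14, 64)
shape = max_pool(shape, 2)                                      # (7, 7, 64)
flat = shape[0] * shape[1] * shape[2]                           # 3136 inputs to Dense
dense1_params = dense(flat, 1024)
dense2_params = dense(1024, 10)
total = conv1_params + conv2_params + dense1_params + dense2_params

print(conv1_params, conv2_params, dense1_params, dense2_params, total)
# prints: 832 51264 3212288 10250 3274634
```

The activation, pooling, dropout and flatten layers have no trainable weights, which is why they show 0 in the Param # column. Almost all of the parameters sit in the first Dense layer.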

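This post presents a deep learning model built with the Keras library that uses a convolutional neural network (CNN) to recognize handwritten digits from the MNIST dataset. The model stacks convolution, activation, pooling and fully connected layers, and the final output layer uses a softmax activation for classification. After training, the model reaches high accuracy on the test set.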
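To make the softmax output and the `categorical_crossentropy` loss more concrete, here is a minimal pure-Python sketch of both for a single sample. It is not how Keras implements them internally; the function names, the `eps` constant and the example scores are assumptions chosen for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot, probs, eps=1e-12):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(one_hot, probs))


# Hypothetical scores for the 10 digit classes; class 3 has the largest score.
logits = [0.1, 0.2, 0.0, 4.0, 0.3, 0.1, 0.0, 0.2, 0.1, 0.0]
probs = softmax(logits)

target = [0.0] * 10
target[3] = 1.0  # one-hot label for the digit 3, as to_categorical would produce

predicted = probs.index(max(probs))  # index of the most probable class
loss = categorical_crossentropy(target, probs)
print("predicted digit:", predicted)
print("loss:", loss)
```

Because the target is one-hot, the loss reduces to the negative log of the probability assigned to the correct class, so it shrinks toward 0 as that probability approaches 1.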
